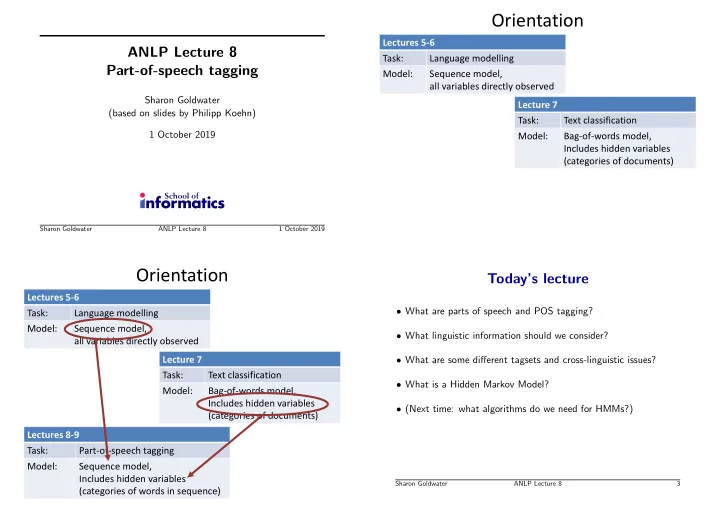

Orientation Lectures 5-6 ANLP Lecture 8 Task: Language modelling Part-of-speech tagging Model: Sequence model, all variables directly observed Sharon Goldwater Lecture 7 (based on slides by Philipp Koehn) Task: Text classification 1 October 2019 Model: Bag-of-words model, Includes hidden variables (categories of documents) Sharon Goldwater ANLP Lecture 8 1 October 2019 Orientation Today’s lecture Lectures 5-6 • What are parts of speech and POS tagging? Task: Language modelling Model: Sequence model, • What linguistic information should we consider? all variables directly observed Lecture 7 • What are some different tagsets and cross-linguistic issues? Task: Text classification • What is a Hidden Markov Model? Model: Bag-of-words model, Includes hidden variables • (Next time: what algorithms do we need for HMMs?) (categories of documents) Lectures 8-9 Task: Part-of-speech tagging Model: Sequence model, Includes hidden variables Sharon Goldwater ANLP Lecture 8 3 (categories of words in sequence)

What is part of speech tagging? Other tagging tasks Other problems can also be framed as tagging (sequence labelling): • Given a string: • Case restoration: If we just get lowercased text, we may want This is a simple sentence to restore proper casing, e.g. the river Thames • Identify parts of speech (syntactic categories): • Named entity recognition: it may also be useful to find names of persons, organizations, etc. in the text, e.g. Barack Obama This/DET is/VERB a/DET simple/ADJ sentence/NOUN • Information field segmentation: Given specific type of text • First step towards syntactic analysis (classified advert, bibiography entry), identify which words belong to which “fields” (price/size/#bedrooms, author/title/year) • Illustrates use of hidden Markov models to label sequences • Prosodic marking: In speech synthesis, which words/syllables have stress/intonation changes, e.g. He’s going. vs He’s going? Sharon Goldwater ANLP Lecture 8 4 Sharon Goldwater ANLP Lecture 8 5 Parts of Speech How many parts of speech? • Open class words (or content words) • Both linguistic and practical considerations – nouns, verbs, adjectives, adverbs • Corpus annotators decide. Distinguish between – mostly content-bearing: they refer to objects, actions, and – proper nouns (names) and common nouns? features in the world – open class, since there is no limit to what these words are, new – singular and plural nouns? ones are added all the time ( email, website ). – past and present tense verbs? – auxiliary and main verbs? • Closed class words (or function words) – etc – pronouns, determiners, prepositions, connectives, ... – there is a limited number of these – mostly functional: to tie the concepts of a sentence together Sharon Goldwater ANLP Lecture 8 6 Sharon Goldwater ANLP Lecture 8 7

English POS tag sets Usually have 40-100 tags. For example, • Brown corpus (87 tags) – One of the earliest large corpora collected for computational linguistics (1960s) – A balanced corpus: different genres (fiction, news, academic, editorial, etc) • Penn Treebank corpus (45 tags) – First large corpus annotated with POS and full syntactic trees (1992) – Possibly the most-used corpus in NLP – Originally, just text from the Wall Street Journal (WSJ) Sharon Goldwater ANLP Lecture 8 8 J&M Fig 5.6: Penn Treebank POS tags POS tags in other languages Universal POS tags (Petrov et al., 2011) • Morphologically rich languages often have compound • A move in the other direction morphosyntactic tags • Simplify the set of tags to lowest common denominator across (J&M, p.196) languages Noun+A3sg+P2sg+Nom • Hundreds or thousands of possible combinations • Map existing annotations onto universal tags { VB, VBD, VBG, VBN, VBP, VBZ, MD } ⇒ VERB • Predicting these requires more complex methods than what we will discuss (e.g., may combine an FST with a probabilistic • Allows interoperability of systems across languages disambiguation system) • Promoted by Google and others Sharon Goldwater ANLP Lecture 8 10 Sharon Goldwater ANLP Lecture 8 11

Universal POS tags (Petrov et al., 2011) Why is POS tagging hard? NOUN (nouns) The usual reasons! VERB (verbs) • Ambiguity: ADJ (adjectives) glass of water/NOUN vs. water/VERB the plants ADV (adverbs) lie/VERB down vs. tell a lie/NOUN PRON (pronouns) wind/VERB down vs. a mighty wind/NOUN (homographs) DET (determiners and articles) ADP (prepositions and postpositions) How about time flies like an arrow ? NUM (numerals) CONJ (conjunctions) • Sparse data: PRT (particles) – Words we haven’t seen before (at all, or in this context) ’.’ (punctuation marks) – Word-Tag pairs we haven’t seen before X (anything else, such as abbreviations or foreign words) Sharon Goldwater ANLP Lecture 8 12 Sharon Goldwater ANLP Lecture 8 13 Relevant knowledge for POS tagging A probabilistic model for tagging Let’s define a new generative process for sentences. • The word itself • To generate sentence of length n : – Some words may only be nouns, e.g. arrow – Some words are ambiguous, e.g. like, flies Let t 0 = <s> – Probabilities may help, if one tag is more likely than another For i = 1 to n Choose a tag conditioned on previous tag: P ( t i | t i − 1 ) • Local context Choose a word conditioned on its tag: P ( w i | t i ) – two determiners rarely follow each other – two base form verbs rarely follow each other • So, model assumes: – determiner is almost always followed by adjective or noun – Each tag depends only on previous tag: a bigram model over tags. – Words are conditionally independent given tags Sharon Goldwater ANLP Lecture 8 14 Sharon Goldwater ANLP Lecture 8 15

Generative process example Probabilistic finite-state machine • Arrows indicate probabilistic dependencies: • One way to view the model: sentences are generated by walking through states in a graph. Each state represents a tag. </s> <s> DT NN VBD DT NNS VBG START VB NN IN a cat saw the rats jumping DET END • Prob of moving from state s to s ′ ( transition probability ): P ( t i = s ′ | t i − 1 = s ) Sharon Goldwater ANLP Lecture 8 17 Probabilistic finite-state machine What can we do with this model? • When passing through a state, emit a word. • Simplest thing: if we know the parameters (tag transition and word emission probabilities), can compute the probability of a like tagged sentence. flies • Let S = w 1 . . . w n be the sentence and T = t 1 . . . t n be the VB corresponding tag sequence. Then n � p ( S, T ) = P ( t i | t i − 1 ) P ( w i | t i ) • Prob of emitting w from state s ( emission probability ): i =1 P ( w i = w | t i = s ) Sharon Goldwater ANLP Lecture 8 18 Sharon Goldwater ANLP Lecture 8 19

Example: computing joint prob. P ( S, T ) Example: computing joint prob. P ( S, T ) What’s the probability of this tagged sentence? What’s the probability of this tagged sentence? This/DT is/VB a/DT simple/JJ sentence/NN This/DT is/VB a/DT simple/JJ sentence/NN • First, add begin- and end-of-sentence <s> and </s> . Then: n � p ( S, T ) = P ( t i | t i − 1 ) P ( w i | t i ) i =1 = P ( DT | <s> ) P ( VB | DT ) P ( DT | VB ) P ( JJ | DT ) P ( NN | JJ ) P ( </s> | NN ) · P ( This | DT ) P ( is | VB ) P ( a | DT ) P ( simple | JJ ) P ( sentence | NN ) • But now we need to plug in probabilities... from where? Sharon Goldwater ANLP Lecture 8 20 Sharon Goldwater ANLP Lecture 8 21 Training the model Training the model Given a corpus annotated with tags (e.g., Penn Treebank), Given a corpus annotated with tags (e.g., Penn Treebank), we estimate P ( w i | t i ) and P ( t i | t i − 1 ) using familiar methods we estimate P ( w i | t i ) and P ( t i | t i − 1 ) using familiar methods (MLE/smoothing) (MLE/smoothing) (Fig from J&M draft 3rd edition) Sharon Goldwater ANLP Lecture 8 22 Sharon Goldwater ANLP Lecture 8 23

Training the model But... tagging? Given a corpus annotated with tags (e.g., Penn Treebank), Normally, we want to use the model to find the best tag sequence we estimate P ( w i | t i ) and P ( t i | t i − 1 ) using familiar methods for an untagged sentence. (MLE/smoothing) • Thus, the name of the model: hidden Markov model – Markov : because of Markov assumption (tag/state only depends on immediately previous tag/state). – hidden : because we only observe the words/emissions; the tags/states are hidden (or latent ) variables. • FSM view: given a sequence of words, what is the most probable state path that generated them? (Fig from J&M draft 3rd edition) Sharon Goldwater ANLP Lecture 8 24 Sharon Goldwater ANLP Lecture 8 25 Hidden Markov Model (HMM) Formalizing the tagging problem HMM is actually a very general model for sequences. Elements of Normally, we want to use the model to find the best tag sequence an HMM: T for an untagged sentence S : • a set of states (here: the tags) argmax T p ( T | S ) • an output alphabet (here: words) • intitial state (here: beginning of sentence) • state transition probabilities (here: p ( t i | t i − 1 ) ) • symbol emission probabilities (here: p ( w i | t i ) ) Sharon Goldwater ANLP Lecture 8 26 Sharon Goldwater ANLP Lecture 8 27

Recommend

More recommend