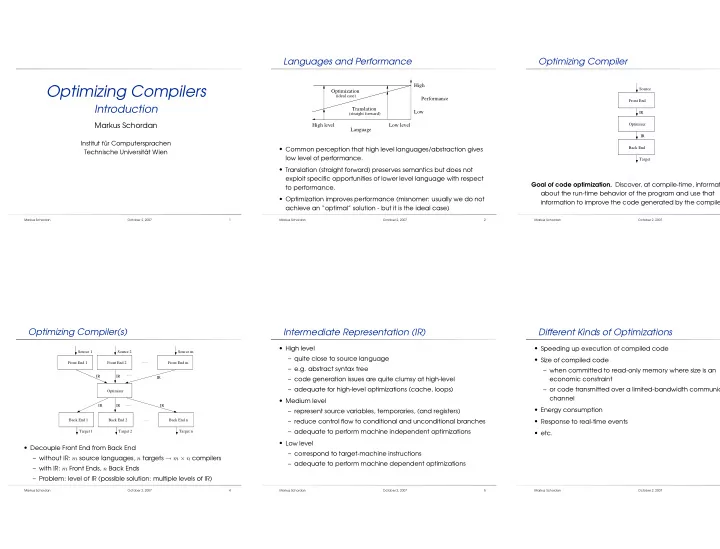

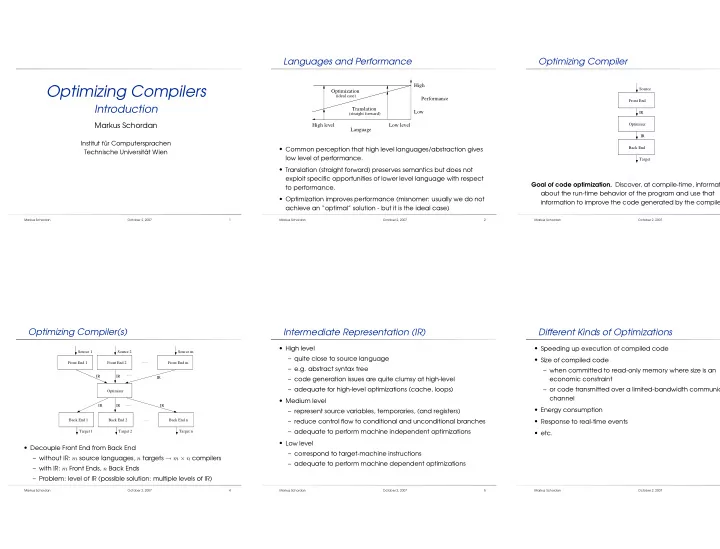

Optimizing Compilers: Introduction
Markus Schordan
Institut für Computersprachen, Technische Universität Wien
October 2, 2007

Languages and Performance

[Figure: a high-level language is translated (straightforward) into a low-level language; optimization (ideal case) aims at performance.]

• Common perception: high-level languages and abstraction give a low level of performance.
• Translation (straightforward) preserves semantics but does not exploit the specific performance opportunities of the lower-level language.
• Optimization improves performance (a misnomer: usually we do not achieve an "optimal" solution; that is only the ideal case).

Optimizing Compiler

[Figure: Source → Front End → IR → Optimizer → IR → Back End → Target]

Goal of code optimization: discover, at compile time, information about the run-time behavior of the program and use that information to improve the code generated by the compiler.

Optimizing Compiler(s)

[Figure: Source 1 … Source m → Front End 1 … Front End m → IR → Optimizer → IR → Back End 1 … Back End n → Target 1 … Target n]

• Decouple Front End from Back End
  – without IR: m source languages, n targets → m × n compilers
  – with IR: m Front Ends, n Back Ends (m + n components)

Intermediate Representation (IR)

• High level
  – quite close to the source language
  – e.g. abstract syntax tree
  – code generation issues are quite clumsy at a high level
  – adequate for high-level optimizations (cache, loops)
• Medium level
  – represents source variables, temporaries (and registers)
  – reduces control flow to conditional and unconditional branches
  – adequate for machine-independent optimizations
• Low level
  – corresponds to target-machine instructions
  – adequate for machine-dependent optimizations
• Problem: choosing the level of IR (possible solution: multiple levels of IR)

Different Kinds of Optimizations

• Speeding up execution of compiled code
• Size of compiled code
  – when committed to read-only memory, where size is an economic constraint
  – or when code is transmitted over a limited-bandwidth communication channel
• Energy consumption
• Response to real-time events
• etc.
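To make the step from a high-level IR (an abstract syntax tree) to a medium-level IR (explicit temporaries) concrete, here is a minimal C++ sketch. It assumes a toy expression AST (the hypothetical `Expr` node type, built with the hypothetical helpers `var` and `bin`) and a string-based three-address code; it covers expressions only, not the branch-based control flow a real medium-level IR would also introduce.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// High-level IR (hypothetical): a tiny expression AST close to the source.
struct Expr {
    char op = 0;                      // '+' or '*' for inner nodes, 0 for a leaf
    std::string name;                 // variable name when op == 0
    std::unique_ptr<Expr> lhs, rhs;   // operands of an inner node
};

std::unique_ptr<Expr> var(std::string n) {
    auto e = std::make_unique<Expr>();
    e->name = std::move(n);
    return e;
}

std::unique_ptr<Expr> bin(char op, std::unique_ptr<Expr> l, std::unique_ptr<Expr> r) {
    auto e = std::make_unique<Expr>();
    e->op = op;
    e->lhs = std::move(l);
    e->rhs = std::move(r);
    return e;
}

// Medium-level IR (hypothetical): three-address code "dst = a op b",
// where every intermediate result gets its own temporary t0, t1, ...
struct Lowering {
    std::vector<std::string> code;
    int next_temp = 0;

    // Emits instructions for e (operands first) and returns the name holding its value.
    std::string lower(const Expr& e) {
        if (e.op == 0) return e.name;           // a leaf is already a named value
        assert(e.op == '+' || e.op == '*');     // only the operators this sketch supports
        std::string a = lower(*e.lhs);
        std::string b = lower(*e.rhs);
        std::string t = "t" + std::to_string(next_temp++);
        code.push_back(t + " = " + a + " " + e.op + " " + b);
        return t;
    }
};
```

Lowering the AST for `a * b + c` yields `t0 = a * b` followed by `t1 = t0 + c`, with `t1` holding the result; the nested tree structure of the source has become a flat sequence of simple instructions.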

Considerations for Optimization

• Safety
  – correctness: generated code must have the same meaning as the input code
  – meaning: the observable behavior of the program
• Profitability
  – improvement of code
  – trade-offs between different kinds of optimizations
• Problems
  – reading past array bounds, pointer arithmetic, etc.

Scope of Optimization (1)

• Local
  – basic blocks
  – statements are executed sequentially
  – if any statement is executed, the entire block is executed
  – limited to improvements that involve operations that all occur in the same block
• Intra-procedural (global)
  – entire procedure
  – the procedure provides a natural boundary for both analysis and transformation
  – procedures are abstractions encapsulating and insulating run-time environments
  – opportunities for improvements that local optimizations do not have

Scope of Optimization (2)

• Inter-procedural (whole program)
  – entire program
  – exposes new opportunities but also new challenges
    * name scoping
    * parameter binding
    * virtual methods
    * recursive methods (number of variables?)
  – scalability to program size

Optimization Taxonomy

Optimizations are categorized by the effect they have on the code.

• Machine independent
  – largely ignore the details of the target machine
  – in many cases the profitability of a transformation depends on detailed machine-dependent issues, but those are ignored
• Machine dependent
  – explicitly consider details of the target machine
  – many of these transformations fall into the realm of code generation
  – some are within the scope of the optimizer (some cache optimizations, some that expose instruction-level parallelism)

Machine Independent Optimizations (1)

• Dead code elimination
  – eliminate useless or unreachable code
  – algebraic identities
• Code motion
  – move an operation to a place where it executes less frequently
  – loop-invariant code motion, hoisting, constant propagation
• Specialize
  – to the specific context in which an operation will execute
  – operator strength reduction, constant propagation, peephole optimization

Machine Independent Optimizations (2)

• Eliminate redundancy
  – replace a redundant computation with a reference to a previously computed value
  – e.g. common subexpression elimination, value numbering
• Enable other transformations
  – rearrange code to expose more opportunities for other transformations
  – e.g. inlining, cloning
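Value numbering, listed above under redundancy elimination, can be sketched at the local (basic-block) scope. The following is a minimal C++ sketch of local value numbering over a hypothetical three-address instruction type `Insn` (the function name `local_value_numbering` is also hypothetical). It assumes straight-line code without aliasing or side effects and treats `+` and `*` as commutative; when a value is recomputed while some variable still holds it, the computation is replaced by a copy from that variable.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <tuple>
#include <utility>
#include <vector>

// Hypothetical three-address instruction: "dst = a op b", or a copy "dst = a" when op == '='.
struct Insn {
    std::string dst, a;
    char op;
    std::string b;
};

// Local value numbering over one basic block.
// A recomputation of a value that is still held by some variable becomes a copy.
std::vector<Insn> local_value_numbering(const std::vector<Insn>& block) {
    std::map<std::string, int> vn_of_var;                  // variable -> current value number
    std::map<std::tuple<int, char, int>, int> vn_of_expr;  // (vn(a), op, vn(b)) -> value number
    std::map<int, std::string> holder;                     // value number -> a variable still holding it
    int next_vn = 0;

    // Value number of a variable; an unseen variable gets a fresh number.
    auto vn = [&](const std::string& v) {
        auto it = vn_of_var.find(v);
        if (it != vn_of_var.end()) return it->second;
        int n = next_vn++;
        vn_of_var[v] = n;
        holder[n] = v;
        return n;
    };

    std::vector<Insn> out;
    for (const Insn& i : block) {
        assert(i.op == '=' || !i.b.empty());   // binary instructions need two operands
        Insn rewritten = i;
        int n;
        if (i.op == '=') {
            n = vn(i.a);                       // a copy shares its source's value number
        } else {
            int va = vn(i.a), vb = vn(i.b);
            if ((i.op == '+' || i.op == '*') && vb < va) std::swap(va, vb);  // assume commutativity
            auto key = std::make_tuple(va, i.op, vb);
            auto found = vn_of_expr.find(key);
            if (found != vn_of_expr.end() && holder.count(found->second)) {
                n = found->second;
                rewritten = Insn{i.dst, holder.at(n), '=', ""};  // redundant: reuse the held value
            } else {
                n = next_vn++;
                vn_of_expr[key] = n;
            }
        }
        // dst is overwritten, so it no longer holds its previous value.
        auto prev = vn_of_var.find(i.dst);
        if (prev != vn_of_var.end()) {
            auto h = holder.find(prev->second);
            if (h != holder.end() && h->second == i.dst) holder.erase(h);
        }
        vn_of_var[i.dst] = n;
        if (!holder.count(n)) holder[n] = i.dst;
        out.push_back(rewritten);
    }
    return out;
}
```

For the block `t1 = a + b; t2 = b + a; t3 = t1 * c; a = d; t4 = a + b`, the sketch rewrites `t2 = b + a` into the copy `t2 = t1`, but keeps `t4 = a + b` because `a` was redefined in between and the old value number no longer applies.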

Machine Dependent Optimizations

• Take advantage of special hardware features
  – Instruction Selection
• Manage or hide latency
  – Arrange final code in a way that hides the latency of some operations
  – Instruction Scheduling
• Manage bounded machine resources
  – Registers, functional units, cache memory, main memory

Example: C++ STL Code Optimization

• Different programming styles for iterating on a container and performing an operation on each element
• Use different levels of abstraction for iteration, container, and operation on elements
• Optimization levels O1-O3 compared with the GNU 4.0 compiler

Concrete example: we iterate on the container 'mycontainer' and perform an operation on each element.

• Container is a vector
• Elements are of type numeric_type (double)
• Operation of adding 1 is applied to each element
• Evaluation Cases EC1-EC6

Acknowledgement: joint work with Rene Heinzl

Programming Styles - 1,2

EC1: Imperative Programming

```cpp
for (unsigned int i = 0; i < mycontainer.size(); ++i) {
  mycontainer[i] += 1.0;
}
```

EC2: Weakly Generic Programming

```cpp
for (vector<numeric_type>::iterator it = mycontainer.begin();
     it != mycontainer.end();
     ++it) {
  *it += 1.0;
}
```

Programming Style - 3

EC3: Generic Programming

```cpp
for_each(mycontainer.begin(), mycontainer.end(),
         plus_n<numeric_type>(1.0));
```

Functor

```cpp
template <class datatype>
struct plus_n
{
  plus_n(datatype member) : member(member) {}
  void operator()(datatype& value) { value += member; }
private:
  datatype member;
};
```

Programming Style - 4

EC4: Functional Programming with STL

```cpp
transform(mycontainer.begin(), mycontainer.end(),
          mycontainer.begin(),
          bind2nd(std::plus<numeric_type>(), 1.0));
```

• plus: binary function object that returns the result of adding its first and second arguments
• bind2nd: templatized utility for binding values to function objects
• Use of an unnamed function object

Programming Styles - 5,6

Functional Programming with Boost::lambda

```cpp
std::for_each(mycontainer.begin(), mycontainer.end(),
              boost::lambda::_1 += 1.0);
```

Functional Programming with Boost::phoenix

```cpp
std::for_each(mycontainer.begin(), mycontainer.end(),
              phoenix::arg1 += 1.0);
```
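The evaluation cases above can be gathered into one self-contained C++ sketch that checks they all compute the same result. Two substitutions are assumptions of this sketch, not the original experimental setup: `std::bind2nd` was deprecated in C++11 and removed in C++17, so EC4 uses `std::bind` with a placeholder instead, and a C++11 lambda stands in for the Boost::lambda and Boost::phoenix expressions so that no Boost headers are needed. The function names such as `ec1_imperative` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>
#include <vector>

using numeric_type = double;                 // elements are double, as on the slides
using container = std::vector<numeric_type>;

// EC1: imperative, index-based loop.
void ec1_imperative(container& c) {
    for (container::size_type i = 0; i < c.size(); ++i) c[i] += 1.0;
}

// EC2: weakly generic, explicit iterator loop.
void ec2_iterator(container& c) {
    for (container::iterator it = c.begin(); it != c.end(); ++it) *it += 1.0;
}

// EC3: generic, std::for_each with the plus_n functor from the slide.
template <class datatype>
struct plus_n {
    explicit plus_n(datatype member) : member(member) {}
    void operator()(datatype& value) const { value += member; }
private:
    datatype member;
};

void ec3_functor(container& c) {
    std::for_each(c.begin(), c.end(), plus_n<numeric_type>(1.0));
}

// EC4: functional style with the STL; std::bind replaces the removed std::bind2nd.
void ec4_transform(container& c) {
    using std::placeholders::_1;
    std::transform(c.begin(), c.end(), c.begin(),
                   std::bind(std::plus<numeric_type>(), _1, 1.0));
}

// Stand-in for the Boost::lambda / Boost::phoenix cases: an unnamed C++11 lambda.
void ec5_lambda(container& c) {
    std::for_each(c.begin(), c.end(), [](numeric_type& x) { x += 1.0; });
}
```

This sketch only checks that the styles are equivalent; comparing their run time at different optimization levels, as the slides describe, would need a separate timing harness.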