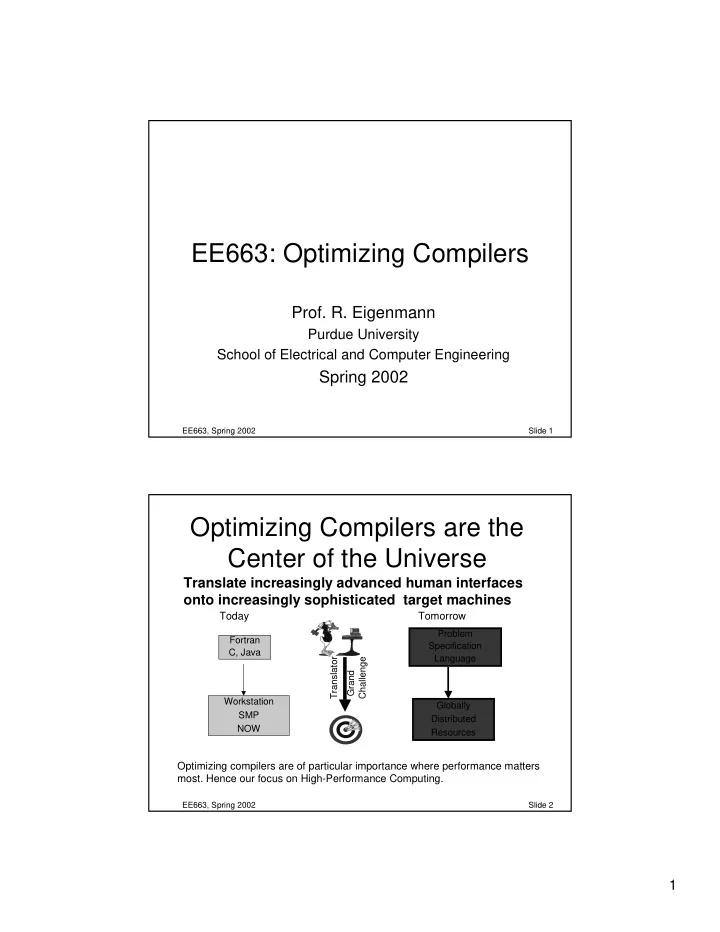

EE663: Optimizing Compilers
Prof. R. Eigenmann
Purdue University, School of Electrical and Computer Engineering
Spring 2002
EE663, Spring 2002 Slide 1

Optimizing Compilers are the Center of the Universe
Optimizing compilers translate increasingly advanced human interfaces onto increasingly sophisticated target machines.
[Figure (Today vs. Tomorrow): problem specification languages (Fortran; C, Java), a language translator labeled "Grand Challenge", and target resources (workstation, SMP, NOW, globally distributed).]
Optimizing compilers are of particular importance where performance matters most. Hence our focus on high-performance computing.

Optimizing Compiler Research Worldwide (a very incomplete list)
• Illinois (David Kuck, David Padua, Constantine Polychronopoulos, Vikram Adve, L.V. Kale): closest to our terminology (we will put more emphasis on evaluation). Actual compilers: Parafrase, Polaris, Promis.
• Rice Univ. (Ken Kennedy, Keith Cooper): distributed-memory machines; previously much work on vectorizing and parallelizing compilers. Actual compilers: AFC, Parascope, Fortran-D system.
• Stanford (John Hennessy, Monica Lam): parallelization technology for shared-memory multiprocessors, with more emphasis on locality-enhancing techniques. Actual compilers: SUIF (centerpiece of the "National Compiler Infrastructure").

More Compiler Research
• Maryland (Bill Pugh, Chau-Wen Tseng), Irvine (Alex Nicolau), Toronto (Tarek Abdelrahman, Michael Voss), Minnesota (Pen-Chung Yew, David Lilja), Cornell (Keshav Pingali), Syracuse (Geoffrey Fox), Northwestern (Prith Banerjee), MIT (Martin Rinard, Vivek Sarkar), Delaware (Guang Gao), Rochester (Chen Ding), Rutgers (Barbara Ryder, Ulrich Kremer), Texas A&M (L. Rauchwerger), Pittsburgh (Rajiv Gupta, Mary Lou Soffa), Ohio State (Joel Saltz, Gagan Agrawal, P. Sadayappan), San Diego (Jeanne Ferrante, Larry Carter), Louisiana State (J. Ramanujam), U. Washington (Larry Snyder), Indiana University (Dennis Gannon), U. Texas@Austin (Calvin Lin), Purdue (Zhiyuan Li, Rudolf Eigenmann)
• International efforts: Barcelona (Valero, Labarta, Ayguade, ...), Malaga (Zapata, Plata); several German and French groups; Japan (Hoichi Muraoka, Hironori Kasahara, Mitsuhisa Sato, Kazuki Joe, ...).
• Industry: IBM (Manish Gupta, Sam Midkiff, Jose Moreira, Vivek Sarkar), Compaq (Nikhil), Intel (Utpal Banerjee, David Sehr, Milind Girkar).

Issues in Optimizing / Parallelizing Compilers
At a very high level:
• Detecting parallelism
• Mapping parallelism onto the machine
• Compiler infrastructures

Detecting Parallelism
• Program analysis techniques
• Data dependence analysis
• Dependence-removing techniques
• Parallelization in the presence of dependences
• Runtime dependence detection

Mapping Parallelism onto the Machine
• Exploiting parallelism at many levels
  – distributed-memory machines (clusters or global networks)
  – multiprocessors (our focus)
  – instruction-level parallelism
  – (vector machines)
• Locality enhancement

Compiler Infrastructures
• Compiler generator languages and tools
• Compiler implementations
• Orchestrating compiler techniques (when to apply which technique)
• Benchmarking and performance evaluation

Parallelizing Compiler Books
• Ken Kennedy, John Allen: Optimizing Compilers for Modern Architectures: A Dependence-based Approach (2001)
• Michael Wolfe: High-Performance Compilers for Parallel Computing (1996)
• Utpal Banerjee: several books on data dependence analysis and transformations
• Zima & Chapman: Supercompilers for Parallel and Vector Computers (1991)
• Constantine Polychronopoulos: Parallel Programming and Compilers (1988)

Course Approach
There are many schools of thought on optimizing compilers. Our approach is performance-driven.
Initial course schedule:
  – Blume study: the simple techniques (paper #15)
  – The Cedar Fortran experiments (paper #27)
  – Analysis and transformation techniques in the Polaris compiler (paper #48)
  – Additional transformations (open-ended list)
For the list of papers, see www.ece.purdue.edu/~eigenman/reports/

Course Format
• Lectures: 70% by the instructor; they include hands-on exercises.
• Class presentations: each student will give a presentation on a paper selected from the list on the course web page.
• Projects: implement a compiler pass within either the GNU C (GCC) 3.0 infrastructure or a new research infrastructure to be designed in this class.
  – Wednesday of Week #1: announcement of project details
  – Wednesday of Week #2: preliminary project outlines due; discuss with the instructor
  – Thursday of Week #3: project proposals finalized
• Exams: 1 midterm, 1 final exam

The Heart of Automatic Parallelization: Data Dependence Testing
If a loop has no data dependences between any two of its iterations, then it can safely be executed in parallel.
In science/engineering applications, loop parallelism is most important. In non-numerical programs, other control structures are also important.
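The claim above can be illustrated with a small experiment. If iterations have no dependences between them, executing them in any order gives the serial result. The following is a minimal Python sketch, not a dependence test. `run`, `order_independent`, and the two loop bodies are hypothetical helpers. Shuffling only models reordering, not truly concurrent interleavings, and a matching result proves nothing (for example, a recurrence can look order-independent for unlucky initial data).

```python
import random

def run(body, order, arrays):
    """Run body(i, state) for each i in `order` on a private copy of `arrays`."""
    state = {name: list(vals) for name, vals in arrays.items()}
    for i in order:
        body(i, state)
    return state

def order_independent(body, n, arrays, trials=20, seed=0):
    """Compare the serial order 1..n against `trials` shuffled orders.
    A mismatch proves the loop is order-dependent; a match proves nothing."""
    rng = random.Random(seed)
    reference = run(body, range(1, n + 1), arrays)
    for _ in range(trials):
        order = list(range(1, n + 1))
        rng.shuffle(order)
        if run(body, order, arrays) != reference:
            return False
    return True

# c(i) = a(i) + b(i): no dependences between iterations
def vector_add(i, s):
    s["c"][i] = s["a"][i] + s["b"][i]

# a(i) = a(i-1) + 1: iteration i reads what iteration i-1 wrote
def recurrence(i, s):
    s["a"][i] = s["a"][i - 1] + 1

# Index 0 is padding so that subscripts match Fortran's 1-based i.
arrays = {"a": [0] * 11, "b": [1] * 11, "c": [0] * 11}
print(order_independent(vector_add, 10, arrays))   # True
print(order_independent(recurrence, 10, arrays))   # False
```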

Data Dependence Tests: Motivating Examples
Statement reordering: can these two statements be swapped?
DO i=1,100,2
  B(2*i) = ...
  ... = B(3*i)
ENDDO
Loop parallelization: can the iterations of this loop be run concurrently?
DO i=1,100,2
  B(2*i) = ...
  ... = B(2*i) + B(3*i)
ENDDO
A data dependence exists between two data references iff:
• both references access the same storage location, and
• at least one of them is a write access.

Data Dependence Tests: Concepts
Terms for data dependences between statements of loop iterations:
• Distance (vector): indicates how many iterations apart the source and sink of the dependence are.
• Direction (vector): basically the sign of the distance. There are different notations: (<,=,>) or (-1,0,+1), meaning a dependence from an earlier to a later iteration, within the same iteration, or from a later to an earlier iteration, respectively.
• Loop-carried (or cross-iteration) vs. non-loop-carried (or loop-independent) dependence: indicates whether a dependence crosses iterations or stays within one iteration.
  – For detecting parallel loops, only cross-iteration dependences matter.
  – Loop-independent ("=") dependences are relevant for optimizations such as statement reordering and loop distribution.
• Iteration space graphs: the un-abstracted form of a dependence graph, with one node per statement instance.
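Both motivating questions can be answered by brute force for these small loops. The sketch enumerates every pair of iterations whose write and read touch the same element of B, then splits the pairs into loop-independent and loop-carried ones. It is a minimal Python sketch. `dependences` and `classify` are hypothetical helpers, and the subscripts are passed as Python functions. For odd i, 2*i is even and 3*i is odd, so B(2*i) and B(3*i) never overlap. That means both answers are "yes." The extra read B(2*i) in the second loop touches only the element written in the same iteration, and no two iterations write the same element of B.

```python
def dependences(lo, hi, step, write_sub, read_sub):
    """Brute force: every pair (i_w, i_r) of iterations of DO i=lo,hi,step in which
    the write B(write_sub(i_w)) and the read B(read_sub(i_r)) hit the same element."""
    iters = range(lo, hi + 1, step)
    writers = {}                                   # element -> iterations writing it
    for i in iters:
        writers.setdefault(write_sub(i), []).append(i)
    return [(iw, ir) for ir in iters for iw in writers.get(read_sub(ir), [])]

def classify(pairs, step):
    """Split pairs into loop-independent ones (same iteration) and loop-carried ones.
    For carried pairs, the distance is (i_r - i_w) / step iterations: positive when
    the write comes first (flow dependence), negative when the read comes first
    (anti dependence)."""
    independent = [(iw, ir) for iw, ir in pairs if iw == ir]
    carried = [(iw, ir, (ir - iw) // step) for iw, ir in pairs if iw != ir]
    return independent, carried

# Left example: B(2*i) written, B(3*i) read, i = 1, 3, ..., 99.
print(dependences(1, 100, 2, lambda i: 2 * i, lambda i: 3 * i))   # []

# Right example: the extra read B(2*i) only touches this iteration's element.
same, carried = classify(dependences(1, 100, 2, lambda i: 2 * i, lambda i: 2 * i), 2)
print(len(same), carried)                                        # 50 []
```

Enumeration costs time proportional to the number of iterations and needs concrete bounds. Compilers instead answer the same question symbolically, as formulated next.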

Data Dependence Tests: Formulation of the Data-Dependence Problem
DO i=1,n
  a(4*i) = ...
  ... = a(2*i+1)
ENDDO
The question to answer: can $4 i_1$ ever be equal to $2 i_2 + 1$ for $i_1, i_2 \in [1,n]$?
In general, given
• two subscript functions f and g, and
• loop bounds lower and upper,
does $f(i_1) = g(i_2)$ have an integer solution such that $\text{lower} \le i_1, i_2 \le \text{upper}$?

Part I: Performance of Automatic Program Parallelization
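For affine subscripts $f(i_1) = a_1 i_1 + a_0$ and $g(i_2) = b_1 i_2 + b_0$, one classic way to answer this question is the GCD test. The GCD test is named here as one example technique; the slide does not prescribe it. The equation $a_1 i_1 - b_1 i_2 = b_0 - a_0$ has an integer solution iff $\gcd(a_1, b_1)$ divides $b_0 - a_0$. The test ignores the loop bounds, so it can only prove independence. The minimal Python sketch below pairs it with a hypothetical exhaustive bounded check, which is feasible only for small n, to show where the bounds matter.

```python
from math import gcd

def gcd_test(a1, a0, b1, b0):
    """GCD test for subscripts f(i1) = a1*i1 + a0 and g(i2) = b1*i2 + b0.
    a1*i1 - b1*i2 = b0 - a0 has an integer solution iff gcd(a1, b1) divides b0 - a0.
    Returns False when no solution exists (proven independent), True otherwise
    (a dependence must be assumed; loop bounds are ignored)."""
    g = gcd(a1, b1)
    if g == 0:                       # both subscripts are constants
        return a0 == b0
    return (b0 - a0) % g == 0

def exact_test(a1, a0, b1, b0, lower, upper):
    """Exhaustive check of f(i1) = g(i2) with lower <= i1, i2 <= upper (small ranges only)."""
    r = range(lower, upper + 1)
    return any(a1 * i1 + a0 == b1 * i2 + b0 for i1 in r for i2 in r)

# a(4*i) vs. a(2*i+1): gcd(4, 2) = 2 does not divide 1, so no dependence for any n.
print(gcd_test(4, 0, 2, 1))               # False
# a(i) vs. a(i+100) with n = 10: the GCD test cannot rule it out, the bounds can.
print(gcd_test(1, 0, 1, 100))             # True
print(exact_test(1, 0, 1, 100, 1, 10))    # False
```

Production dependence testers combine divisibility checks like this with bounds-based tests (for example, the Banerjee test) rather than enumerating iterations.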

10 Years of Parallelizing Compilers
A performance study at the beginning of the 1990s (the Blume study) analyzed the performance of state-of-the-art parallelizers and vectorizers using the Perfect Benchmarks.

Overall Performance

Performance of Individual Techniques

Transformations measured in the "Blume Study"
• Scalar expansion
• Reduction parallelization
• Induction variable substitution
• Loop interchange
• Forward substitution
• Stripmining
• Loop synchronization
• Recurrence substitution

Scalar Expansion
Original loop:
DO j=1,n
  t = a(j)+b(j)
  c(j) = t + t**2
ENDDO
Privatization:
DO PARALLEL j=1,n
  PRIVATE t
  t = a(j)+b(j)
  c(j) = t + t**2
ENDDO
Expansion:
DO PARALLEL j=1,n
  t0(j) = a(j)+b(j)
  c(j) = t0(j) + t0(j)**2
ENDDO
We assume a shared-memory model:
• by default, data is shared, i.e., all processors can see and modify it;
• processors share the work of parallel loops.

Parallel Loop Syntax and Semantics
"Old" form:
DO PARALLEL i = ilow, iup
  PRIVATE <private data>
  <preamble code>
  LOOP
  <loop body code>
  POSTAMBLE
  <postamble code>
END DO PARALLEL
OpenMP:
!$OMP PARALLEL PRIVATE(<private data>)
<preamble code>
!$OMP DO
DO i = ilow, iup
  <loop body code>
ENDDO
!$OMP END DO
<postamble code>
!$OMP END PARALLEL
• Preamble and postamble code: executed exactly once by each participating processor (thread).
• Loop body code: the work (iterations) is shared by the participating processors (threads).
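The scalar t is written and read by every iteration, which creates cross-iteration dependences on t. Privatization removes them by giving each processor its own copy of t. Expansion removes them by giving each iteration its own element t0(j), at the cost of n words of storage. The minimal Python sketch below models both parallel forms with `concurrent.futures.ThreadPoolExecutor` threads standing in for processors. Three modeling choices are assumptions. The cyclic iteration schedule is one arbitrary choice. Integer data keeps the comparison exact. Because of Python's global interpreter lock, the sketch models the semantics, not the speedup. The `t = 0` line in `worker` plays the role of the preamble that runs once per thread.

```python
from concurrent.futures import ThreadPoolExecutor

def serial(a, b):
    """DO j=1,n: t = a(j)+b(j); c(j) = t + t**2 (t reused by every iteration)."""
    c = [0] * len(a)
    for j in range(len(a)):
        t = a[j] + b[j]
        c[j] = t + t ** 2
    return c

def privatized(a, b, nthreads=4):
    """DO PARALLEL with PRIVATE t: every worker thread owns its own t."""
    n = len(a)
    c = [0] * n                                   # shared result array
    def worker(tid):
        t = 0                                     # private copy, created once per worker
        for j in range(tid, n, nthreads):         # iterations dealt out cyclically
            t = a[j] + b[j]
            c[j] = t + t ** 2
    with ThreadPoolExecutor(max_workers=nthreads) as pool:
        list(pool.map(worker, range(nthreads)))   # list() re-raises worker exceptions
    return c

def expanded(a, b, nthreads=4):
    """DO PARALLEL after scalar expansion: t becomes the shared array t0(j)."""
    n = len(a)
    t0 = [0] * n                                  # one element per iteration
    c = [0] * n
    def iteration(j):
        t0[j] = a[j] + b[j]
        c[j] = t0[j] + t0[j] ** 2
    with ThreadPoolExecutor(max_workers=nthreads) as pool:
        list(pool.map(iteration, range(n)))
    return c

a, b = list(range(10)), [1] * 10
print(serial(a, b) == privatized(a, b) == expanded(a, b))   # True
```

Privatization needs only one copy of t per processor, while expansion needs one element per iteration. The expanded array, however, remains available after the loop if later code needs the values.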

Reduction Parallelization
Original loop:
DO j=1,n
  sum = sum + a(j)
ENDDO
Parallel form ("old" syntax):
DO PARALLEL j=1,n
  PRIVATE s=0
  s = s + a(j)
  POSTAMBLE
  ATOMIC: sum=sum+s
ENDDO
OpenMP:
!$OMP PARALLEL DO
!$OMP+REDUCTION(+:sum)
DO j=1,n
  sum = sum + a(j)
ENDDO
Using the SUM intrinsic:
sum = sum + SUM(a(1:n))

Induction Variable Substitution
Original loop:
ind = ind0
DO j = 1,n
  a(ind) = b(j)
  ind = ind+k
ENDDO
After substitution:
ind = ind0
DO PARALLEL j = 1,n
  a(ind0+k*(j-1)) = b(j)
ENDDO
Note: this is the reverse of strength reduction, an important transformation in classical (code-generating) compilers:
Source:
real d(20,100)
DO j=1,n
  d(1,j)=0
ENDDO
Without strength reduction:
loop:
  ...
  R0 ← &d+20*(j-1)
  (R0) ← 0
  ...
  jump loop
With strength reduction:
  R0 ← &d
loop:
  ...
  (R0) ← 0
  ...
  R0 ← R0+20
  jump loop
(Addresses are counted in array elements; d is stored column-major, so consecutive columns are 20 elements apart.)
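The reduction transformation on the Reduction Parallelization slide works because each processor accumulates into a private partial sum s. Each processor then updates the shared sum only once, inside the ATOMIC postamble, so synchronization happens once per processor rather than once per iteration. The minimal Python sketch below models the "old" DO PARALLEL form. Two substitutions are assumptions: a `threading.Lock` stands in for ATOMIC, and worker threads stand in for processors. Integers are used on purpose. A parallel reduction reorders the additions, and with floating-point data that can change the rounding of the result.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

def serial_sum(a, total=0):
    """DO j=1,n: sum = sum + a(j)."""
    for x in a:
        total = total + x
    return total

def parallel_sum(a, total=0, nthreads=4):
    """DO PARALLEL form: PRIVATE s=0, then POSTAMBLE ATOMIC: sum = sum + s."""
    shared = {"sum": total}                 # the shared variable sum
    atomic = threading.Lock()               # stands in for ATOMIC
    n = len(a)
    def worker(tid):
        s = 0                               # private partial sum (preamble)
        for j in range(tid, n, nthreads):   # this worker's share of the iterations
            s = s + a[j]
        with atomic:                        # postamble: one guarded update per worker
            shared["sum"] = shared["sum"] + s
    with ThreadPoolExecutor(max_workers=nthreads) as pool:
        list(pool.map(worker, range(nthreads)))
    return shared["sum"]

data = list(range(1, 101))
print(serial_sum(data), parallel_sum(data))   # 5050 5050
```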

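The induction variable substitution and strength reduction slides describe inverse rewrites. Induction variable substitution replaces the running update `ind = ind+k` with a closed form in j, which removes the loop-carried dependence on ind. Strength reduction replaces the per-iteration multiply in `&d+20*(j-1)` with a running add. The minimal Python sketch below checks both rewrites. Python list indices play the role of array subscripts, and addresses are plain element offsets from a hypothetical base. If ind is used after the loop, the substituted version must also assign its final value, ind0+k*n.

```python
def original(b, ind0, k, size):
    """ind = ind0; DO j=1,n: a(ind) = b(j); ind = ind+k."""
    a = [None] * size
    ind = ind0
    for j in range(1, len(b) + 1):
        a[ind] = b[j - 1]
        ind = ind + k                        # carried dependence on ind
    return a, ind

def substituted(b, ind0, k, size):
    """After induction variable substitution: each subscript is a closed form in j."""
    a = [None] * size
    n = len(b)
    for j in range(1, n + 1):                # iterations are now independent
        a[ind0 + k * (j - 1)] = b[j - 1]
    return a, ind0 + k * n                   # final ind, needed only if live after the loop

def addresses_multiply(base, n, col=20):
    """Address of d(1,j), j=1..n, recomputed as base + 20*(j-1) each iteration."""
    return [base + col * (j - 1) for j in range(1, n + 1)]

def addresses_strength_reduced(base, n, col=20):
    """Same addresses with the multiply replaced by an add: R0 <- R0 + 20."""
    out, r0 = [], base                       # R0 <- &d
    for _ in range(n):
        out.append(r0)                       # (R0) <- 0 would store here
        r0 = r0 + col
    return out

print(original([10, 20, 30], 2, 3, 12) == substituted([10, 20, 30], 2, 3, 12))  # True
print(addresses_strength_reduced(0, 4))                                        # [0, 20, 40, 60]
```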