Online Knowledge-Based Support Vector Machines
Gautam Kunapuli (1), Kristin P. Bennett (2), Amina Shabbeer (2), Richard Maclin (3) and Jude W. Shavlik (1)
(1) University of Wisconsin-Madison, USA
(2) Rensselaer Polytechnic Institute, USA
(3) University of Minnesota, Duluth, USA
ECML 2010, Barcelona, Spain

Outline
• Knowledge-Based Support Vector Machines
• The Adviceptron: Online KBSVMs
• A Real-World Task: Diabetes Diagnosis
• A Real-World Task: Tuberculosis Isolate Classification
• Conclusions

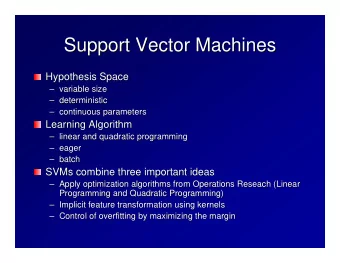

Knowledge-Based SVMs
• Introduced by Fung et al. (2003)
• Allow incorporation of expert advice into SVM formulations
• Advice is specified with respect to polyhedral regions in input (feature) space, using rules of the following forms, where $a_k$ and $c_k$ are constants and $j_k$ are feature indices:
$$(\text{feature}_{j_1} \ge c_1) \wedge (\text{feature}_{j_2} \le c_2) \Rightarrow (\text{class} = +1)$$
$$(\text{feature}_{j_1} \le -c_1) \wedge (\text{feature}_{j_2} \le c_2) \wedge (\text{feature}_{j_3} \ge c_3) \Rightarrow (\text{class} = -1)$$
$$(a_1\,\text{feature}_{j_1} + a_2\,\text{feature}_{j_2} \ge c_1) \wedge (\text{feature}_{j_3} \le -c_2) \Rightarrow (\text{class} = +1)$$
• Can be incorporated into the SVM formulation as constraints using advice variables

Knowledge-Based SVMs
In classic SVMs, we have T labeled data points $(x_t, y_t)$, $t = 1, \dots, T$. We learn a linear classifier $w'x - b = 0$. The standard SVM formulation trades off regularization and loss:
$$\min_{w,\,b,\,\xi}\ \tfrac{1}{2}\|w\|^2 + \lambda\, e'\xi \quad \text{subject to} \quad Y(Xw - be) + \xi \ge e,\quad \xi \ge 0.$$
Here $X$ holds the data points as rows, $Y$ is the diagonal matrix of labels, and $e$ is a vector of ones.
[Figure: Class A (y = +1) and Class B (y = -1)]
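As a rough illustration of the trade-off in the standard formulation, the sketch below evaluates $\tfrac{1}{2}\|w\|^2 + \lambda \sum_t \xi_t$ for a fixed $(w, b)$, taking each slack at its smallest feasible value $\xi_t = \max(0,\ 1 - y_t(w'x_t - b))$. The function name `svm_objective` and the toy data are illustrative assumptions, not part of the talk.

```python
def svm_objective(w, b, points, labels, lam):
    """Evaluate 0.5*||w||^2 + lam * sum(xi) using the smallest feasible slacks."""
    reg = 0.5 * sum(wj * wj for wj in w)
    slack_total = 0.0
    for x, y in zip(points, labels):
        # Signed margin y * (w'x - b); a slack is needed only when it is below 1.
        margin = y * (sum(wj * xj for wj, xj in zip(w, x)) - b)
        slack_total += max(0.0, 1.0 - margin)
    return reg + lam * slack_total


# Toy data (hypothetical): two points satisfy the margin, one sits on the hyperplane.
points = [[2.0, 0.0], [0.0, 0.0], [0.5, 0.0]]
labels = [1, -1, 1]
print(svm_objective([2.0, 0.0], 1.0, points, labels, lam=1.0))
```

Increasing `lam` makes margin violations more expensive relative to the regularization term.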

Knowledge-Based SVMs
We assume an expert provides polyhedral advice of the form
$$Dx \le d \ \Rightarrow\ w'x \ge b.$$
We can transform the logic constraint above using advice variables, $u$:
$$D'u + w = 0, \qquad -d'u - b \ge 0, \qquad u \ge 0.$$
These constraints are added to the standard formulation to give Knowledge-Based SVMs.
[Figure: Class A (y = +1), Class B (y = -1), and the advice region $Dx \le d$]
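One direction of the advice-variable transformation can be checked directly. Suppose $u$ satisfies $D'u + w = 0$, $-d'u - b \ge 0$, $u \ge 0$, and $x$ lies in the advice region $Dx \le d$. Then:

```latex
\begin{aligned}
w'x &= -(D'u)'x = -u'(Dx) && \text{since } D'u + w = 0 \\
    &\ge -u'd = -d'u       && \text{since } u \ge 0 \text{ and } Dx \le d \\
    &\ge b                 && \text{since } -d'u - b \ge 0 .
\end{aligned}
```

So any feasible $u$ certifies that the classifier respects the advice. The converse direction, that such a $u$ exists whenever the implication holds, is the part that rests on the result of Fung et al. (2003) and assumes the advice region is nonempty.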

Knowledge-Based SVMs
In general, there are m advice sets, each with label $z_i = \pm 1$ for advice belonging to Class A or B:
$$D_i x \le d_i \ \Rightarrow\ z_i (w'x - b) \ge 0.$$
Each advice set adds the following constraints to the SVM formulation:
$$D_i'u_i + z_i w = 0, \qquad -d_i'u_i - z_i b \ge 0, \qquad u_i \ge 0.$$
[Figure: Class A (y = +1), Class B (y = -1), and several advice regions $D_i x \le d_i$]
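The per-advice-set constraints can be checked numerically for a candidate advice vector. The sketch below is a minimal feasibility check, assuming dense matrices stored as lists of rows; `certifies_advice`, `matvec_t`, and the toy advice set are hypothetical names and data chosen for illustration.

```python
def matvec_t(D, u):
    """Return D'u for a matrix D given as a list of rows."""
    return [sum(D[r][c] * u[r] for r in range(len(D))) for c in range(len(D[0]))]


def certifies_advice(D, d, z, w, b, u, tol=1e-9):
    """Check D'u + z*w = 0, -d'u - z*b >= 0 and u >= 0, up to a tolerance."""
    Dtu = matvec_t(D, u)
    equality_ok = all(abs(Dtu[j] + z * w[j]) <= tol for j in range(len(w)))
    bound_ok = -sum(dk * uk for dk, uk in zip(d, u)) - z * b >= -tol
    nonneg_ok = all(uk >= -tol for uk in u)
    return equality_ok and bound_ok and nonneg_ok


# Toy advice (hypothetical): "x1 >= 1 implies Class A", written as -x1 <= -1.
D, d, z = [[-1.0, 0.0]], [-1.0], 1
w, b = [2.0, 0.0], 1.0
print(certifies_advice(D, d, z, w, b, u=[2.0]))  # all three conditions hold
print(certifies_advice(D, d, z, w, b, u=[1.0]))  # the equality D'u + z*w = 0 fails
```

This only verifies a given $u$; finding one is what the optimization problem does when the constraints are added to the SVM formulation.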

Knowledge-Based SVMs
The batch KBSVM formulation introduces advice slack variables to soften the advice constraints:
$$\min_{w,\,b,\,\xi,\,u_i,\,\eta_i,\,\zeta_i}\ \tfrac{1}{2}\|w\|^2 + \lambda\, e'\xi + \mu \sum_{i=1}^{m} \left( e'\eta_i + \zeta_i \right)$$
subject to
$$Y(Xw - be) + \xi \ge e, \qquad \xi \ge 0,$$
$$D_i'u_i + z_i w + \eta_i = 0, \qquad -d_i'u_i - z_i b + \zeta_i \ge 0, \qquad u_i,\ \eta_i,\ \zeta_i \ge 0, \qquad i = 1, \dots, m.$$
[Figure: Class A (y = +1), Class B (y = -1), and several advice regions $D_i x \le d_i$]

Outline
• Knowledge-Based Support Vector Machines
• The Adviceptron: Online KBSVMs
• A Real-World Task: Diabetes Diagnosis
• A Real-World Task: Tuberculosis Isolate Classification
• Conclusions

Online KBSVMs
• Need to derive an online version of KBSVMs
• Algorithm is provided with advice and one labeled data point at each round
• Algorithm should update the hypothesis at each step, $w_t$, as well as the advice vectors, $u_{i,t}$

Passive-Aggressive Algorithms
• Adopt the framework of passive-aggressive algorithms (Crammer et al., 2006), where at each round, when a new data point is given,
  – if loss = 0, there is no update (passive)
  – if loss > 0, update weights to minimize loss (aggressive)
• Why passive-aggressive algorithms?
  – readily applicable to most SVM losses
  – possible to derive elegant, closed-form update rules
  – simple rules provide fast updates; scalable
  – analyze performance by deriving regret bounds
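A minimal sketch of one passive-aggressive round for the hinge loss, using the basic closed-form step $\tau = \text{loss} / \|x\|^2$ from Crammer et al. (2006). This is the plain PA rule for a bias-free linear classifier, not the advice-aware update developed in this talk; `pa_update` is an illustrative name.

```python
def pa_update(w, x, y):
    """One PA round: return the updated weights and the hinge loss suffered."""
    margin = y * sum(wj * xj for wj, xj in zip(w, x))
    loss = max(0.0, 1.0 - margin)
    if loss == 0.0:
        return list(w), loss  # passive: the hypothesis is left unchanged
    sq_norm = sum(xj * xj for xj in x)
    if sq_norm == 0.0:
        return list(w), loss  # zero input vector: no step can reduce the loss
    tau = loss / sq_norm  # aggressive: smallest step that brings the loss to zero
    return [wj + tau * y * xj for wj, xj in zip(w, x)], loss


w = [0.0, 0.0]
w, loss = pa_update(w, [1.0, 1.0], 1)  # loss > 0, so the weights move
print(w, loss)
w, loss = pa_update(w, [1.0, 1.0], 1)  # same point again: loss is now 0
print(w, loss)
```

The second call shows the passive case: once the point is classified with margin 1, repeating it leaves the weights untouched.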

Online KBSVMs
• There are m advice sets, $(D_i, d_i, z_i)_{i=1}^{m}$
• At round t, the algorithm receives $(x_t, y_t)$
• The current hypothesis is $w_t$, and the current advice-vector estimates are $u_{i,t}$, $i = 1, \dots, m$

At round t, the formulation for deriving an update is
$$\min_{\xi,\,u_i,\,\eta_i,\,\zeta_i \ge 0,\ w}\ \tfrac{1}{2}\|w - w_t\|^2 + \tfrac{1}{2}\sum_{i=1}^{m}\|u_i - u_{i,t}\|^2 + \tfrac{\lambda}{2}\,\xi^2 + \tfrac{\mu}{2}\sum_{i=1}^{m}\left(\|\eta_i\|^2 + \zeta_i^2\right)$$
subject to
$$y_t\, w'x_t - 1 + \xi \ge 0,$$
$$D_i'u_i + z_i w + \eta_i = 0, \qquad -d_i'u_i - 1 + \zeta_i \ge 0, \qquad u_i \ge 0, \qquad i = 1, \dots, m.$$

Formulation At The t-th Round
• The first two terms of the objective are proximal terms for the hypothesis and the advice vectors
• The term in $\xi$ is the data loss; the sum over $\eta_i$ and $\zeta_i$ is the advice loss
• $\lambda$ and $\mu$ are parameters
• The inequality constraints make deriving a closed-form update impossible
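To make the roles of the objective terms concrete, the sketch below evaluates a round-t objective with proximal, data-loss, and advice-loss parts for given values of all variables. The squared form of the slack penalties is an assumption of this sketch, and `round_objective`, `sq_dist`, and the argument names are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def round_objective(w, w_t, us, us_t, xi, etas, zetas, lam, mu):
    """Return (proximal, data_loss, advice_loss) for one round."""
    # Proximal terms: stay close to the previous hypothesis and advice vectors.
    proximal = 0.5 * sq_dist(w, w_t)
    proximal += 0.5 * sum(sq_dist(u, u_t) for u, u_t in zip(us, us_t))
    # Data loss: penalty on the slack of the current labeled point.
    data_loss = 0.5 * lam * xi ** 2
    # Advice loss: penalty on the slacks of every advice set.
    advice_loss = 0.5 * mu * sum(
        sum(e * e for e in eta) + zeta ** 2 for eta, zeta in zip(etas, zetas)
    )
    return proximal, data_loss, advice_loss


# Toy values (hypothetical): one advice set with a single advice variable.
parts = round_objective(
    w=[1.0, 0.0], w_t=[0.0, 0.0],
    us=[[2.0]], us_t=[[1.0]],
    xi=1.0, etas=[[0.0, 2.0]], zetas=[1.0],
    lam=2.0, mu=0.5,
)
print(parts, sum(parts))
```

Keeping $w$ and $u_i$ at their previous values makes the proximal part zero, so any movement has to pay for itself through lower data or advice loss.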
