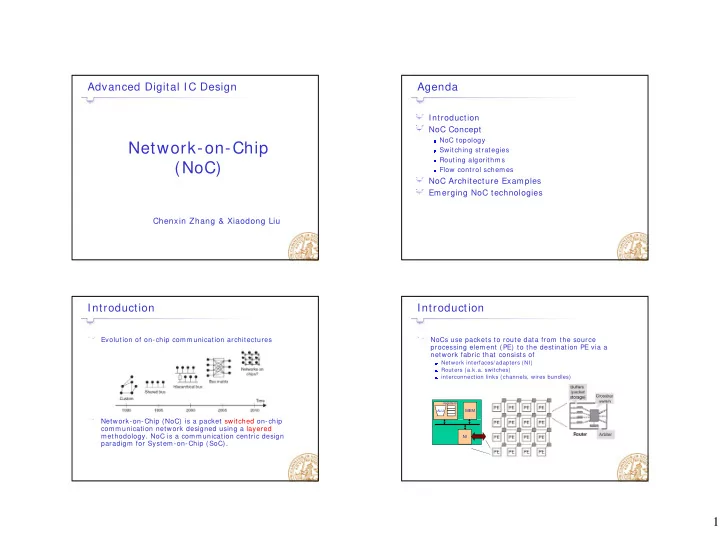

Advanced Digital IC Design: Network-on-Chip (NoC)

Chenxin Zhang & Xiaodong Liu

Agenda

- Introduction
- NoC Concept
- NoC topology
- Switching strategies
- Routing algorithms
- Flow control schemes
- NoC Architecture Examples
- Emerging NoC technologies

Introduction

[Figure: Evolution of on-chip communication architectures]

Network-on-Chip (NoC) is a packet-switched on-chip communication network designed using a layered methodology. NoC is a communication-centric design paradigm for System-on-Chip (SoC).

NoCs use packets to route data from the source processing element (PE) to the destination PE via a network fabric that consists of:

- Network interfaces/adapters (NI)
- Routers (a.k.a. switches)
- Interconnection links (channels, wire bundles)

[Figure: PEs (e.g., registers, MEM, ALU) attached to the network through NIs]

Building Blocks: NI

- Session-layer (P2P) interface with nodes
- Back end manages the interface with switches

[Figure: NI split into a front end (standard P2P node protocol, toward the node) and a back end (proprietary link protocol, toward the network), with decoupling logic & synchronization between them]

Front end (standardized node interface at the session layer):

1. Supported transactions (e.g., QoS read…)
2. Degree of parallelism
3. Session protocol control flow & negotiation

Back end (NoC-specific, layers 1-4):

1. Physical channel interface
2. Link-level protocol
3. Network layer (packetization)
4. Transport layer (routing)

Building Blocks: Router (Switch)

- Router or switch: receives and forwards packets
- Buffers have a dual function: synchronization & queuing

[Figure: router with input buffers & flow control, allocator/arbiter, crossbar, output buffers & flow control, and QoS & routing logic; data ports carry control-flow wires]

Building Blocks: Links

- Connect two routers in both directions on a number of wires (e.g., 32 bits)
- In addition, wires for control are part of the link too
- Can be pipelined (including handshaking for asynchronous links)

NoC Concept

- Topology: how the nodes are connected together
- Switching: allocation of network resources (bandwidth, buffer capacity, …) to information flows
- Routing: path selection between a source and a destination node in a particular topology
- Flow control: how the downstream node communicates forwarding availability to the upstream node
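To make the NI's packetization role (the network layer of the back end) concrete, here is a minimal Python sketch that splits a message into flits and reassembles it at the destination NI. The flit format (a head flit carrying the destination and flit count, followed by data flits with the last one marked as tail), the 32-bit flit width, and the names `Flit`, `packetize` and `depacketize` are assumptions for illustration, not a format defined in this lecture.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

FLIT_BYTES = 4  # assumed link width: 32 bits = 4 bytes of payload per flit


@dataclass
class Flit:
    kind: str                                # "head", "body" or "tail" (hypothetical format)
    payload: bytes = b""                     # data carried by body/tail flits
    dest: Optional[Tuple[int, int]] = None   # (x, y) destination, head flit only
    length: int = 0                          # number of data flits that follow (head only)


def packetize(dest: Tuple[int, int], data: bytes) -> List[Flit]:
    """Source NI: one head flit, then data flits; the last data flit is the tail."""
    chunks = [data[i:i + FLIT_BYTES] for i in range(0, len(data), FLIT_BYTES)] or [b""]
    flits = [Flit("head", dest=dest, length=len(chunks))]
    for i, chunk in enumerate(chunks):
        kind = "tail" if i == len(chunks) - 1 else "body"
        flits.append(Flit(kind, payload=chunk))
    return flits


def depacketize(flits: List[Flit]) -> Tuple[Tuple[int, int], bytes]:
    """Destination NI: check head/tail framing and reassemble the payload."""
    head, data_flits = flits[0], flits[1:]
    assert head.kind == "head" and data_flits[-1].kind == "tail"
    assert len(data_flits) == head.length
    return head.dest, b"".join(f.payload for f in data_flits)


if __name__ == "__main__":
    pkt = packetize((2, 1), b"hello, NoC!")
    print([f.kind for f in pkt])  # ['head', 'body', 'body', 'tail']
    print(depacketize(pkt))       # ((2, 1), b'hello, NoC!')
```

Only the head flit carries routing information; routers forward the remaining flits along the path the head has set up, which is what makes flit-level (wormhole) switching possible.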

NoC Topology

- Direct
- Indirect
- Irregular

Direct Topologies

- Each node has a direct point-to-point link to a subset of other nodes in the system, called neighboring nodes
- As the number of nodes in the system increases, the total available communication bandwidth also increases
- The fundamental trade-off is between connectivity and cost
- Most direct network topologies have an orthogonal implementation, where nodes can be arranged in an n-dimensional orthogonal space, e.g., n-dimensional mesh, torus, folded torus, hypercube, and octagon

2D-mesh

- It is the most popular topology
- All links have the same length, which eases physical design
- Area grows linearly with the number of nodes
- Must be designed in such a way as to avoid traffic accumulating in the center of the mesh

Torus

- Torus topology, also called a k-ary n-cube, is an n-dimensional grid with k nodes in each dimension
- A k-ary 1-cube (1-D torus) is essentially a ring network with k nodes; it has limited scalability, as performance decreases when more nodes are added
- A k-ary 2-cube (i.e., 2-D torus) topology is similar to a regular mesh, except that nodes at the edges are connected to switches at the opposite edge via wrap-around channels
- The long end-around connections can, however, lead to excessive delays
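One way to see what the wrap-around channels buy is to compare minimal hop counts. The sketch below (helper names are hypothetical) uses the Manhattan distance for a mesh and, for a k-ary n-cube, lets each dimension take the shorter way around its ring. It assumes minimal routing and counts hops only, so it ignores the extra wire delay of the long end-around links.

```python
from itertools import product


def mesh_hops(a, b):
    """Minimal hop count in an n-D mesh: the Manhattan distance."""
    return sum(abs(x - y) for x, y in zip(a, b))


def torus_hops(a, b, k):
    """Minimal hop count in a k-ary n-cube: each dimension may use its wrap-around link."""
    return sum(min(abs(x - y), k - abs(x - y)) for x, y in zip(a, b))


def diameter(k, n, dist):
    """Largest minimal distance over all node pairs of a k x ... x k (n-D) grid."""
    nodes = list(product(range(k), repeat=n))
    return max(dist(a, b) for a in nodes for b in nodes)


if __name__ == "__main__":
    for k in (4, 8):
        mesh_d = diameter(k, 2, mesh_hops)
        torus_d = diameter(k, 2, lambda a, b: torus_hops(a, b, k))
        print(f"{k}x{k}: {k ** 2} nodes, mesh diameter {mesh_d}, torus diameter {torus_d}")
    # 4x4: 16 nodes, mesh diameter 6, torus diameter 4
    # 8x8: 64 nodes, mesh diameter 14, torus diameter 8
```

A 1-D torus (ring) of k nodes has a diameter of k/2 (rounded down), which grows linearly with k; this is the limited scalability noted for the k-ary 1-cube.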

Folded Torus

- Folded torus topology overcomes the long-link limitation of a 2-D torus
- Meshes and tori can be extended by adding bypass links to increase performance, at the cost of higher area

Octagon

- Octagon topology is another example of a direct network
- Messages sent between any 2 nodes require at most two hops
- More octagons can be tiled together to accommodate larger designs, using one of the nodes as a bridge node

Indirect Topologies

- Each node is connected to an external switch, and switches have point-to-point links to other switches
- Examples: fat tree topology, butterfly topology

Irregular Topologies

- Irregular or ad hoc network topologies are customized for an application
- Usually a mix of shared bus, direct, and indirect network topologies
- E.g., reduced mesh, cluster-based hybrid topology
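Two claims above can be checked with a short script: folding a ring (placing logical nodes in the physical order 0, k-1, 1, k-2, …) bounds every link to at most two node pitches, and an octagon reaches any node in at most two hops. The sketch assumes the usual octagon wiring, an 8-node ring plus a link between each node and the node opposite it; all function names are illustrative.

```python
from collections import deque


def folded_positions(k):
    """Physical slot of each logical ring node after folding: 0, k-1, 1, k-2, ... interleaved."""
    order, lo, hi = [], 0, k - 1
    while lo <= hi:
        order.append(lo)
        if lo != hi:
            order.append(hi)
        lo, hi = lo + 1, hi - 1
    return {node: slot for slot, node in enumerate(order)}


def max_link_length(pos, k):
    """Longest ring link (logical i to i+1 mod k), measured in physical slots."""
    return max(abs(pos[i] - pos[(i + 1) % k]) for i in range(k))


def ring_neighbors(i):
    return {(i + 1) % 8, (i - 1) % 8}


def octagon_neighbors(i):
    """Assumed octagon wiring: ring links plus a link to the opposite node."""
    return ring_neighbors(i) | {(i + 4) % 8}


def diameter(neighbors, num_nodes):
    """Maximum BFS hop count over all source/destination pairs."""
    worst = 0
    for src in range(num_nodes):
        dist, queue = {src: 0}, deque([src])
        while queue:
            u = queue.popleft()
            for v in neighbors(u):
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        worst = max(worst, max(dist.values()))
    return worst


if __name__ == "__main__":
    print(max_link_length({i: i for i in range(8)}, 8))  # 7: plain ring, long end-around link
    print(max_link_length(folded_positions(8), 8))       # 2: folded ring
    print(diameter(ring_neighbors, 8), diameter(octagon_neighbors, 8))  # 4 2
```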

Switching Techniques

- Circuit switching
  - Pros: dedicated links, simple, low overhead, full bandwidth
  - Cons: inflexible, low utilization
- Packet switching
  - Pros: shared links, flexible, variable bit rate (payload length)
  - Cons: packet overhead
  - Switching mode:
    - Datagram switching: packet oriented
    - Virtual circuit switching: connection oriented
  - Switching scheme:
    - Store and Forward (SAF) switching
    - Virtual Cut-Through (VCT), e.g., Ethernet: low latency, decreased reliability
    - Wormhole switching (WH), e.g., NoC: few buffers, lower latency, decreased reliability

Packet Switching (Store and Forward)

- Packets are completely stored before any portion is forwarded
- Requirement: buffers must be sized to hold an entire packet

[Figure: source end node → switch (store, routing, forward) → destination end node]

Packet Switching (Virtual Cut-Through)

- Portions of a packet may be forwarded ("cut-through") to the next switch before the entire packet is stored at the current switch
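The latency gap between store-and-forward and cut-through can be put in numbers with a common zero-load model: with H hops, a per-router delay t_r, packet length L bits and link bandwidth b bits per cycle, SAF pays the serialization time L/b at every hop, T_SAF = H·(t_r + L/b), while cut-through (and wormhole) pays it once, T_CT = H·t_r + L/b. This is a simplified sketch assuming no contention and ignoring wire delay; the function names and example parameters are hypothetical.

```python
import math


def serialization_cycles(packet_bits, link_bits_per_cycle):
    """Cycles needed to push the whole packet across one link."""
    return math.ceil(packet_bits / link_bits_per_cycle)


def saf_latency(hops, t_r, packet_bits, link_bits_per_cycle):
    """Store-and-forward: every router receives the whole packet before forwarding it."""
    return hops * (t_r + serialization_cycles(packet_bits, link_bits_per_cycle))


def cut_through_latency(hops, t_r, packet_bits, link_bits_per_cycle):
    """Cut-through/wormhole: the header pipelines through the routers, the body follows."""
    return hops * t_r + serialization_cycles(packet_bits, link_bits_per_cycle)


if __name__ == "__main__":
    # Assumed example: 5 hops, 3-cycle routers, 512-bit packet on a 32-bit link.
    print(saf_latency(5, 3, 512, 32))          # 95
    print(cut_through_latency(5, 3, 512, 32))  # 31
```

Under this model the two schemes are equal for a single hop, and the gap grows with both the hop count and the packet length.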

Virtual Cut-Through vs. Wormhole

- Virtual cut-through works at packet level: buffers hold data packets and must be sized to hold an entire packet. If the outgoing link is busy, the packet is completely stored at the switch.
- Wormhole works at flit level: buffers hold flits, so packets can be larger than the buffers. If the outgoing link is busy, the packet is stored along the path.

Routing Algorithms

Responsible for correctly and efficiently routing packets or circuits from the source to the destination:

- Ensure load balancing
- Latency minimization
- Deadlock and livelock free

Deadlock

[Figure: deadlock example between source S and destination D]
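In wormhole switching a blocked packet keeps holding the channels along its path, so deadlock appears as a cycle of packets, each holding one channel while waiting for a channel held by the next. The sketch below models this as a hypothetical channel wait-for graph (edge u → v means "a packet holding channel u is waiting for channel v") and detects the cycle with a depth-first search; the four-router loop is an invented example.

```python
def has_cycle(graph):
    """True if the directed graph (dict: node -> list of successors) contains a cycle."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color = {u: WHITE for u in graph}

    def visit(u):
        color[u] = GRAY
        for v in graph.get(u, []):
            state = color.get(v, WHITE)
            if state == GRAY:  # back edge: a circular wait
                return True
            if state == WHITE and visit(v):
                return True
        color[u] = BLACK
        return False

    return any(color[u] == WHITE and visit(u) for u in list(graph))


# Four packets around routers A, B, D, C of a 2x2 mesh: each holds one link
# and waits for the link held by the next packet (hypothetical scenario).
deadlocked = {"A->B": ["B->D"], "B->D": ["D->C"], "D->C": ["C->A"], "C->A": ["A->B"]}
# Same situation, except the packet on C->A is ejected at A and waits on nothing.
no_cycle = {"A->B": ["B->D"], "B->D": ["D->C"], "D->C": ["C->A"], "C->A": []}

if __name__ == "__main__":
    print(has_cycle(deadlocked))  # True
    print(has_cycle(no_cycle))    # False
```

Deadlock-free routing algorithms restrict the routes so that such cycles can never form, which is the idea behind the turn model.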

Livelock

[Figure: livelock example]

Static vs. Dynamic Routing

- Static routing
  - Pros: simple logic, low overhead; guaranteed in-order packet delivery
  - Cons: does not take into account the current state of the network
- Dynamic routing
  - Pros: dynamic traffic distribution according to the current state of the network
  - Cons: complex; needs to monitor the state of the network and dynamically change routing paths

Turn Model Based Routing Algorithm

- Mainly for mesh NoCs
- Analyze the directions in which packets can turn in the network
- Determine the cycles that such turns can form
- Prohibit just enough turns to break all cycles
- The resulting routing algorithms are:
  - Deadlock and livelock free
  - Minimal or non-minimal
  - Highly adaptive, based on the network load

Turn Model Basis: What Is a Turn

- From one dimension to another: 90-degree turn
- To another virtual channel in the same direction: 0-degree turn
- To the reverse direction: 180-degree turn
- Turns combine to form cycles
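In a 2-D mesh the eight 90-degree turns form two simple cycles, one clockwise and one counter-clockwise. The sketch below writes a turn as (current heading, new heading) and checks that the turn sets prohibited by the X-Y, West-First and North-Last algorithms each remove at least one turn from both cycles. The prohibited sets follow the usual definitions of these algorithms; the names are illustrative. Note that this check is necessary but not sufficient in general: some choices of one turn per cycle still allow more complex cycles.

```python
# Headings in a 2-D mesh: N, E, S, W. A turn is (current heading, new heading).
CLOCKWISE = {("N", "E"), ("E", "S"), ("S", "W"), ("W", "N")}
COUNTER_CLOCKWISE = {("N", "W"), ("W", "S"), ("S", "E"), ("E", "N")}

PROHIBITED = {
    "XY": {("N", "E"), ("N", "W"), ("S", "E"), ("S", "W")},  # never turn from Y back to X
    "West-First": {("N", "W"), ("S", "W")},                  # never turn into West
    "North-Last": {("N", "E"), ("N", "W")},                  # never turn out of North
}


def breaks_simple_cycles(prohibited):
    """True if at least one turn of each simple 4-turn cycle is prohibited.

    Necessary for deadlock freedom, but not sufficient on its own.
    """
    return all(cycle & prohibited for cycle in (CLOCKWISE, COUNTER_CLOCKWISE))


if __name__ == "__main__":
    for name, turns in PROHIBITED.items():
        print(f"{name}: {8 - len(turns)} of 8 turns allowed, "
              f"both simple cycles broken: {breaks_simple_cycles(turns)}")
    # Prohibiting a single turn leaves the other cycle intact:
    print(breaks_simple_cycles({("S", "W")}))  # False
```

X-Y removes four turns and is therefore deterministic, while West-First and North-Last remove only two, which leaves room for adaptive choices.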

X-Y Routing Algorithm (Deterministic)

[Figure: allowed turns between the +x, -x, +y and -y directions under X-Y routing]

West-First Routing Algorithm

[Figure: allowed turns under West-First routing, and example West-First routes from source S to destination D]
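The two algorithms can be written as next-hop functions for a 2-D mesh. The sketch assumes x grows to the East and y to the North, and uses the standard minimal formulations: X-Y corrects the X offset completely before the Y offset, and West-First takes all westward hops first and afterwards may choose adaptively among the productive E, N and S ports. The port names and the `walk` helper are hypothetical; a real router would pick among the West-First candidates using local congestion information.

```python
STEP = {"E": (1, 0), "W": (-1, 0), "N": (0, 1), "S": (0, -1)}


def xy_route(cur, dst):
    """Deterministic X-Y routing: fix the X offset first, then the Y offset."""
    (x, y), (dx, dy) = cur, dst
    if dx > x:
        return "E"
    if dx < x:
        return "W"
    if dy > y:
        return "N"
    if dy < y:
        return "S"
    return "LOCAL"


def west_first_candidates(cur, dst):
    """Minimal West-First: West if needed (no choice), else any productive E/N/S port."""
    (x, y), (dx, dy) = cur, dst
    if dx < x:
        return ["W"]  # turns into West are prohibited, so West hops come first
    ports = []
    if dx > x:
        ports.append("E")
    if dy > y:
        ports.append("N")
    if dy < y:
        ports.append("S")
    return ports or ["LOCAL"]


def walk(src, dst, choose):
    """Follow next-hop decisions from src to dst and return the list of output ports."""
    path, cur = [], src
    while cur != dst:
        port = choose(cur, dst)
        path.append(port)
        cur = (cur[0] + STEP[port][0], cur[1] + STEP[port][1])
    return path


if __name__ == "__main__":
    print(walk((0, 0), (2, 1), xy_route))                    # ['E', 'E', 'N']
    first = lambda c, d: west_first_candidates(c, d)[0]
    print(walk((2, 0), (0, 2), first))                       # ['W', 'W', 'N', 'N']
    print(west_first_candidates((0, 0), (2, 2)))             # ['E', 'N']: adaptive choice
```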

West-First Routing Algorithm

[Figure: West-First example routes from S to D]

North-Last Routing Algorithm

[Figure: North-Last example routes from S to D]

Source Routing

Flow Control

- Required in non-circuit-switched networks to deal with congestion
- Recover from transmission errors
- Commonly used schemes:
  - ACK-NACK flow control
  - Credit-based flow control
  - Xon/Xoff (STALL-GO)

Flow Control: "Backpressure"

[Figure: nodes A → B → C with full buffers; each full node signals "don't send" to its upstream neighbor]
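North-Last is the mirror image of West-First with respect to the prohibited turns: once a packet heads North it may not turn again, so any northward hops come last. The sketch below is a minimal-path version, assuming x grows to the East and y to the North; the function and port names are hypothetical. Packets going South (or staying in the same row) may choose adaptively between the productive horizontal port and S, while packets going North first finish their X offset deterministically.

```python
STEP = {"E": (1, 0), "W": (-1, 0), "N": (0, 1), "S": (0, -1)}


def north_last_candidates(cur, dst):
    """Minimal North-Last routing: output ports allowed at router `cur`."""
    (x, y), (dx, dy) = cur, dst
    horizontal = "E" if dx > x else ("W" if dx < x else None)
    if dy > y:
        # Turns out of North are prohibited: finish the X offset, then go North.
        return [horizontal] if horizontal else ["N"]
    ports = [p for p in (horizontal, "S" if dy < y else None) if p is not None]
    return ports or ["LOCAL"]


def walk(src, dst, choose):
    """Follow next-hop decisions from src to dst and return the list of output ports."""
    path, cur = [], src
    while cur != dst:
        port = choose(cur, dst)
        path.append(port)
        cur = (cur[0] + STEP[port][0], cur[1] + STEP[port][1])
    return path


if __name__ == "__main__":
    print(walk((0, 0), (2, 2), lambda c, d: north_last_candidates(c, d)[0]))   # ['E', 'E', 'N', 'N']
    print(north_last_candidates((0, 2), (2, 0)))                               # ['E', 'S']
    print(walk((0, 2), (2, 0), lambda c, d: north_last_candidates(c, d)[-1]))  # ['S', 'S', 'E', 'E']
```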

Flow Control Schemes: Credit-Based Flow Control

- The sender sends packets whenever its credit counter is not zero
- The receiver sends credits back after buffer slots become available
- The sender then resumes injection

[Figure: pipelined transfer between sender and receiver; the credit counter drops to zero while the receiver queue is not serviced, then is restored by returned credits]

Flow Control Schemes: Xon/Xoff Flow Control

- When the Xoff threshold is reached, an Xoff notification is sent
- When in Xoff, the sender cannot inject packets
- When in Xon, a packet is injected if the control bit is set to Xon

[Figure: pipelined transfer with an Xon/Xoff control bit at the sender while the receiver queue is not serviced]
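The two schemes can be compared with a small cycle-level sketch of one link whose receiver queue is not serviced for the first few cycles. The class names, thresholds and buffer size are hypothetical, and the model assumes zero latency for credits and control bits; on a real pipelined link, credits arrive a round trip later, and the Xoff threshold must leave headroom for flits already in flight.

```python
from collections import deque


class CreditLink:
    """Credit-based flow control on one link (credit return latency ignored)."""

    def __init__(self, buffer_slots):
        self.credits = buffer_slots  # sender's count of free receiver slots
        self.rx_queue = deque()      # receiver input buffer

    def try_send(self, flit):
        if self.credits == 0:
            return False             # no credit left: the sender stalls
        self.credits -= 1
        self.rx_queue.append(flit)
        return True

    def receiver_consume(self):
        if self.rx_queue:
            self.rx_queue.popleft()  # a flit leaves the receiver buffer...
            self.credits += 1        # ...and one credit goes back to the sender


class XonXoffLink:
    """Xon/Xoff (STALL-GO) flow control: one control bit with two thresholds."""

    def __init__(self, xoff_threshold, xon_threshold):
        self.rx_queue = deque()
        self.xoff_at = xoff_threshold  # occupancy that triggers Xoff
        self.xon_at = xon_threshold    # occupancy at which Xon is restored
        self.xon = True                # control bit seen by the sender

    def _update_control_bit(self):
        occupancy = len(self.rx_queue)
        if occupancy >= self.xoff_at:
            self.xon = False
        elif occupancy <= self.xon_at:
            self.xon = True

    def try_send(self, flit):
        if not self.xon:
            return False               # in Xoff the sender cannot inject
        self.rx_queue.append(flit)
        self._update_control_bit()
        return True

    def receiver_consume(self):
        if self.rx_queue:
            self.rx_queue.popleft()
        self._update_control_bit()


def run(link, cycles, serviced_from):
    """Sender offers one flit per cycle; the queue is not serviced before `serviced_from`."""
    sent = []
    for t in range(cycles):
        sent.append(link.try_send(t))
        if t >= serviced_from:
            link.receiver_consume()
    return sent


if __name__ == "__main__":
    print(run(CreditLink(4), 8, 6))       # [True, True, True, True, False, False, False, True]
    print(run(XonXoffLink(3, 1), 8, 4))   # [True, True, True, False, False, False, True, True]
```

Credit-based control never overflows the receiver buffer as long as the initial credit count equals the buffer size. Xon/Xoff needs only a single bit per link, and the gap between the two thresholds keeps the control bit from toggling every cycle.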
