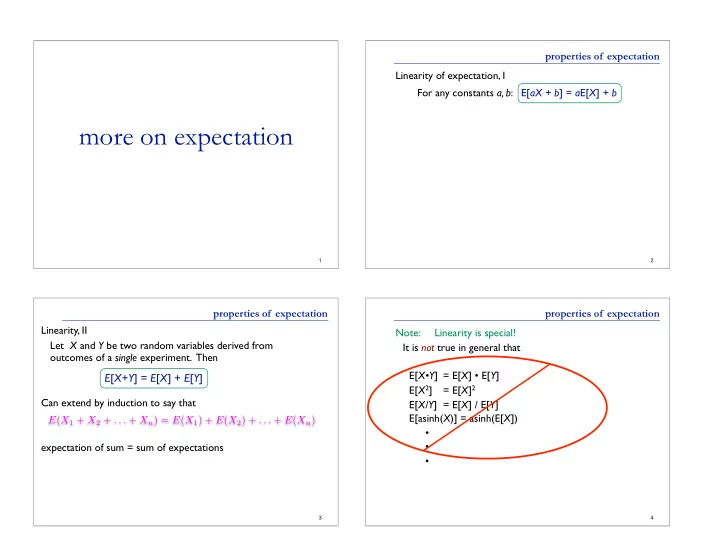

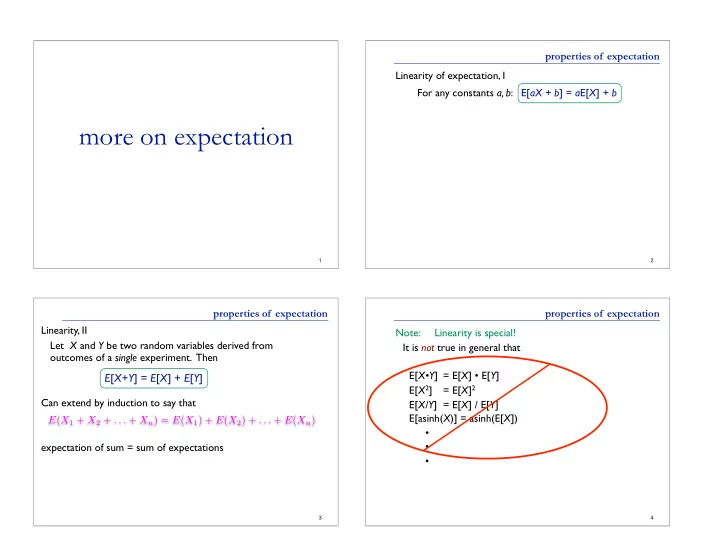

more on expectation

properties of expectation

Linearity of expectation, I

For any constants a, b: E[aX + b] = a E[X] + b

Linearity, II

Let X and Y be two random variables derived from outcomes of a single experiment. Then

E[X + Y] = E[X] + E[Y]

Can extend by induction to say that

E[X₁ + X₂ + ... + Xₙ] = E[X₁] + E[X₂] + ... + E[Xₙ]

• expectation of sum = sum of expectations

Note: Linearity is special! It is not true in general that

• E[X·Y] = E[X]·E[Y]
• E[X²] = E[X]²
• E[X/Y] = E[X] / E[Y]
• E[asinh(X)] = asinh(E[X])
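Linearity, and its failure for products and squares, can be checked by brute-force enumeration. The sketch below assumes two fair six-sided dice as the experiment (an example chosen here, not one taken from the slides); the helper name `expect` is likewise hypothetical. Exact fractions are used so the equalities hold without rounding.

```python
from fractions import Fraction
from itertools import product

# Assumed experiment: roll two fair six-sided dice (x, y).
outcomes = list(product(range(1, 7), repeat=2))
p = Fraction(1, len(outcomes))  # each of the 36 outcomes is equally likely

def expect(f):
    """E[f(x, y)] by direct summation over the sample space."""
    return sum(p * f(x, y) for x, y in outcomes)

e_x = expect(lambda x, y: x)
e_y = expect(lambda x, y: y)
e_max = expect(lambda x, y: max(x, y))

# Linearity holds, even when the two variables are dependent (x and max(x, y)).
assert expect(lambda x, y: 3 * x + 2) == 3 * e_x + 2
assert expect(lambda x, y: x + y) == e_x + e_y
assert expect(lambda x, y: x + max(x, y)) == e_x + e_max

# Squaring is not linear: E[X^2] differs from E[X]^2.
e_x2 = expect(lambda x, y: x * x)
print(e_x, e_x2, e_x ** 2)  # 7/2 91/6 49/4
```

The third assertion is the interesting one: x and max(x, y) are clearly not independent, yet the expectation of their sum still splits.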

risk

Alice & Bob are gambling. X = Alice's gain per flip, with E[X] = 0.

. . . Time passes . . .

Alice (yawning) says "let's raise the stakes." With the new stakes, Alice's gain per flip is Y, and E[Y] = 0, as before.

Are you (Bob) equally happy to play the new game?

variance

E[X] measures the "average" or "central tendency" of X. What about its variability?

Definitions

The variance of a random variable X with mean E[X] = μ is Var[X] = E[(X − μ)²], often denoted σ².

The standard deviation of X is σ = √Var[X].
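The definitions translate directly into code for a discrete random variable given as a value-to-probability table. This is a minimal sketch: the function names are hypothetical, and the two games are assumed to pay ±1 and ±1000 on a fair coin flip, which is one choice consistent with both having mean 0.

```python
from fractions import Fraction
from math import sqrt

def mean(pmf):
    """E[X] for a pmf given as {value: probability}."""
    return sum(p * x for x, p in pmf.items())

def variance(pmf):
    """Var[X] = E[(X - mu)^2], straight from the definition."""
    mu = mean(pmf)
    return sum(p * (x - mu) ** 2 for x, p in pmf.items())

def std_dev(pmf):
    """sigma = sqrt(Var[X])."""
    return sqrt(variance(pmf))

half = Fraction(1, 2)
low_stakes = {-1: half, 1: half}          # assumed: win or lose 1 per flip
high_stakes = {-1000: half, 1000: half}   # assumed: win or lose 1000 per flip

for name, game in [("low", low_stakes), ("high", high_stakes)]:
    print(name, mean(game), variance(game), std_dev(game))
```

Both games have the same mean, so the expectation alone cannot tell them apart; the variance and standard deviation can.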

risk

Alice & Bob are gambling (again). X = Alice's gain per flip: E[X] = 0, Var[X] = 1.

. . . Time passes . . .

Alice (yawning) says "let's raise the stakes." E[Y] = 0, as before. Var[Y] = 1,000,000.

Are you (Bob) equally happy to play the new game?

variance

The variance of a r.v. X with mean E[X] = μ is Var[X] = E[(X − μ)²], often denoted σ².

$$E(X) = \sum_{k \in \mathrm{Range}(X)} k \Pr(X = k)$$

$$E(g(X)) = \sum_{j \in \mathrm{Range}(g(X))} j \Pr(g(X) = j) = \sum_{k \in \mathrm{Range}(X)} g(k) \Pr(X = k)$$

| Prob | outcome | X |
|------|---------|---|
| 1/6  | 123     | 3 |
| 1/6  | 132     | 1 |
| 1/6  | 213     | 1 |
| 1/6  | 231     | 0 |
| 1/6  | 312     | 0 |
| 1/6  | 321     | 1 |

what does variance tell us?

I: The square is always ≥ 0, and is exaggerated as X moves away from μ, so Var[X] emphasizes deviation from the mean.

II: Numbers vary a lot depending on the exact distribution of X, but it is common that X is within μ ± σ ~66% of the time, and within μ ± 2σ ~95% of the time. (We'll see the reasons for this soon.)

mean and variance

μ = E[X] is about location; σ = √Var[X] is about spread.

• # heads in 20 flips, p = .5: σ ≈ 2.2
• # heads in 150 flips, p = .5: σ ≈ 6.1

[Figure: the two distributions above, each with μ marked. Blue arrows denote the interval μ ± σ.] (Note that σ is bigger in absolute terms in the second example, but smaller as a proportion of μ or of the max.)
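The two ways of writing E[g(X)] can be compared on the six-row probability/outcome/X table from the slide above, taking g(k) = (k − μ)² so that E[g(X)] is the variance. This is an illustrative sketch; the variable names are made up here, and the rows are copied from the slide as extracted.

```python
from collections import defaultdict
from fractions import Fraction

# (probability, outcome, X) rows as they appear in the slide's table.
sixth = Fraction(1, 6)
rows = [
    (sixth, "123", 3), (sixth, "132", 1), (sixth, "213", 1),
    (sixth, "231", 0), (sixth, "312", 0), (sixth, "321", 1),
]

# Pr(X = k): collapse outcomes that share the same value of X.
pmf_x = defaultdict(Fraction)
for p, _, x in rows:
    pmf_x[x] += p

# E[X] = sum over k in Range(X) of k * Pr(X = k)
mu = sum(k * p for k, p in pmf_x.items())

def g(k):
    """Squared deviation from the mean, so E[g(X)] = Var[X]."""
    return (k - mu) ** 2

# Form 1: sum over j in Range(g(X)) of j * Pr(g(X) = j)
pmf_g = defaultdict(Fraction)
for k, p in pmf_x.items():
    pmf_g[g(k)] += p
form1 = sum(j * p for j, p in pmf_g.items())

# Form 2: sum over k in Range(X) of g(k) * Pr(X = k)
form2 = sum(g(k) * p for k, p in pmf_x.items())

print(mu, form1, form2)  # 1 1 1
```

The second form is usually the convenient one, since it avoids working out the distribution of g(X) first.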

example

Two games:

a) flip 1 coin, win Y = $100 if heads, $−100 if tails
b) flip 100 coins, win Z = (#(heads) − #(tails)) dollars

Same expectation in both: E[Y] = E[Z] = 0

Same extremes in both: max gain = $100; max loss = $100

But variability is very different: σ_Y = 100, σ_Z = 10

[Figure: distributions of Y and Z on an x-axis from −100 to 100; horizontal arrows = μ ± σ.]

properties of variance

Var[aX + b] = a² Var[X]

NOT linear; insensitive to location (b), quadratic in scale (a).

Ex: E[X] = 0, Var[X] = 1, and Y = 1000 X. Then

E[Y] = E[1000 X] = 1000 E[X] = 0
Var[Y] = Var[10³ X] = 10⁶ Var[X] = 10⁶

properties of variance

$$
\begin{aligned}
\mathrm{Var}(X) &= E[(X - \mu)^2] \\
&= E[X^2 - 2\mu X + \mu^2] \\
&= E[X^2] - 2\mu E[X] + \mu^2 \\
&= E[X^2] - 2\mu^2 + \mu^2 \\
&= E[X^2] - \mu^2 \\
&= E[X^2] - (E[X])^2
\end{aligned}
$$

Example: What is Var[X] when X is the outcome of one fair die?

E[X] = 7/2, so Var[X] = E[X²] − (7/2)² = 91/6 − 49/4 = 35/12.
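The numbers on these slides can be reproduced exactly with the shortcut Var[X] = E[X²] − (E[X])². The sketch below is illustrative only: the helper names are hypothetical, game (b) is modeled as 100 independent fair flips, and the scaling check uses arbitrarily chosen constants a = 1000 and b = 7.

```python
from fractions import Fraction
from math import comb, sqrt

def mean(pmf):
    """E[X] for a pmf given as {value: probability}."""
    return sum(p * x for x, p in pmf.items())

def variance(pmf):
    """Var[X] via the shortcut E[X^2] - (E[X])^2."""
    return sum(p * x * x for x, p in pmf.items()) - mean(pmf) ** 2

half = Fraction(1, 2)

# Game (a): one flip, win 100 or lose 100.
game_a = {100: half, -100: half}

# Game (b): with h heads out of 100, Z = h - (100 - h) = 2h - 100.
game_b = {2 * h - 100: Fraction(comb(100, h), 2 ** 100) for h in range(101)}

print(mean(game_a), sqrt(variance(game_a)))  # 0 100.0
print(mean(game_b), sqrt(variance(game_b)))  # 0 10.0

# One fair die.
die = {k: Fraction(1, 6) for k in range(1, 7)}
print(mean(die), variance(die))  # 7/2 35/12

# Var[aX + b] = a^2 Var[X]: the shift b drops out, the scale a is squared.
a, b = 1000, 7
scaled = {a * x + b: p for x, p in die.items()}
assert variance(scaled) == a ** 2 * variance(die)
```

Using `Fraction` keeps every probability exact, so the final assertion is a true equality rather than a floating-point approximation.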

properties of variance

In general: Var[X + Y] ≠ Var[X] + Var[Y]. NOT linear.

Ex 1: Let X = ±1 based on 1 coin flip. As shown above, E[X] = 0, Var[X] = 1. Let Y = −X; then Var[Y] = (−1)² Var[X] = 1. But X + Y = 0, always, so Var[X + Y] = 0.

Ex 2: As another example, is Var[X + X] = 2 Var[X]?

more variance examples

[Figure: four distributions plotted on a common x-axis (ticks at −4, −2, 0, 2, 4), with σ² = 5.83, σ² = 10, σ² = 15, and σ² = 19.7.]
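Both examples can be worked out by enumerating the single coin flip. This is a small sketch with hypothetical names; it takes X = ±1 with probability 1/2 each, as in Ex 1. For Ex 2, since X + X = 2X, the rule Var[aX + b] = a² Var[X] gives 4 Var[X] rather than 2 Var[X], and the enumeration agrees.

```python
from fractions import Fraction

half = Fraction(1, 2)
coin = [(-1, half), (1, half)]  # (value of X, probability)

def variance(dist):
    """Var of a list of (value, probability) pairs; repeated values are fine."""
    mu = sum(p * v for v, p in dist)
    return sum(p * (v - mu) ** 2 for v, p in dist)

var_x = variance(coin)
var_y = variance([(-x, p) for x, p in coin])            # Y = -X
var_x_plus_y = variance([(x + (-x), p) for x, p in coin])  # X + Y is always 0
var_x_plus_x = variance([(x + x, p) for x, p in coin])     # X + X = 2X

print(var_x, var_y, var_x_plus_y)  # 1 1 0
print(var_x_plus_x)                # 4
```

In both cases the two summands are completely dependent on each other, which is why adding the individual variances gives the wrong answer.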
