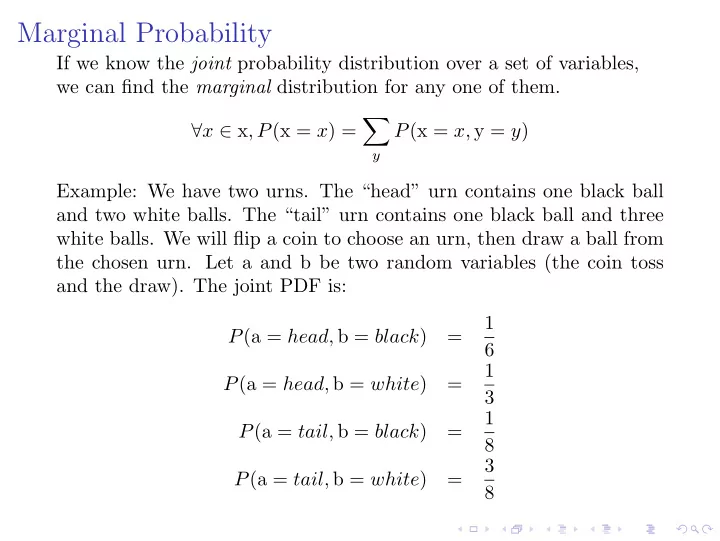

Marginal Probability

If we know the joint probability distribution over a set of variables, we can find the marginal distribution for any one of them by summing over the values of the others:

$$\forall x \in \mathrm{x},\quad P(\mathrm{x} = x) = \sum_{y} P(\mathrm{x} = x, \mathrm{y} = y)$$

Example: We have two urns. The "head" urn contains one black ball and two white balls. The "tail" urn contains one black ball and three white balls. We flip a coin to choose an urn, then draw a ball from the chosen urn. Let a and b be two random variables (the coin toss and the draw). The joint probability distribution is:

$$\begin{aligned}
P(\mathrm{a} = \text{head}, \mathrm{b} = \text{black}) &= \tfrac{1}{6} \\
P(\mathrm{a} = \text{head}, \mathrm{b} = \text{white}) &= \tfrac{1}{3} \\
P(\mathrm{a} = \text{tail}, \mathrm{b} = \text{black}) &= \tfrac{1}{8} \\
P(\mathrm{a} = \text{tail}, \mathrm{b} = \text{white}) &= \tfrac{3}{8}
\end{aligned}$$

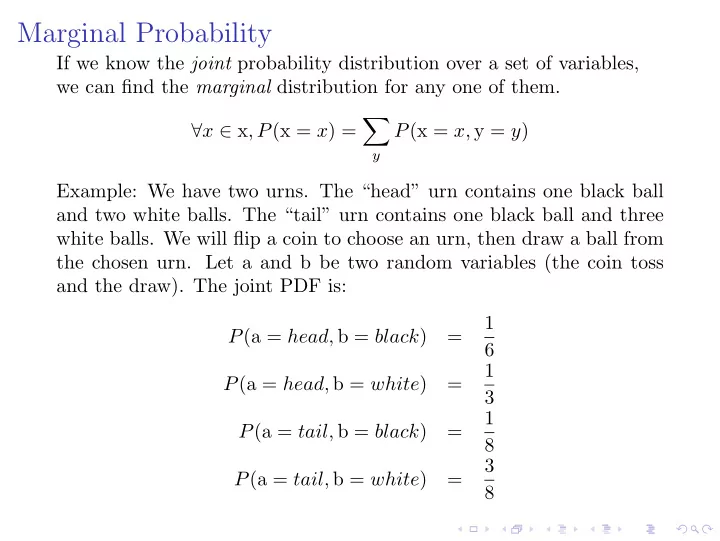

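Marginal Probability

The marginal probability distribution for a is:

$$\begin{aligned}
P(\mathrm{a} = \text{head}) &= \sum_{y \in \mathrm{b}} P(\mathrm{a} = \text{head}, \mathrm{b} = y) = \tfrac{1}{6} + \tfrac{1}{3} = \tfrac{1}{2} \\
P(\mathrm{a} = \text{tail}) &= \sum_{y \in \mathrm{b}} P(\mathrm{a} = \text{tail}, \mathrm{b} = y) = \tfrac{1}{8} + \tfrac{3}{8} = \tfrac{1}{2}
\end{aligned}$$

The marginal probability distribution for b is:

$$\begin{aligned}
P(\mathrm{b} = \text{black}) &= \sum_{y \in \mathrm{a}} P(\mathrm{b} = \text{black}, \mathrm{a} = y) = \tfrac{1}{6} + \tfrac{1}{8} = \tfrac{7}{24} \\
P(\mathrm{b} = \text{white}) &= \sum_{y \in \mathrm{a}} P(\mathrm{b} = \text{white}, \mathrm{a} = y) = \tfrac{1}{3} + \tfrac{3}{8} = \tfrac{17}{24}
\end{aligned}$$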
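The marginal sums can be checked with a few lines of Python. This is a minimal sketch: the dictionary layout and the helper name `marginal` are illustrative choices, not part of the slides, and `Fraction` is used so the arithmetic stays exact.

```python
from fractions import Fraction

# Joint distribution from the urn example, keyed by (coin, ball).
joint = {
    ("head", "black"): Fraction(1, 6),
    ("head", "white"): Fraction(1, 3),
    ("tail", "black"): Fraction(1, 8),
    ("tail", "white"): Fraction(3, 8),
}

def marginal(joint, axis):
    """Sum the joint over every variable except the one at position `axis`."""
    result = {}
    for outcome, p in joint.items():
        key = outcome[axis]
        result[key] = result.get(key, Fraction(0)) + p
    return result

p_a = marginal(joint, 0)  # marginal of the coin toss
p_b = marginal(joint, 1)  # marginal of the draw
print(p_a)
print(p_b)
```

Each marginal is a dictionary from value to probability, and each one sums to 1.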

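Conditional Probability

$$P(\mathrm{y} = y \mid \mathrm{x} = x) = \frac{P(\mathrm{y} = y, \mathrm{x} = x)}{P(\mathrm{x} = x)}$$

From the urns:

$$\begin{aligned}
P(\mathrm{b} = \text{black} \mid \mathrm{a} = \text{head}) &= \frac{P(\mathrm{a} = \text{head}, \mathrm{b} = \text{black})}{P(\mathrm{a} = \text{head})} = \frac{1/6}{1/2} = \tfrac{1}{3} \\
P(\mathrm{b} = \text{white} \mid \mathrm{a} = \text{head}) &= \frac{P(\mathrm{a} = \text{head}, \mathrm{b} = \text{white})}{P(\mathrm{a} = \text{head})} = \frac{1/3}{1/2} = \tfrac{2}{3} \\
P(\mathrm{b} = \text{black} \mid \mathrm{a} = \text{tail}) &= \frac{P(\mathrm{a} = \text{tail}, \mathrm{b} = \text{black})}{P(\mathrm{a} = \text{tail})} = \frac{1/8}{1/2} = \tfrac{1}{4} \\
P(\mathrm{b} = \text{white} \mid \mathrm{a} = \text{tail}) &= \frac{P(\mathrm{a} = \text{tail}, \mathrm{b} = \text{white})}{P(\mathrm{a} = \text{tail})} = \frac{3/8}{1/2} = \tfrac{3}{4}
\end{aligned}$$

The outcome of b depends on the outcome of a, so the description of the experiment gives $P(\mathrm{b} = b \mid \mathrm{a} = a)$ directly. The reverse conditional $P(\mathrm{a} = a \mid \mathrm{b} = b)$ cannot be read off the setup in the same way; it has to be derived from the joint and marginal distributions.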
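The four conditional probabilities follow mechanically from the definition. The sketch below assumes the same dictionary layout for the joint distribution; the function name `conditional_b_given_a` is hypothetical.

```python
from fractions import Fraction

# Joint distribution from the urn example, keyed by (coin, ball).
joint = {
    ("head", "black"): Fraction(1, 6),
    ("head", "white"): Fraction(1, 3),
    ("tail", "black"): Fraction(1, 8),
    ("tail", "white"): Fraction(3, 8),
}

def conditional_b_given_a(joint, a_value):
    """Return P(b | a = a_value) as a dict, using P(a, b) / P(a)."""
    p_a = sum(p for (a, _), p in joint.items() if a == a_value)
    if p_a == 0:
        # The definition is undefined when the conditioning event cannot occur.
        raise ValueError("conditioning event has probability zero")
    return {b: p / p_a for (a, b), p in joint.items() if a == a_value}

b_given_head = conditional_b_given_a(joint, "head")
b_given_tail = conditional_b_given_a(joint, "tail")
print(b_given_head)
print(b_given_tail)
```

The results match the urn contents: one black ball out of three in the "head" urn, one out of four in the "tail" urn.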

Chain Rule of Conditional Probability

Re-writing conditional probability:

$$P(\mathrm{y} = y \mid \mathrm{x} = x) = \frac{P(\mathrm{y} = y, \mathrm{x} = x)}{P(\mathrm{x} = x)}$$

$$P(\mathrm{y} = y, \mathrm{x} = x) = P(\mathrm{y} = y \mid \mathrm{x} = x) \cdot P(\mathrm{x} = x)$$

More generally:

$$P(A_n, \ldots, A_1) = P(A_n \mid A_{n-1}, \ldots, A_1) \cdot P(A_{n-1}, \ldots, A_1)$$

$$P\left(\bigcap_{k=1}^{n} A_k\right) = P\left(A_n \,\middle|\, \bigcap_{k=1}^{n-1} A_k\right) \cdot P\left(\bigcap_{k=1}^{n-1} A_k\right)$$

Chain Rule of Conditional Probability

Repeating this process with each final term creates the product:

$$P\left(\bigcap_{k=1}^{n} A_k\right) = \prod_{k=1}^{n} P\left(A_k \,\middle|\, \bigcap_{j=1}^{k-1} A_j\right)$$

With four variables, the chain rule produces this product of conditional probabilities:

$$P(A_4, A_3, A_2, A_1) = P(A_4 \mid A_3, A_2, A_1) \cdot P(A_3 \mid A_2, A_1) \cdot P(A_2 \mid A_1) \cdot P(A_1)$$
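The four-variable factorisation can be verified numerically. The joint distribution below is made up purely for illustration (weights 1 to 16, normalised); it does not come from the slides, and the helper names `prefix_prob` and `chain_rule` are assumptions.

```python
from fractions import Fraction
from itertools import product

# Hypothetical joint over four binary variables (A1, A2, A3, A4):
# unnormalised weights 1..16, divided by their sum so they add up to 1.
outcomes = list(product((0, 1), repeat=4))
total = sum(range(1, len(outcomes) + 1))
joint = {o: Fraction(i + 1, total) for i, o in enumerate(outcomes)}

def prefix_prob(prefix):
    """P(A1 = prefix[0], ..., Ak = prefix[k-1]), marginalising the rest."""
    k = len(prefix)
    return sum(p for o, p in joint.items() if o[:k] == prefix)

def chain_rule(outcome):
    """Multiply the factors P(Ak | A1, ..., A(k-1)) for k = 1..n."""
    result = Fraction(1)
    for k in range(1, len(outcome) + 1):
        # Each factor is a conditional probability: P(A1..Ak) / P(A1..A(k-1)).
        result *= prefix_prob(outcome[:k]) / prefix_prob(outcome[:k - 1])
    return result

# The product of conditionals should reproduce every joint entry exactly.
all_match = all(chain_rule(o) == joint[o] for o in outcomes)
print(all_match)
```

Every weight is positive, so no conditioning event has probability zero and each factor is well defined.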

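Chain Rule of Conditional Probability

Urn example:

$$\begin{aligned}
P(\mathrm{a} = \text{head}, \mathrm{b} = \text{black}) &= P(\mathrm{b} = \text{black} \mid \mathrm{a} = \text{head}) \, P(\mathrm{a} = \text{head}) = \tfrac{1}{3} \cdot \tfrac{1}{2} = \tfrac{1}{6} \\
P(\mathrm{a} = \text{head}, \mathrm{b} = \text{white}) &= P(\mathrm{b} = \text{white} \mid \mathrm{a} = \text{head}) \, P(\mathrm{a} = \text{head}) = \tfrac{2}{3} \cdot \tfrac{1}{2} = \tfrac{1}{3} \\
P(\mathrm{a} = \text{tail}, \mathrm{b} = \text{black}) &= P(\mathrm{b} = \text{black} \mid \mathrm{a} = \text{tail}) \, P(\mathrm{a} = \text{tail}) = \tfrac{1}{4} \cdot \tfrac{1}{2} = \tfrac{1}{8} \\
P(\mathrm{a} = \text{tail}, \mathrm{b} = \text{white}) &= P(\mathrm{b} = \text{white} \mid \mathrm{a} = \text{tail}) \, P(\mathrm{a} = \text{tail}) = \tfrac{3}{4} \cdot \tfrac{1}{2} = \tfrac{3}{8}
\end{aligned}$$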
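The same products can be computed directly from the coin probabilities and the urn contents. A minimal sketch, with the variable names `p_a` and `p_b_given_a` chosen here for illustration:

```python
from fractions import Fraction

# P(a): the coin toss.
p_a = {"head": Fraction(1, 2), "tail": Fraction(1, 2)}

# P(b | a): ball colour given the chosen urn.
p_b_given_a = {
    "head": {"black": Fraction(1, 3), "white": Fraction(2, 3)},
    "tail": {"black": Fraction(1, 4), "white": Fraction(3, 4)},
}

# Chain rule: P(a, b) = P(b | a) * P(a).
joint = {
    (a, b): p_b_given_a[a][b] * p_a[a]
    for a in p_a
    for b in p_b_given_a[a]
}

for outcome, p in joint.items():
    print(outcome, p)
```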

Independence

Two random variables x and y are independent if their joint probability distribution is equal to the product of their marginal distributions:

$$\forall x \in \mathrm{x},\ y \in \mathrm{y},\quad P(\mathrm{x} = x, \mathrm{y} = y) = P(\mathrm{x} = x) \, P(\mathrm{y} = y)$$

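Independence - Example

If we flip two coins, c and d, the marginal and joint probabilities are:

$$\begin{aligned}
P(\mathrm{c} = \text{head}) &= \tfrac{1}{2} & P(\mathrm{c} = \text{head}, \mathrm{d} = \text{head}) &= \tfrac{1}{4} \\
P(\mathrm{c} = \text{tail}) &= \tfrac{1}{2} & P(\mathrm{c} = \text{head}, \mathrm{d} = \text{tail}) &= \tfrac{1}{4} \\
P(\mathrm{d} = \text{head}) &= \tfrac{1}{2} & P(\mathrm{c} = \text{tail}, \mathrm{d} = \text{head}) &= \tfrac{1}{4} \\
P(\mathrm{d} = \text{tail}) &= \tfrac{1}{2} & P(\mathrm{c} = \text{tail}, \mathrm{d} = \text{tail}) &= \tfrac{1}{4}
\end{aligned}$$

For example:

$$P(\mathrm{c} = \text{head}, \mathrm{d} = \text{head}) = \tfrac{1}{4}, \qquad P(\mathrm{c} = \text{head}) = \tfrac{1}{2}, \qquad P(\mathrm{d} = \text{head}) = \tfrac{1}{2}$$

Since

$$P(\mathrm{c} = c, \mathrm{d} = d) = P(\mathrm{c} = c) \, P(\mathrm{d} = d) \quad \forall c \in \mathrm{c},\ d \in \mathrm{d}, \tag{1}$$

c and d are independent.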

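Independence - Example

From the Urn example:

$$P(\mathrm{a} = \text{head}, \mathrm{b} = \text{black}) = \tfrac{1}{6}, \qquad P(\mathrm{a} = \text{head}) = \tfrac{1}{2}, \qquad P(\mathrm{b} = \text{black}) = \tfrac{7}{24}$$

Since

$$\tfrac{1}{6} \neq \tfrac{1}{2} \cdot \tfrac{7}{24} = \tfrac{7}{48},$$

a and b are not independent.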
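Both examples can be tested against the definition of independence in code. This is a sketch under the assumption that a joint distribution over two variables is stored as a dictionary keyed by value pairs; `is_independent` is a hypothetical helper name.

```python
from fractions import Fraction
from itertools import product

def is_independent(joint):
    """True if P(x, y) == P(x) * P(y) for every pair of values."""
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, 0) + p
        p_y[y] = p_y.get(y, 0) + p
    # A single mismatching pair is enough to rule out independence.
    return all(
        joint.get((x, y), 0) == p_x[x] * p_y[y]
        for x, y in product(p_x, p_y)
    )

# Two coin flips, c and d: every joint outcome has probability 1/4.
coins = {(c, d): Fraction(1, 4) for c, d in product(("head", "tail"), repeat=2)}

# Urn example: coin toss a and draw b.
urns = {
    ("head", "black"): Fraction(1, 6),
    ("head", "white"): Fraction(1, 3),
    ("tail", "black"): Fraction(1, 8),
    ("tail", "white"): Fraction(3, 8),
}

coins_independent = is_independent(coins)
urns_independent = is_independent(urns)
print(coins_independent, urns_independent)
```

Exact fractions matter here: with floating-point values the equality test could fail because of rounding, and a tolerance would be needed.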

Conditional Independence

Two random variables x and y are conditionally independent given a third random variable z if:

$$\forall x \in \mathrm{x},\ y \in \mathrm{y},\ z \in \mathrm{z},\quad P(\mathrm{x} = x, \mathrm{y} = y \mid \mathrm{z} = z) = P(\mathrm{x} = x \mid \mathrm{z} = z) \, P(\mathrm{y} = y \mid \mathrm{z} = z)$$

Change the Urn example so that we flip a coin (a), then draw from both urns (b and c). The outcomes of b and c are conditionally independent given a.

Notation:

$\mathrm{x} \perp \mathrm{y}$ means that x is independent of y.

$\mathrm{x} \perp \mathrm{y} \mid \mathrm{z}$ means that x and y are conditionally independent given z.
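The modified example leaves open exactly how the coin influences the two draws. As one assumed reading, not taken from the slides, suppose the coin selects an urn and b and c are two draws with replacement from that urn. Under this assumption the sketch below checks that b and c are conditionally independent given a, yet not independent once a is marginalised out. The helper `prob` is hypothetical.

```python
from fractions import Fraction
from itertools import product

colours = ("black", "white")
p_a = {"head": Fraction(1, 2), "tail": Fraction(1, 2)}
p_ball_given_a = {
    "head": {"black": Fraction(1, 3), "white": Fraction(2, 3)},
    "tail": {"black": Fraction(1, 4), "white": Fraction(3, 4)},
}

# ASSUMPTION: b and c are two draws with replacement from the urn chosen by a.
joint = {
    (a, b, c): p_a[a] * p_ball_given_a[a][b] * p_ball_given_a[a][c]
    for a in p_a
    for b, c in product(colours, repeat=2)
}

def prob(a=None, b=None, c=None):
    """Sum the joint over all entries matching the given values (None = any)."""
    return sum(
        p for (ja, jb, jc), p in joint.items()
        if a in (None, ja) and b in (None, jb) and c in (None, jc)
    )

# Conditional independence: P(b, c | a) == P(b | a) * P(c | a) for all values.
cond_indep = all(
    prob(a, b, c) / prob(a) == (prob(a, b) / prob(a)) * (prob(a, c=c) / prob(a))
    for a in p_a
    for b, c in product(colours, repeat=2)
)

# Marginal independence: P(b, c) == P(b) * P(c) for all values.
marg_indep = all(
    prob(b=b, c=c) == prob(b=b) * prob(c=c)
    for b, c in product(colours, repeat=2)
)
print(cond_indep, marg_indep)
```

Under this reading, seeing one black ball makes the "head" urn more likely, which in turn changes the probability of the second draw. That is why the marginal check fails even though the conditional one holds.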

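Bayes' Theorem and a-Priori Probability

In the Urn example, the description of the experiment did not give $P(\mathrm{a} = a \mid \mathrm{b} = b)$ directly, because b depends on a. However, we can calculate these probabilities from the known conditionals $P(\mathrm{b} = b \mid \mathrm{a} = a)$ and the a-priori probabilities $P(\mathrm{a} = a)$ using Bayes' Theorem:

$$P(A \mid B) = \frac{P(B \mid A) \, P(A)}{P(B)}$$

Urn example:

$$\begin{aligned}
P(\mathrm{a} = \text{head} \mid \mathrm{b} = \text{black}) &= \frac{P(\mathrm{b} = \text{black} \mid \mathrm{a} = \text{head}) \, P(\mathrm{a} = \text{head})}{P(\mathrm{b} = \text{black})} = \tfrac{4}{7} \\
P(\mathrm{a} = \text{tail} \mid \mathrm{b} = \text{black}) &= \frac{P(\mathrm{b} = \text{black} \mid \mathrm{a} = \text{tail}) \, P(\mathrm{a} = \text{tail})}{P(\mathrm{b} = \text{black})} = \tfrac{3}{7} \\
P(\mathrm{a} = \text{head} \mid \mathrm{b} = \text{white}) &= \frac{P(\mathrm{b} = \text{white} \mid \mathrm{a} = \text{head}) \, P(\mathrm{a} = \text{head})}{P(\mathrm{b} = \text{white})} = \tfrac{8}{17} \\
P(\mathrm{a} = \text{tail} \mid \mathrm{b} = \text{white}) &= \frac{P(\mathrm{b} = \text{white} \mid \mathrm{a} = \text{tail}) \, P(\mathrm{a} = \text{tail})}{P(\mathrm{b} = \text{white})} = \tfrac{9}{17}
\end{aligned}$$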
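The four values can be reproduced with a short sketch of Bayes' Theorem. The names `prior`, `likelihood`, and `posterior` are conventional labels chosen here, not terms used in the slides.

```python
from fractions import Fraction

# P(a): a-priori probability of each coin outcome.
prior = {"head": Fraction(1, 2), "tail": Fraction(1, 2)}

# P(b | a): ball colour given the chosen urn.
likelihood = {
    "head": {"black": Fraction(1, 3), "white": Fraction(2, 3)},
    "tail": {"black": Fraction(1, 4), "white": Fraction(3, 4)},
}

def posterior(b_value):
    """Return P(a | b = b_value) for every a, via Bayes' Theorem."""
    # P(b) is the marginal: sum over a of P(b | a) * P(a).
    evidence = sum(likelihood[a][b_value] * prior[a] for a in prior)
    return {a: likelihood[a][b_value] * prior[a] / evidence for a in prior}

given_black = posterior("black")
given_white = posterior("white")
print(given_black)
print(given_white)
```

Drawing a black ball shifts belief toward the "head" urn (4/7 against a prior of 1/2), because black is more likely there.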
