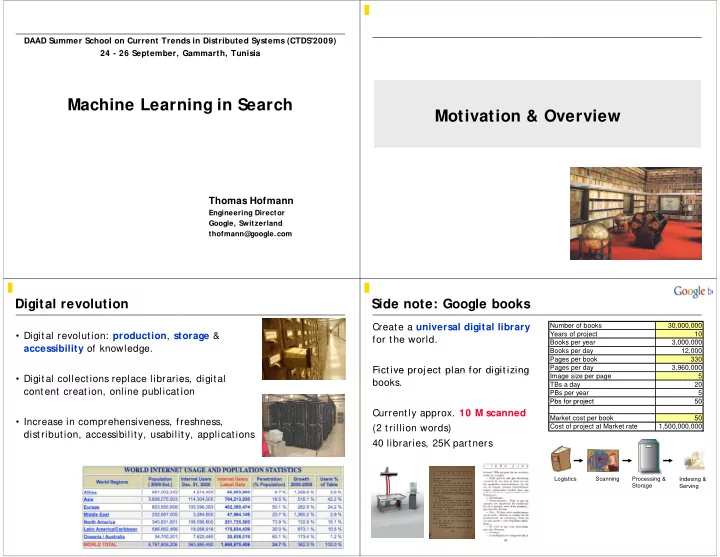

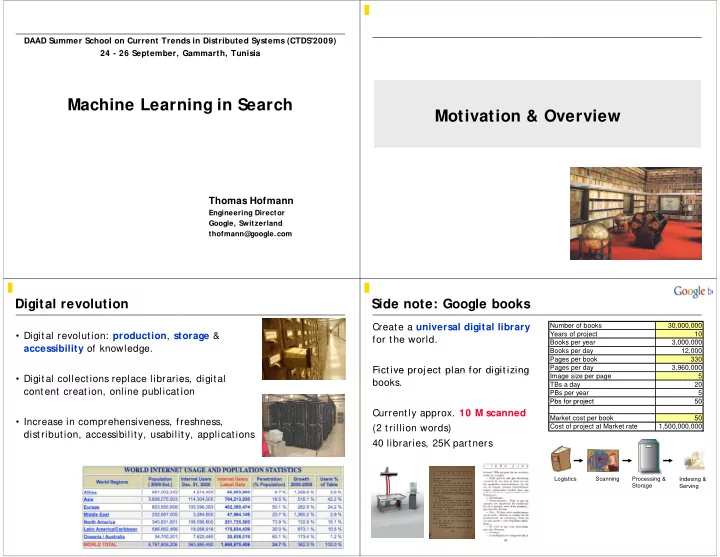

DAAD Summer School on Current Trends in Distributed Systems (CTDS'2009)
24 - 26 September, Gammarth, Tunisia

Machine Learning in Search: Motivation & Overview
Thomas Hofmann, Engineering Director, Google, Switzerland
thofmann@google.com

Digital revolution
• Digital revolution: production, storage & accessibility of knowledge.
• Digital collections replace libraries, digital content creation, online publication
• Increase in comprehensiveness, freshness, distribution, accessibility, usability, applications

Side note: Google books
Create a universal digital library for the world.

Fictive project plan for digitizing books:

| Quantity | Value |
| --- | --- |
| Number of books | 30,000,000 |
| Years of project | 10 |
| Books per year | 3,000,000 |
| Books per day | 12,000 |
| Pages per book | 330 |
| Pages per day | 3,960,000 |
| Image size per page | 5 |
| TBs a day | 20 |
| PBs per year | 5 |
| PBs for project | 50 |
| Market cost per book | 50 |
| Cost of project at market rate | 1,500,000,000 |

Currently approx. 10 M scanned (2 trillion words); 40 libraries, 25K partners.
Logistics, Scanning, Processing & Indexing & Storage, Serving
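The figures in the fictive project plan above can be checked with a few lines of arithmetic. The sketch below is only a plausibility check: the 250 working days per year, the megabyte unit for the page image size, and decimal storage units are assumptions that make the slide's rounded numbers line up; none of them is stated in the source.

```python
# Back-of-the-envelope check of the fictive digitization plan.
# Assumptions (not stated on the slide): 250 working days per year,
# page image size measured in MB, decimal units (1 TB = 1e6 MB, 1 PB = 1e3 TB).
NUM_BOOKS = 30_000_000
PROJECT_YEARS = 10
PAGES_PER_BOOK = 330
IMAGE_MB_PER_PAGE = 5        # assumed unit: MB
COST_PER_BOOK = 50           # currency is not given on the slide
WORKING_DAYS_PER_YEAR = 250  # assumption

books_per_year = NUM_BOOKS // PROJECT_YEARS
books_per_day = books_per_year // WORKING_DAYS_PER_YEAR
pages_per_day = books_per_day * PAGES_PER_BOOK
tb_per_day = pages_per_day * IMAGE_MB_PER_PAGE / 1e6
pb_per_year = tb_per_day * WORKING_DAYS_PER_YEAR / 1e3
pb_for_project = pb_per_year * PROJECT_YEARS
total_cost = NUM_BOOKS * COST_PER_BOOK

print(books_per_year, books_per_day, pages_per_day)
print(tb_per_day, pb_per_year, pb_for_project, total_cost)
```

Under these assumptions the storage figures come out slightly below the slide's values, which suggests the slide rounds them up to 20 TB, 5 PB and 50 PB.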

Search as a principle & problem

"The difficulty seems to be, not so much that we publish unduly in view of the extent and variety of present day interests, but rather that publication has been extended far beyond our present ability to make real use of the record. The summation of human experience is being expanded at a prodigious rate, and the means we use for threading through the consequent maze to the momentarily important item is the same as was used in the days of square-rigged ships."
V. Bush, As we may think, Atlantic Monthly, 176 (1945), pp. 101-108

We live in a search society: the belief that (almost) everything is known, we just have to find the information.
We search for everything: the right book, movie, car, house, vacation trip, bargain, partner, search engine etc.

Machine learning in search
• syntax: information bits & bytes; un-interpreted data (text, images, etc.)
• semantics: information & knowledge; interpreted data (meaning, interest, intention, knowledge, information need)
• machine learning: interpretation, generalization
• unsupervised learning & data mining: discover hidden regularities, generate semantically meaningful representations, predictive modeling, statistics
• supervised learning: generalize from given examples, classification & recognition, emulate human experts

1. Text Categorization

Document Annotations
• Categories as metadata: example, Reuters news stories
MCAT = MARKETS
M13 = MONEY MARKETS
M132 = FOREX MARKETS

Text categorization & taxonomies

Tasks:
• Assign documents to one or more pre-defined categories
• Route messages to an appropriate expert, employee, or department
• Automatically organize content into folders

Types of texts:
• text documents
• web pages, web sites
• messages, emails, SMS, chat transcripts
• passages & paragraphs, sentences

Types of categories:
• topics, functions, genre, author, style, dichotomy (e.g. spam/non-spam), industry vertical, sentiment, language

Application areas: Business Taxonomies, Document Classification, Digital Libraries, Medical Terminology, Patent Classification, Email folders, Web Directories, Help Desks, CRM, Semantic Web

Taxonomies: international patent classification (IPC)

IPC: section, class, subclass, group, subgroup ≈ 69,000

Sections:
• A: Human Necessities
• B: Performing Operations; Transporting
• C: Chemistry; Metallurgy
• D: Textiles; Paper
• E: Fixed Construction
• F: Mechanical Engineering
• G: Physics
• H: Electricity

Classes in section D:
• D01: Natural or artificial threads or fibres; Spinning
• D02: Yarns; Warping or Beaming; ...
• D03: Weaving
• D04: Braiding; Lace Making; Knitting; ...
• D05: Sewing; Embroidering; Tufting
• D06: Treatment of Textiles; ...
• D07: Ropes; ...
• D21: Paper; ...

Subclasses in class D03:
• D03C: Shedding mechanisms; Pattern cards or chains; Punching of cards; Designing patterns
• D03D: Woven fabrics; Methods of weaving; Looms
• D03J: Auxiliary weaving apparatus; Weavers' tools; Shuttles

Solution (?): Explicit knowledge elicitation
• expert → knowledge acquisition (knowledge engineer) → knowledge base
• Example rule: /* some 'complicated' algorithm */ if contains('yen') or contains('euro') then label=M132 (M132 = FOREX MARKETS)
• Problems: low coverage (recall), moderate accuracy
• Elicitation is often difficult and time-consuming

Solution (!): Example-based text categorization
• expert → training examples (e.g. documents labeled M132 = FOREX MARKETS) → learning machine (training, inductive inference) → knowledge base
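The hand-written rule from the knowledge-elicitation slide can be written out to show why such rules tend to have low coverage and only moderate accuracy. This is a minimal sketch: the function name `rule_based_label` and the three example sentences are invented for illustration and are not Reuters data.

```python
def rule_based_label(text):
    """Hand-written rule from the slide: 'yen' or 'euro' implies M132."""
    tokens = text.lower().split()
    if "yen" in tokens or "euro" in tokens:
        return "M132"  # FOREX MARKETS
    return None        # the rule does not fire

# Hypothetical documents paired with the label a human expert would assign.
docs = [
    ("The yen fell against the dollar in Tokyo trading", "M132"),
    ("Sterling weakened as dealers sold pounds for dollars", "M132"),
    ("The euro zone reported record wheat harvests", None),
]

predictions = [rule_based_label(text) for text, _ in docs]
for (text, expected), predicted in zip(docs, predictions):
    print(f"expected={expected!s:5} predicted={predicted!s:5} {text}")
```

The second document is a foreign-exchange story that the rule misses (a coverage problem), and the third triggers the rule although it is not about currency markets (an accuracy problem). Patching the rule for each such case is the elicitation effort that the example-based approach tries to avoid.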

2. Supervised Classification

Term document matrix & document vectors
• D = document collection, W = lexicon/vocabulary
• Example document: "Texas Instruments said it has developed the first 32-bit computer chip designed specifically for artificial intelligence applications [...]"
• Term document matrix X: one row per document d_1, ..., d_I in D, one column per term w_1, ..., w_J in W (e.g. w_j = intelligence)
• Entries are given by term weighting, e.g. d_i = (..., 0, 1, ..., 2, 0, ...)

Binary Classification
• Each document is encoded as a feature vector
• Predict whether a document belongs to a given category or not
• Use linear classifier (parameter: weight vector)
• Geometric view: separating hyper-planes
• Goal: minimize expected classification error
• Given: training set of labeled examples

Perceptron Learning Algorithm
• Invented in the late 1950s
• Extremely simple, yet powerful (extensions)
• Discarded by Minsky & Papert in the 1960s
• Re-discovered in the 1990s
• Mistake driven algorithm
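The document-vector encoding and the mistake-driven perceptron update described above can be sketched in a few lines. This is an illustration under stated assumptions, not the lecture's implementation: raw term counts stand in for the unspecified term weighting, a bias term `b` is added, and the four toy documents and all function names are invented.

```python
from collections import Counter

def term_vector(text, vocabulary):
    """Encode a document as raw term counts over a fixed vocabulary."""
    counts = Counter(text.lower().split())
    return [counts[w] for w in vocabulary]

def score(w, b, x):
    """Linear classifier output <w, x> + b."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def train_perceptron(X, y, epochs=100):
    """Mistake-driven training: update only on misclassified examples."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in zip(X, y):
            if label * score(w, b, x) <= 0:  # wrong side or on the boundary
                w = [wj + label * xj for wj, xj in zip(w, x)]
                b += label
                mistakes += 1
        if mistakes == 0:  # every training example is separated
            break
    return w, b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else -1

# Toy training set (invented): +1 = FOREX MARKETS, -1 = other.
train = [
    ("yen euro exchange rates", +1),
    ("euro dollar currency trading", +1),
    ("computer chip artificial intelligence", -1),
    ("chip designed for intelligence applications", -1),
]
vocabulary = sorted({tok for text, _ in train for tok in text.split()})
X = [term_vector(text, vocabulary) for text, _ in train]
y = [label for _, label in train]

w, b = train_perceptron(X, y)
print(predict(w, b, term_vector("yen trading", vocabulary)))
```

Words outside the vocabulary are simply ignored by `term_vector`, which is one of several possible design choices; a real system would also apply a term weighting scheme rather than raw counts.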

Separation Margin
• Functional margin of a data point with respect to classifier (signed distance, if weight vector = unit length)

Novikoff's Theorem
• Theorem: (R is the radius of a data enclosing sphere)

Novikoff's Theorem: Proof
• Lower Bound
• Upper Bound
• Squeezing relations

Compression Bound
• Theorem:
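The formulas on these slides did not survive extraction. For orientation, the textbook statement of the margin and of Novikoff's theorem, together with the three proof steps named above, is sketched below. Only R appears in the source; the symbols w^*, \gamma, k and w_k are assumed notation, and the statement is for a perceptron without bias term started at w_0 = 0.

```latex
% Textbook form; notation other than R is an assumption, not taken from the slides.
% Functional margin of (x_i, y_i) with respect to a weight vector w:
\gamma_i(w) = y_i \langle w, x_i \rangle
% Theorem (Novikoff): if there is a w^* with \|w^*\| = 1 and
% y_i \langle w^*, x_i \rangle \ge \gamma > 0 for all i, and \|x_i\| \le R for all i,
% then the number of perceptron mistakes k satisfies
k \le \left( \frac{R}{\gamma} \right)^2
% Proof sketch, with w_k the weight vector after the k-th mistake:
% Lower bound (each update adds at least \gamma to the projection on w^*):
\langle w_k, w^* \rangle \ge k \gamma
% Upper bound (updates happen only when y_i \langle w_{k-1}, x_i \rangle \le 0):
\|w_k\|^2 \le \|w_{k-1}\|^2 + \|x_i\|^2 \le k R^2
% Squeezing relations (Cauchy-Schwarz with \|w^*\| = 1):
k \gamma \le \langle w_k, w^* \rangle \le \|w_k\| \le \sqrt{k}\, R
\quad \Longrightarrow \quad k \le \frac{R^2}{\gamma^2}
```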

Proof of compression bound

Generalization Bound
• Generalization bound:
• The fewer mistakes are made in training, the better the guaranteed accuracy of the classifier.

Margin Maximization (Support Vector Machines)
• Separation margin (and sparseness) crucial for perceptron learning
• Idea: explicitly maximize separation margin
• Reformulate as quadratic program

Support vector machines
• restriction to linear classifiers
• maximum margin principle

[Figure: positive (+) and negative (−) examples separated by a maximum-margin hyperplane]

T. Joachims, Learning to Classify Text Using Support Vector Machines: Methods, Theory, and Algorithms, Kluwer, 2002
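The quadratic program mentioned above is not legible in the extracted slides. One common way to write margin maximization for linearly separable data is the hard-margin formulation below; the symbols w, b, x_i, y_i and n are assumed notation, and the slides may use a different but equivalent form (for example with a soft margin).

```latex
% Hard-margin SVM as a quadratic program (standard textbook form, assumed notation).
\min_{w,\, b} \quad \frac{1}{2} \|w\|^2
\qquad \text{subject to} \qquad
y_i \left( \langle w, x_i \rangle + b \right) \ge 1, \quad i = 1, \dots, n
% With the constraints scaled this way, the geometric margin of the separating
% hyperplane is 1 / \|w\|, so minimizing \|w\|^2 maximizes the margin.
```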

1. Text Categorization (cont'd)

Precision & Recall

Experimental Evaluation
• Text categorization results:
• Machine Learning award 2009: most influential paper from 1999 [Thorsten Joachims, ICML 1999]
• Much follow-up research …

Practical Use Cases

Google
• label Web pages as child safe or not (for safe search)
• classifies billions of pages
• Many other features (other than text) used

Recommind
• Map documents to corporate taxonomy
• MindServer classification
• uses SVMlight package
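Precision and recall, the evaluation measures named above, can be computed directly from predicted and true labels. The sketch below uses the usual definitions (precision = TP / (TP + FP), recall = TP / (TP + FN)); the label vectors are invented and do not reproduce any result from the lecture.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of true positives that are found
    return precision, recall

# Hypothetical labels: 1 = document belongs to the category, 0 = it does not.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)
```

Returning 0.0 when a denominator is zero is a convention chosen here for simplicity; other tools report such cases as undefined.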
