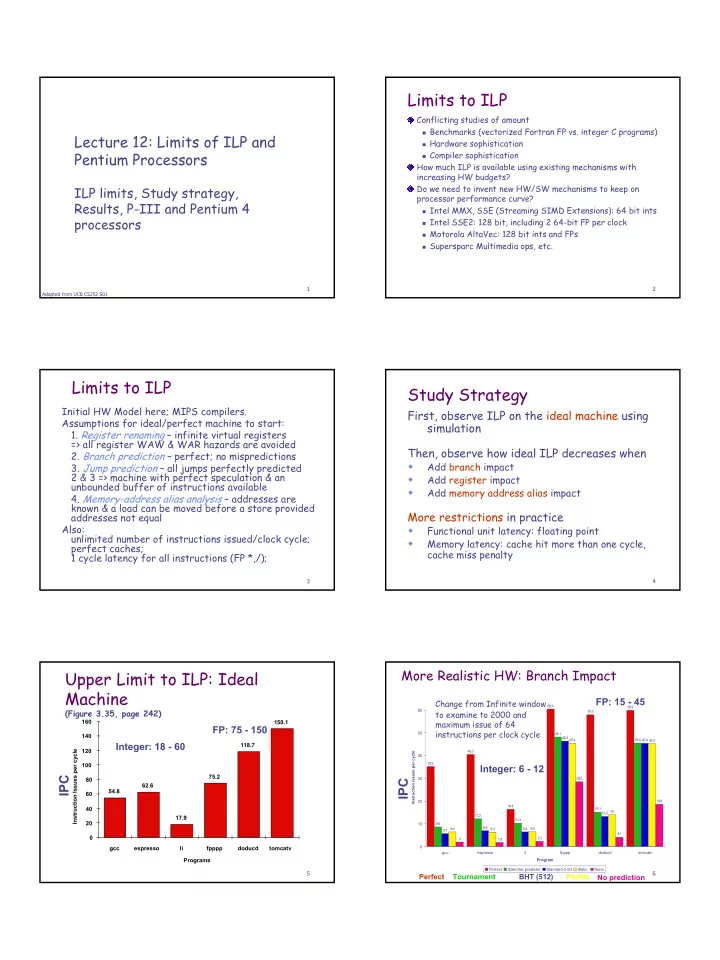

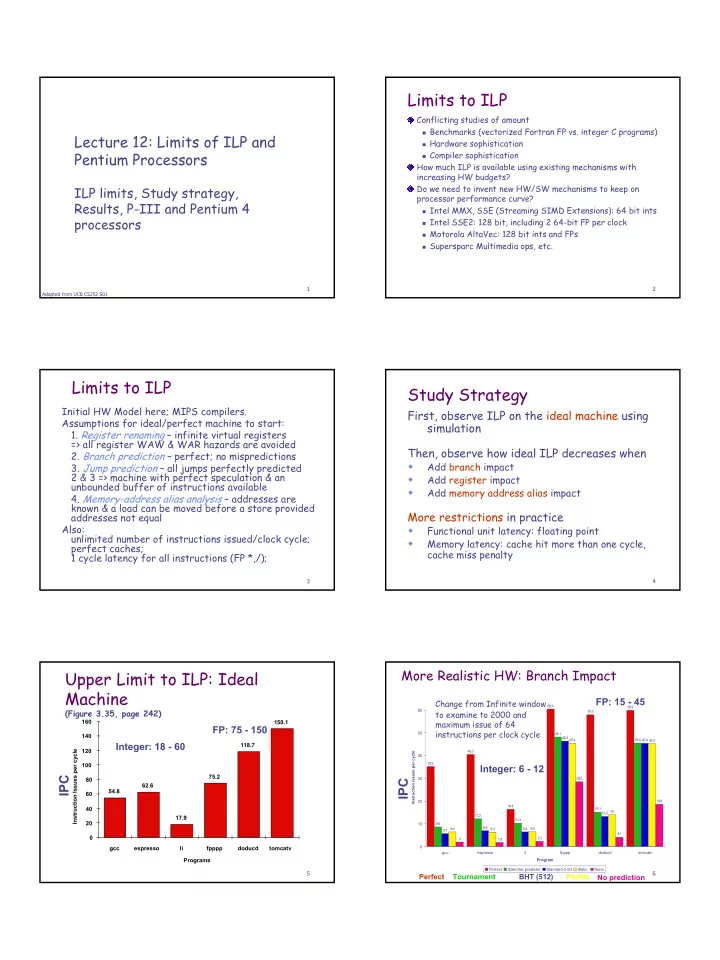

Lecture 12: Limits of ILP and Pentium Processors

ILP limits, study strategy, results, P-III and Pentium 4 processors

Adapted from UCB CS252 S01

Limits to ILP

Conflicting studies of the amount of ILP:
- Benchmarks (vectorized Fortran FP vs. integer C programs)
- Hardware sophistication
- Compiler sophistication

How much ILP is available using existing mechanisms with increasing HW budgets?

Do we need to invent new HW/SW mechanisms to keep on the processor performance curve?
- Intel MMX, SSE (Streaming SIMD Extensions): 64-bit ints
- Intel SSE2: 128 bit, including 2 64-bit FP per clock
- Motorola AltiVec: 128-bit ints and FPs
- SuperSPARC multimedia ops, etc.

Limits to ILP

Initial HW model here; MIPS compilers.

Assumptions for the ideal/perfect machine to start:
1. Register renaming: infinite virtual registers => all register WAW & WAR hazards are avoided
2. Branch prediction: perfect; no mispredictions
3. Jump prediction: all jumps perfectly predicted
   - 2 & 3 => machine with perfect speculation & an unbounded buffer of instructions available
4. Memory-address alias analysis: addresses are known & a load can be moved before a store provided the addresses are not equal

Also: unlimited number of instructions issued per clock cycle; perfect caches; 1-cycle latency for all instructions (including FP *, /).

Study Strategy

First, observe ILP on the ideal machine using simulation.

Then, observe how ideal ILP decreases when we:
- Add branch impact
- Add register impact
- Add memory address alias impact

More restrictions in practice:
- Functional unit latency: floating point
- Memory latency: cache hit more than one cycle, cache miss penalty

Upper Limit to ILP: Ideal Machine (Figure 3.35, page 242)

Instruction issues per cycle (IPC) on the ideal machine: gcc 54.8, espresso 62.6, li 17.9, fpppp 75.2, doduc 118.7, tomcatv 150.1 (integer: 18 - 60; FP: 75 - 150).

More Realistic HW: Branch Impact

Change from an infinite instruction window to a 2000-instruction window and a maximum issue of 64 instructions per clock cycle.

[Figure: IPC for gcc, espresso, li, fpppp, doduc, and tomcatv with perfect, tournament (selective predictor), BHT (512; standard 2-bit), profile (static), and no branch prediction.] Integer: 6 - 12; FP: 15 - 45.
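The ideal-machine assumptions on the Limits to ILP slide reduce ILP to a dataflow limit: with infinite renaming and perfect prediction, only true (RAW) dependences constrain issue. The Python sketch below is a minimal model of that measurement, assuming a hypothetical trace format of (destination, sources) pairs and a 1-cycle latency for every instruction; it is not the simulator used in the study.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical trace format: (destination, sources). Registers and memory
# locations are both plain names, e.g. "r1" or "mem[100]"; using exact
# addresses as names corresponds to perfect memory alias analysis.
Instr = Tuple[Optional[str], Tuple[str, ...]]


def ideal_ilp(trace: Sequence[Instr]) -> float:
    """Instructions per cycle when only true (RAW) dependences limit issue.

    Models the ideal machine: infinite renaming, perfect branch/jump
    prediction (the whole trace is visible at once), unlimited issue width,
    perfect caches, and a 1-cycle latency for every instruction.
    """
    ready_at: Dict[str, int] = {}  # name -> cycle its newest value is available
    total_cycles = 0
    for dest, srcs in trace:
        # Issue as soon as every input has been produced (RAW only).
        issue = max((ready_at.get(s, 0) for s in srcs), default=0)
        finish = issue + 1  # unit latency
        if dest is not None:
            # With infinite renaming, a write never waits for earlier reads
            # (WAR) or writes (WAW) of the same name; later readers simply
            # see this newest value.
            ready_at[dest] = finish
        total_cycles = max(total_cycles, finish)
    return len(trace) / total_cycles if trace else 0.0


trace = [
    ("r1", ("r2", "r3")),  # r1 = r2 + r3
    ("r4", ("r1",)),       # RAW on r1
    ("r1", ("r5",)),       # WAW/WAR on r1 only: removed by renaming
    ("r6", ("r1", "r4")),  # RAW on the new r1 and on r4
]
ilp = ideal_ilp(trace)  # 4 instructions in 3 cycles
```

Because the third instruction's reuse of r1 is only a name conflict, it issues in the first cycle alongside the first instruction; the critical path is the RAW chain r1 -> r4 -> r6.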

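The "BHT (512)" (standard 2-bit) predictor in the Branch Impact chart is a table of 2-bit saturating counters indexed by branch address. The sketch below assumes PC-bit indexing, 4-byte instructions, and an initial "weakly not taken" counter value; these choices are illustrative, not taken from the study.

```python
from typing import Iterable, List, Tuple


class TwoBitBHT:
    """Branch history table of 2-bit saturating counters.

    Counter values 0 and 1 predict not taken; 2 and 3 predict taken.
    Indexing by PC bits and the initial value are illustrative assumptions.
    """

    def __init__(self, entries: int = 512, initial: int = 1) -> None:
        if entries <= 0 or entries & (entries - 1):
            raise ValueError("entries must be a power of two")
        self.mask = entries - 1
        self.counters: List[int] = [initial] * entries

    def _index(self, pc: int) -> int:
        # Drop the 2 low bits (assumed 4-byte instructions), keep log2(entries) bits.
        return (pc >> 2) & self.mask

    def predict(self, pc: int) -> bool:
        return self.counters[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def mispredict_rate(bht: TwoBitBHT, outcomes: Iterable[Tuple[int, bool]]) -> float:
    """Predict, then train, for each (pc, taken) pair in program order."""
    misses = total = 0
    for pc, taken in outcomes:
        if bht.predict(pc) != taken:
            misses += 1
        bht.update(pc, taken)
        total += 1
    return misses / total if total else 0.0


# A loop branch taken 9 times and then not taken, executed 3 times.
loop = ([(0x400, True)] * 9 + [(0x400, False)]) * 3
rate = mispredict_rate(TwoBitBHT(), loop)  # 4 misses out of 30 branches
```

After the first warm-up miss, the 2-bit hysteresis means each loop exit costs only one misprediction, instead of two with a 1-bit predictor.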
More Realistic HW: Renaming Register Impact

Change: 2000-instruction window, 64-instruction issue, 8K 2-level prediction.

[Figure: IPC for gcc, espresso, li, fpppp, doduc, and tomcatv with infinite, 256, 128, 64, 32, and no renaming registers.] Integer: 5 - 15; FP: 11 - 45.

More Realistic HW: Memory Address Alias Impact

Change: 2000-instruction window, 64-instruction issue, 8K 2-level prediction, 256 renaming registers.

[Figure: IPC for gcc, espresso, li, fpppp, doduc, and tomcatv with perfect alias analysis, global/stack perfect (heap conflicts), inspection (assembly), and no alias analysis.] Integer: 4 - 9; FP: 4 - 45 (Fortran, no heap).

How to Exceed the ILP Limits of This Study?
- WAR and WAW hazards through memory: the study eliminated WAW and WAR hazards through register renaming, but not in memory usage
- Unnecessary dependences (compiler not unrolling loops, so the iteration variable creates a dependence)
- Overcoming the data flow limit: value prediction, i.e., predicting values and speculating on the prediction
  - Address value prediction and speculation predicts addresses and speculates by reordering loads and stores; it could provide better alias analysis, since it only needs to predict whether addresses are equal
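Register renaming, whose register budget the Renaming Register Impact chart varies from infinite down to none, can be sketched as mapping every architectural destination to a fresh physical register. This is a simplified model under stated assumptions: the trace format is hypothetical, and physical registers are never freed, so running out raises an error where a real machine would stall until a register is released at commit.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Instr = Tuple[Optional[str], Tuple[str, ...]]  # (destination, sources), hypothetical format


def rename(trace: Sequence[Instr], num_physical: int) -> List[Instr]:
    """Map each architectural destination to a fresh physical register.

    Sources read the current mapping, so RAW dependences are preserved while
    WAR and WAW conflicts on architectural names disappear. Physical registers
    are never freed here (a real machine recycles them at commit), so
    num_physical caps how many writes can be renamed.
    """
    mapping: Dict[str, str] = {}
    renamed: List[Instr] = []
    next_free = 0
    for dest, srcs in trace:
        # Names never written so far keep their architectural name.
        new_srcs = tuple(mapping.get(s, s) for s in srcs)
        new_dest: Optional[str] = None
        if dest is not None:
            if next_free == num_physical:
                raise RuntimeError("no free physical register: a real machine would stall")
            new_dest = f"p{next_free}"
            next_free += 1
            mapping[dest] = new_dest
        renamed.append((new_dest, new_srcs))
    return renamed


trace = [
    ("r1", ("r2", "r3")),
    ("r4", ("r1",)),
    ("r1", ("r5",)),       # WAW with instruction 0, WAR with instruction 1
    ("r6", ("r1", "r4")),
]
renamed = rename(trace, num_physical=256)
```

After renaming, the two writes to r1 target p0 and p2, so the WAW and WAR conflicts vanish; only the RAW edges (p0 -> p1, p2 and p1 -> p3) remain. Note that this applies to registers only: as the How to Exceed slide points out, the same conflicts through memory are not removed.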
Workstation Microprocessors 3/2001
- Max issue: 4 instructions (many CPUs)
- Max rename registers: 128 (Pentium 4)
- Max BHT: 4K x 9 (Alpha 21264B), 16K x 2 (Ultra III)
- Max window size (OOO): 126 instructions (Pentium 4)
- Max pipeline: 22/24 stages (Pentium 4)

Source: Microprocessor Report, www.MPRonline.com

SPEC 2000 Performance 3/2001

[Chart: SPEC 2000 performance of workstation microprocessors, 3/2001.]

Source: Microprocessor Report, www.MPRonline.com

Conclusion
- 1985-2000: 1000X performance
  - Moore's Law transistors/chip => Moore's Law for performance/MPU
- Hennessy: the industry has been following a roadmap of ideas known in 1985 to exploit instruction-level parallelism and (real) Moore's Law to get 1.55X/year
  - Caches, pipelining, superscalar, branch prediction, out-of-order execution, …
- ILP limits: to make performance progress in the future, do we need explicit parallelism from the programmer rather than the implicit parallelism of ILP exploited by the compiler and HW?
  - Otherwise drop to the old rate of 1.3X per year?
  - Less than 1.3X because of the processor-memory performance gap?
- Impact on you: if you care about performance, better think about explicitly parallel algorithms rather than rely on ILP?

Dynamic Scheduling in P6 (Pentium Pro, II, III)
- Q: How to pipeline 1- to 17-byte 80x86 instructions?
- P6 doesn't pipeline 80x86 instructions
- P6 decode unit translates the Intel instructions into 72-bit micro-operations (~ MIPS)
- Sends micro-operations to the reorder buffer & reservation stations
- Many instructions translate to 1 to 4 micro-operations
- Complex 80x86 instructions are executed by a conventional microprogram (8K x 72 bits) that issues long sequences of micro-operations
- 14 clocks in total pipeline (~ 3 state machines)

P6 Pipeline
- 14 clocks in total (~3 state machines)
- 8 stages are used for in-order instruction fetch, decode, and issue
  - Takes 1 clock cycle to determine the length of 80x86 instructions + 2 more to create the micro-operations (uops)
- 3 stages are used for out-of-order execution in one of 5 separate functional units
- 3 stages are used for instruction commit

[Figure: Instr Fetch (16B/clk) → 16B → Instr Decode (3 instr/clk) → 6 uops → Renaming (3 uops/clk) → Reserv. Station → Execution units (5) → Reorder Buffer → Graduation (3 uops/clk)]

Dynamic Scheduling in P6

| Parameter | 80x86 | microops |
|---|---|---|
| Max. instructions issued/clock | 3 | 6 |
| Max. instr. complete exec./clock | | 5 |
| Max. instr. committed/clock | | 3 |
| Window (instrs in reorder buffer) | | 40 |
| Number of reservation stations | | 20 |
| Number of rename registers | | 40 |
| No. integer functional units (FUs) | | 2 |
| No. floating point FUs | | 1 |
| No. SIMD Fl. Pt. FUs | | 1 |
| No. memory FUs | | 1 load + 1 store |

Pentium III Die Photo
- EBL/BBL: Bus logic, front, back
- MOB: Memory Order Buffer
- Packed FPU: MMX Fl. Pt. (SSE)
- IEU: Integer Execution Unit
- FAU: Fl. Pt. Arithmetic Unit
- MIU: Memory Interface Unit
- DCU: Data Cache Unit
- PMH: Page Miss Handler
- DTLB: Data TLB
- BAC: Branch Address Calculator
- RAT: Register Alias Table
- SIMD: Packed Fl. Pt.
- RS: Reservation Station
- BTB: Branch Target Buffer
- IFU: Instruction Fetch Unit (+I$)
- ID: Instruction Decode
- ROB: Reorder Buffer
- MS: Micro-instruction Sequencer

1st Pentium III, Katmai: 9.5M transistors, 12.3 × 10.4 mm in 0.25-micron with 5 layers of aluminum.

P6 Block Diagram

[Figure: P6 block diagram]
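The growth rates on the Conclusion slide compound annually. As a quick check of the arithmetic (a minimal sketch, with helper names chosen for illustration): at 1.55X/year, the 15 years from 1985 to 2000 give roughly 716X, and reaching 1000X takes about 15.8 years, so the two figures on the slide agree to within a year; the old 1.3X/year rate gives only about 51X over the same 15 years.

```python
import math


def total_speedup(rate_per_year: float, years: float) -> float:
    """Compound growth: a constant yearly factor applied `years` times."""
    return rate_per_year ** years


def years_to_reach(speedup: float, rate_per_year: float) -> float:
    """Years of constant yearly improvement needed for a given total speedup."""
    return math.log(speedup) / math.log(rate_per_year)


ilp_era = total_speedup(1.55, 15)             # roughly 716X over 15 years
old_rate = total_speedup(1.3, 15)             # roughly 51X over 15 years
years_for_1000x = years_to_reach(1000, 1.55)  # about 15.8 years
```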

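The P6 decode scheme on the Dynamic Scheduling in P6 slide can be illustrated with a table-driven translator: simple 80x86 instructions become 1 to 4 uops, and complex ones fall back to the microcode sequencer. Everything concrete here is hypothetical: the instruction forms, the uop names (including the split of a store into address and data uops), and the placeholder length of a microcoded sequence are assumptions, not Intel's actual decode tables.

```python
from typing import Dict, List, Sequence

# Hypothetical decode table: instruction forms and uop names are illustrative,
# not Intel's actual encodings or micro-operation set.
SIMPLE_DECODE: Dict[str, List[str]] = {
    "add reg, reg": ["add"],                                        # 1 uop
    "add reg, [mem]": ["load", "add"],                              # load-op: 2 uops
    "add [mem], reg": ["load", "add", "store_addr", "store_data"],  # read-modify-write: 4 uops
}
MAX_DECODER_UOPS = 4  # slide: many instructions translate to 1 to 4 uops
MICROCODE_UOPS = 20   # placeholder length for a microcoded sequence (assumed)


def decode(instr: str) -> List[str]:
    """Translate one 80x86 instruction (by hypothetical form) into uops."""
    uops = SIMPLE_DECODE.get(instr)
    if uops is not None and len(uops) <= MAX_DECODER_UOPS:
        return list(uops)
    # Complex instruction: the microprogram issues a long uop sequence.
    return ["ucode_op"] * MICROCODE_UOPS


def uops_per_instruction(stream: Sequence[str]) -> float:
    """The uops/x86 instruction ratio, for a given instruction stream."""
    return sum(len(decode(i)) for i in stream) / len(stream)


stream = ["add reg, reg", "add reg, [mem]", "add reg, reg", "add [mem], reg"]
ratio = uops_per_instruction(stream)  # (1 + 2 + 1 + 4) / 4 = 2.0
```

The measured P6 ratio on the next slide (1.36 uops per IA-32 instruction on average) implies that real programs are dominated by simple register and load-op instructions, not the read-modify-write mix assumed in this toy stream.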
P6 Performance: uops/x86 instr

200 MHz, 8KI$/8KD$/256KL2$, 66 MHz bus

Benchmarks in each P6 chart: go, m88ksim, gcc, compress, li, ijpeg, perl, vortex, tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fpppp, wave5.

[Chart: uops per IA-32 instruction for each benchmark.]

1.2 to 1.6 uops per IA-32 instruction: 1.36 avg. (1.37 integer)

P6 Performance: Stalls at decode stage

I$ misses or lack of RS/reorder buffer entry

[Chart: stall cycles per instruction for each benchmark, split into instruction stream stalls and resource capacity stalls.]

0.5 to 2.5 stall cycles per instruction: 0.98 avg. (0.36 integer)

P6 Performance: Branch Mispredict Rate

[Chart: BTB miss frequency and mispredict frequency for each benchmark.]

10% to 40% miss/mispredict ratio: 20% avg. (29% integer)

P6 Performance: Speculation rate (% instructions issued that do not commit)

[Chart: percentage of issued instructions that do not commit, for each benchmark.]

1% to 60% of instructions do not commit: 20% avg. (30% integer)

P6 Performance: Cache Misses/1k instr

[Chart: L1 instruction, L1 data, and L2 misses per thousand instructions for each benchmark.]

10 to 160 misses per thousand instructions: 49 avg. (30 integer)

P6 Performance: uops commit/clock

[Chart: fraction of clocks in which 0, 1, 2, or 3 uops commit, for each benchmark.]

- Average: 0 uops 55%, 1 uop 13%, 2 uops 8%, 3 uops 23%
- Integer: 0 uops 40%, 1 uop 21%, 2 uops 12%, 3 uops 27%
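The uops commit/clock distribution can be turned into a mean commit rate by weighting each count by its frequency, and dividing the uops/x86 instruction figure by that rate gives a rough clocks-per-80x86-instruction estimate. This is a back-of-the-envelope sketch: dividing two benchmark averages is only approximate, since the averages need not weight the programs the same way, and the "Average" distribution sums to 99% because of rounding.

```python
from typing import Dict

# Fractions of clocks in which 0, 1, 2 or 3 uops commit (from the slide).
AVERAGE: Dict[int, float] = {0: 0.55, 1: 0.13, 2: 0.08, 3: 0.23}
INTEGER: Dict[int, float] = {0: 0.40, 1: 0.21, 2: 0.12, 3: 0.27}


def mean_uops_per_clock(dist: Dict[int, float]) -> float:
    """Expected uops committed per clock: sum of k * P(k uops commit)."""
    return sum(k * p for k, p in dist.items())


def approx_x86_cpi(uops_per_clock: float, uops_per_instr: float) -> float:
    """Rough clocks per 80x86 instruction (approximation; see lead-in)."""
    return uops_per_instr / uops_per_clock


avg_commit = mean_uops_per_clock(AVERAGE)   # 0.98 uops/clock
int_commit = mean_uops_per_clock(INTEGER)   # 1.26 uops/clock
avg_cpi = approx_x86_cpi(avg_commit, 1.36)  # about 1.39 clocks per x86 instruction
int_cpi = approx_x86_cpi(int_commit, 1.37)  # about 1.09 clocks per x86 instruction
```

Even though up to 3 uops can commit per clock, the mean is below 1 uop/clock on average, which is consistent with the stall, misprediction, and cache-miss slides above.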
