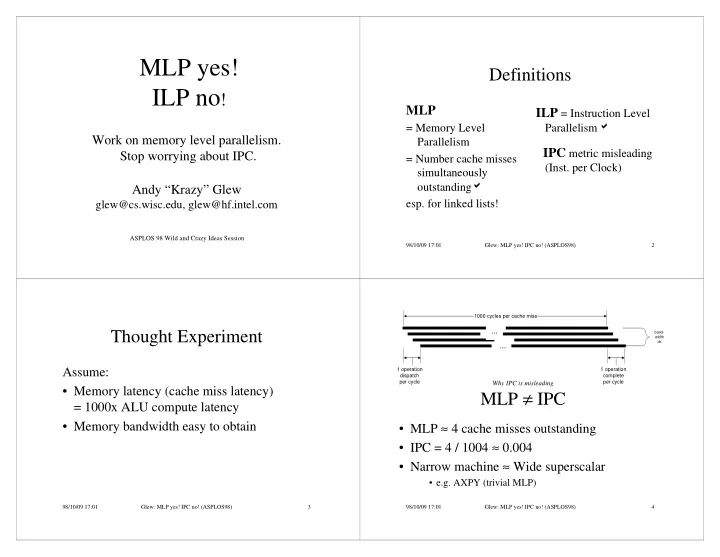

MLP yes! Definitions ILP no ! MLP ILP = Instruction Level = Memory Level Parallelism � Work on memory level parallelism. Parallelism IPC metric misleading Stop worrying about IPC. = Number cache misses (Inst. per Clock) simultaneously outstanding � Andy “Krazy” Glew esp. for linked lists! glew@cs.wisc.edu, glew@hf.intel.com ASPLOS 98 Wild and Crazy Ideas Session 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 2 1000 cycles per cache miss Thought Experiment ... band- width ok ... Assume: 1 operation 1 operation dispatch complete per cycle per cycle Why IPC is misleading • Memory latency (cache miss latency) MLP ≠ IPC = 1000x ALU compute latency • Memory bandwidth easy to obtain • MLP ≈ 4 cache misses outstanding • IPC = 4 / 1004 ≈ 0.004 • Narrow machine ≈ Wide superscalar • e.g. AXPY (trivial MLP) 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 3 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 4

Mindset Microarchitecture Impact Main Points • CPU idle � Branch Prediction waiting for memory • Need changed Processors with low IPC � Data Value Prediction • Low IPC <1 mindset can have high MLP to seek MLP – deep instruction windows • Present OOO CPUs � Prefetching – highly non-blocking caches optimizations I-cache miss • MLP enablers • IPC a bad metric D-cache miss � Multithreading – implicit multithreading for ILP critical path – hardware skiplists non-critical – large microarchitecture data structures e.g. main memory compression 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 5 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 6 MLP processor sketch Pointer Chasing is critical Different Tasks Potential Threads Same Task Active Calls Threads Loops Convergences Large linked data Solution Retirement Instruction Stream Forgetful structures Window HW Skip Lists Window e.g. 3D graphics “world” Execution Units Register ∝ memory in size File Cache # skips ∝ memory >> cache Inner Loop EUs ⇒ store in main memory Brute force doesn’t help Skip w. compression Pointer Main Memory Compression create – increasing window Hints, e.g. Skip Pts Compressed Data Data Cache – increasing frequency Skip Pointer prefetch 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 7 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 8

Backup Slides Conclusion Inevitable forward march of ILP •Slides not included in the short (8 minute) will continue presentation, – the MT generations •answer likely questions • explicit •useful if slides photocopied • implicit – the MLP generation No shortage of ideas to make uniprocessors faster. 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 9 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 10 AXPY Processors with low IPC can have high MLP Examples a*x i +y i • Simplifying assumptions EUs Misses Uarch Time N Sketch – AXPY: 1 64 InO N*1000 • simplest case unoptimized, other cases the usual: SW pipelining, accumulators, etc. 16 64 OOO N/64*1000 – Linked Data Structures 1 64 OOO N/64*1000 • randomized list / tree layout + 64*4 • independent visit functions 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 11 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 12

Tree Traversal Tree Algorithms V(p) { p → l && V(p → l); p → r && V(p → r); VV(p); } EUs Misses Uarch Time N Sketch • Combinatoric explosion N-ary trees • Traversal order skiplists ≈ threading 1 64 InO N*(1000+V) – works if similar traversals repeated 16 64 OOO N*1000 • Searches 1 64 OOO N*1000 – key equal searches → hash tables + 64*4 – proximity and range searches 1 64 OOO + N/m*1000 → traversals skiplists + 64*4 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 13 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 14 Caveats - Do I believe this s**t? Caveats - Do I believe this s**t? Cache Hierarchies • Is there a memory • Aren’t circuits getting • Multilevel cache hierarchies wall? YES slower? NO.Wires are – latency = sqrt(size) getting slower. Gates • Do I care about branch are still getting faster. – reportedly of diminishing effectiveness prediction? YES, but in Tricks make ALUs the MLP world it is a – large working set applications? faster still. secondary effect. (not SPEC95) • Isn’t MLP a form of • Do I care about IPC? ILP? YES. IPC is not a In inner loops. Not metric of ILP. when latency cache missing. 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 15 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 16

Unused Slides Alternate MLP architecture • SW skip lists • The following slides are not used in the – library data structures(STL) current MLP presentation, and contain new – or, compiler… information. E.g. they are skipped just • Prefetch instructions because of time. – Eager prefetch of linked data structures ⇒ less speedup than hardware MLP if tree nodes big – Traversal order threading + skip lists ⇒ same speedup as hardware MLP 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 17 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 18 Data structures + MLP least Large Instruction Windows • Arrays: trivial; easy MLP Forget / Recompute Brute Force • Linear linked lists: skip lists work 2+ windows sophisticated – large windows spill to • N-ary Trees • retirement (=OOO) RAM – combinatoric explosion • non-blocking – cache frequently used parts – traversals easier to parallelize than searches Oldest instruction blocked ⇒ advance window Expandable, Split, esp. proximity searches marking result unknown. Instruction Windows • Hash tables Mispredict – Sohi / Multiscalar – already minimally cache missing ⇒ set non-blocking window = retirement window. most – conflicts: hash probing > MLP chaining 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 19 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 20

Processors with low IPC can have high MLP Datascalar Examples • Limited MLP help Here: Backup: • AXPY • Tree Traversal • E.g. 4-way datascalar (planar) • Linear ** 3D graphics ⇒ M size /4, M latency /2 - faster Linked large linked structures ⇒ Interconnect delay = M latency /2 List ≈ memory Linked list, randomized: >> cache ¼ M latency /2 + ¾ M latency /2 = M latency /2 randomized • N-way: 1 1 1 1 N − + L → M interconne ct latency 2 N N N ≥ 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 21 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 22 Processors with low IPC can have high MLP Processors with low IPC can have high MLP AXPY Linear Linked List A * X i + Y i for(p=hd;p=p → nxt;) visit(p) EUs Misses Uarch Time N Sketch Wide Superscalar CPU Narrow CPU 4 misses deep 6 misses deep 1 * InO N*(1000+V) 16 64 OOO N*1000 1 64 OOO N*1000 + 64*4 Many cache misses ⇒ Narrow - Wide = small startup cost 1 64 OOO + N/m*1000 Why not spend HW on cache misses, not superscalar EUs? skiplists m + 64*4 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 23 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 24

MLP enablers Skip Lists MLP enablers Large µ arch Data Structures Problem Solution HW skip lists Store in main • Convert list to tree ≥ 1 pointer per node memory ⇒ eager prefetch now helps ⇒ MLP=m ∝ memory size compress >> any “cache” • Can be done by reserve Large Instruction Spill to reserved RAM – software (library, compiler?) Window Cache physical – hardware registers • store skip pointers in (compressed) main memory Forget / recompute 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 25 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 26 MLP processor super non-blocking • Narrow EUs • HW skiplists More Backups – wide inner loops? – stored in main memory • Deep instruction with compression window • Smart Sequential Algorithms – cached / spilled to Non-obsolete slides RAM – Belady lookahead in added to end – forget / recompute instruction window because of paper’s updateable • Deeply non-blocking • Dynamic MT qualities cache 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 27

Caveats - Do I believe this s**t? Caveats - Do I believe this s**t? Speed, Voltage, Power Workloads • Brainiacs vs. Speed • Speed is fungible with • Unabashedly single-user Demons superscalarness – servers can use MT – déjà vu all over again – 8GHz 1-way • Q: next killer app.? = 1 GHz 8-way • Power – Is there one? – if circuit speeds trade – Speed ∝ Voltage off – Does it fit in cache? Glew: no. Power ∝ Voltage 2 – sequential always – Superscalar parallelism – Probably something like 3D graphics easier than parallel to save power virtual worlds. may be a good thing ≠ ? performance 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 29 98/10/09 17:01 Glew: MLP yes! IPC no! (ASPLOS98) 30

Recommend

More recommend