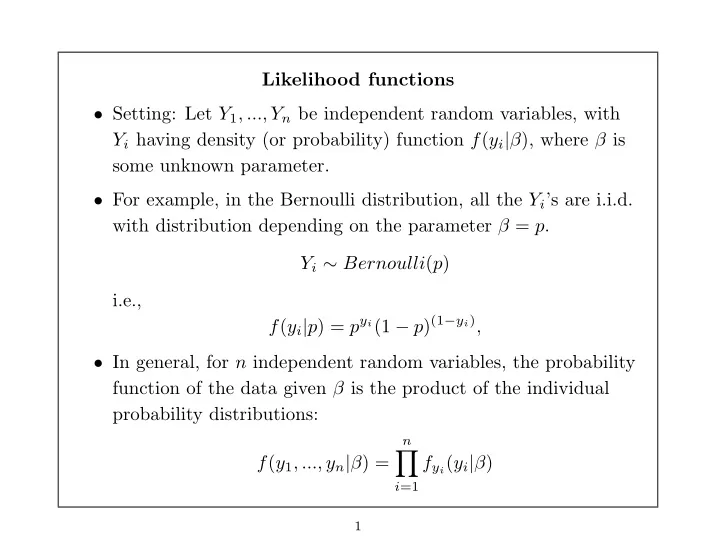

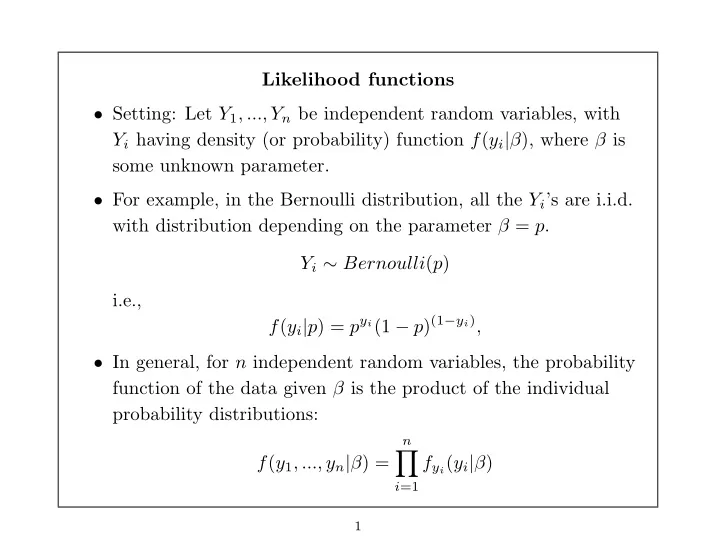

Likelihood functions

• Setting: Let $Y_1, \ldots, Y_n$ be independent random variables, with $Y_i$ having density (or probability) function $f(y_i \mid \beta)$, where $\beta$ is some unknown parameter.

• For example, in the Bernoulli distribution, all the $Y_i$'s are i.i.d. with distribution depending on the parameter $\beta = p$:
$$Y_i \sim \text{Bernoulli}(p), \quad \text{i.e.,} \quad f(y_i \mid p) = p^{y_i}(1-p)^{1-y_i}.$$

• In general, for $n$ independent random variables, the probability function of the data given $\beta$ is the product of the individual probability distributions:
$$f(y_1, \ldots, y_n \mid \beta) = \prod_{i=1}^{n} f_{Y_i}(y_i \mid \beta).$$
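To make the product rule concrete, here is a minimal Python sketch (standard library only) that evaluates the joint probability of a small Bernoulli sample. The sample and the helper names `bernoulli_pmf` and `joint_pmf` are illustrative assumptions, not part of the notes.

```python
import math


def bernoulli_pmf(y: int, p: float) -> float:
    """f(y | p) = p^y (1 - p)^(1 - y) for y in {0, 1}."""
    return p**y * (1 - p) ** (1 - y)


def joint_pmf(ys: list[int], p: float) -> float:
    """Joint probability of independent observations: the product of the individual pmfs."""
    return math.prod(bernoulli_pmf(y, p) for y in ys)


ys = [1, 0, 1, 1, 0]  # hypothetical sample: 3 successes, 2 failures
print(joint_pmf(ys, 0.6))  # equals 0.6**3 * 0.4**2, up to rounding
```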

• The likelihood function of $\beta$ given the data is the same function as the probability function of the data given $\beta$, now viewed as a function of $\beta$ with the data held fixed:
$$L(\beta) = L(\beta \mid y_1, \ldots, y_n) = \prod_{i=1}^{n} f_{Y_i}(y_i \mid \beta).$$

• Once you take the random sample of size $n$, the $Y_i$'s are known, but $\beta$ is not; in fact, the only unknown in the likelihood is the parameter $\beta$.

• Example: The likelihood function of $p$ for a sample of $n$ Bernoulli r.v.'s is:
$$L(p) = \prod_{i=1}^{n} p^{y_i}(1-p)^{1-y_i} = p^{\sum_{i=1}^{n} y_i}(1-p)^{n-\sum_{i=1}^{n} y_i}.$$
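The key shift is that the data are now fixed and $p$ varies. The sketch below, which assumes a hypothetical observed sample and illustrative function names, evaluates the Bernoulli likelihood at a few values of $p$, once as the product of individual terms and once via the closed form $p^{\sum y_i}(1-p)^{n-\sum y_i}$; the two should agree.

```python
import math

ys = [1, 0, 1, 1, 0, 1, 0, 1]  # hypothetical observed sample (now fixed)


def likelihood_product(p: float) -> float:
    """L(p) as the product of the individual Bernoulli pmfs."""
    return math.prod(p**y * (1 - p) ** (1 - y) for y in ys)


def likelihood_closed_form(p: float) -> float:
    """L(p) = p^s (1 - p)^(n - s), where s is the number of successes."""
    n, s = len(ys), sum(ys)
    return p**s * (1 - p) ** (n - s)


# Same data, different candidate values of the parameter
for p in (0.2, 0.5, 0.8):
    print(p, likelihood_product(p), likelihood_closed_form(p))
```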

• Maximum Likelihood Estimator (MLE) of $\beta$: the value $\hat\beta$ that maximizes the likelihood $L(\beta)$, or equivalently the log-likelihood $\log L(\beta)$, as a function of $\beta$, given the observed $Y_i$'s.

• The value $\hat\beta$ that maximizes $L(\beta)$ also maximizes $\log L(\beta)$, since the logarithm is strictly increasing, so $\log L(\beta)$ preserves the ordering of $L(\beta)$.

• It is usually easier to maximize $\log L(\beta)$ (why?), so we focus on the log-likelihood.

• Most of the estimates we will discuss in this class will be MLE's.
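One practical answer to the "(why?)" above, besides the fact that the log turns a product into a sum that is easier to differentiate, is numerical: a product of thousands of probabilities underflows floating-point arithmetic, while the sum of their logs does not. The Python sketch below illustrates this with simulated Bernoulli(0.3) data (the seed, sample size, and grid are arbitrary assumptions) and then locates the maximum with a crude grid search on the log scale.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the run is reproducible
ys = [1 if rng.random() < 0.3 else 0 for _ in range(2000)]  # simulated Bernoulli(0.3) data


def likelihood(p: float) -> float:
    # Product of 2000 probabilities: far below the smallest positive double
    return math.prod(p**y * (1 - p) ** (1 - y) for y in ys)


def log_likelihood(p: float) -> float:
    # Sum of logs: a moderate negative number, no underflow
    return sum(y * math.log(p) + (1 - y) * math.log(1 - p) for y in ys)


print(likelihood(0.3), likelihood(0.5))  # both underflow to 0.0, so they cannot be compared
print(log_likelihood(0.3), log_likelihood(0.5))  # finite and comparable

# Crude grid search over p on the log scale
grid = [k / 100 for k in range(1, 100)]
p_hat = max(grid, key=log_likelihood)
print(p_hat, sum(ys) / len(ys))  # grid maximizer sits next to the sample proportion
```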

• For most distributions, the maximum is found by solving
$$\frac{\partial \log L(\beta)}{\partial \beta} = 0.$$

• Technically, we need to verify that we are at a maximum (rather than a minimum) by checking that the second derivative is negative at $\hat\beta$, i.e.,
$$\left.\frac{\partial^2 \log L(\beta)}{\partial \beta^2}\right|_{\beta = \hat\beta} < 0.$$

• The negative of the second derivative, $-\partial^2 \log L(\beta)/\partial \beta^2$, is called the "information". This quantity plays an important part in likelihood theory.
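When the equation $\partial \log L(\beta)/\partial\beta = 0$ has no closed-form solution, it is usually solved numerically. As one possible approach (Newton-Raphson, which these notes do not prescribe), the sketch below iterates $\beta \leftarrow \beta - \ell'(\beta)/\ell''(\beta)$, where $\ell = \log L$, then checks that the second derivative is negative and reports the information at the solution. The function name `newton_mle` and the data (7 successes in 20 Bernoulli trials) are hypothetical, and the starting value is assumed to be close enough to the root for the iteration to converge.

```python
def newton_mle(score, second_deriv, beta0, tol=1e-10, max_iter=50):
    """Solve score(beta) = 0 by Newton-Raphson starting from beta0.

    Returns (beta_hat, information), where information = -second_deriv(beta_hat).
    Assumes beta0 is close enough to the root for the iteration to converge.
    """
    beta = beta0
    for _ in range(max_iter):
        step = score(beta) / second_deriv(beta)
        beta -= step
        if abs(step) < tol:
            break
    else:
        raise RuntimeError("Newton-Raphson did not converge")
    # Second-order check: a maximum needs a negative second derivative
    if second_deriv(beta) >= 0:
        raise ValueError("stationary point is not a maximum")
    return beta, -second_deriv(beta)


n, y = 20, 7  # hypothetical Bernoulli data: 7 successes in 20 trials
p_hat, info = newton_mle(
    score=lambda p: y / p - (n - y) / (1 - p),
    second_deriv=lambda p: -y / p**2 - (n - y) / (1 - p) ** 2,
    beta0=0.5,
)
print(p_hat, info)  # p_hat should match the closed form y/n = 0.35
```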

Example: Bernoulli data

• The likelihood is
$$L(p) = \prod_{i=1}^{n} p^{y_i}(1-p)^{1-y_i} = p^{y}(1-p)^{n-y},$$
where $y$ is the observed value of $Y = \sum_{i=1}^{n} Y_i$, the number of successes.

• The log-likelihood is
$$\log L(p) = y\log p + (n-y)\log(1-p).$$

• The first derivative is
$$\frac{\partial \log L(p)}{\partial p} = \frac{y}{p} - \frac{n-y}{1-p} = \frac{y-np}{p(1-p)}.$$
Setting this to 0 and solving for $p$, you get
$$\hat p = \frac{y}{n}.$$
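A quick numerical check of this derivation, assuming hypothetical data with $y = 7$ successes in $n = 20$ trials: the two forms of the score agree, the score is 0 at $\hat p = y/n$, and it changes from positive to negative there.

```python
n, y = 20, 7  # hypothetical data: 7 successes in 20 trials


def score(p: float) -> float:
    """First derivative of log L(p) = y log p + (n - y) log(1 - p)."""
    return y / p - (n - y) / (1 - p)


def score_simplified(p: float) -> float:
    """The same derivative after putting both terms over p(1 - p)."""
    return (y - n * p) / (p * (1 - p))


# The two algebraic forms agree at several values of p
for p in (0.1, 0.35, 0.6, 0.9):
    assert abs(score(p) - score_simplified(p)) < 1e-9

p_hat = y / n
print(p_hat, score(p_hat))  # score is 0 at p_hat, up to rounding
print(score(0.2) > 0, score(0.5) < 0)  # positive below p_hat, negative above
```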

• The second derivative of the log-likelihood is
$$\frac{\partial^2 \log L(p)}{\partial p^2} = -\frac{y}{p^2} - \frac{n-y}{(1-p)^2}.$$

• Evaluating at $p = \hat p$:
$$\left.\frac{\partial^2 \log L(p)}{\partial p^2}\right|_{p=\hat p} = -\frac{y}{(y/n)^2} - \frac{n-y}{(1-y/n)^2} = -\frac{n^2}{y} - \frac{n^2}{n-y} < 0.$$

• When $0 < y < n$, the second derivative at $\hat p$ is negative, so $\hat p$ is the maximum.

• When $y = 0$ or $y = n$, the estimate $\hat p = 0$ or $\hat p = 1$ is said to be on the "boundary".
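The simplification of the second derivative at $\hat p$ (equivalently $-n^3/[y(n-y)]$) can be checked numerically. In this sketch the $(n, y)$ pairs are hypothetical; the last lines show that at the boundary $y = 0$ the expression is undefined (a $0/0$ term), which is why the interior condition $0 < y < n$ matters.

```python
def second_derivative(p: float, y: int, n: int) -> float:
    """d^2/dp^2 of log L(p) = y log p + (n - y) log(1 - p)."""
    return -y / p**2 - (n - y) / (1 - p) ** 2


# Interior cases (0 < y < n): hypothetical (n, y) pairs
for n, y in [(20, 7), (50, 1), (10, 9)]:
    p_hat = y / n
    d2 = second_derivative(p_hat, y, n)
    closed_form = -(n**2) / y - n**2 / (n - y)
    print(n, y, d2, closed_form)  # equal up to rounding, and negative

# Boundary case y = 0: p_hat = 0 and the first term becomes 0/0
try:
    second_derivative(0.0, 0, 10)
except ZeroDivisionError:
    print("second derivative undefined at the boundary p_hat = 0")
```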

Properties of MLE's

Any two likelihoods, $L_1(\beta)$ and $L_2(\beta)$, that are proportional, i.e., $L_2(\beta) = \alpha L_1(\beta)$ (where $\alpha > 0$ is a constant that does not depend on $\beta$), yield the same maximum likelihood estimator.

• Example: If we had started with the Binomial distribution of $Y = \sum_{i=1}^{n} Y_i$ rather than $n$ independent Bernoulli r.v.'s, then the likelihood would be:
$$f(y) = \binom{n}{y} p^{y}(1-p)^{n-y}.$$

• For this distribution, the log-likelihood is
$$\log L(p) = \log\binom{n}{y} + y\log p + (n-y)\log(1-p).$$

• The first derivative of the binomial log-likelihood is
$$\begin{aligned}
\frac{\partial \log L(p)}{\partial p} &= \frac{\partial}{\partial p}\log\binom{n}{y} + \frac{\partial\,[y\log p + (n-y)\log(1-p)]}{\partial p} \\
&= 0 + \frac{\partial\,[y\log p + (n-y)\log(1-p)]}{\partial p} \\
&= \frac{\partial\,[y\log p + (n-y)\log(1-p)]}{\partial p}.
\end{aligned}$$
This is exactly the same as the first derivative of the log-likelihood for $n$ independent Bernoulli's.

• Therefore, we get the same MLE for independent Bernoulli data and Binomial data (both are based on $Y = \sum_{i=1}^{n} Y_i$).

• The likelihood $L_2(p)$ of the Binomial data is proportional to the likelihood $L_1(p)$ based on the original Bernoulli data, since $L_2(p) = \alpha L_1(p)$, where $\alpha = \binom{n}{y}$.
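Numerically, proportional likelihoods show up as log-likelihoods that differ by the constant $\log\alpha$. This sketch, with hypothetical data ($y = 7$, $n = 20$) and an arbitrary grid of $p$ values, checks that $\log L_2(p) - \log L_1(p)$ equals $\log\binom{n}{y}$ at every grid point and that both curves peak at the same $p$.

```python
import math

n, y = 20, 7  # hypothetical data: 7 successes in 20 trials


def loglik_bernoulli(p: float) -> float:
    """log L1(p): log-likelihood of the n individual Bernoulli observations."""
    return y * math.log(p) + (n - y) * math.log(1 - p)


def loglik_binomial(p: float) -> float:
    """log L2(p) = log C(n, y) + log L1(p): log-likelihood of the Binomial count."""
    return math.log(math.comb(n, y)) + loglik_bernoulli(p)


grid = [k / 100 for k in range(1, 100)]
log_alpha = math.log(math.comb(n, y))

# Largest deviation of log L2 - log L1 from the constant log C(n, y) over the grid
max_dev = max(abs(loglik_binomial(p) - loglik_bernoulli(p) - log_alpha) for p in grid)
print(max_dev)  # ~0: the two log-likelihoods differ only by a constant

# A constant shift does not move the maximum
print(max(grid, key=loglik_bernoulli), max(grid, key=loglik_binomial))
```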

Asymptotic Properties of MLE's

• The exact distribution of the MLE can be very complicated, so we often have to rely on large sample methods instead.

• Using a Taylor series expansion and the Delta Method, the following properties can be shown as $n \to \infty$:

(1) $\hat\beta$ is asymptotically unbiased∗: $E(\hat\beta) \to \beta$

(2) $\hat\beta$ is consistent: $\Pr\{|\hat\beta - \beta| > \epsilon\} \to 0$ for every $\epsilon > 0$

(3) $\hat\beta$ is asymptotically efficient (it achieves the minimum variance among all asymptotically unbiased estimators)

∗ Note that $\hat\beta$ may be biased in small samples.

• In addition, using the Central Limit Theorem, it can be shown that MLE's are asymptotically normally distributed, i.e.,
$$\hat\beta \overset{\cdot}{\sim} N[\beta, \operatorname{Var}(\hat\beta)],$$
where $\operatorname{Var}(\hat\beta)$ is the inverse of the expected value of the information:
$$\operatorname{Var}(\hat\beta) = \left\{-E\left[\frac{\partial^2 \log L(\beta)}{\partial \beta^2}\right]\right\}^{-1}.$$
(Note, however, that $\operatorname{Var}(\hat\beta)$ is itself a function of $\beta$. We will come back to examine this issue later.)
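These large-sample claims can be illustrated by simulation. The sketch below assumes Bernoulli data with hypothetical settings ($p = 0.3$, $n = 100$, 5000 replicates, arbitrary seed) and compares the simulated MLEs $\hat p$ with the normal approximation, using the fact (derived in the Bernoulli example that follows in these notes) that here the inverse expected information is $p(1-p)/n$. A simulation like this only illustrates the result; it does not prove it.

```python
import random
import statistics

p, n, reps = 0.3, 100, 5000  # hypothetical true p, sample size, number of simulated samples
rng = random.Random(1)

# Each replicate: draw n Bernoulli(p) values and record the MLE p_hat = (number of successes)/n
p_hats = [sum(rng.random() < p for _ in range(n)) / n for _ in range(reps)]

mean_hat = statistics.fmean(p_hats)
var_hat = statistics.variance(p_hats)
var_theory = p * (1 - p) / n  # inverse of the expected information for Bernoulli data
sd_theory = var_theory**0.5

# Share of p_hats within 1.96 theoretical SDs of p
within = sum(abs(ph - p) <= 1.96 * sd_theory for ph in p_hats) / reps

print(mean_hat, p)          # close: asymptotically unbiased
print(var_hat, var_theory)  # close: variance ~ inverse expected information
print(within)               # roughly 0.95; not exact, since p_hat is discrete and n is finite
```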

Example: Bernoulli data (continued)

• From the above MLE theory, we know that, for large $n$,
$$\hat p \overset{\cdot}{\sim} N[p, \operatorname{Var}(\hat p)],$$
where
$$\operatorname{Var}(\hat p) = \left\{-E\left[\frac{\partial^2 \log L(p)}{\partial p^2}\right]\right\}^{-1}.$$

• Recall, the second derivative of the log-likelihood is
$$\frac{\partial^2 \log L(p)}{\partial p^2} = -\frac{y}{p^2} - \frac{n-y}{(1-p)^2},$$
so that the "information" equals
$$-\frac{\partial^2 \log L(p)}{\partial p^2} = \frac{y}{p^2} + \frac{n-y}{(1-p)^2}.$$

• The expected value of the information is
$$E\left[-\frac{\partial^2 \log L(p)}{\partial p^2}\right] = \frac{E(Y)}{p^2} + \frac{E(n-Y)}{(1-p)^2} = \frac{np}{p^2} + \frac{n(1-p)}{(1-p)^2} = \frac{n}{p} + \frac{n}{1-p} = \frac{n}{p(1-p)}.$$

• To get the asymptotic variance, we now take the inverse:
$$\operatorname{Var}(\hat p) = \left[\frac{n}{p(1-p)}\right]^{-1} = \frac{p(1-p)}{n}.$$

• This confirms what we already derived using the CLT:
$$\hat p \overset{\cdot}{\sim} N\left[p, \frac{p(1-p)}{n}\right].$$
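The expectation step can also be checked exactly, without simulation, by averaging the information over all possible values of $Y \sim \text{Binomial}(n, p)$. The values of $n$ and $p$ below are hypothetical, and `information` and `expected_information` are illustrative names.

```python
import math


def information(p: float, y: int, n: int) -> float:
    """Negative second derivative of the Bernoulli log-likelihood, for y successes in n trials."""
    return y / p**2 + (n - y) / (1 - p) ** 2


def expected_information(p: float, n: int) -> float:
    """E[information], averaging exactly over Y ~ Binomial(n, p)."""
    return sum(
        math.comb(n, y) * p**y * (1 - p) ** (n - y) * information(p, y, n)
        for y in range(n + 1)
    )


n, p = 20, 0.35  # hypothetical values
info = expected_information(p, n)
print(info, n / (p * (1 - p)))    # both equal n / (p(1 - p))
print(1 / info, p * (1 - p) / n)  # asymptotic variance of p_hat
```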

MLE's of functions

The MLE of $g(\beta)$ is $g(\hat\beta)$, i.e., the MLE of a function is the function of the MLE.

Variance of $g(\hat\beta)$: Two possible methods for calculating the variance are:

(1) Apply the Delta Method to $g(\hat\beta)$. According to the Delta Method, the large-sample variance of the function $g(\hat\beta)$ is
$$\operatorname{Var}[g(\hat\beta)] = [g'(\beta)]^2 \operatorname{Var}(\hat\beta).$$

(2) Rewrite the likelihood in terms of $\theta = g(\beta)$, then take the second derivative of the corresponding log-likelihood with respect to $\theta$.
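As a sketch of Method (1), take the Bernoulli setting from earlier, where $\operatorname{Var}(\hat p) = p(1-p)/n$, and $g = \operatorname{logit}$, so that $g'(p) = 1/[p(1-p)]$ and the Delta Method gives $1/[np(1-p)]$. The helper `delta_method_var` and the values of $n$ and $p$ are hypothetical, and the finite-difference derivative is included only as a cross-check of $g'$.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1 - p))


def delta_method_var(g_prime, beta: float, var_beta: float) -> float:
    """Large-sample variance of g(beta_hat): [g'(beta)]^2 * Var(beta_hat)."""
    return g_prime(beta) ** 2 * var_beta


n, p = 50, 0.3  # hypothetical sample size and true p
var_p_hat = p * (1 - p) / n

# Analytic derivative of logit: d/dp log(p / (1 - p)) = 1 / (p(1 - p))
var_logit = delta_method_var(lambda q: 1 / (q * (1 - q)), p, var_p_hat)

# Cross-check g'(p) with a central finite difference
h = 1e-6
g_prime_numeric = (logit(p + h) - logit(p - h)) / (2 * h)

print(var_logit, 1 / (n * p * (1 - p)))
print(g_prime_numeric, 1 / (p * (1 - p)))
```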

Example: Binomial data

• The MLE of $p$ is $\hat p = Y/n$. What is the MLE of $\operatorname{logit}(p)$?

• Using the above result, the MLE of $\operatorname{logit}(p)$ is $\operatorname{logit}(Y/n)$.

• Calculating $\operatorname{Var}(\operatorname{logit}(\hat p))$:

  – Method (1): already shown

  – Method (2): Let $\theta = \operatorname{logit}(p) = \log[p/(1-p)]$. After some algebra, you can show that
$$p = \frac{e^{\theta}}{1+e^{\theta}}.$$

Substitute $e^{\theta}/(1+e^{\theta})$ for $p$ in the likelihood:
$$f(y) = \binom{n}{y} p^{y}(1-p)^{n-y} = \binom{n}{y}\left(\frac{e^{\theta}}{1+e^{\theta}}\right)^{y}\left(1-\frac{e^{\theta}}{1+e^{\theta}}\right)^{n-y}.$$
Then take second derivatives of the log-likelihood with respect to $\theta$ to find the information, and compute the inverse.
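Carrying out this step: up to the constant $\log\binom{n}{y}$, the log-likelihood in $\theta$ simplifies to $y\theta - n\log(1+e^{\theta})$, whose second derivative $-ne^{\theta}/(1+e^{\theta})^2 = -np(1-p)$ does not involve $y$. So the information is $np(1-p)$, and its inverse, $1/[np(1-p)]$, matches the Delta Method answer. The Python sketch below checks this numerically with a central-difference second derivative (a numerical shortcut swapped in for the algebra, not part of the notes); $n$, $y$ and $p$ are hypothetical.

```python
import math


def loglik_theta(theta: float, y: int, n: int) -> float:
    """Binomial log-likelihood rewritten in terms of theta = logit(p)."""
    p = math.exp(theta) / (1 + math.exp(theta))
    return math.log(math.comb(n, y)) + y * math.log(p) + (n - y) * math.log(1 - p)


def numeric_second_derivative(f, x: float, h: float = 1e-4) -> float:
    """Central-difference approximation to f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2


n, y = 50, 15  # hypothetical data
p = 0.3        # hypothetical value of p at which to evaluate the information
theta = math.log(p / (1 - p))

info_theta = -numeric_second_derivative(lambda t: loglik_theta(t, y, n), theta)
print(info_theta, n * p * (1 - p))            # information in theta: n p (1 - p)
print(1 / info_theta, 1 / (n * p * (1 - p)))  # inverse: same as the Delta Method result
```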

Confidence Intervals and Hypothesis Testing

I. Confidence Intervals

• From MLE theory, we know that for large $n$,
$$\hat p \overset{\cdot}{\sim} N\left[p, \frac{p(1-p)}{n}\right].$$

• A 95% confidence interval for $p$ can thus be constructed as:
$$\hat p \pm 1.96\sqrt{\frac{p(1-p)}{n}}.$$
However, we do not know $p$ in the variance.

• Since $\hat p$ is a consistent estimate of $p$, we can replace $p(1-p)$ in the variance by $\hat p(1-\hat p)$, and still get 95% coverage (in large samples).

• Therefore, a large sample confidence interval for $p$ is:
$$\hat p \pm 1.96\sqrt{\frac{\hat p(1-\hat p)}{n}}.$$
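A minimal sketch of this interval in Python; `wald_ci_p` is an illustrative name and the data in the usage line are hypothetical.

```python
import math


def wald_ci_p(y: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Large-sample CI for p: p_hat -/+ z * sqrt(p_hat (1 - p_hat) / n)."""
    p_hat = y / n
    se_hat = math.sqrt(p_hat * (1 - p_hat) / n)  # p replaced by its consistent estimate
    return p_hat - z * se_hat, p_hat + z * se_hat


print(wald_ci_p(30, 100))  # hypothetical: 30 successes in 100 trials, about (0.210, 0.390)
```

One consequence worth noticing: when $y = 0$ or $y = n$ (the boundary cases from the Bernoulli example), $\hat p(1-\hat p) = 0$, so this interval collapses to a single point.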

Large Sample Confidence Interval for β

• In general, suppose we want a 95% confidence interval for $\beta$. For large $n$, we know that
$$\hat\beta \overset{\cdot}{\sim} N[\beta, \operatorname{Var}(\hat\beta)],$$
where $\operatorname{Var}(\hat\beta)$ is the inverse of the expected value of the information, and is a function of $\beta$.

• If we knew $\beta$ in $\operatorname{Var}(\hat\beta)$, we could form an asymptotic 95% confidence interval for $\beta$ with
$$\hat\beta \pm 1.96\sqrt{\operatorname{Var}(\hat\beta)}.$$
(But... if we knew $\beta$, we wouldn't need a confidence interval in the first place!)

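• Since we do not know $\beta$, we have to estimate $\operatorname{Var}(\hat\beta)$ by replacing $\beta$ with its consistent estimator $\hat\beta$:
$$\widehat{\operatorname{Var}}(\hat\beta) = [\operatorname{Var}(\hat\beta)]_{\beta=\hat\beta}.$$

• Then the confidence interval
$$\hat\beta \pm 1.96\sqrt{\widehat{\operatorname{Var}}(\hat\beta)}$$
will have coverage of 95% in large samples.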
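The recipe above is generic: evaluate the variance formula at $\hat\beta$ and use it in place of the unknown variance. The sketch below assumes the variance is supplied as a function of $\beta$ (`var_fn`, a hypothetical parameter name) and, as an illustration only, estimates the coverage of the resulting interval for simulated Bernoulli data with arbitrary settings ($p = 0.3$, $n = 400$, 2000 replicates).

```python
import math
import random
from typing import Callable


def plug_in_wald_ci(beta_hat: float, var_fn: Callable[[float], float], z: float = 1.96):
    """Return beta_hat -/+ z * sqrt(Var), with the variance formula evaluated at beta = beta_hat."""
    half_width = z * math.sqrt(var_fn(beta_hat))
    return beta_hat - half_width, beta_hat + half_width


# Illustration: Bernoulli data, where Var(p_hat) = p(1 - p)/n as a function of p
p_true, n, reps = 0.3, 400, 2000  # hypothetical simulation settings
rng = random.Random(2)
covered = 0
for _ in range(reps):
    p_hat = sum(rng.random() < p_true for _ in range(n)) / n
    lo, hi = plug_in_wald_ci(p_hat, lambda p: p * (1 - p) / n)
    covered += lo <= p_true <= hi
coverage = covered / reps
print(coverage)  # should be close to 0.95 at this sample size
```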

Confidence Interval for a Function of β

A large sample 95% confidence interval for $g(\beta)$ is
$$g(\hat\beta) \pm 1.96\sqrt{\widehat{\operatorname{Var}}[g(\hat\beta)]},$$
where
$$\widehat{\operatorname{Var}}[g(\hat\beta)] = \{\operatorname{Var}[g(\hat\beta)]\}_{\beta=\hat\beta}.$$

Some motivation for this result:

• Using the Delta Method, we know that for large samples,
$$g(\hat\beta) \overset{\cdot}{\sim} N\{g(\beta), \operatorname{Var}[g(\hat\beta)]\},$$
where
$$\operatorname{Var}[g(\hat\beta)] = [g'(\beta)]^2 \operatorname{Var}(\hat\beta)$$
is a function of $\beta$.

• Since $\hat\beta$ is a consistent estimate of $\beta$ for large samples, we can substitute $\hat\beta$ for $\beta$ in our estimate of the variance, i.e., in both $g'(\beta)$ and $\operatorname{Var}(\hat\beta)$.
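For the logit example above, substituting $\hat p$ into $[g'(p)]^2\operatorname{Var}(\hat p) = 1/[np(1-p)]$ gives a large-sample interval for $\operatorname{logit}(p)$. A minimal sketch, with an illustrative function name and hypothetical data; it requires $0 < y < n$, since otherwise $\operatorname{logit}(\hat p)$ and the variance are undefined.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1 - p))


def logit_ci(y: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Large-sample CI for logit(p) using the Delta Method variance. Requires 0 < y < n.

    Var[logit(p_hat)] = [g'(p)]^2 * p(1 - p)/n = 1 / (n p (1 - p)); p_hat is
    substituted for p in the whole expression (both g'(p) and Var(p_hat)).
    """
    p_hat = y / n
    var_hat = 1 / (n * p_hat * (1 - p_hat))
    half_width = z * math.sqrt(var_hat)
    g_hat = logit(p_hat)
    return g_hat - half_width, g_hat + half_width


print(logit_ci(30, 100))  # hypothetical data: 30 successes in 100 trials, about (-1.275, -0.420)
```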
