Chapter 8: Estimation In this chapter we will cover: 1. The - PDF document

Chapter 8: Estimation In this chapter we will cover: 1. The likelihood and maximum likelihood estimation ( 8.3, 8.5 Rice) 2. Introduction to decision theory( 15.115.3) The likelihood function We have seen many cases where we have

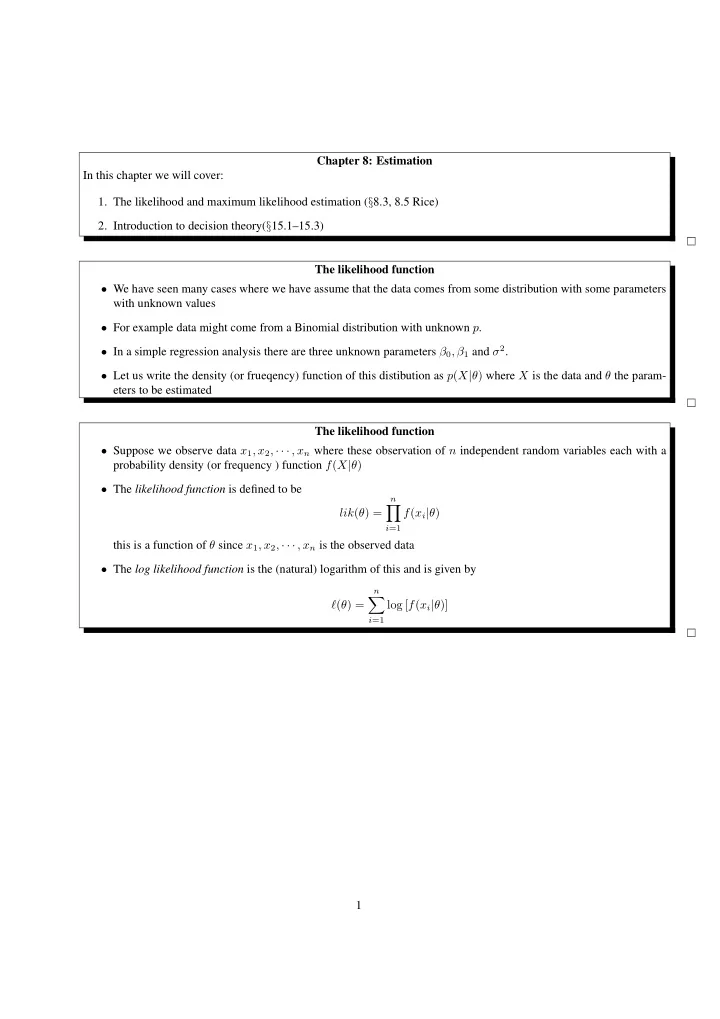

Chapter 8: Estimation

In this chapter we will cover:

1. The likelihood and maximum likelihood estimation (§ 8.3, 8.5 Rice)
2. Introduction to decision theory (§ 15.1–15.3)

The likelihood function

• We have seen many cases where we assume that the data come from some distribution whose parameters have unknown values.
• For example, the data might come from a Binomial distribution with unknown $p$.
• In a simple regression analysis there are three unknown parameters: $\beta_0$, $\beta_1$ and $\sigma^2$.
• Let us write the density (or frequency) function of this distribution as $p(X \mid \theta)$, where $X$ is the data and $\theta$ the parameters to be estimated.
• Suppose we observe data $x_1, x_2, \ldots, x_n$, which are observations of $n$ independent random variables, each with probability density (or frequency) function $f(x \mid \theta)$.
• The likelihood function is defined to be
$$\mathrm{lik}(\theta) = \prod_{i=1}^{n} f(x_i \mid \theta).$$
This is a function of $\theta$, since $x_1, x_2, \ldots, x_n$ is the observed (fixed) data.
• The log likelihood function is the (natural) logarithm of this and is given by
$$\ell(\theta) = \sum_{i=1}^{n} \log f(x_i \mid \theta).$$
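To see why we usually work with the log likelihood, here is a minimal sketch, assuming a Bernoulli($p$) frequency function (the single-trial case of the Binomial mentioned above) and hypothetical 0/1 data. The helper names `likelihood` and `log_likelihood` are illustrative only. For a small sample, $\log \mathrm{lik}(\theta)$ and $\ell(\theta)$ agree; for a large sample the product underflows to zero in floating point while the sum of logs stays finite.

```python
import math

def likelihood(pdf, data, theta):
    """lik(theta): product of f(x_i | theta) over the observed data."""
    result = 1.0
    for x in data:
        result *= pdf(x, theta)
    return result

def log_likelihood(logpdf, data, theta):
    """l(theta): sum of log f(x_i | theta) over the observed data."""
    return sum(logpdf(x, theta) for x in data)

# Assumption for illustration: Bernoulli(p) frequency function f(x | p) = p^x (1 - p)^(1 - x).
def bern_pmf(x, p):
    return p ** x * (1 - p) ** (1 - x)

def bern_logpmf(x, p):
    return x * math.log(p) + (1 - x) * math.log(1 - p)

small = [1, 0, 1, 1, 0]   # hypothetical observed data
big = small * 400         # 2000 hypothetical observations

print(math.log(likelihood(bern_pmf, small, 0.6)), log_likelihood(bern_logpmf, small, 0.6))
print(likelihood(bern_pmf, big, 0.6))          # the product underflows to 0.0
print(log_likelihood(bern_logpmf, big, 0.6))   # the sum of logs stays finite
```

Because the logarithm is increasing, maximising $\ell(\theta)$ gives the same $\theta$ as maximising $\mathrm{lik}(\theta)$, so nothing is lost by switching to the numerically safer sum.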

Example: Poisson distribution

• The numbers of asbestos fibres in a number of grid squares were counted. It is assumed that the counts follow a Poisson distribution.
• Suppose we have observed the asbestos data given on page 247 of Rice, i.e. the counts are: 31, 34, 26, 28, 29, 27, 27, 24, 19, 34, 27, 21, 18, 30, 18, 17, 31, 16, 24, 24, 28, 18, 22.
• We would like to estimate the value of the rate parameter $\lambda$ for this Poisson distribution.
• The likelihood function for the Poisson, with $n = 23$ observations, is given by
$$\mathrm{lik}(\lambda) = \prod_{i=1}^{n} \frac{\lambda^{x_i} e^{-\lambda}}{x_i!}.$$
• The log likelihood is then given by
$$\ell(\lambda) = \log(\lambda) \sum_{i=1}^{n} x_i - n\lambda - \sum_{i=1}^{n} \log x_i!.$$

Example: Log-likelihood function

[Figure: log likelihood plotted against lambda for the asbestos data, lambda from 20 to 30.]

The plot shows the log likelihood function for the asbestos data. It is approximately quadratic.
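The log likelihood above can be evaluated directly for the asbestos counts. This is a minimal sketch: `poisson_loglik` is a hypothetical helper name, $\log x_i!$ is computed as `math.lgamma(x + 1)`, and the maximiser is located by a simple grid search over the plotted range rather than by calculus.

```python
import math

# Asbestos fibre counts from the example (Rice, p. 247).
counts = [31, 34, 26, 28, 29, 27, 27, 24, 19, 34, 27, 21,
          18, 30, 18, 17, 31, 16, 24, 24, 28, 18, 22]

def poisson_loglik(lam, xs):
    """l(lam) = log(lam) * sum(x_i) - n * lam - sum(log x_i!)."""
    n = len(xs)
    # math.lgamma(x + 1) equals log(x!) for non-negative integers x
    const = sum(math.lgamma(x + 1) for x in xs)
    return math.log(lam) * sum(xs) - n * lam - const

# Evaluate on the plotted range (lambda from 20 to 30) in steps of 0.01.
grid = [20 + 0.01 * j for j in range(1001)]
values = [poisson_loglik(lam, counts) for lam in grid]
best = grid[values.index(max(values))]
print(round(best, 2), sum(counts) / len(counts))
```

The term $\sum \log x_i!$ does not involve $\lambda$, so dropping it shifts the curve vertically without moving its maximum.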

The maximum likelihood estimate

• One way of estimating the true parameter value $\theta$ given a set of data is to use the maximum likelihood estimate (MLE).
• This is denoted $\hat\theta$ and is the value of $\theta$ at which the log likelihood function is maximised.
• You can think of it as the parameter value which makes the observed data "most likely".
• The MLE is a random variable, since it depends on the data.

Example A: Poisson distribution

• From Rice page 254, the MLE for a Poisson distribution is $\hat\lambda = \bar X$, the sample mean of the data.

[Figure: log likelihood plotted against lambda for the asbestos data, lambda from 20 to 30.]

Example B: Normal distribution

• Suppose $X_1, X_2, \ldots, X_n$ are independent and come from a $N(\mu, \sigma^2)$ distribution.
• The likelihood function is given by
$$f(x_1, \ldots, x_n \mid \mu, \sigma) = \prod_{i=1}^{n} \frac{1}{\sigma\sqrt{2\pi}} \exp\left[-\frac{1}{2}\left(\frac{x_i - \mu}{\sigma}\right)^2\right].$$
• The MLEs for $\mu$ and $\sigma$ are
$$\hat\mu = \bar X, \qquad \hat\sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (X_i - \bar X)^2}.$$
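The Normal MLEs can be computed and sanity-checked numerically. This is a sketch on a small hypothetical sample; `normal_mle` and `normal_loglik` are illustrative names. Note the divisor $n$ in $\hat\sigma$, unlike the $n-1$ used for the sample variance $s^2$.

```python
import math

def normal_mle(xs):
    """Return (mu_hat, sigma_hat) for an i.i.d. N(mu, sigma^2) sample."""
    n = len(xs)
    mu_hat = sum(xs) / n
    # Divisor n, not n - 1: this is the maximum likelihood estimate.
    sigma_hat = math.sqrt(sum((x - mu_hat) ** 2 for x in xs) / n)
    return mu_hat, sigma_hat

def normal_loglik(mu, sigma, xs):
    """Log of the Normal likelihood: sum of log f(x_i | mu, sigma)."""
    return sum(-math.log(sigma * math.sqrt(2 * math.pi))
               - 0.5 * ((x - mu) / sigma) ** 2 for x in xs)

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical sample
mu_hat, sigma_hat = normal_mle(data)
print(mu_hat, sigma_hat)  # 5.0 2.0

best = normal_loglik(mu_hat, sigma_hat, data)
# Nudging either parameter away from the MLE should lower the log likelihood.
for dmu, dsig in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]:
    print(dmu, dsig, normal_loglik(mu_hat + dmu, sigma_hat + dsig, data) < best)
```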

Confidence intervals

• Just as in Chapter 7, where we sampled from finite populations, we can construct confidence intervals when estimating the parameters of a distribution.
• Here we want to estimate $\theta$, the parameters of a given distribution.
• The interval should capture the uncertainty in the point estimate $\hat\theta$.
• A confidence interval is an interval based on sample values. It has the statistical property that it contains the true value some fixed percentage (say 95%) of the times it is used.
• For the MLE, confidence intervals are typically calculated using limit theorems.

Normal distribution

• For the sample mean $\bar x$, which is the MLE for $\mu$, the $100(1-\alpha)\%$ confidence interval is given by
$$\left(\bar x - \frac{s}{\sqrt n}\, t_{n-1}(\alpha/2),\ \bar x + \frac{s}{\sqrt n}\, t_{n-1}(\alpha/2)\right),$$
where $n$ is the sample size and $s$ is the sample standard deviation, with sample variance
$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar x)^2.$$
• The term $t_{n-1}(\alpha/2)$ denotes the point beyond which the $t$-distribution with $n-1$ degrees of freedom has probability $\alpha/2$.
• For a 95% confidence interval, Table 4 gives the following values:

| Degrees of freedom $n-1$ | $t_{n-1}(\alpha/2)$ |
|---|---|
| 5 | 2.571 |
| 10 | 2.228 |
| 20 | 2.086 |
| $\infty$ | 1.96 |

Normal distribution

• Suppose that the sample mean for a sample of size 25 is $-1.23$ and the sample variance is $25.1$.
• The 95% confidence interval for $\mu$ is given by
$$\left(-1.23 - \sqrt{\frac{25.1}{25}}\, t_{24}(0.025),\ -1.23 + \sqrt{\frac{25.1}{25}}\, t_{24}(0.025)\right).$$
• From Table 4 we need the value for a $t$-distribution with $25 - 1 = 24$ degrees of freedom, i.e. $2.064$.
• Since $\sqrt{25.1/25} \approx 1.002$, the 95% confidence interval is
$$(-1.23 - 1.002 \times 2.064,\ -1.23 + 1.002 \times 2.064) \approx (-3.30,\ 0.84).$$
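The worked example can be reproduced with a few lines of arithmetic. This sketch takes the critical value $t_{n-1}(\alpha/2)$ as an input read from a table (as in the text) rather than computing it; `t_interval` is a hypothetical helper name.

```python
import math

def t_interval(xbar, s2, n, t_crit):
    """100(1 - alpha)% interval: xbar -/+ (s / sqrt(n)) * t_{n-1}(alpha/2).

    s2 is the sample variance s^2; t_crit is t_{n-1}(alpha/2) from a t table.
    """
    half_width = math.sqrt(s2 / n) * t_crit
    return xbar - half_width, xbar + half_width

# Worked example: n = 25, xbar = -1.23, s^2 = 25.1, t_24(0.025) = 2.064.
lo, hi = t_interval(-1.23, 25.1, 25, 2.064)
print(round(lo, 3), round(hi, 3))  # -3.298 0.838
```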

Normal distribution

• Let $\chi^2_{n-1}$ denote the chi-squared distribution with $n-1$ degrees of freedom. This is tabulated in Table 3, page A8 of Rice.
• Let $\chi^2_{n-1}(\alpha)$ be the point beyond which the $\chi^2_{n-1}$ distribution has probability $\alpha$.
• The $100(1-\alpha)\%$ confidence interval for $\sigma^2$ in a Normal sample is
$$\left(\frac{n\hat\sigma^2}{\chi^2_{n-1}(\alpha/2)},\ \frac{n\hat\sigma^2}{\chi^2_{n-1}(1-\alpha/2)}\right).$$
• For a 95% confidence interval with $n = 25$ we use $\alpha = 0.05$, so $\chi^2_{24}(0.025) = 39.36$ and $\chi^2_{24}(0.975) = 12.40$.

Poisson distribution

• The MLE of the parameter of a Poisson($\lambda$) distribution is the sample mean $\bar x$.
• For large samples, approximate $100(1-\alpha)\%$ confidence intervals for $\lambda$ are given by
$$\left(\bar x - z(\alpha/2)\sqrt{\frac{\bar x}{n}},\ \bar x + z(\alpha/2)\sqrt{\frac{\bar x}{n}}\right),$$
where $z(\alpha)$ comes from the standard normal table.
• For a 95% confidence interval, $z(\alpha/2) = 1.96$.

Recommended Questions

From § 8.9 of Rice, please look at questions 3(a, b), 4(a, c), 5(b), 22, 26, 27, 28, 29.
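Both interval formulas for $\sigma^2$ and $\lambda$ can be sketched with table values supplied by hand. As an assumption for illustration, the $\sigma^2$ interval reuses the earlier Normal example ($n = 25$, $s^2 = 25.1$), using $n\hat\sigma^2 = \sum (x_i - \bar x)^2 = (n-1)s^2$; the Poisson interval uses the asbestos counts. The helper names are hypothetical.

```python
import math

def variance_interval(ss, chi2_upper, chi2_lower):
    """(n*sigma_hat^2 / chi2_{n-1}(alpha/2), n*sigma_hat^2 / chi2_{n-1}(1 - alpha/2)).

    ss is n * sigma_hat^2, i.e. the sum of squared deviations (n - 1) * s^2.
    chi2_upper = chi2_{n-1}(alpha/2), the larger table value;
    chi2_lower = chi2_{n-1}(1 - alpha/2), the smaller table value.
    """
    return ss / chi2_upper, ss / chi2_lower

def poisson_interval(xbar, n, z=1.96):
    """Large-sample interval xbar -/+ z(alpha/2) * sqrt(xbar / n)."""
    half_width = z * math.sqrt(xbar / n)
    return xbar - half_width, xbar + half_width

# Assumption: same sample as the earlier Normal example, so n*sigma_hat^2 = 24 * 25.1.
var_lo, var_hi = variance_interval(24 * 25.1, 39.36, 12.40)
print(round(var_lo, 2), round(var_hi, 2))

counts = [31, 34, 26, 28, 29, 27, 27, 24, 19, 34, 27, 21,
          18, 30, 18, 17, 31, 16, 24, 24, 28, 18, 22]
lam_lo, lam_hi = poisson_interval(sum(counts) / len(counts), len(counts))
print(round(lam_lo, 2), round(lam_hi, 2))
```

Dividing by the larger chi-squared value gives the lower endpoint, which is why the upper-tail point $\chi^2_{n-1}(\alpha/2)$ appears in the left-hand bound.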

Bayesian methods and decision theory

• This material is from § 15.1–15.2 of Rice.
• Decision theory views statistics as a mathematical tool for making decisions in the face of uncertainty.
• The decision maker has to choose an action $a$ from the set $\mathcal{A}$ of all possible actions.
• The data on which the decision is based is $X$, which comes from a distribution with parameters $\theta$. These parameters represent the states of nature.
• The decision is made by a statistical decision function $d$, such that $a = d(X)$.
• The cost of using the decision rule $d$ is measured by a loss function $l(\theta, d(X))$, which depends on the actual state of nature $\theta$.

Example A

• Suppose a manufacturer produces a lot of $N$ items; $n$ are randomly sampled and checked to see if they are defective.
• Let $\hat p$ be the sample proportion of defective items.
• The lot can be sold for \$M, but a penalty of \$P must be paid if the proportion of defective items in the lot exceeds $p_0$; alternatively, the lot can be junked at a cost of \$C.
• The action space is $\{\text{sell}, \text{junk}\}$.
• The data is $X = \hat p$ and the state of nature is $\theta = p$, the proportion of defective items in the lot.
• The loss function is given by

| State of nature | Sell | Junk |
|---|---|---|
| $p < p_0$ | −\$M | \$C |
| $p \ge p_0$ | \$P | \$C |

Risk

• The loss $l(\theta, d(X))$ depends on the random variable $X$, so it is itself random.
• The risk is the expected loss, i.e. $R(\theta, d) = E[l(\theta, d(X))]$, where the expectation is over the distribution of $X$.
• We want to find decision rules $d$ which have "small" risk.
• One problem is that the risk $R(\theta, d)$ depends on $\theta$, which is not known to us.
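To make the risk $R(\theta, d) = E[l(\theta, d(X))]$ concrete for Example A, here is a sketch under stated assumptions: the lot contains $D$ defectives (so $p = D/N$), the $n$ items are sampled without replacement (so the number of defectives in the sample is hypergeometric), and the rule is a hypothetical cutoff rule "sell if $\hat p \le c$, otherwise junk". All numerical values of $N$, $n$, $p_0$, $M$, $P$, $C$ and the cutoff are made up for illustration.

```python
from math import comb

def risk_example_a(D, N, n, cutoff, p0, M, P, C):
    """R(p, d) = E[l(p, d(X))] for the hypothetical rule: sell if p_hat <= cutoff, else junk.

    D defectives in a lot of N items (p = D / N); n items sampled without replacement.
    """
    p = D / N
    total = 0.0
    for x in range(n + 1):  # x = number of defectives found in the sample
        # Hypergeometric probability; math.comb returns 0 when x > D or n - x > N - D.
        prob = comb(D, x) * comb(N - D, n - x) / comb(N, n)
        if prob == 0:
            continue
        p_hat = x / n
        if p_hat <= cutoff:               # action: sell
            loss = -M if p < p0 else P
        else:                             # action: junk
            loss = C
        total += prob * loss
    return total

# Hypothetical numbers: N = 100, n = 10, p0 = 0.1, M = 1000, P = 5000, C = 200.
for D in (0, 5, 10, 20, 100):
    r = risk_example_a(D, N=100, n=10, cutoff=0.1, p0=0.1, M=1000, P=5000, C=200)
    print(D / 100, round(r, 2))
```

The printed risks change with $D$, which illustrates the problem noted above: the risk of a fixed rule depends on the unknown state of nature.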

Minimax rule

• It is possible to have two decision rules $d_1$ and $d_2$ such that
$$R(\theta_1, d_1) < R(\theta_1, d_2), \qquad R(\theta_2, d_1) > R(\theta_2, d_2).$$
• Thus $d_1$ is preferred if $\theta_1$ is the state of nature, but $d_2$ is preferred if it is $\theta_2$.
• The minimax rule takes a very conservative approach and considers the worst possible risk, i.e. $\max_{\theta \in \Theta} R(\theta, d)$.
• It then chooses the decision function $d^*$ that minimizes this maximum risk, i.e.
$$\min_{d} \left[\max_{\theta \in \Theta} R(\theta, d)\right].$$
• If such a rule exists, it is called the minimax rule.

Bayes rule

• An alternative to the conservative minimax rule is to assume some distribution $p(\theta)$, called the prior distribution.
• Then look at the average risk, i.e. the Bayes risk $B(d) = E[R(\theta, d)]$, where this expectation is over the prior distribution $p(\theta)$.
• The decision function $d^{**}$ which minimizes the Bayes risk is called the Bayes rule.

Example

• Suppose that a lot contains 21 items and one of them is randomly sampled.
• The remaining 20 units can be sold for \$1 each, but each defective unit sold costs \$2.
• Junking the whole lot costs \$1.
• Suppose that there are two decision rules: $d_1$, sell if the sampled item is good and junk the lot if it is defective; $d_2$, always sell.
• We can evaluate these rules by the minimax and Bayes criteria.
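The two criteria can be compared on a small risk table. This sketch uses hypothetical risk values chosen to match the pattern described above ($d_1$ better under $\theta_1$, $d_2$ better under $\theta_2$) and a hypothetical prior; it only chooses among the finite list of rules supplied, not over all possible decision functions. The function names are illustrative.

```python
def minimax_rule(risk):
    """risk[d][theta] = R(theta, d). Return the rule with the smallest worst-case risk."""
    return min(risk, key=lambda d: max(risk[d].values()))

def bayes_rule(risk, prior):
    """prior[theta] = p(theta). Return the rule minimizing B(d) = sum_theta p(theta) R(theta, d)."""
    def bayes_risk(d):
        return sum(prior[t] * r for t, r in risk[d].items())
    return min(risk, key=bayes_risk)

# Hypothetical risks: d1 is better under theta1, d2 is better under theta2.
risk = {
    "d1": {"theta1": 1.0, "theta2": 6.0},
    "d2": {"theta1": 4.0, "theta2": 3.0},
}
prior = {"theta1": 0.8, "theta2": 0.2}  # hypothetical prior p(theta)

print(minimax_rule(risk))        # d2: worst-case risks are 6 (d1) and 4 (d2)
print(bayes_rule(risk, prior))   # d1: Bayes risks are 2.0 (d1) and 3.8 (d2)
```

The two criteria can disagree: minimax guards against the worst state, while the Bayes rule favours the state the prior considers more likely.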

Example: Minimax

• Let $X = 1$ if the tested item is good and $X = 0$ if it is defective, and let $k$ be the actual number of defective items in the lot (i.e. the state of nature).
• The loss functions are then
$$l(k, d_1(X)) = \begin{cases} -20 + 2k & \text{if } X = 1 \\ 1 & \text{if } X = 0 \end{cases}$$
$$l(k, d_2(X)) = \begin{cases} -20 + 2k & \text{if } X = 1 \\ -20 + 2(k-1) & \text{if } X = 0 \end{cases}$$
• The risk is the expected value of the loss over the distribution of $X$, so we need
$$P(X = 0) = \frac{k}{21}, \qquad P(X = 1) = 1 - \frac{k}{21}.$$
• So the risk functions are
$$R(k, d_1) = -20 + 3k - \frac{2k^2}{21}, \qquad R(k, d_2) = -20 + \frac{40k}{21}.$$
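The risk functions can be checked, and the minimax comparison carried out, with exact rational arithmetic. This is a sketch assuming $k$ ranges over $0, 1, \ldots, 21$ and comparing only the two rules $d_1$ and $d_2$; the helper names are hypothetical. Under these assumptions the worst-case risk of $d_1$ is $76/21 \approx 3.62$ (at $k = 16$) and that of $d_2$ is $20$ (at $k = 21$), so $d_1$ is the minimax choice between the two.

```python
from fractions import Fraction

N_LOT = 21  # items in the lot; one is sampled and 20 remain

def loss(rule, k, x):
    """l(k, d(X)); x = 1 if the sampled item is good, 0 if it is defective."""
    if x == 1:
        return -20 + 2 * k        # both rules sell; k defectives among the 20 left
    if rule == "d1":
        return 1                  # d1 junks the lot
    return -20 + 2 * (k - 1)      # d2 sells; k - 1 defectives remain

def risk(rule, k):
    """R(k, d) = E[l(k, d(X))], with P(X = 0) = k / 21."""
    p_defective = Fraction(k, N_LOT)
    return p_defective * loss(rule, k, 0) + (1 - p_defective) * loss(rule, k, 1)

states = range(N_LOT + 1)  # k = 0, 1, ..., 21
worst = {d: max(risk(d, k) for k in states) for d in ("d1", "d2")}
print(worst)                      # {'d1': Fraction(76, 21), 'd2': Fraction(20, 1)}
print(min(worst, key=worst.get))  # d1
```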
