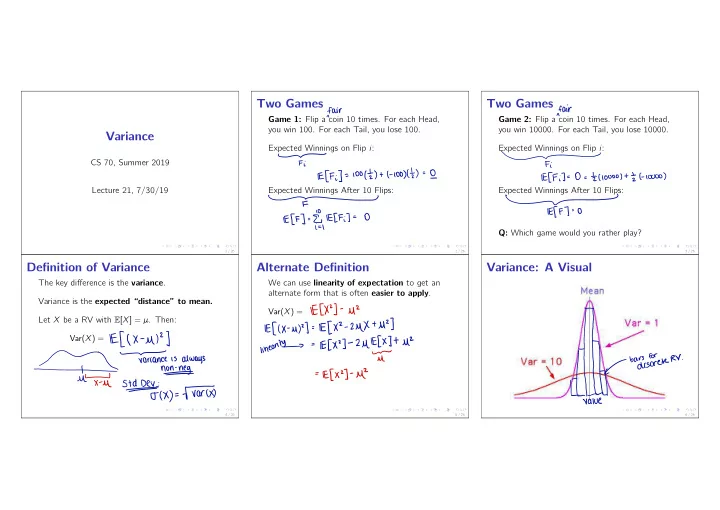

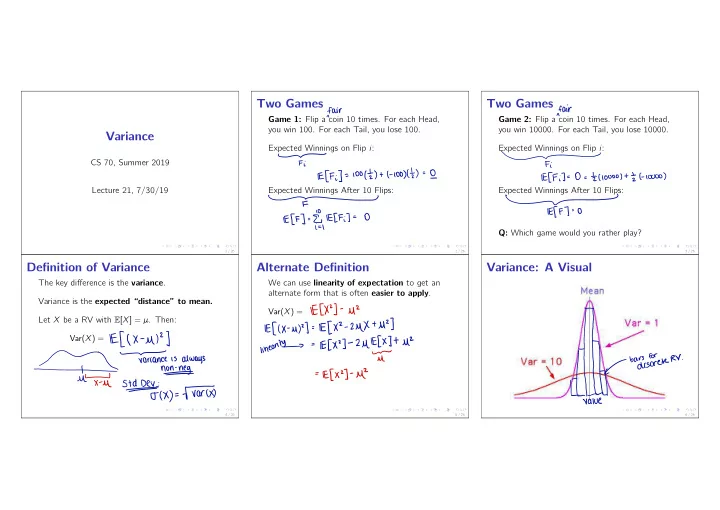

Variance
CS 70, Summer 2019
Lecture 21, 7/30/19

Two Games

Game 1: Flip a fair coin 10 times. For each Head, you win 100. For each Tail, you lose 100.

Expected winnings on flip $i$, where $F_i$ is the amount won on flip $i$:

$$E[F_i] = 100 \cdot \tfrac{1}{2} + (-100) \cdot \tfrac{1}{2} = 0$$

Expected winnings after 10 flips, where $F = F_1 + \dots + F_{10}$:

$$E[F] = E[F_1] + \dots + E[F_{10}] = 0$$

Game 2: Flip a fair coin 10 times. For each Head, you win 10000. For each Tail, you lose 10000.

Expected winnings on flip $i$:

$$E[F_i] = 10000 \cdot \tfrac{1}{2} + (-10000) \cdot \tfrac{1}{2} = 0$$

Expected winnings after 10 flips:

$$E[F] = E[F_1] + \dots + E[F_{10}] = 0$$

Q: Which game would you rather play?

Definition of Variance

The key difference is the variance. Let $X$ be a RV with $E[X] = \mu$. Then:

$$\operatorname{Var}(X) = E[(X - \mu)^2]$$

Variance is always non-negative.

Alternate Definition

We can use linearity of expectation to get an alternate form that is often easier to apply.

$$\operatorname{Var}(X) = E[(X - \mu)^2] = E[X^2 - 2\mu X + \mu^2] = E[X^2] - 2\mu E[X] + \mu^2 = E[X^2] - \mu^2 = E[X^2] - (E[X])^2$$

Variance: A Visual

Variance is the expected "distance" to mean.

[Figure not recovered; one axis is labeled "Value".]
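The two games above can be compared numerically. The following is a minimal sketch, assuming the total winnings are determined by the number of Heads in 10 fair flips; the helper names (`winnings_distribution`, `variance_by_definition`, `variance_alternate`) are illustrative and not from the lecture. It computes the variance both from the definition and from the alternate form.

```python
from math import comb


def winnings_distribution(stake, flips=10):
    """Return {total winnings: probability} for `flips` fair coin flips.

    Each Head wins `stake`, each Tail loses `stake`.
    """
    dist = {}
    for heads in range(flips + 1):
        total = stake * heads - stake * (flips - heads)
        dist[total] = comb(flips, heads) / 2 ** flips
    return dist


def mean(dist):
    """E[X] for a finite distribution {value: probability}."""
    return sum(v * p for v, p in dist.items())


def variance_by_definition(dist):
    """Var(X) = E[(X - mu)^2]."""
    mu = mean(dist)
    return sum((v - mu) ** 2 * p for v, p in dist.items())


def variance_alternate(dist):
    """Var(X) = E[X^2] - (E[X])^2."""
    return sum(v * v * p for v, p in dist.items()) - mean(dist) ** 2


game1 = winnings_distribution(100)
game2 = winnings_distribution(10000)

for name, game in [("Game 1", game1), ("Game 2", game2)]:
    print(name, mean(game), variance_by_definition(game), variance_alternate(game))
```

Both games have mean 0, but the second has a variance that is 10000 times larger, which is the difference the expectation alone does not show.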

Variance of a Bernoulli

Let $X \sim \text{Bernoulli}(p)$. Then $E[X] = p$.

What is $X^2$? $E[X^2]$? Since $X$ only takes the values 0 and 1, $X^2 = X$, so $X^2 \sim \text{Bernoulli}(p)$ and $E[X^2] = p$.

$$\operatorname{Var}(X) = E[X^2] - (E[X])^2 = p - p^2 = p(1 - p)$$

Variance of a Dice Roll

What is the variance of a single 6-sided dice roll? Let $R$ = value of a dice roll, so $R \in \{1, 2, 3, 4, 5, 6\}$.

What is $R^2$? It takes each of the values 1, 4, 9, 16, 25, 36 with probability $\tfrac{1}{6}$, so

$$E[R^2] = \tfrac{1}{6}(1 + 4 + 9 + 16 + 25 + 36) = \tfrac{91}{6}$$

$$\operatorname{Var}(R) = E[R^2] - (E[R])^2 = \tfrac{91}{6} - \left(\tfrac{7}{2}\right)^2 = \tfrac{35}{12}$$

Variance of a Geometric

Know the variance; proof optional, but good practice with manipulating RVs.

Let $X \sim \text{Geometric}(p)$. Recall $E[X] = \tfrac{1}{p}$. The result is

$$\operatorname{Var}(X) = E[X^2] - (E[X])^2 = \frac{1 - p}{p^2}$$

Strategy: Nice expression for $p \cdot E[X^2]$.

Variance of a Geometric II

From the distribution of $X$, with $P[X = i] = (1 - p)^{i - 1} p$, we know:

$$p + (1 - p)p + (1 - p)^2 p + \dots = 1 \qquad (1)$$

From $E[X]$, we know:

$$p + 2(1 - p)p + 3(1 - p)^2 p + \dots = E[X] = \tfrac{1}{p} \qquad (2)$$

Variance of a Geometric III

$$E[X^2] = p + 4(1 - p)p + 9(1 - p)^2 p + \dots$$

$$(1 - p)E[X^2] = (1 - p)p + 4(1 - p)^2 p + \dots$$

Subtract the second line from the first:

$$p \, E[X^2] = p + 3(1 - p)p + 5(1 - p)^2 p + \dots$$

$$= \left[ 2p + 4(1 - p)p + 6(1 - p)^2 p + \dots \right] - \left[ p + (1 - p)p + (1 - p)^2 p + \dots \right]$$

$$= 2E[X] - 1 = \tfrac{2}{p} - 1$$

using (2) for the first bracket and (1) for the second. Solve for $E[X^2]$:

$$E[X^2] = \frac{2 - p}{p^2}, \qquad \operatorname{Var}(X) = \frac{2 - p}{p^2} - \frac{1}{p^2} = \frac{1 - p}{p^2}$$
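The Bernoulli, dice roll, and geometric variances can be checked with a short script. This is a sketch under stated assumptions: the value $p = 1/3$ for the Bernoulli, $p = 0.25$ for the geometric, and the truncation of the geometric distribution at 500 terms are arbitrary choices for illustration, and the helper names are hypothetical.

```python
from fractions import Fraction


def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2 for a pmf given as {value: probability}."""
    ex = sum(v * p for v, p in pmf.items())
    ex2 = sum(v * v * p for v, p in pmf.items())
    return ex2 - ex ** 2


# Bernoulli(p) with an arbitrary illustrative p; exact arithmetic via Fraction.
p = Fraction(1, 3)
bernoulli = {0: 1 - p, 1: p}
bernoulli_var = variance(bernoulli)

# Fair 6-sided die: each value has probability 1/6.
die = {r: Fraction(1, 6) for r in range(1, 7)}
die_var = variance(die)


def geometric_variance_truncated(q, terms=500):
    """Approximate Var(X) for X ~ Geometric(q) by truncating the infinite sum.

    The truncation is an approximation: the tail beyond `terms` is ignored.
    """
    pmf = {i: (1 - q) ** (i - 1) * q for i in range(1, terms + 1)}
    return variance(pmf)


geometric_var = geometric_variance_truncated(0.25)

print(bernoulli_var, p * (1 - p))
print(die_var)
print(geometric_var, (1 - 0.25) / 0.25 ** 2)
```

Using `Fraction` keeps the finite cases exact, so they can be compared to the closed forms with `==`; the geometric case is only compared up to floating-point and truncation error.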

Variance of a Poisson

Same: know the variance; proof optional, but good practice with functions of RVs.

Let $X \sim \text{Poisson}(\lambda)$.

Strategy: Compute $E[X(X - 1)]$.

$$E[X(X - 1)] = \sum_{i=0}^{\infty} i(i - 1) \, P[X = i] = \sum_{i=2}^{\infty} i(i - 1) \frac{\lambda^i e^{-\lambda}}{i!} = \lambda^2 e^{-\lambda} \sum_{i=2}^{\infty} \frac{\lambda^{i - 2}}{(i - 2)!} = \lambda^2 e^{-\lambda} e^{\lambda} = \lambda^2$$

The last sum is the Taylor series of $e^{\lambda}$.

Variance of a Poisson II

Use $E[X(X - 1)]$ to compute $\operatorname{Var}(X)$.

$$\operatorname{Var}(X) = E[X^2] - (E[X])^2 = E[X(X - 1)] + E[X] - (E[X])^2 = \lambda^2 + \lambda - \lambda^2 = \lambda$$

Break

Would you rather only wear sweatpants for the rest of your life, or never get to wear sweatpants ever again?

Properties of Variance I: Scale

Let $X$ be a RV, and let $c \in \mathbb{R}$ be a constant. Let $E[X] = \mu$.

$$\operatorname{Var}(cX) = E[(cX)^2] - (E[cX])^2 = c^2 E[X^2] - c^2 (E[X])^2 = c^2 \operatorname{Var}(X)$$

Properties of Variance II: Shift

Let $X$ be a RV, and let $c \in \mathbb{R}$ be a constant. Let $E[X] = \mu$. Then, let $\mu' = E[X + c] = \mu + c$.

$$\operatorname{Var}(X + c) = E[((X + c) - \mu')^2] = E[(X + c - \mu - c)^2] = E[(X - \mu)^2] = \operatorname{Var}(X)$$

Example: Shift It!

Consider the following RV:

$$X = \begin{cases} 1 & \text{w.p. } 0.4 \\ 3 & \text{w.p. } 0.2 \\ 5 & \text{w.p. } 0.4 \end{cases}$$

What is $\operatorname{Var}(X)$? Shift it! Since $E[X - 3] = 0$ and $(X - 3)^2$ equals 4 w.p. 0.8 and 0 w.p. 0.2,

$$\operatorname{Var}(X) = \operatorname{Var}(X - 3) = E[(X - 3)^2] - (E[X - 3])^2 = 4 \cdot 0.8 + 0 \cdot 0.2 - 0 = 3.2$$
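The Poisson computation and the scale and shift properties can be sanity-checked numerically. This is a sketch rather than a proof: the rate $\lambda = 3$, the truncation of the Poisson sum at 100 terms, and the scale factor 7 are arbitrary assumptions, and `transform` and `poisson_pmf` are hypothetical helper names.

```python
from fractions import Fraction
from math import exp


def mean(pmf):
    """E[X] for a pmf given as {value: probability}."""
    return sum(v * p for v, p in pmf.items())


def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    return sum(v * v * p for v, p in pmf.items()) - mean(pmf) ** 2


def transform(pmf, f):
    """Return the pmf of f(X), merging values that map to the same output."""
    out = {}
    for v, p in pmf.items():
        out[f(v)] = out.get(f(v), 0) + p
    return out


def poisson_pmf(lam, terms=100):
    """Truncated Poisson(lam) pmf; the tail beyond `terms` is ignored."""
    pmf = {}
    prob = exp(-lam)  # P[X = 0]
    for i in range(terms):
        pmf[i] = prob
        prob *= lam / (i + 1)  # P[X = i + 1] from P[X = i]
    return pmf


# Poisson: E[X(X - 1)] should be close to lam^2, Var(X) close to lam.
lam = 3.0
pois = poisson_pmf(lam)
factorial_moment = sum(i * (i - 1) * p for i, p in pois.items())
poisson_var = variance(pois)

# The three-point RV from the shift example, with exact probabilities.
x = {1: Fraction(2, 5), 3: Fraction(1, 5), 5: Fraction(2, 5)}
var_x = variance(x)
var_shifted = variance(transform(x, lambda v: v - 3))
var_scaled = variance(transform(x, lambda v: 7 * v))

print(factorial_moment, poisson_var)
print(var_x, var_shifted, var_scaled)
```

Shifting leaves the variance unchanged, while scaling by 7 multiplies it by 49, matching $\operatorname{Var}(X + c) = \operatorname{Var}(X)$ and $\operatorname{Var}(cX) = c^2 \operatorname{Var}(X)$.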

Sum of Independent RVs

Let $X_1, \dots, X_n$ be independent RVs. Then:

$$\operatorname{Var}(X_1 + \dots + X_n) = \operatorname{Var}(X_1) + \dots + \operatorname{Var}(X_n)$$

Proof: Tomorrow! Today: Focus on applications.

Variance of a Binomial

Let $X \sim \text{Bin}(n, p)$. Then, $X = X_1 + X_2 + \dots + X_n$. Here, $X_i \sim \text{Ber}(p)$, and the $X_i$ are i.i.d.

$$\operatorname{Var}(X) = \operatorname{Var}(X_1) + \operatorname{Var}(X_2) + \dots + \operatorname{Var}(X_n) = n \cdot \operatorname{Var}(X_1) = np(1 - p)$$

Sum of Dependent RVs

Main strategy: linearity of expectation and indicator variables.

Useful Fact:

$$(X_1 + X_2 + \dots + X_n)^2 = (X_1^2 + X_2^2 + \dots + X_n^2) + (X_1 X_2 + X_1 X_3 + \dots + X_n X_{n-1}) = \sum_{i} X_i^2 + \sum_{i \neq j} X_i X_j$$

where the second sum runs over all ordered pairs $(i, j)$ with $i \neq j$.

HW Mixups (Fixed Points)

(In notes.) $n$ students hand in HW. I mix up their HW randomly and return it, so that every possible mixup is equally likely.

Let $S$ = # of students who get their own HW.

Last time: defined $S_i$ = indicator for student $i$ getting own HW, so $S_i \sim \text{Ber}(\tfrac{1}{n})$.

Using linearity of expectation:

$$E[S] = E[S_1 + \dots + S_n] = E[S_1] + \dots + E[S_n] = n \cdot \tfrac{1}{n} = 1$$

HW Mixups II

Using our useful fact:

$$E[S^2] = E[(S_1 + \dots + S_n)^2] = E\Big[\sum_{i} S_i^2 + \sum_{i \neq j} S_i S_j\Big] = \sum_{i} E[S_i^2] + \sum_{i \neq j} E[S_i S_j] = n \, E[S_1^2] + n(n - 1) \, E[S_1 S_2]$$

The third equality is linearity of expectation; the last uses the fact that every student (and every pair of students) is treated symmetrically.

HW Mixups III

What is $S_i^2$? $E[S_i^2]$? Since $S_i$ is an indicator, $S_i^2 = S_i$, so $E[S_i^2] = \tfrac{1}{n}$.

For $i \neq j$, what is $S_i S_j$? $E[S_i S_j]$? $S_i S_j$ is the indicator that students $i$ and $j$ both get their own HW, so

$$E[S_i S_j] = P[S_i = 1, S_j = 1] = \frac{(n - 2)!}{n!} = \frac{1}{n(n - 1)}$$
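As a check on the binomial result, the variance can be computed directly from the binomial pmf and compared with $np(1 - p)$. The following is a minimal sketch, assuming the illustrative values $n = 10$ and $p = 1/3$; `binomial_pmf` is a hypothetical helper name.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(n, p):
    """pmf of Bin(n, p) as {k: P[X = k]}."""
    return {k: comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}


def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    ex = sum(v * q for v, q in pmf.items())
    ex2 = sum(v * v * q for v, q in pmf.items())
    return ex2 - ex ** 2


n, p = 10, Fraction(1, 3)
var_direct = variance(binomial_pmf(n, p))  # from the pmf
var_formula = n * p * (1 - p)              # sum of n Bernoulli variances

print(var_direct, var_formula)
```

The direct computation never uses independence explicitly, so agreement with $n \cdot \operatorname{Var}(X_1)$ is a small consistency check on the additivity claim for this case.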

HW Mixups IV

Put it all together to compute $\operatorname{Var}(S)$.

$$\operatorname{Var}(S) = E[S^2] - (E[S])^2 = n \, E[S_1^2] + n(n - 1) \, E[S_1 S_2] - 1^2 = n \cdot \frac{1}{n} + n(n - 1) \cdot \frac{1}{n(n - 1)} - 1 = 1$$

Summary

Today:

- Variance measures how far you deviate from the mean
- Variance is additive for independent RVs; proof to come tomorrow
- Use linearity of expectation and indicator variables
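For the HW mixup example, where $S$ counts the students who get their own HW back, the mean and variance of $S$ can be verified by brute force for small $n$. This sketch assumes a mixup is a uniformly random permutation and enumerates all $n!$ of them, so it is only practical for small $n$; `fixed_point_moments` is a hypothetical helper name.

```python
from fractions import Fraction
from itertools import permutations


def fixed_point_moments(n):
    """Return (E[S], Var(S)) for S = number of fixed points of a uniformly
    random permutation of n items, by enumerating all n! permutations."""
    total = 0      # sum of S over all permutations
    total_sq = 0   # sum of S^2 over all permutations
    count = 0      # number of permutations seen
    for perm in permutations(range(n)):
        s = sum(1 for i, j in enumerate(perm) if i == j)
        total += s
        total_sq += s * s
        count += 1
    mean = Fraction(total, count)
    var = Fraction(total_sq, count) - mean ** 2
    return mean, var


for n in range(2, 7):
    print(n, fixed_point_moments(n))
```

For every $n \geq 2$ tried here, both the mean and the variance come out to exactly 1. The case $n = 1$ is excluded because the single student always gets their own HW, so the variance is 0 and the pair term $n(n - 1) E[S_1 S_2]$ does not apply.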
