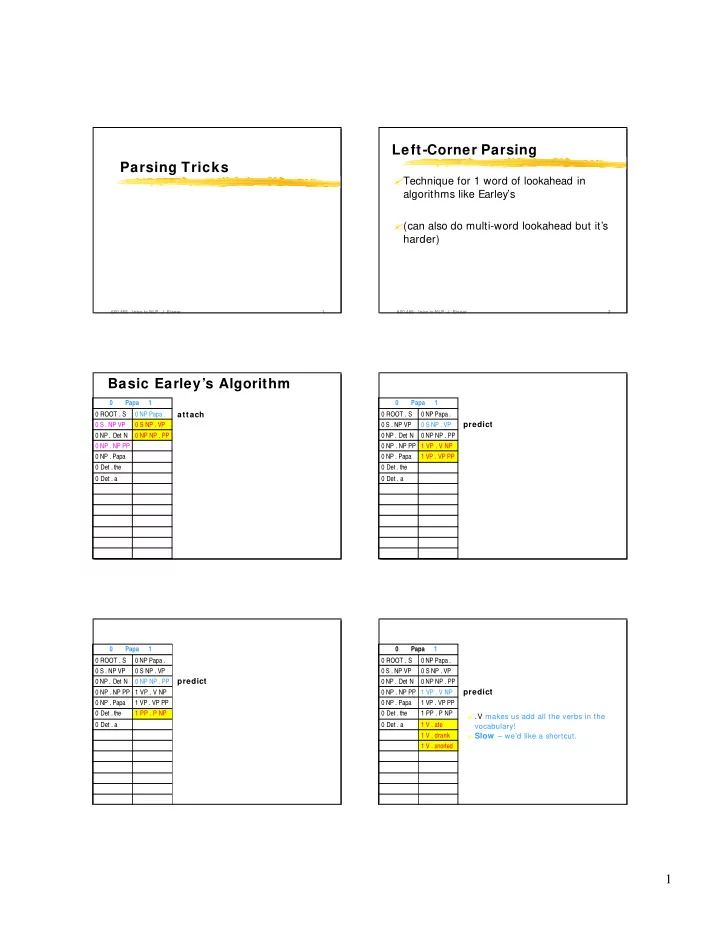

Parsing Tricks

Left-Corner Parsing

- Technique for 1 word of lookahead in algorithms like Earley's
- (can also do multi-word lookahead, but it's harder)

600.465 - Intro to NLP - J. Eisner

Basic Earley's Algorithm

Input: 0 Papa 1

| Column 0 | Column 1 |
|---|---|
| 0 ROOT → . S | 0 NP → Papa . |
| 0 S → . NP VP | 0 S → NP . VP |
| 0 NP → . Det N | 0 NP → NP . PP |
| 0 NP → . NP PP | 1 VP → . V NP |
| 0 NP → . Papa | 1 VP → . VP PP |
| 0 Det → . the | 1 PP → . P NP |
| 0 Det → . a | 1 V → . ate |
| | 1 V → . drank |
| | 1 V → . snorted |

Column 1 first attaches Papa; every item that starts at position 1 is then added by predict.

- Predicting ". V" makes us add all the verbs in the vocabulary!
- Slow: we'd like a shortcut.
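To make the basic algorithm concrete, here is a minimal Python sketch of an Earley recognizer over the slides' toy grammar. It is an illustration under stated assumptions, not the course's reference code: the N rules (caviar, spoon) are hypothetical additions because the slides never show any nouns, items are (start, lhs, rhs, dot) tuples, any symbol without rules is treated as a word, and no rule has an empty right-hand side. Each column keeps a set next to its list, so the duplicate check costs constant time.

```python
# Toy grammar from the slides. The N rules are a HYPOTHETICAL addition:
# the slides never show them, but a full sentence needs some nouns.
GRAMMAR = {
    "ROOT": [("S",)],
    "S": [("NP", "VP")],
    "NP": [("Det", "N"), ("NP", "PP"), ("Papa",)],
    "Det": [("the",), ("a",)],
    "N": [("caviar",), ("spoon",)],
    "VP": [("V", "NP"), ("VP", "PP")],
    "PP": [("P", "NP")],
    "V": [("ate",), ("drank",), ("snorted",)],
    "P": [("with",)],
}


def earley_recognize(words, grammar, root="ROOT"):
    """Return (accepted, chart); each item is (start, lhs, rhs, dot)."""
    n = len(words)
    columns = [[] for _ in range(n + 1)]   # agenda order within each column
    seen = [set() for _ in range(n + 1)]   # O(1) duplicate check per column

    def add(j, item):
        if item not in seen[j]:            # never add the same dotted rule twice
            seen[j].add(item)
            columns[j].append(item)

    for rhs in grammar[root]:
        add(0, (0, root, rhs, 0))

    for j in range(n + 1):
        i = 0
        while i < len(columns[j]):         # the column grows while we process it
            start, lhs, rhs, dot = columns[j][i]
            i += 1
            if dot < len(rhs):
                sym = rhs[dot]
                if sym in grammar:         # predict: add EVERY rule for sym
                    for r in grammar[sym]:
                        add(j, (j, sym, r, 0))
                elif j < n and words[j] == sym:
                    add(j + 1, (start, lhs, rhs, dot + 1))   # scan the word
            else:                          # attach to items waiting for lhs
                for s2, l2, r2, d2 in columns[start]:
                    if d2 < len(r2) and r2[d2] == lhs:
                        add(j, (s2, l2, r2, d2 + 1))
    accepted = any(s == 0 and head == root and d == len(r)
                   for s, head, r, d in columns[n])
    return accepted, columns
```

On "Papa ate the caviar with a spoon", column 1 ends up holding all three V rules even though only V → ate can match the next word, which is exactly the waste the slides complain about.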

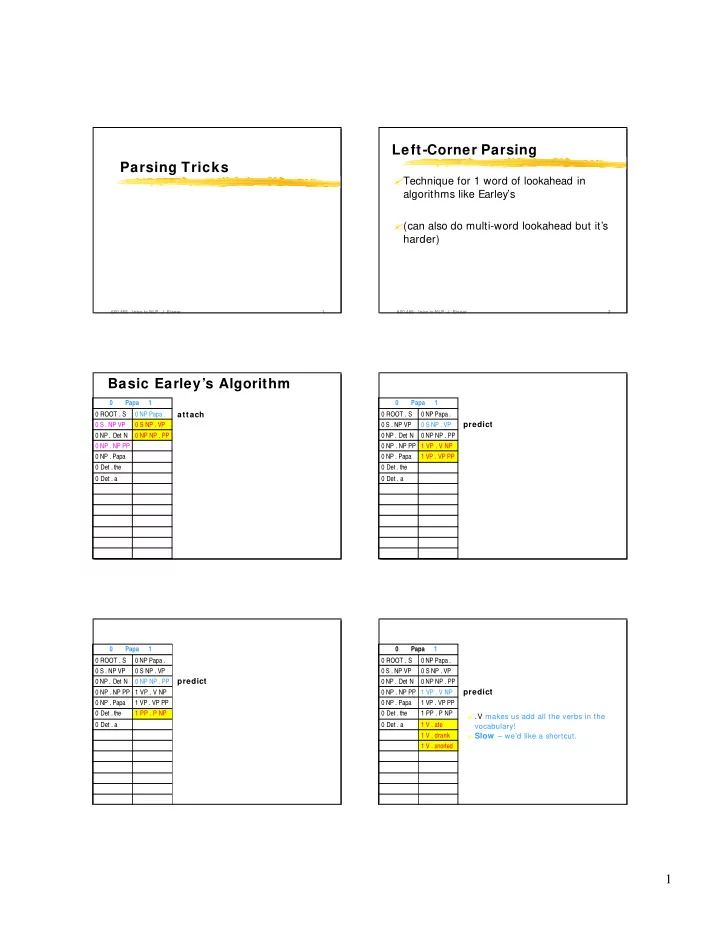

The chart for 0 Papa 1 keeps growing: predicting from 1 PP → . P NP also adds 1 P → . with.

- Every ". VP" adds all VP → … rules again.
  - Before adding a rule, check it's not a duplicate.
  - Slow if there are > 700 VP → … rules, so what will you do in Homework 3?
- ". P" makes us add all the prepositions …

1-word lookahead would help

Input: 0 Papa 1 ate

- In fact, there is no point in adding any constituent that can't start with ate.
- Don't bother adding PP, P, etc.
- No point in adding words other than ate.

With Left-Corner Filter

Input: 0 Papa 1 ate

- PP can't start with ate.
- Birth control: now we won't predict 1 PP → . P NP, so its own predictions (such as 1 P → . with) never appear either!
- Need to know that ate can't start PP.
- Take closure of all categories that it does start …
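A minimal Python sketch of the left-corner filter, assuming the slides' toy grammar (its unseen N rules are simply left out) and no empty right-hand sides. The hypothetical `left_corner_closure` computes, once per grammar, the set of categories each symbol can start (the reflexive, transitive closure from the "take closure" bullet); the hypothetical `predict` then keeps only the rules whose first symbol can begin with the next word.

```python
from collections import defaultdict

# Toy grammar from the slides (N rules omitted; no empty right-hand sides).
GRAMMAR = {
    "ROOT": [("S",)],
    "S": [("NP", "VP")],
    "NP": [("Det", "N"), ("NP", "PP"), ("Papa",)],
    "Det": [("the",), ("a",)],
    "VP": [("V", "NP"), ("VP", "PP")],
    "PP": [("P", "NP")],
    "V": [("ate",), ("drank",), ("snorted",)],
    "P": [("with",)],
}


def left_corner_closure(grammar):
    """Map each symbol to the set of symbols it can start (itself included)."""
    # parents[b] = categories a that have some rule a -> b ...
    parents = defaultdict(set)
    for lhs, rules in grammar.items():
        for rhs in rules:
            if rhs:
                parents[rhs[0]].add(lhs)
    symbols = set(grammar) | {s for rules in grammar.values()
                              for rhs in rules for s in rhs}
    starts = {}
    for sym in symbols:
        # Walk upward through the left-corner relation (reflexive, transitive).
        found, stack = {sym}, [sym]
        while stack:
            for a in parents[stack.pop()]:
                if a not in found:
                    found.add(a)
                    stack.append(a)
        starts[sym] = found
    return starts


def predict(category, j, next_word, grammar, starts):
    """Dotted rules (j, category -> . rhs) that survive the left-corner filter."""
    can_start = starts.get(next_word, {next_word})   # what next_word can begin
    return [(j, category, rhs, 0) for rhs in grammar[category]
            if rhs and rhs[0] in can_start]
```

Here ate can start only {ate, V, VP}, so with ate as the next word, predicting PP adds nothing, predicting V adds only V → . ate, and both VP rules survive. The table costs one pass over the grammar; at parse time the filter is just a set lookup.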

With the left-corner filter, the chart for 0 Papa 1 ate no longer contains PP or P items:

| Column 0 | Column 1 |
|---|---|
| 0 ROOT → . S | 0 NP → Papa . |
| 0 S → . NP VP | 0 S → NP . VP |
| 0 NP → . Det N | 0 NP → NP . PP |
| 0 NP → . NP PP | 1 VP → . V NP |
| 0 NP → . Papa | 1 VP → . VP PP |
| 0 Det → . the | 1 V → . ate |
| 0 Det → . a | 1 V → . drank |
| | 1 V → . snorted |

Merging Right-Hand Sides

- Grammar might have rules
  - X → A G H P
  - X → B G H P
- Could end up with both of these in the chart:
  - (2, X → A . G H P) in column 5
  - (2, X → B . G H P) in column 5
- But these are now interchangeable: if one produces X, then so will the other.
- To avoid this redundancy, we can always use dotted rules of this form: X → ... G H P

Merging Right-Hand Sides

- Similarly, grammar might have rules
  - X → A G H P
  - X → A G H Q
- Could end up with both of these in the chart:
  - (2, X → A . G H P) in column 5
  - (2, X → A . G H Q) in column 5
- Not interchangeable, but we'll be processing them in parallel for a while …
- Solution: write the grammar as X → A G H (P | Q)

Merging Right-Hand Sides

- Combining the two previous cases, the four rules X → A G H P, X → A G H Q, X → B G H P, X → B G H Q become X → (A | B) G H (P | Q)
- And it's often nice to write stuff like NP → (Det | ε) Adj* N

Merging Right-Hand Sides

- X → (A | B) G H (P | Q) and NP → (Det | ε) Adj* N: these are regular expressions!
- Build their minimal DFAs. [Figure: minimal DFAs for X (arcs A, B, G, H, P, Q) and NP (arcs Det, Adj, N).]
- Automaton states replace dotted rules (X → A G . H P).
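For a finite set of right-hand sides, the merging can be sketched in Python in two steps: build a trie so that shared prefixes share states (X → A G H (P | Q)), then merge states with identical futures bottom-up so that shared suffixes share states too (X → (A | B) G H (P | Q)). This is one standard way to get a minimal acyclic DFA, chosen here for illustration; the function names are hypothetical, and starred patterns like Adj* are out of scope, since they need a general regex-to-DFA construction plus minimization.

```python
def minimal_dfa(rhss):
    """Compile a finite set of right-hand sides into a minimal acyclic DFA.

    Returns (start, transitions, finals): transitions[state] maps a symbol to
    the next state, and finals is the set of accepting states.
    """
    # Step 1: a trie, so rules with a common prefix share states.
    trie, accept = [{}], [False]
    for rhs in rhss:
        s = 0
        for sym in rhs:
            if sym not in trie[s]:
                trie.append({})
                accept.append(False)
                trie[s][sym] = len(trie) - 1
            s = trie[s][sym]
        accept[s] = True

    # Step 2: merge states bottom-up. Two states are equivalent iff they agree
    # on acceptance and go to equivalent states on the same symbols, so rules
    # with a common suffix end up sharing states as well.
    registry = {}            # signature -> merged state id
    transitions, finals = [], set()

    def register(s):
        arcs = tuple(sorted((sym, register(c)) for sym, c in trie[s].items()))
        signature = (accept[s], arcs)
        if signature not in registry:
            registry[signature] = len(transitions)
            transitions.append(dict(arcs))
            if accept[s]:
                finals.add(registry[signature])
        return registry[signature]

    return register(0), transitions, finals


def accepts(dfa, rhs):
    """True iff the DFA accepts the symbol sequence rhs."""
    state, transitions, finals = dfa
    for sym in rhs:
        if sym not in transitions[state]:
            return False
        state = transitions[state][sym]
    return state in finals


X_RULES = [("A", "G", "H", "P"), ("A", "G", "H", "Q"),
           ("B", "G", "H", "P"), ("B", "G", "H", "Q")]
```

For the four X rules, the trie has 11 states; merging brings this down to 5, the same automaton as X → (A | B) G H (P | Q). The state reached after A G (or B G) plays the role of the dotted rule X → A G . H P.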

Merging Right-Hand Sides

- Indeed, all NP → rules can be unioned into a single DFA! For example:
  - NP → ADJP ADJP JJ JJ NN NNS
  - NP → ADJP DT NN
  - NP → ADJP JJ NN
  - NP → ADJP JJ NN NNS
  - NP → ADJP JJ NNS
  - NP → ADJP NN
  - NP → ADJP NN NN
  - NP → ADJP NN NNS
  - NP → ADJP NNS
  - NP → ADJP NPR
  - NP → ADJP NPRS
  - NP → DT
  - NP → DT ADJP
  - NP → DT ADJP , JJ NN
  - NP → DT ADJP ADJP NN
  - NP → DT ADJP JJ JJ NN
  - NP → DT ADJP JJ NN
  - NP → DT ADJP JJ NN NN
  - etc.
- [Figure: these rules become one regular expression, NP → ADJP ADJP JJ JJ NN NNS | ADJP DT NN | ADJP JJ NN | … , which is compiled into a single NP DFA.]

Earley's Algorithm on DFAs

- What does Earley's algorithm now look like?
- [Figure: DFAs for VP → … and NP → …, with arcs labeled NP, PP, Det, Adj, N.]
- Chart items now pair a start position with an automaton state: in column 4, an item (2, [state]) predicts (4, [start state]).
- Columns 4, 5, …, 7: the predicted automaton advances, giving (4, [state]) items in later columns.
- In column 7, an item (4, [state]) can sit at a final state that still has outgoing arcs. Predict or attach? Both! It predicts (7, [state]) and attaches, producing a (2, [state]) item.
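A minimal Python sketch of Earley's algorithm on DFAs, under these assumptions: each nonterminal's right-hand sides are already compiled into a DFA given as (start_state, arcs, final_states); chart items are (start_position, category, state) triples, so an automaton state stands in for a dotted rule; preterminals such as Det and N are matched through a small lexicon; and no category derives the empty string. The grammar and lexicon are hypothetical except NP → (Det | ε) Adj* N from the slides (the PP loop on NP is an added assumption). A final state with outgoing arcs both attaches and predicts, as on the last slide.

```python
# Each DFA is (start_state, {state: {symbol: next_state}}, final_states).
# HYPOTHETICAL grammar: NP -> (Det | eps) Adj* N is from the slides; the PP*
# loop on NP and the S, VP, PP automata are assumptions for illustration.
DFAS = {
    "S": (0, {0: {"NP": 1}, 1: {"VP": 2}, 2: {}}, {2}),
    "NP": (0, {0: {"Det": 1, "Adj": 1, "N": 2},
               1: {"Adj": 1, "N": 2},
               2: {"PP": 2}}, {2}),
    "VP": (0, {0: {"V": 1}, 1: {"NP": 2}, 2: {"PP": 2}}, {2}),
    "PP": (0, {0: {"P": 1}, 1: {"NP": 2}, 2: {}}, {2}),
}
# HYPOTHETICAL lexicon mapping each word to its preterminal categories.
LEXICON = {"Papa": {"N"}, "ate": {"V"}, "the": {"Det"}, "a": {"Det"},
           "caviar": {"N"}, "spoon": {"N"}, "with": {"P"}}


def earley_dfa(words, dfas, lexicon, root="S"):
    """Recognize words; chart items are (start, category, dfa_state)."""
    n = len(words)
    columns = [[] for _ in range(n + 1)]
    seen = [set() for _ in range(n + 1)]

    def add(j, item):
        if item not in seen[j]:              # duplicate check, as before
            seen[j].add(item)
            columns[j].append(item)

    add(0, (0, root, dfas[root][0]))
    for j in range(n + 1):
        i = 0
        while i < len(columns[j]):
            start, cat, q = columns[j][i]
            i += 1
            _, arcs, finals = dfas[cat]
            if q in finals:
                # Attach: a cat spanning start..j is complete, so advance every
                # item in column `start` whose state has an arc labeled cat.
                for s2, cat2, q2 in columns[start]:
                    nxt = dfas[cat2][1][q2].get(cat)
                    if nxt is not None:
                        add(j, (s2, cat2, nxt))
            # A final state may still have outgoing arcs: then ALSO predict/scan.
            for sym, nxt in arcs[q].items():
                if sym in dfas:
                    add(j, (j, sym, dfas[sym][0]))      # predict sym at j
                elif j < n and sym in lexicon.get(words[j], set()):
                    add(j + 1, (start, cat, nxt))       # scan the next word
    _, _, root_finals = dfas[root]
    return any(s == 0 and c == root and q in root_finals
               for s, c, q in columns[n])
```

In "Papa ate the caviar with a spoon", the NP item that starts at position 2 reaches its final state in column 4 and still has a PP arc, so it both attaches (completing VP → V NP) and predicts a PP at 4.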
