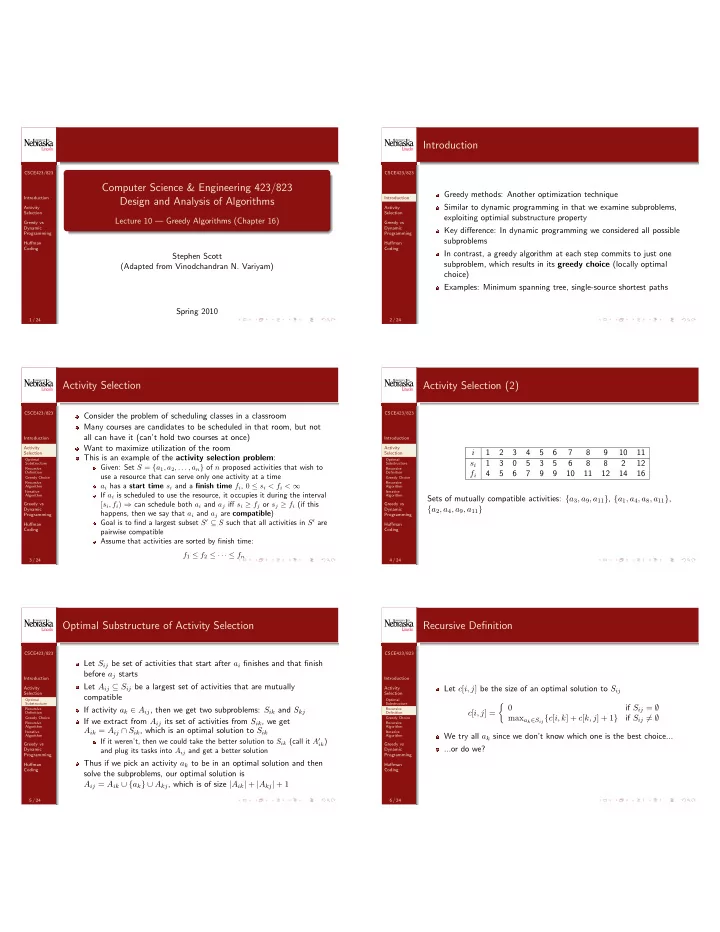

# Lecture 10: Greedy Algorithms (Chapter 16)

CSCE423/823, Computer Science & Engineering 423/823: Design and Analysis of Algorithms

Stephen Scott (adapted from Vinodchandran N. Variyam), Spring 2010

## Introduction

- Greedy methods: another optimization technique
- Similar to dynamic programming in that we examine subproblems, exploiting the optimal substructure property
- Key difference: in dynamic programming we considered all possible subproblems
- In contrast, a greedy algorithm at each step commits to just one subproblem, the one left by its greedy choice (a locally optimal choice)
- Examples: minimum spanning tree, single-source shortest paths

## Activity Selection

- Consider the problem of scheduling classes in a classroom
- Many courses are candidates to be scheduled in that room, but not all can have it (the room can't hold two courses at once)
- We want to maximize utilization of the room
- This is an example of the *activity selection problem*:
  - Given: a set $S = \{a_1, a_2, \ldots, a_n\}$ of $n$ proposed activities that wish to use a resource that can serve only one activity at a time
  - $a_i$ has a start time $s_i$ and a finish time $f_i$, with $0 \le s_i < f_i < \infty$
  - If $a_i$ is scheduled to use the resource, it occupies it during the interval $[s_i, f_i)$, so we can schedule both $a_i$ and $a_j$ iff $s_i \ge f_j$ or $s_j \ge f_i$ (if this happens, we say that $a_i$ and $a_j$ are *compatible*)
  - Goal: find a largest subset $S' \subseteq S$ such that all activities in $S'$ are pairwise compatible
  - Assume that activities are sorted by finish time: $f_1 \le f_2 \le \cdots \le f_n$

## Activity Selection (2)

| $i$   | 1 | 2 | 3 | 4 | 5 | 6 | 7  | 8  | 9  | 10 | 11 |
|-------|---|---|---|---|---|---|----|----|----|----|----|
| $s_i$ | 1 | 3 | 0 | 5 | 3 | 5 | 6  | 8  | 8  | 2  | 12 |
| $f_i$ | 4 | 5 | 6 | 7 | 9 | 9 | 10 | 11 | 12 | 14 | 16 |

Sets of mutually compatible activities: $\{a_3, a_9, a_{11}\}$, $\{a_1, a_4, a_8, a_{11}\}$, $\{a_2, a_4, a_9, a_{11}\}$

## Optimal Substructure of Activity Selection

- Let $S_{ij}$ be the set of activities that start after $a_i$ finishes and that finish before $a_j$ starts
- Let $A_{ij} \subseteq S_{ij}$ be a largest set of mutually compatible activities in $S_{ij}$
- If activity $a_k \in A_{ij}$, then we get two subproblems: $S_{ik}$ and $S_{kj}$
- If we extract from $A_{ij}$ its set of activities from $S_{ik}$, we get $A_{ik} = A_{ij} \cap S_{ik}$, which is an optimal solution to $S_{ik}$
  - If it weren't, then we could take a better solution to $S_{ik}$ (call it $A'_{ik}$), plug its activities into $A_{ij}$ in place of $A_{ik}$, and get a better solution to $S_{ij}$
- Thus if we pick an activity $a_k$ to be in an optimal solution and then solve the subproblems, our optimal solution is $A_{ij} = A_{ik} \cup \{a_k\} \cup A_{kj}$, which is of size $|A_{ik}| + |A_{kj}| + 1$

## Recursive Definition

- Let $c[i, j]$ be the size of an optimal solution to $S_{ij}$:

$$
c[i, j] =
\begin{cases}
0 & \text{if } S_{ij} = \emptyset \\
\max_{a_k \in S_{ij}} \{ c[i, k] + c[k, j] + 1 \} & \text{if } S_{ij} \neq \emptyset
\end{cases}
$$

- We try all $a_k$ since we don't know which one is the best choice...
- ...or do we?
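The recurrence for $c[i, j]$ can be evaluated directly. The Python sketch below is a memoized version of it, assuming (as an illustrative convention, not something stated on the slides) sentinel activities $a_0$ with $f_0 = 0$ and $a_{n+1}$ with $s_{n+1} = \infty$, so that $S_{0,n+1} = S$. The function name `dp_activity_count` is hypothetical. It runs on the example table above.

```python
import math
from functools import lru_cache


def dp_activity_count(s, f):
    """Size of a largest set of mutually compatible activities, computed from
    the recurrence for c[i, j].

    s, f: start and finish times of a_1..a_n (0-based Python lists),
    already sorted so that finish times are nondecreasing.
    """
    n = len(s)
    # Assumed sentinels: a_0 finishes at time 0 and a_{n+1} starts at infinity,
    # so S_{0,n+1} is the whole set S. Index k in these lists is activity a_k.
    start = [0] + list(s) + [math.inf]
    finish = [0] + list(f) + [math.inf]

    @lru_cache(maxsize=None)
    def c(i, j):
        best = 0  # c[i, j] = 0 when S_ij is empty
        # With finish times sorted, every a_k in S_ij has i < k < j.
        for k in range(i + 1, j):
            in_s_ij = start[k] >= finish[i] and finish[k] <= start[j]
            if in_s_ij:
                best = max(best, c(i, k) + c(k, j) + 1)
        return best

    return c(0, n + 1)


s = [1, 3, 0, 5, 3, 5, 6, 8, 8, 2, 12]
f = [4, 5, 6, 7, 9, 9, 10, 11, 12, 14, 16]
print(dp_activity_count(s, f))  # 4
```

For each of the $O(n^2)$ pairs $(i, j)$ the sketch tries every candidate $a_k$, so it does $O(n^3)$ work in total.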

## Greedy Choice

- What if, instead of trying all activities $a_k$, we simply chose the one with the earliest finish time of all those still compatible with the scheduled ones?
- This is a greedy choice in that it maximizes the amount of time left over to schedule other activities
- Let $S_k = \{a_i \in S : s_i \ge f_k\}$ be the set of activities that start after $a_k$ finishes
- If we greedily choose $a_1$ first (it has the earliest finish time), then $S_1$ is the only subproblem to solve

## Greedy Choice (2)

- Theorem: Consider any nonempty subproblem $S_k$ and let $a_m$ be an activity in $S_k$ with the earliest finish time. Then $a_m$ is in some maximum-size subset of mutually compatible activities of $S_k$
- Proof: Let $A_k$ be an optimal solution to $S_k$ and let $a_j$ have the earliest finish time of all activities in $A_k$
  - If $a_j = a_m$, we're done
  - If $a_j \neq a_m$, then define $A'_k = (A_k \setminus \{a_j\}) \cup \{a_m\}$
  - Activities in $A'_k$ are mutually compatible: those in $A_k$ are mutually compatible, every other activity in $A_k$ is compatible with $a_j$ and finishes no earlier, so it starts at or after $f_j$, and $f_m \le f_j$ because $a_m$ has the earliest finish time in $S_k$
  - Since $|A'_k| = |A_k|$, $A'_k$ is a maximum-size subset of mutually compatible activities of $S_k$ that includes $a_m$
- What this means is that there is an optimal solution that uses the greedy choice

## Recursive Algorithm

Algorithm 1: Recursive-Activity-Selector(s, f, k, n)

```
m = k + 1
while m ≤ n and s[m] < f[k] do
    m = m + 1
end
if m ≤ n then
    return {a_m} ∪ Recursive-Activity-Selector(s, f, m, n)
else
    return ∅
end
```

## Iterative Algorithm

Algorithm 2: Greedy-Activity-Selector(s, f, n)

```
A = {a_1}
k = 1
for m = 2 to n do
    if s[m] ≥ f[k] then
        A = A ∪ {a_m}
        k = m
    end
end
return A
```

- What is the time complexity? What would it have been if we'd approached this as a DP problem?

## Greedy vs Dynamic Programming

- When can we get away with a greedy algorithm instead of DP?
- When we can argue that the greedy choice is part of an optimal solution, implying that we need not explore all subproblems
- Example: the knapsack problem
  - There are $n$ items that a thief can steal, item $i$ weighing $w_i$ pounds and worth $v_i$ dollars
  - The thief's goal is to steal a set of items that weighs at most $W$ pounds and maximizes total value
  - In the *0-1 knapsack problem*, each item must be taken in its entirety (e.g., gold bars)
  - In the *fractional knapsack problem*, the thief can take part of an item and get a proportional amount of its value (e.g., gold dust)
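For comparison with the DP approach, here is a Python sketch of both greedy selectors, transcribed line by line from Algorithms 1 and 2. It assumes 1-indexed lists padded at index 0 with a hypothetical sentinel $a_0$ whose finish time is 0, so the initial recursive call is `recursive_activity_selector(s, f, 0, n)`. The function names are illustrative, and both functions return activity numbers $m$ rather than the activities themselves.

```python
def recursive_activity_selector(s, f, k, n):
    """Transcription of Recursive-Activity-Selector(s, f, k, n).
    Returns the indices m of the selected activities a_m."""
    m = k + 1
    # Skip activities that start before a_k finishes (incompatible with a_k).
    while m <= n and s[m] < f[k]:
        m += 1
    if m <= n:
        return [m] + recursive_activity_selector(s, f, m, n)
    return []


def greedy_activity_selector(s, f, n):
    """Transcription of Greedy-Activity-Selector(s, f, n); assumes n >= 1."""
    A = [1]  # a_1 has the earliest finish time, so it is always chosen
    k = 1    # index of the most recently added activity
    for m in range(2, n + 1):
        if s[m] >= f[k]:  # a_m starts after a_k finishes: compatible
            A.append(m)
            k = m
    return A


# Index 0 is the assumed sentinel a_0 (f[0] = 0); a_1..a_11 follow the table.
s = [0, 1, 3, 0, 5, 3, 5, 6, 8, 8, 2, 12]
f = [0, 4, 5, 6, 7, 9, 9, 10, 11, 12, 14, 16]
n = 11
print(recursive_activity_selector(s, f, 0, n))  # [1, 4, 8, 11]
print(greedy_activity_selector(s, f, n))        # [1, 4, 8, 11]
```

Each index $m$ is examined once over the whole run, so both sketches take $\Theta(n)$ time on input already sorted by finish time.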

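One way to see why the two knapsack variants differ is to apply the same greedy rule, taking items in decreasing order of value per pound $v_i / w_i$, to both. The Python sketch below does that on a hypothetical three-item instance with integer weights; the function names and the numbers are illustrative assumptions, and the exhaustive `best_01` is there only as a reference answer for tiny inputs.

```python
from fractions import Fraction
from itertools import combinations


def by_density(items):
    """Items sorted by value per pound, highest first; items are (weight, value)."""
    return sorted(items, key=lambda item: Fraction(item[1], item[0]), reverse=True)


def fractional_knapsack(items, W):
    """Greedy for the fractional knapsack: fill with the densest items first,
    taking a fraction of the first item that no longer fits."""
    total, remaining = Fraction(0), W
    for w, v in by_density(items):
        if remaining == 0:
            break
        take = min(w, remaining)  # pounds of this item to take
        total += Fraction(v) * Fraction(take, w)
        remaining -= take
    return total


def greedy_01_by_density(items, W):
    """The same density rule in the 0-1 setting: whole items only."""
    total, remaining = 0, W
    for w, v in by_density(items):
        if w <= remaining:
            total += v
            remaining -= w
    return total


def best_01(items, W):
    """Exact 0-1 optimum by trying every subset (tiny inputs only)."""
    best = 0
    for r in range(len(items) + 1):
        for subset in combinations(items, r):
            if sum(w for w, _ in subset) <= W:
                best = max(best, sum(v for _, v in subset))
    return best


items = [(10, 60), (20, 100), (30, 120)]  # hypothetical (weight, value) pairs
W = 50
print(fractional_knapsack(items, W))   # 240
print(greedy_01_by_density(items, W))  # 160
print(best_01(items, W))               # 220
```

The density rule is optimal for the fractional problem in general; in the 0-1 setting this instance shows it can fail, since leaving out the densest item and taking the other two is worth more.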
