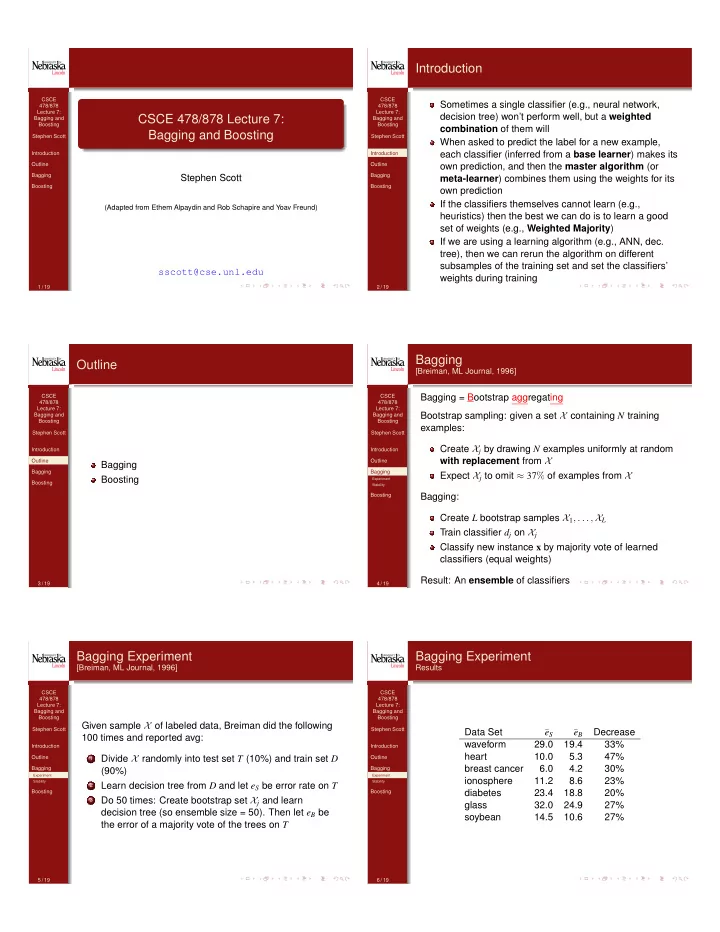

CSCE 478/878 Lecture 7: Bagging and Boosting

Stephen Scott (sscott@cse.unl.edu)

(Adapted from Ethem Alpaydin and Rob Schapire and Yoav Freund)

Introduction

- Sometimes a single classifier (e.g., a neural network or a decision tree) won't perform well, but a weighted combination of several of them will.
- When asked to predict the label of a new example, each classifier (inferred from a base learner) makes its own prediction. The master algorithm (or meta-learner) then combines these predictions, using the classifiers' weights, into its own prediction.
- If the classifiers themselves cannot learn (e.g., they are fixed heuristics), then the best we can do is to learn a good set of weights (e.g., Weighted Majority).
- If we are using a learning algorithm (e.g., an ANN or a decision tree), then we can rerun the algorithm on different subsamples of the training set and set the classifiers' weights during training.

Outline

- Bagging: Experiment, Stability
- Boosting

Bagging [Breiman, ML Journal, 1996]

- Bagging = Bootstrap aggregating
- Bootstrap sampling: given a set $X$ containing $N$ training examples, create $X_j$ by drawing $N$ examples uniformly at random with replacement from $X$.
- Expect $X_j$ to omit about 37% of the examples in $X$: a given example is missed by all $N$ draws with probability $(1 - 1/N)^N \approx e^{-1} \approx 0.368$.
- Bagging: create $L$ bootstrap samples $X_1, \ldots, X_L$, train classifier $d_j$ on $X_j$, and classify a new instance $x$ by a majority vote of the learned classifiers (equal weights).
- Result: an ensemble of classifiers.

Bagging Experiment [Breiman, ML Journal, 1996]

Given a sample $X$ of labeled data, Breiman did the following 100 times and reported the averages:

1. Divide $X$ randomly into a test set $T$ (10%) and a training set $D$ (90%).
2. Learn a decision tree from $D$ and let $e_S$ be its error rate on $T$.
3. Do 50 times: create a bootstrap set $X_j$ and learn a decision tree (so the ensemble size is 50). Then let $e_B$ be the error of a majority vote of the trees on $T$.

Bagging Experiment: Results

| Data Set | $\bar{e}_S$ (%) | $\bar{e}_B$ (%) | Decrease |
|---|---|---|---|
| waveform | 29.0 | 19.4 | 33% |
| heart | 10.0 | 5.3 | 47% |
| breast cancer | 6.0 | 4.2 | 30% |
| ionosphere | 11.2 | 8.6 | 23% |
| diabetes | 23.4 | 18.8 | 20% |
| glass | 32.0 | 24.9 | 22% |
| soybean | 14.5 | 10.6 | 27% |
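
The bootstrap-sampling claim on the Bagging slide (a bootstrap sample omits about 37% of $X$) can be checked with a short simulation. This is a minimal Python sketch, not part of the lecture; the function names are hypothetical. It compares the exact probability $(1 - 1/N)^N$, its limit $1/e$, and the average fraction of $X$ missing from simulated bootstrap samples.

```python
import math
import random


# Hypothetical helper names; illustration only.
def bootstrap_sample(X, rng):
    """Draw len(X) examples uniformly at random, with replacement, from X."""
    return [rng.choice(X) for _ in range(len(X))]


def expected_omitted_fraction(N):
    """Probability that a fixed example is missed by all N draws: (1 - 1/N)^N."""
    return (1 - 1 / N) ** N


def simulated_omitted_fraction(N, trials=200, seed=0):
    """Average fraction of X that does not appear in a bootstrap sample X_j."""
    rng = random.Random(seed)
    X = list(range(N))
    total = 0.0
    for _ in range(trials):
        X_j = bootstrap_sample(X, rng)
        total += 1 - len(set(X_j)) / N   # distinct examples kept vs. N
    return total / trials


if __name__ == "__main__":
    N = 1000
    print(f"(1 - 1/N)^N = {expected_omitted_fraction(N):.4f}")
    print(f"1/e         = {math.exp(-1):.4f}")
    print(f"simulated   = {simulated_omitted_fraction(N):.4f}")
```

All three numbers should come out close to 0.37. The simulation approaches the exact value as the number of trials grows.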

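The bagging procedure from the Bagging slide can be sketched in a few lines: create $L$ bootstrap samples, train one classifier $d_j$ per sample, and predict by an equal-weight majority vote. This is a hedged illustration only. The base learner here is a hypothetical 1-D threshold rule chosen so the example stays self-contained; it is not the decision-tree learner used in Breiman's experiment.

```python
import random
from collections import Counter


def train_threshold_rule(data):
    """Hypothetical base learner for 1-D data: pick the rule
    "predict sign if x > thr else -sign" with the fewest training errors.
    data is a list of (x, y) pairs with y in {-1, +1}."""
    xs = sorted({x for x, _ in data})
    best = None
    for thr in [xs[0] - 1.0] + xs:      # first candidate predicts one class everywhere
        for sign in (1, -1):
            errors = sum(1 for x, y in data if (sign if x > thr else -sign) != y)
            if best is None or errors < best[0]:
                best = (errors, thr, sign)
    _, thr, sign = best
    return lambda x: sign if x > thr else -sign


def bagging(data, L, learner, seed=0):
    """Train L classifiers d_1..d_L on bootstrap samples X_1..X_L and return
    a predictor that takes an equal-weight majority vote."""
    rng = random.Random(seed)
    N = len(data)
    ensemble = []
    for _ in range(L):
        X_j = [rng.choice(data) for _ in range(N)]   # bootstrap sample X_j
        ensemble.append(learner(X_j))                # classifier d_j
    def predict(x):
        votes = Counter(d(x) for d in ensemble)
        return votes.most_common(1)[0][0]            # odd L avoids ties with 2 classes
    return predict


if __name__ == "__main__":
    data = [(0, -1), (1, -1), (2, -1), (3, 1), (4, 1), (5, 1)]
    H = bagging(data, L=25, learner=train_threshold_rule)
    print([H(x) for x in range(6)])
```

Using an odd ensemble size is a design choice for two-class labels: a vote can then never be tied.
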
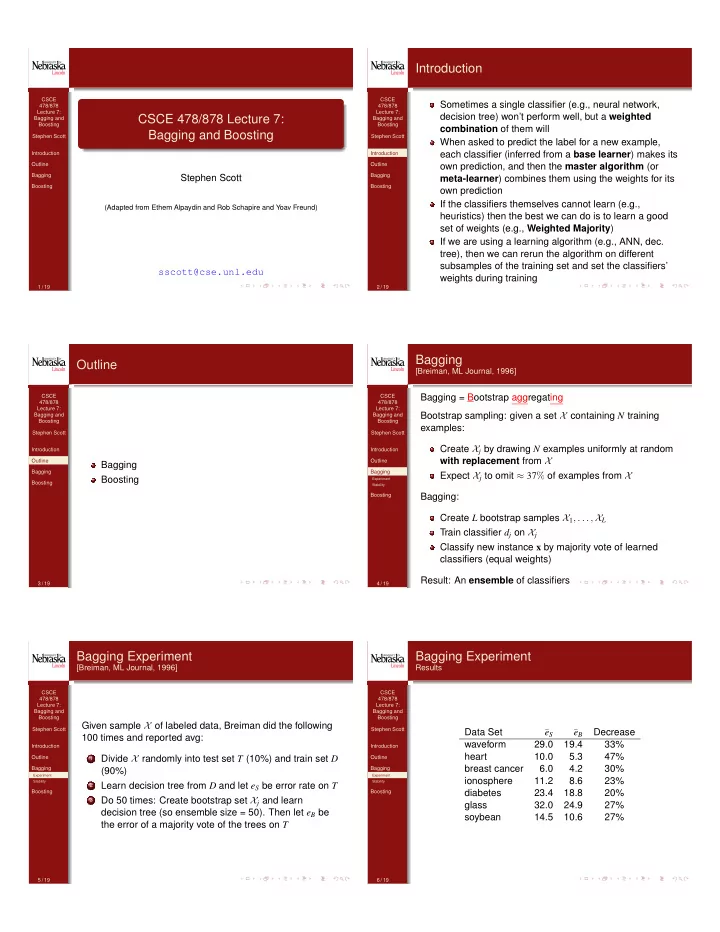

Bagging Experiment (cont'd)

Same experiment, but using a nearest neighbor classifier. This classifier predicts that a new example $x$ has the same label as $x$'s nearest neighbor in the training set, with distance measured by, e.g., Euclidean distance.

Results

| Data Set | $\bar{e}_S$ (%) | $\bar{e}_B$ (%) | Decrease |
|---|---|---|---|
| waveform | 26.1 | 26.1 | 0% |
| heart | 6.3 | 6.3 | 0% |
| breast cancer | 4.9 | 4.9 | 0% |
| ionosphere | 35.7 | 35.7 | 0% |
| diabetes | 16.4 | 16.4 | 0% |
| glass | 16.4 | 16.4 | 0% |

What happened?

When Does Bagging Help?

- When the learner is unstable, i.e., when a small change in the training set causes a large change in the hypothesis produced
  - Decision trees, neural networks
  - Not nearest neighbor
- Experimentally, bagging can help substantially for unstable learners, but it can somewhat degrade results for stable learners.

Boosting: Idea [Schapire & Freund Book]

- Similar to bagging, but don't always sample uniformly. Instead, adjust the resampling distribution $p_j$ over $X$ to focus attention on previously misclassified examples.
- The final classifier weights the learned classifiers, but not uniformly. Instead, the weight of classifier $d_j$ depends on its performance on the data it was trained on.

Boosting: Algorithm [$p_j \leftrightarrow D_j$; $d_j \leftrightarrow h_j$]

Repeat for $j = 1, \ldots, L$:

1. Run the learning algorithm on examples randomly drawn from training set $X$ according to distribution $p_j$ ($p_1$ = uniform). We can sample $X$ according to $p_j$ and train normally, or directly minimize the error on $X$ with respect to $p_j$.
2. The output of the learner is a binary hypothesis $d_j$.
3. Compute $\mathrm{error}_{p_j}(d_j)$, the error of $d_j$ on examples from $X$ drawn according to $p_j$ (this can be computed exactly).
4. Create $p_{j+1}$ from $p_j$ by decreasing the weight of instances that $d_j$ predicts correctly.

The final classifier is a weighted combination of $d_1, \ldots, d_L$, where $d_j$'s weight depends on its error on $X$ with respect to $p_j$.

Boosting: Algorithm Pseudocode (Fig 17.2)

Boosting: Algorithm Pseudocode (Schapire & Freund)

Given: $(x_1, y_1), \ldots, (x_m, y_m)$ where $x_i \in \mathcal{X}$, $y_i \in \{-1, +1\}$.

Initialize: $D_1(i) = 1/m$ for $i = 1, \ldots, m$.

For $t = 1, \ldots, T$:

- Train weak learner using distribution $D_t$.
- Get weak hypothesis $h_t : \mathcal{X} \to \{-1, +1\}$.
- Aim: select $h_t$ to minimize the weighted error $\epsilon_t \doteq \Pr_{i \sim D_t}[h_t(x_i) \neq y_i]$.
- Choose $\alpha_t = \frac{1}{2} \ln\left(\frac{1 - \epsilon_t}{\epsilon_t}\right)$.
- Update, for $i = 1, \ldots, m$:

  $$D_{t+1}(i) = \frac{D_t(i)}{Z_t} \times \begin{cases} e^{-\alpha_t} & \text{if } h_t(x_i) = y_i \\ e^{\alpha_t} & \text{if } h_t(x_i) \neq y_i \end{cases} = \frac{D_t(i) \exp(-\alpha_t y_i h_t(x_i))}{Z_t},$$

  where $Z_t$ is a normalization factor (chosen so that $D_{t+1}$ will be a distribution).

Output the final hypothesis:

$$H(x) = \mathrm{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right).$$
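
Steps 1, 3, and 4 of the boosting algorithm idea (drawing examples according to $p_j$, computing $\mathrm{error}_{p_j}(d_j)$ exactly, and shrinking the weights of correctly classified instances) can be sketched as below. This is a sketch under an assumption: correct examples are multiplied by $\beta_j = \epsilon_j / (1 - \epsilon_j)$. That choice is implied by the relation $\alpha_j = \frac{1}{2}\ln(1/\beta_j)$ used in the decision-stump example. After renormalizing, it gives the same distribution as the exponential update in the Schapire & Freund pseudocode, because in both cases correct examples shrink relative to mistakes by $e^{-2\alpha_j}$. The helper names are hypothetical.

```python
import math
import random


# Hypothetical helper names; illustration only.
def resample(X, p, rng):
    """Draw len(X) examples from X with replacement, example i with probability p[i]."""
    return rng.choices(X, weights=p, k=len(X))


def weighted_error(d, X, y, p):
    """error_{p_j}(d_j): total probability mass of the examples that d misclassifies."""
    return sum(p_i for x_i, y_i, p_i in zip(X, y, p) if d(x_i) != y_i)


def classifier_weight(eps):
    """alpha_j = 1/2 ln(1/beta_j) = 1/2 ln((1 - eps) / eps)."""
    return 0.5 * math.log((1 - eps) / eps)


def next_distribution(p, correct, eps):
    """Create p_{j+1} from p_j. Assumption: each correctly classified example's
    weight is multiplied by beta_j = eps / (1 - eps) (< 1 when eps < 1/2),
    and the result is renormalized."""
    beta = eps / (1 - eps)
    q = [p_i * beta if ok else p_i for p_i, ok in zip(p, correct)]
    total = sum(q)
    return [q_i / total for q_i in q]


if __name__ == "__main__":
    X = list(range(1, 11))                  # ten examples, numbered 1..10
    y = [1] * 10                            # hypothetical labels
    d = lambda x: -1 if x <= 3 else 1       # hypothetical d_1: wrong on examples 1, 2, 3
    p = [0.1] * 10                          # p_1 = uniform
    eps = weighted_error(d, X, y, p)
    p2 = next_distribution(p, [d(x) == t for x, t in zip(X, y)], eps)
    print(round(eps, 2), [round(v, 2) for v in p2])
    print(resample(X, p2, random.Random(0)))
```

With an error of 0.3 on examples 1, 2, 3, the new weights round to 0.17 for those three examples and 0.07 for the rest. This matches the $D_2$ row of the decision-stump example.
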

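The Schapire & Freund pseudocode translates almost line for line into Python. This is a minimal sketch, not a reference implementation. The weak learner is an assumed axis-aligned decision stump that directly minimizes the error weighted by $D_t$. The handling of $\epsilon_t = 0$ and $\epsilon_t \geq 1/2$, and the tie rule $\mathrm{sign}(0) = -1$, are also assumptions, since the pseudocode does not specify them.

```python
import math


def train_weighted_stump(X, y, D):
    """Assumed weak learner: the axis-aligned stump
    "predict sign if x[f] > thr else -sign" with the smallest weighted error
    sum_i D[i] * [h(x_i) != y_i] (it minimizes error w.r.t. D directly)."""
    best = None
    for f in range(len(X[0])):
        values = sorted({x[f] for x in X})
        for thr in [values[0] - 1.0] + values:
            for sign in (1, -1):
                err = sum(D[i] for i, x in enumerate(X)
                          if (sign if x[f] > thr else -sign) != y[i])
                if best is None or err < best[0]:
                    best = (err, f, thr, sign)
    _, f, thr, sign = best
    return lambda x: sign if x[f] > thr else -sign


def adaboost(X, y, T, weak_learner=train_weighted_stump):
    """AdaBoost following the Schapire & Freund pseudocode; labels y_i in {-1, +1}."""
    m = len(X)
    D = [1.0 / m] * m                                   # D_1(i) = 1/m
    hypotheses, alphas = [], []
    for _ in range(T):
        h = weak_learner(X, y, D)                       # train using distribution D_t
        eps = sum(D[i] for i in range(m) if h(X[i]) != y[i])   # epsilon_t
        if eps == 0:      # assumption: a perfect h_t is used alone (alpha_t would be infinite)
            hypotheses, alphas = [h], [1.0]
            break
        if eps >= 0.5:    # assumption: stop once h_t is no better than chance
            break
        alpha = 0.5 * math.log((1 - eps) / eps)         # alpha_t
        w = [D[i] * math.exp(-alpha * y[i] * h(X[i])) for i in range(m)]
        Z = sum(w)                                      # normalization factor Z_t
        D = [w_i / Z for w_i in w]                      # D_{t+1}
        hypotheses.append(h)
        alphas.append(alpha)

    def H(x):
        s = sum(a * h(x) for a, h in zip(alphas, hypotheses))
        return 1 if s > 0 else -1                       # sign(...); assumption: sign(0) -> -1
    return H, alphas


if __name__ == "__main__":
    # Hypothetical 1-D data: positives on an interval, which no single stump can represent.
    X = [[float(v)] for v in range(10)]
    y = [1 if 3 <= v <= 6 else -1 for v in range(10)]
    for T in (1, 2, 3):
        H, alphas = adaboost(X, y, T)
        errors = sum(H(x) != t for x, t in zip(X, y))
        print(f"T={T}: alphas={[round(a, 2) for a in alphas]}, training errors={errors}")
```

Tracing this example by hand, the training error stays at 3 for $T = 1$ and $T = 2$ and drops to 0 at $T = 3$. A weighted vote of stumps can thus represent an interval, even though no single stump can.
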
Boosting: Schapire & Freund Example: Decision Stumps

$D_j = p_j$; $h_j = d_j$; $\alpha_j = \frac{1}{2} \ln(1/\beta_j) = \frac{1}{2} \ln\left(\frac{1 - \epsilon_j}{\epsilon_j}\right)$

[Figure: the ten numbered training examples under distribution $D_1$ with decision stump $h_1$, and under $D_2$ with decision stump $h_2$]

| $i$ | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| $D_1(i)$ | **0.10** | **0.10** | **0.10** | 0.10 | 0.10 | 0.10 | 0.10 | 0.10 | 0.10 | 0.10 | $\epsilon_1 = 0.30$, $\alpha_1 \approx 0.42$ |
| $e^{-\alpha_1 y_i h_1(x_i)}$ | 1.53 | 1.53 | 1.53 | 0.65 | 0.65 | 0.65 | 0.65 | 0.65 | 0.65 | 0.65 | |
| $D_1(i)\, e^{-\alpha_1 y_i h_1(x_i)}$ | 0.15 | 0.15 | 0.15 | 0.07 | 0.07 | 0.07 | 0.07 | 0.07 | 0.07 | 0.07 | $Z_1 \approx 0.92$ |
| $D_2(i)$ | 0.17 | 0.17 | 0.17 | 0.07 | 0.07 | **0.07** | **0.07** | 0.07 | **0.07** | 0.07 | $\epsilon_2 \approx 0.21$, $\alpha_2 \approx 0.65$ |
| $e^{-\alpha_2 y_i h_2(x_i)}$ | 0.52 | 0.52 | 0.52 | 0.52 | 0.52 | 1.91 | 1.91 | 0.52 | 1.91 | 0.52 | |
| $D_2(i)\, e^{-\alpha_2 y_i h_2(x_i)}$ | 0.09 | 0.09 | 0.09 | 0.04 | 0.04 | 0.14 | 0.14 | 0.04 | 0.14 | 0.04 | $Z_2 \approx 0.82$ |
| $D_3(i)$ | 0.11 | 0.11 | 0.11 | **0.05** | **0.05** | 0.17 | 0.17 | **0.05** | 0.17 | 0.05 | $\epsilon_3 \approx 0.14$, $\alpha_3 \approx 0.92$ |
| $e^{-\alpha_3 y_i h_3(x_i)}$ | 0.40 | 0.40 | 0.40 | 2.52 | 2.52 | 0.40 | 0.40 | 2.52 | 0.40 | 0.40 | |
| $D_3(i)\, e^{-\alpha_3 y_i h_3(x_i)}$ | 0.04 | 0.04 | 0.04 | 0.11 | 0.11 | 0.07 | 0.07 | 0.11 | 0.07 | 0.02 | $Z_3 \approx 0.69$ |

Calculations are shown for the ten examples as numbered in the figure. Examples on which hypothesis $h_t$ makes a mistake are shown in bold in the rows marked $D_t$.

[Figure: distribution $D_3$ with decision stump $h_3$]

Boosting: Example (cont'd)

$$H_{\text{final}}(x) = \mathrm{sign}\left(0.42\, h_1(x) + 0.65\, h_2(x) + 0.92\, h_3(x)\right)$$

The combined classifier is not in the original hypothesis class! In this case, at least two of the three hypotheses must predict $+1$ for the weighted sum to exceed 0, since even the two smallest weights outweigh the largest ($0.42 + 0.65 = 1.07 > 0.92$).

Boosting: Experimental Results

Scatter plot: percent classification error of non-boosted vs. boosted learners on 27 learning tasks.

[Figure: panels comparing Stumps with Boosting stumps, and C4.5 with Boosting C4.5]

Boosting: Experimental Results (cont'd)

[Figure: panels comparing Boosting C4.5 with Boosting stumps, and C4.5 with Boosting stumps]
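
The worked decision-stump example can be replayed from the mistake sets alone. A mistake is any column whose factor $e^{-\alpha_t y_i h_t(x_i)}$ exceeds 1: examples 1, 2, 3 for $h_1$; 6, 7, 9 for $h_2$; and 4, 5, 8 for $h_3$. This is a hedged Python sketch (the names `MISTAKES` and `replay` are hypothetical). Rounded to two decimals, its output should reproduce $\epsilon_t$, $\alpha_t$, and $Z_t$ from the table. It also illustrates the standard identity $Z_t = \epsilon_t e^{\alpha_t} + (1 - \epsilon_t) e^{-\alpha_t} = 2\sqrt{\epsilon_t (1 - \epsilon_t)}$ for this choice of $\alpha_t$.

```python
import math

# Hypothetical name: example numbers misclassified by h_1, h_2, h_3, read off the
# table (the columns whose factor exp(-alpha_t * y_i * h_t(x_i)) exceeds 1).
MISTAKES = [{1, 2, 3}, {6, 7, 9}, {4, 5, 8}]


def replay(mistakes, m=10):
    """Recompute eps_t, alpha_t, Z_t and the final distribution from mistake sets alone."""
    D = {i: 1.0 / m for i in range(1, m + 1)}              # D_1(i) = 1/m
    rounds = []
    for wrong in mistakes:
        eps = sum(D[i] for i in wrong)                     # weighted error eps_t
        alpha = 0.5 * math.log((1 - eps) / eps)            # alpha_t
        # y_i h_t(x_i) is -1 on a mistake and +1 otherwise
        w = {i: D[i] * math.exp(alpha if i in wrong else -alpha) for i in D}
        Z = sum(w.values())                                # Z_t
        rounds.append((eps, alpha, Z))
        D = {i: w_i / Z for i, w_i in w.items()}           # D_{t+1}
    return rounds, D


if __name__ == "__main__":
    rounds, _ = replay(MISTAKES)
    for t, (eps, alpha, Z) in enumerate(rounds, start=1):
        print(f"t={t}: eps={eps:.2f}  alpha={alpha:.2f}  Z={Z:.2f}")
```
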

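The claim that, with weights 0.42, 0.65, and 0.92, at least two of the three hypotheses must predict $+1$ for the weighted sum to be positive can be checked exhaustively over all eight combinations of predictions. In this small Python sketch (the function names are hypothetical), the weighted vote agrees with an unweighted majority vote for these particular weights. A different choice of weights would not necessarily give the same result.

```python
from itertools import product

ALPHAS = (0.42, 0.65, 0.92)   # alpha_1, alpha_2, alpha_3 from the worked example


def weighted_vote(preds, alphas=ALPHAS):
    """H(x) = sign(sum_t alpha_t h_t(x)) given the predictions h_t(x) in {-1, +1}."""
    s = sum(a * h for a, h in zip(alphas, preds))
    return 1 if s > 0 else -1


def majority_vote(preds):
    """Unweighted majority of an odd number of {-1, +1} predictions."""
    return 1 if sum(preds) > 0 else -1


if __name__ == "__main__":
    for preds in product((-1, 1), repeat=3):
        print(preds, weighted_vote(preds), majority_vote(preds))
```
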
