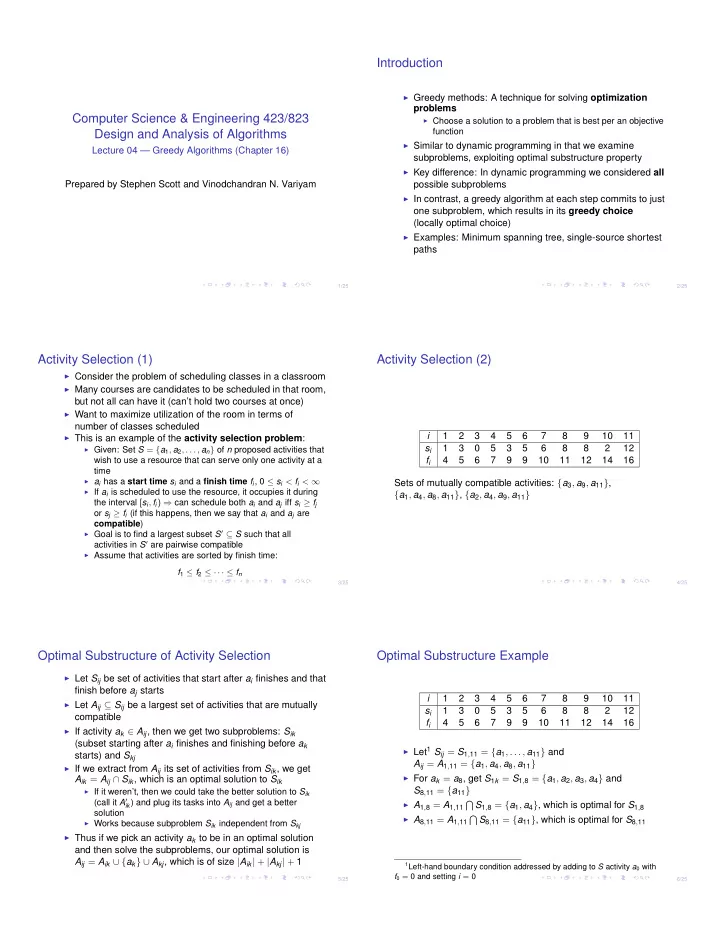

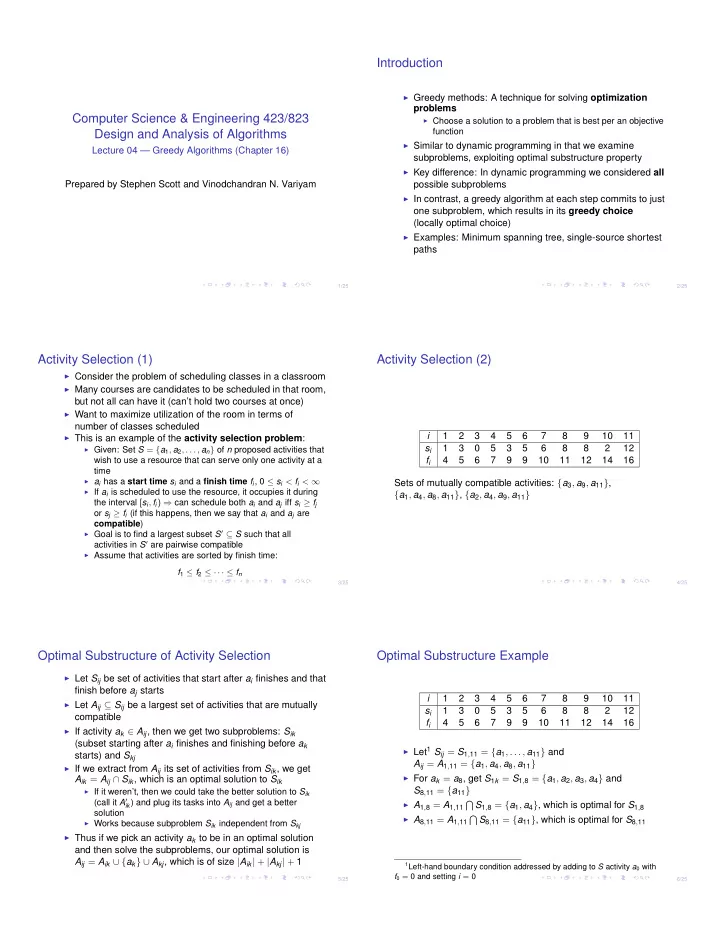

Computer Science & Engineering 423/823
Design and Analysis of Algorithms
Lecture 04: Greedy Algorithms (Chapter 16)
Prepared by Stephen Scott and Vinodchandran N. Variyam

Introduction

- Greedy methods: a technique for solving optimization problems
  - Choose a solution to a problem that is best according to an objective function
- Similar to dynamic programming in that we examine subproblems, exploiting the optimal substructure property
- Key difference: in dynamic programming we considered all possible subproblems
- In contrast, a greedy algorithm at each step commits to just one subproblem: the one that results from its greedy choice (the locally optimal choice)
- Examples: minimum spanning tree, single-source shortest paths

Activity Selection (1)

- Consider the problem of scheduling classes in a classroom
- Many courses are candidates to be scheduled in that room, but not all can have it (the room can't hold two courses at once)
- Want to maximize utilization of the room in terms of the number of classes scheduled
- This is an example of the activity selection problem:
  - Given: a set $S = \{a_1, a_2, \ldots, a_n\}$ of $n$ proposed activities that wish to use a resource that can serve only one activity at a time
  - $a_i$ has a start time $s_i$ and a finish time $f_i$, with $0 \le s_i < f_i < \infty$
  - If $a_i$ is scheduled to use the resource, it occupies it during the interval $[s_i, f_i)$ $\Rightarrow$ we can schedule both $a_i$ and $a_j$ iff $s_i \ge f_j$ or $s_j \ge f_i$ (if this happens, we say that $a_i$ and $a_j$ are compatible)
  - Goal is to find a largest subset $S' \subseteq S$ such that all activities in $S'$ are pairwise compatible
  - Assume that activities are sorted by finish time: $f_1 \le f_2 \le \cdots \le f_n$

Activity Selection (2)

| $i$   | 1 | 2 | 3 | 4 | 5 | 6 | 7  | 8  | 9  | 10 | 11 |
|-------|---|---|---|---|---|---|----|----|----|----|----|
| $s_i$ | 1 | 3 | 0 | 5 | 3 | 5 | 6  | 8  | 8  | 2  | 12 |
| $f_i$ | 4 | 5 | 6 | 7 | 9 | 9 | 10 | 11 | 12 | 14 | 16 |

Sets of mutually compatible activities: $\{a_3, a_9, a_{11}\}$, $\{a_1, a_4, a_8, a_{11}\}$, $\{a_2, a_4, a_9, a_{11}\}$

Optimal Substructure of Activity Selection

- Let $S_{ij}$ be the set of activities that start after $a_i$ finishes and that finish before $a_j$ starts
- Let $A_{ij} \subseteq S_{ij}$ be a largest set of mutually compatible activities in $S_{ij}$
- If activity $a_k \in A_{ij}$, then we get two subproblems: $S_{ik}$ (the subset starting after $a_i$ finishes and finishing before $a_k$ starts) and $S_{kj}$
- If we extract from $A_{ij}$ its set of activities from $S_{ik}$, we get $A_{ik} = A_{ij} \cap S_{ik}$, which is an optimal solution to $S_{ik}$
  - If it weren't, then we could take a better solution to $S_{ik}$ (call it $A'_{ik}$), substitute it for $A_{ik}$ inside $A_{ij}$, and get a larger compatible set, contradicting the optimality of $A_{ij}$
  - Works because subproblem $S_{ik}$ is independent of $S_{kj}$
- Thus if we pick an activity $a_k$ to be in an optimal solution and then solve the subproblems, our optimal solution is $A_{ij} = A_{ik} \cup \{a_k\} \cup A_{kj}$, which is of size $|A_{ik}| + |A_{kj}| + 1$

Optimal Substructure Example

Using the instance from the table above:

- Let¹ $S_{ij} = S_{1,11} = \{a_1, \ldots, a_{11}\}$ and $A_{ij} = A_{1,11} = \{a_1, a_4, a_8, a_{11}\}$
- For $a_k = a_8$, get $S_{1k} = S_{1,8} = \{a_1, a_2, a_3, a_4\}$ and $S_{8,11} = \{a_{11}\}$
- $A_{1,8} = A_{1,11} \cap S_{1,8} = \{a_1, a_4\}$, which is optimal for $S_{1,8}$
- $A_{8,11} = A_{1,11} \cap S_{8,11} = \{a_{11}\}$, which is optimal for $S_{8,11}$

¹ The left-hand boundary condition is addressed by adding to $S$ an activity $a_0$ with $f_0 = 0$ and setting $i = 0$
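The optimal-substructure argument (pick some $a_k \in S_{ij}$, solve $S_{ik}$ and $S_{kj}$, add 1) can be turned directly into a memoized search that tries every $a_k$. The Python sketch below does that on the instance from the table. It uses the $a_0$ sentinel from the footnote and, as an assumption of this sketch, a hypothetical right-hand sentinel $a_{n+1}$ with $s_{n+1} = \infty$; the function names `compatible`, `all_compatible`, and `max_compatible` are ours.

```python
from functools import lru_cache


def compatible(s, f, i, j):
    """a_i and a_j are compatible iff their half-open intervals [s, f) do not overlap."""
    return s[i] >= f[j] or s[j] >= f[i]


def all_compatible(s, f, activities):
    """True if every pair of the given activity indices is compatible."""
    acts = list(activities)
    return all(compatible(s, f, acts[x], acts[y])
               for x in range(len(acts)) for y in range(x + 1, len(acts)))


def max_compatible(s, f):
    """Largest compatible subset via A_ij = A_ik ∪ {a_k} ∪ A_kj.

    s and f are 1-based (index 0 unused) and sorted by finish time.
    Sentinels (assumption): a_0 with f_0 = 0, as in the footnote, and a
    hypothetical a_{n+1} with s_{n+1} = infinity, so S_{0,n+1} is the whole set.
    Returns (size, tuple of activity indices).
    """
    n = len(s) - 1
    start = [0] + list(s[1:]) + [float("inf")]
    finish = [0] + list(f[1:]) + [float("inf")]

    @lru_cache(maxsize=None)
    def best(i, j):
        result = (0, ())  # S_ij empty -> size 0
        for k in range(1, n + 1):
            # a_k is in S_ij: starts after a_i finishes, finishes before a_j starts
            if start[k] >= finish[i] and finish[k] <= start[j]:
                left, right = best(i, k), best(k, j)
                size = left[0] + 1 + right[0]
                if size > result[0]:
                    result = (size, left[1] + (k,) + right[1])
        return result

    return best(0, n + 1)


# Instance from the slides (index 0 is a placeholder so s[i], f[i] match a_i).
s_times = [None, 1, 3, 0, 5, 3, 5, 6, 8, 8, 2, 12]
f_times = [None, 4, 5, 6, 7, 9, 9, 10, 11, 12, 14, 16]
size, chosen = max_compatible(s_times, f_times)
print(size, chosen)
```

The reported size is 4, the same as $|A_{1,11}| = |\{a_1, a_4, a_8, a_{11}\}|$. Memoizing over all $(i, j)$ pairs and scanning every $k$ makes this roughly cubic in $n$, which is the cost of trying all subproblems.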

Recursive Definition

- Let $c[i, j]$ be the size of an optimal solution to $S_{ij}$

$$
c[i, j] =
\begin{cases}
0 & \text{if } S_{ij} = \emptyset \\
\max_{a_k \in S_{ij}} \{ c[i, k] + c[k, j] + 1 \} & \text{if } S_{ij} \neq \emptyset
\end{cases}
$$

- In dynamic programming, we need to try all $a_k$ since we don't know which one is the best choice...
- ...or do we?

Greedy Choice (1)

- What if, instead of trying all activities $a_k$, we simply chose the one with the earliest finish time of all those still compatible with the scheduled ones?
- This is a greedy choice in that it maximizes the amount of time left over to schedule other activities
- Let $S_k = \{a_i \in S : s_i \ge f_k\}$ be the set of activities that start after $a_k$ finishes
- If we greedily choose $a_1$ first (the activity with the earliest finish time), then $S_1$ is the only subproblem to solve

Greedy Choice (2)

- Theorem: Consider any nonempty subproblem $S_k$ and let $a_m$ be an activity in $S_k$ with the earliest finish time. Then $a_m$ is in some maximum-size subset of mutually compatible activities of $S_k$
- Proof (by construction):
  - Let $A_k$ be an optimal solution to $S_k$ and let $a_j$ have the earliest finish time of all activities in $A_k$
  - If $a_j = a_m$, we're done
  - If $a_j \neq a_m$, then define $A'_k = (A_k \setminus \{a_j\}) \cup \{a_m\}$
  - Activities in $A'_k$ are mutually compatible since those in $A_k$ are mutually compatible and $f_m \le f_j$ (every other activity in $A_k$ starts at or after $f_j$, hence at or after $f_m$)
  - Since $|A'_k| = |A_k|$, we get that $A'_k$ is a maximum-size subset of mutually compatible activities of $S_k$ that includes $a_m$
- What this means is that there exists an optimal solution that uses the greedy choice

Greedy-Activity-Selector(s, f, n)

    A = {a_1}
    k = 1
    for m = 2 to n do
        if s[m] ≥ f[k] then
            A = A ∪ {a_m}
            k = m
    end
    return A

What is the time complexity?

Example

[Figure omitted]

Greedy vs Dynamic Programming (1)

- Like dynamic programming, greedy leverages a problem's optimal substructure property
- When can we get away with a greedy algorithm instead of DP?
- When we can argue that the greedy choice is part of an optimal solution, implying that we need not explore all subproblems
- Example: the knapsack problem
  - There are $n$ items that a thief can steal, item $i$ weighing $w_i$ pounds and worth $v_i$ dollars
  - The thief's goal is to steal a set of items that weighs at most $W$ pounds and maximizes total value
  - In the 0-1 knapsack problem, each item must be taken in its entirety (e.g., gold bars)
  - In the fractional knapsack problem, the thief can take part of an item and get a proportional amount of its value (e.g., gold dust)
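Activity selection is a case where that argument goes through: the theorem in Greedy Choice (2) shows that the earliest-finishing compatible activity belongs to some optimal solution, so one pass suffices. Below is a minimal Python sketch of GREEDY-ACTIVITY-SELECTOR, assuming (as the slides do) that activities are already sorted by finish time. It uses 0-based lists internally but reports 1-based activity numbers to match $a_1, \ldots, a_n$; the name `greedy_activity_selector` is ours.

```python
def greedy_activity_selector(s, f):
    """Return 1-based indices of a maximum-size set of compatible activities.

    Assumes f[0] <= f[1] <= ... (sorted by finish time); s and f are 0-based lists.
    """
    n = len(s)
    if n == 0:
        return []
    chosen = [1]          # A = {a_1}: the earliest-finishing activity
    k = 0                 # 0-based index of the most recently added activity
    for m in range(1, n):
        if s[m] >= f[k]:  # a_m starts after a_k finishes, so it is compatible
            chosen.append(m + 1)
            k = m
    return chosen


s = [1, 3, 0, 5, 3, 5, 6, 8, 8, 2, 12]
f = [4, 5, 6, 7, 9, 9, 10, 11, 12, 14, 16]
print(greedy_activity_selector(s, f))  # [1, 4, 8, 11]
```

On the instance from the table, the greedy pass returns $\{a_1, a_4, a_8, a_{11}\}$, the same optimal set used in the Optimal Substructure Example. The loop examines each remaining activity exactly once.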

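To contrast the two knapsack variants, here is a sketch under stated assumptions: weights and capacity are positive integers (needed for the $O(nW)$ table), and the three-item instance with $W = 50$ is illustrative, not taken from the slides. `fractional_knapsack` takes items in descending $v_i / w_i$ order and splits the last one; `greedy_01_by_ratio` applies the same order to the 0-1 variant; `knapsack_01` is the standard $O(nW)$ dynamic program. All function names are ours.

```python
from fractions import Fraction


def by_ratio(weights, values):
    """Item indices in descending order of value per pound, v_i / w_i."""
    return sorted(range(len(weights)),
                  key=lambda i: Fraction(values[i], weights[i]), reverse=True)


def fractional_knapsack(weights, values, capacity):
    """Greedy: take items in ratio order, splitting the last one to fill the knapsack."""
    remaining, total = capacity, Fraction(0)
    for i in by_ratio(weights, values):
        if remaining == 0:
            break
        take = min(weights[i], remaining)          # whole item or the part that fits
        total += Fraction(values[i], weights[i]) * take
        remaining -= take
    return total


def greedy_01_by_ratio(weights, values, capacity):
    """The same greedy order applied to 0-1 knapsack (items whole); not optimal in general."""
    remaining, total = capacity, 0
    for i in by_ratio(weights, values):
        if weights[i] <= remaining:
            total += values[i]
            remaining -= weights[i]
    return total


def knapsack_01(weights, values, capacity):
    """O(nW) dynamic program: best[c] = max value using total weight <= c."""
    best = [0] * (capacity + 1)
    for w, v in zip(weights, values):
        for c in range(capacity, w - 1, -1):       # downward: each item used at most once
            best[c] = max(best[c], best[c - w] + v)
    return best[capacity]


# Illustrative instance (not from the slides): W = 50.
weights, values, W = [10, 20, 30], [60, 100, 120], 50
print(fractional_knapsack(weights, values, W))  # 240
print(greedy_01_by_ratio(weights, values, W))   # 160
print(knapsack_01(weights, values, W))          # 220
```

Here the ratio-greedy 0-1 answer (160) falls short of the dynamic-programming optimum (220), while the fractional greedy reaches 240 by taking two-thirds of the last item. The DP table has $W + 1$ entries, which is why its running time depends on the numeric value of $W$.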
Greedy vs Dynamic Programming (2)

- There's a greedy algorithm for the fractional knapsack problem
  - Sort the items by $v_i / w_i$ and choose the items in descending order of that ratio
  - Has the greedy choice property, since any optimal solution lacking the greedy choice can have the greedy choice swapped in
  - Works because one can always completely fill the knapsack at the last step (by taking only part of the last item)
- The greedy strategy does not work for 0-1 knapsack, but there is an $O(nW)$-time dynamic programming algorithm
  - Note that this time complexity is pseudopolynomial
  - The decision problem is NP-complete

Greedy vs Dynamic Programming (3)

[Figure panels: Problem instance; 0-1 (greedy is suboptimal); Fractional]

Huffman Coding

- Interested in encoding a file of symbols from some alphabet
- Want to minimize the size of the file, based on the frequencies of the symbols
- A fixed-length code uses $\lceil \log_2 n \rceil$ bits per symbol, where $n$ is the size of the alphabet $C$
- A variable-length code uses fewer bits for more frequent symbols

|                          | a   | b   | c   | d   | e    | f    |
|--------------------------|-----|-----|-----|-----|------|------|
| Frequency (in thousands) | 45  | 13  | 12  | 16  | 9    | 5    |
| Fixed-length codeword    | 000 | 001 | 010 | 011 | 100  | 101  |
| Variable-length codeword | 0   | 101 | 100 | 111 | 1101 | 1100 |

The fixed-length code uses 300k bits; the variable-length code uses 224k bits.

Huffman Coding (2)

Can represent any encoding as a binary tree.

If $c.\mathit{freq}$ is the frequency of character $c \in C$ and $d_T(c)$ is the depth of $c$'s leaf in tree $T$ (equivalently, the length of $c$'s codeword), then the cost of tree $T$ is

$$
B(T) = \sum_{c \in C} c.\mathit{freq} \cdot d_T(c)
$$

Algorithm for Optimal Codes

- Can get an optimal code by finding an appropriate prefix code, where no codeword is a prefix of another
- An optimal code also corresponds to a full binary tree
- Huffman's algorithm builds an optimal code by greedily building its tree
  - Given alphabet $C$ (which corresponds to the leaves), find the two least frequent symbols and merge them into a subtree
  - The frequency of the new subtree is the sum of the frequencies of its children
  - Then add the subtree back into the set for future consideration

Huffman(C)

    n = |C|
    Q = C                        // min-priority queue keyed on freq
    for i = 1 to n − 1 do
        allocate node z
        z.left = x = EXTRACT-MIN(Q)
        z.right = y = EXTRACT-MIN(Q)
        z.freq = x.freq + y.freq
        INSERT(Q, z)
    end
    return EXTRACT-MIN(Q)        // return root

Time complexity: $n - 1$ iterations, $O(\log n)$ time per iteration, total $O(n \log n)$
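The HUFFMAN procedure maps almost line for line onto Python's `heapq` module, used here as the min-priority queue. The sketch below is one possible rendering, not the lecture's implementation: it assumes a non-empty frequency dictionary whose symbols are not tuples (internal nodes are represented as `(left, right)` pairs), and it uses an `itertools.count` tiebreaker so that equal frequencies never force a comparison between subtrees. The names `huffman_codes` and `cost` are ours.

```python
import heapq
from itertools import count


def huffman_codes(freq):
    """Return {symbol: codeword} for a Huffman tree built from freq (non-empty dict).

    Leaves are the symbols themselves (assumed not to be tuples); an internal
    node z is the pair (z.left, z.right). The counter breaks frequency ties so
    heapq never compares two subtrees directly.
    """
    tiebreak = count()
    queue = [(w, next(tiebreak), sym) for sym, w in freq.items()]
    heapq.heapify(queue)                                  # Q = C, a min-priority queue
    for _merge in range(len(freq) - 1):                   # n - 1 iterations
        fx, _, x = heapq.heappop(queue)                   # x = EXTRACT-MIN(Q)
        fy, _, y = heapq.heappop(queue)                   # y = EXTRACT-MIN(Q)
        heapq.heappush(queue, (fx + fy, next(tiebreak), (x, y)))  # INSERT(Q, z)
    root = queue[0][2]

    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):                       # internal: left = 0, right = 1
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:
            codes[node] = prefix or "0"                   # one-symbol alphabet gets "0"

    walk(root, "")
    return codes


def cost(freq, codes):
    """B(T) = sum of c.freq * d_T(c); a leaf's depth equals its codeword length."""
    return sum(freq[c] * len(codes[c]) for c in freq)


freq = {"a": 45, "b": 13, "c": 12, "d": 16, "e": 9, "f": 5}   # in thousands
codes = huffman_codes(freq)
print(cost(freq, codes))   # 224, i.e., 224k bits
```

The individual codewords depend on how ties and left/right children are assigned, so they may differ from the variable-length code in the table. The total cost, however, is the same 224k bits, and every codeword produced is prefix-free because symbols sit only at leaves.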
