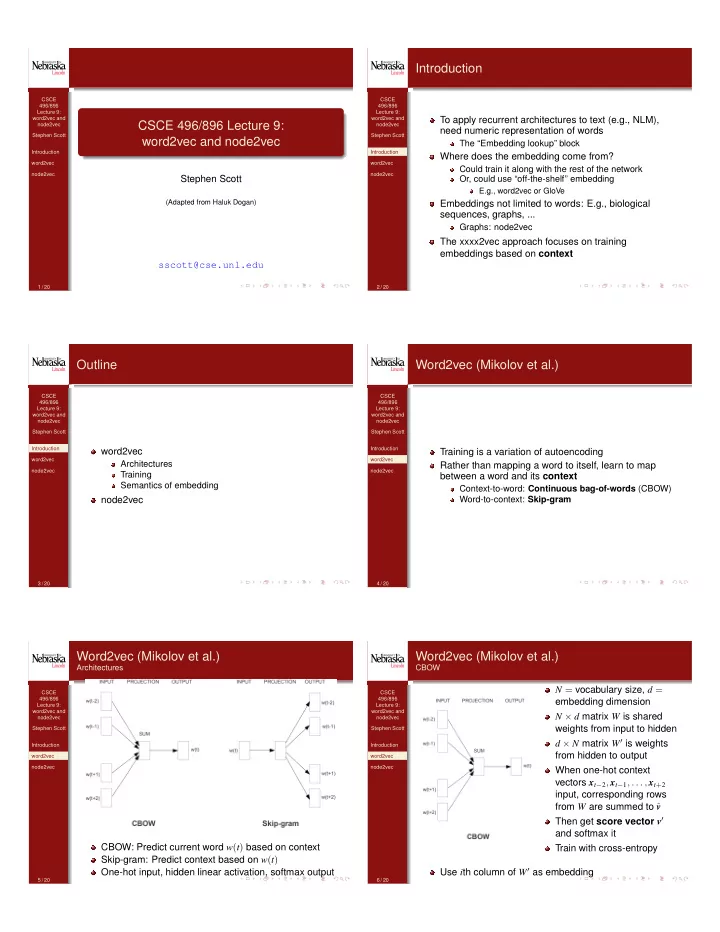

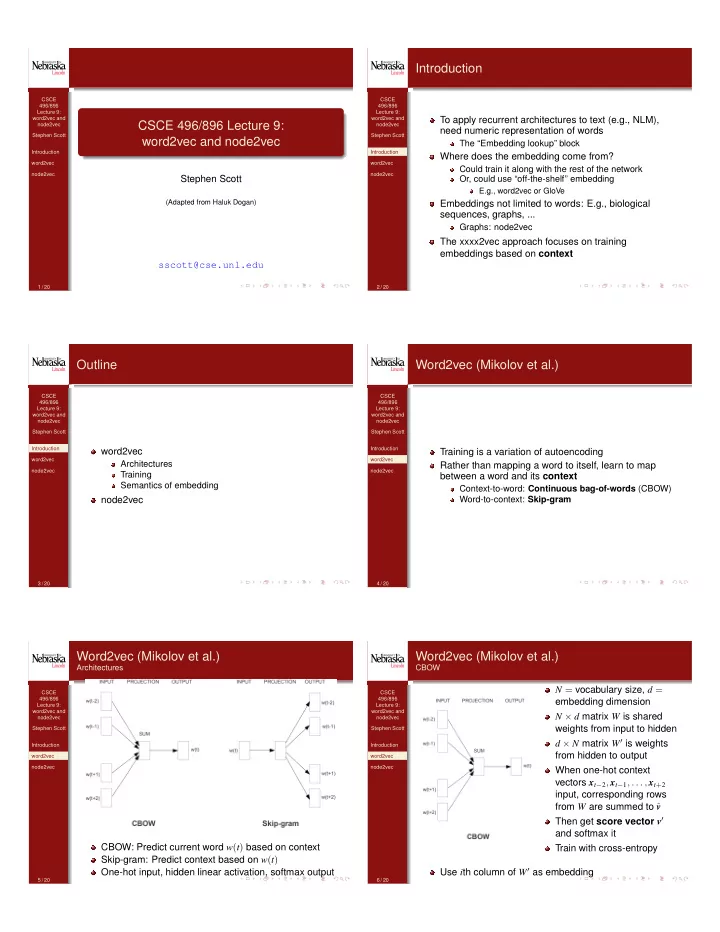

CSCE 496/896 Lecture 9: word2vec and node2vec

Stephen Scott (Adapted from Haluk Dogan), sscott@cse.unl.edu

Introduction

- To apply recurrent architectures to text (e.g., NLM), need numeric representation of words
  - The “Embedding lookup” block
- Where does the embedding come from?
  - Could train it along with the rest of the network
  - Or, could use “off-the-shelf” embedding, e.g., word2vec or GloVe
- Embeddings not limited to words: e.g., biological sequences, graphs, ...
  - Graphs: node2vec
- The xxxx2vec approach focuses on training embeddings based on context

Outline

- Introduction
- word2vec
  - Architectures
  - Training
  - Semantics of embedding
- node2vec

Word2vec (Mikolov et al.)

- Training is a variation of autoencoding
- Rather than mapping a word to itself, learn to map between a word and its context
  - Context-to-word: Continuous bag-of-words (CBOW)
  - Word-to-context: Skip-gram

Word2vec (Mikolov et al.): Architectures

- CBOW: Predict current word $w(t)$ based on context
- Skip-gram: Predict context based on $w(t)$
- One-hot input, hidden linear activation, softmax output

Word2vec (Mikolov et al.): CBOW

- $N$ = vocabulary size, $d$ = embedding dimension
- $N \times d$ matrix $W$ is shared weights from input to hidden
- $d \times N$ matrix $W'$ is weights from hidden to output
- When one-hot context vectors $x_{t-2}, x_{t-1}, \ldots, x_{t+2}$ are input, the corresponding rows from $W$ are summed to $\hat{v}$
- Then get score vector $v'$ and softmax it
- Train with cross-entropy
- Use $i$th column of $W'$ as embedding
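The CBOW forward pass described above can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the toy weights, the names `cbow_forward` and `W_out` (standing in for $W'$), and computing the score vector as $W'^{\top}\hat{v}$ are illustrative choices, not taken from the slides.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cbow_forward(W, W_out, context_ids, target_id):
    """Return (probabilities, cross-entropy loss) for one CBOW example.

    W is N x d (input to hidden), W_out is d x N (hidden to output).
    """
    d = len(W[0])
    n = len(W_out[0])
    # One-hot inputs simply select rows of W; sum those rows to get v_hat.
    v_hat = [sum(W[i][k] for i in context_ids) for k in range(d)]
    # Score of word j is column j of W_out dotted with v_hat.
    scores = [sum(v_hat[k] * W_out[k][j] for k in range(d)) for j in range(n)]
    probs = softmax(scores)
    # Cross-entropy against the one-hot target word.
    loss = -math.log(probs[target_id])
    return probs, loss

# Toy example (assumed values): N = 4 words, d = 2 dimensions.
W = [[0.1, 0.2], [0.0, -0.1], [0.3, 0.1], [-0.2, 0.4]]
W_out = [[0.5, -0.3, 0.2, 0.0], [0.1, 0.4, -0.2, 0.3]]
probs, loss = cbow_forward(W, W_out, context_ids=[0, 1, 3], target_id=2)
```

Training would then backpropagate `loss` into both matrices; that step is omitted here.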

Word2vec (Mikolov et al.): Skip-gram

- Symmetric to CBOW: use $i$th row of $W$ as embedding
- Goal is to maximize $P(w_{t-2}, w_{t-1}, w_{t+1}, w_{t+2} \mid w_t)$
- Same as minimizing $-\log P(w_{t-2}, w_{t-1}, w_{t+1}, w_{t+2} \mid w_t)$
- Assume words are independent given $w_t$:

$$P(w_{t-2}, w_{t-1}, w_{t+1}, w_{t+2} \mid w_t) = \prod_{j \in \{-2, -1, 1, 2\}} P(w_{t+j} \mid w_t)$$

Word2vec (Mikolov et al.): Skip-gram

- Equivalent to maximizing log probability

$$\sum_{j \in \{-c, -(c-1), \ldots, (c-1), c\},\; j \neq 0} \log P(w_{t+j} \mid w_t)$$

- Softmax output and linear activation imply

$$P(w_O \mid w_I) = \frac{\exp\left({v'_{w_O}}^{\top} v_{w_I}\right)}{\sum_{i=1}^{N} \exp\left({v'_i}^{\top} v_{w_I}\right)}$$

  where $v_{w_I}$ is $w_I$’s (input word) row from $W$ and $v'_i$ is $w_i$’s (output word) column from $W'$
- I.e., trying to maximize dot product (similarity) between words in same context
- Problem: $N$ is big ($\approx 10^5$–$10^7$)

Word2vec (Mikolov et al.): Skip-gram

- Speed up evaluation via negative sampling
- Update the weight of each target word and only a small number (5–20) of negative words
  - I.e., do not update for all $N$ words
- To estimate $P(w_O \mid w_I)$, use

$$\log \sigma\left({v'_{w_O}}^{\top} v_{w_I}\right) + \sum_{i=1}^{k} \mathbb{E}_{w_i \sim P_n(w)}\left[\log \sigma\left(-{v'_{w_i}}^{\top} v_{w_I}\right)\right]$$

- I.e., learn to distinguish target word $w_O$ from words drawn from noise distribution

$$P_n(w_i) = \frac{f(w_i)^{3/4}}{\sum_{j=1}^{N} f(w_j)^{3/4}},$$

  where $f(w_i)$ is frequency of word $w_i$ in corpus
- I.e., $P_n(w_i)$ is a unigram distribution

Word2vec (Mikolov et al.): Semantics

- [Figure] Distances between countries and capitals similar
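The negative-sampling estimate from the Skip-gram slides can be sketched as follows. This is a minimal sketch under assumptions: the expectation is replaced by $k$ words actually drawn from $P_n$, the word counts and vectors are made up, and the function names are hypothetical. A drawn negative may coincide with the target word here; real implementations often resample in that case.

```python
import math
import random

def noise_distribution(freq):
    """P_n(w) proportional to f(w)^(3/4), normalized over the vocabulary."""
    weights = {w: f ** 0.75 for w, f in freq.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}

def log_sigmoid(x):
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def neg_sampling_objective(v_in, v_out_target, v_out_negatives):
    """log sigma(v'_O . v_I) + sum over sampled negatives of log sigma(-v'_i . v_I)."""
    value = log_sigmoid(dot(v_out_target, v_in))
    for v_neg in v_out_negatives:
        value += log_sigmoid(-dot(v_neg, v_in))
    return value

# Toy corpus counts (assumed): 16^(3/4) = 8 and 1^(3/4) = 1.
p_n = noise_distribution({"the": 16, "cat": 1})

# Draw k = 5 negative words from the noise distribution.
rng = random.Random(0)
words = list(p_n)
negatives = rng.choices(words, weights=[p_n[w] for w in words], k=5)

# Toy output vectors (assumed) for the drawn words.
out_vec = {"the": [0.1, 0.3], "cat": [-0.4, 0.2]}
obj = neg_sampling_objective([0.5, -0.2], [0.6, 0.1],
                             [out_vec[w] for w in negatives])
```

Only the target vector and the $k$ sampled vectors appear in the objective, so a gradient step touches $k + 1$ output vectors instead of all $N$.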
Word2vec (Mikolov et al.): Semantics

- Analogies: $a$ is to $b$ as $c$ is to $d$
- Given normalized embeddings $x_a$, $x_b$, and $x_c$, compute $y = x_b - x_a + x_c$
- Find $d$ maximizing cosine: $x_d\, y^{\top} / (\lVert x_d \rVert\, \lVert y \rVert)$

Node2vec (Grover and Leskovec, 2016)

- Word2vec’s approach generalizes beyond text
- All we need to do is represent the context of an instance to embed together instances with similar contexts
  - E.g., biological sequences, nodes in a graph
- Node2vec defines its context for a node based on its local neighborhood, role in the graph, etc.
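The word2vec analogy query ($a$ is to $b$ as $c$ is to $d$) can be sketched as below. This is a minimal sketch: the four-dimensional toy vectors are hand-made for illustration rather than trained embeddings, and excluding the three query words from the candidates is a common convention assumed here, not something the slides state.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(emb, a, b, c):
    """Return the word d whose embedding has the highest cosine with y = x_b - x_a + x_c."""
    y = [xb - xa + xc for xa, xb, xc in zip(emb[a], emb[b], emb[c])]
    # Assumption: the query words themselves are not valid answers.
    candidates = [w for w in emb if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(emb[w], y))

# Hand-made toy vectors (assumed); axes loosely read as male, female, royal, fruit.
raw = {
    "man":   [1.0, 0.0, 0.0, 0.0],
    "woman": [0.0, 1.0, 0.0, 0.0],
    "king":  [1.0, 0.0, 1.0, 0.0],
    "queen": [0.0, 1.0, 1.0, 0.0],
    "apple": [0.0, 0.0, 0.0, 1.0],
}
emb = {w: normalize(v) for w, v in raw.items()}
answer = analogy(emb, "man", "woman", "king")  # "queen" for these toy vectors
```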

Node2vec (Grover and Leskovec, 2016): Notation

- $G = (V, E)$
- $A$ is a $|V| \times |V|$ adjacency matrix
- $f : V \to \mathbb{R}^d$ is a mapping function from individual nodes to feature representations
  - $|V| \times d$ matrix
- $N_S(u) \subset V$ denotes a neighborhood of node $u$ generated through a neighborhood sampling strategy $S$
- Objective: Preserve local neighborhoods of nodes

Node2vec (Grover and Leskovec, 2016)

Organization of nodes is based on:

- Homophily: Nodes that are highly interconnected and cluster together should embed near each other
- Structural roles: Nodes with similar roles in the graph (e.g., hubs) should embed near each other
- [Figure] $u$ and $s_1$ belong to the same community of nodes; $u$ and $s_6$, in two distinct communities, share the same structural role of a hub node

Goal:

- Embed nodes from the same network community closely together
- Nodes that share similar roles have similar embeddings

node2vec

Key Contribution: Defining a flexible notion of a node’s network neighborhood.

1. BFS: role of the vertex
   - far apart from each other but share similar kind of vertices
2. DFS: community
   - reachability/closeness of the two nodes
   - my friend’s friend’s friend has a higher chance to belong to the same community as me

node2vec: Objective function

$$\max_f \sum_{u \in V} \log P(N_S(u) \mid f(u))$$

Assumptions:

- Conditional independence:

$$P(N_S(u) \mid f(u)) = \prod_{n_i \in N_S(u)} P(n_i \mid f(u))$$

- Symmetry in feature space:

$$P(n_i \mid f(u)) = \frac{\exp(f(n_i) \cdot f(u))}{\sum_{v \in V} \exp(f(v) \cdot f(u))}$$

Objective function simplifies to:

$$\max_f \sum_{u \in V} \left[ -\log Z_u + \sum_{n_i \in N_S(u)} f(n_i) \cdot f(u) \right]$$

Node2vec (Grover and Leskovec, 2016): Neighborhood Sampling

Given a source node $u$, we simulate a random walk of fixed length $\ell$:

$$c_0 = u, \qquad P(c_i = x \mid c_{i-1} = v) = \begin{cases} \dfrac{\pi_{vx}}{Z} & \text{if } (v, x) \in E \\ 0 & \text{otherwise} \end{cases}$$

- $\pi_{vx}$ is the unnormalized transition probability
- $Z$ is the normalization constant
- 2nd order Markovian

Node2vec (Grover and Leskovec, 2016): Neighborhood Sampling

Search bias $\alpha$: $\pi_{vx} = \alpha_{pq}(t, x)\, w_{vx}$ where

$$\alpha_{pq}(t, x) = \begin{cases} \dfrac{1}{p} & \text{if } d_{tx} = 0 \\ 1 & \text{if } d_{tx} = 1 \\ \dfrac{1}{q} & \text{if } d_{tx} = 2 \end{cases}$$

Return parameter $p$: Controls the likelihood of immediately revisiting a node in the walk

- If $p > \max(q, 1)$
  - less likely to sample an already visited node
  - avoids 2-hop redundancy in sampling
- If $p < \min(q, 1)$
  - backtrack a step
  - keep the walk local
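The search bias and the resulting transition probabilities can be sketched as below. This is a minimal sketch, assuming (as in the node2vec paper) that $t$ is the node visited just before $v$ and $d_{tx}$ is the shortest-path distance between $t$ and $x$. The adjacency-dict graph representation and the names `search_bias` and `transition_probs` are illustrative, not from the slides.

```python
def search_bias(graph, t, x, p, q):
    """alpha_pq(t, x) for candidate x, where t is the previously visited node."""
    if x == t:           # d_tx = 0: step straight back to t
        return 1.0 / p
    if x in graph[t]:    # d_tx = 1: x is also a neighbor of t
        return 1.0
    return 1.0 / q       # d_tx = 2: x moves the walk away from t

def transition_probs(graph, t, v, p, q):
    """P(c_i = x | c_{i-1} = v) for a walk that arrived at v from t."""
    # Unnormalized pi_vx = alpha_pq(t, x) * w_vx over the neighbors x of v.
    pi = {x: search_bias(graph, t, x, p, q) * w for x, w in graph[v].items()}
    z = sum(pi.values())  # normalization constant Z
    return {x: value / z for x, value in pi.items()}

# Toy undirected graph (assumed), stored as node -> {neighbor: edge weight}.
graph = {
    "t": {"v": 1.0, "a": 1.0},
    "v": {"t": 1.0, "a": 1.0, "b": 1.0},
    "a": {"t": 1.0, "v": 1.0},
    "b": {"v": 1.0},
}
# With p = 2 and q = 0.5: pi = {t: 0.5, a: 1.0, b: 2.0}, so Z = 3.5.
probs = transition_probs(graph, t="t", v="v", p=2.0, q=0.5)
```

With these settings the walk favors the outward node `b` over returning to `t`, which matches the reading of a large $p$ and small $q$ on the slides.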

Node2vec (Grover and Leskovec, 2016): Neighborhood Sampling

In-out parameter $q$:

- If $q > 1$: inward exploration
  - Local view
  - BFS behavior
- If $q < 1$: outward exploration
  - Global view
  - DFS behavior

Node2vec (Grover and Leskovec, 2016): Algorithm

- Implicit bias due to choice of the start node $u$
  - Simulating $r$ random walks of fixed length $\ell$ starting from every node
- Phases:
  1. Preprocessing to compute transition probabilities
  2. Random walks
  3. Optimization using SGD
- Each phase is parallelizable and executed asynchronously
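The random-walk phase of the algorithm can be sketched as follows. This is a minimal sketch under several assumptions: transition weights are computed on the fly rather than in a separate preprocessing phase, a walk's length counts its nodes including the start node, the first step (which has no previous node) uses plain edge weights, and the names `node2vec_walk` and `simulate_walks` are hypothetical. The SGD optimization phase is not shown.

```python
import random

def node2vec_walk(graph, start, length, p, q, rng):
    """One biased second-order random walk containing `length` nodes."""
    walk = [start]
    while len(walk) < length:
        v = walk[-1]
        neighbors = sorted(graph[v])
        if not neighbors:
            break  # dead end: stop early
        if len(walk) == 1:
            # No previous node yet, so use the raw edge weights.
            weights = [graph[v][x] for x in neighbors]
        else:
            t = walk[-2]
            weights = []
            for x in neighbors:
                if x == t:
                    alpha = 1.0 / p   # d_tx = 0
                elif x in graph[t]:
                    alpha = 1.0       # d_tx = 1
                else:
                    alpha = 1.0 / q   # d_tx = 2
                weights.append(alpha * graph[v][x])
        # random.choices normalizes the weights internally.
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk

def simulate_walks(graph, r, length, p, q, seed=0):
    """Simulate r walks of fixed length starting from every node."""
    rng = random.Random(seed)
    nodes = list(graph)
    walks = []
    for _ in range(r):
        rng.shuffle(nodes)  # visit start nodes in a fresh order each round
        for u in nodes:
            walks.append(node2vec_walk(graph, u, length, p, q, rng))
    return walks

# Toy undirected graph (assumed), stored as node -> {neighbor: edge weight}.
graph = {
    "a": {"b": 1.0, "c": 1.0},
    "b": {"a": 1.0, "c": 1.0, "d": 1.0},
    "c": {"a": 1.0, "b": 1.0},
    "d": {"b": 1.0},
}
walks = simulate_walks(graph, r=3, length=5, p=2.0, q=0.5)
```

The resulting walks play the role that sentences play in word2vec: each node's neighbors within a walk serve as its context for the skip-gram objective.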
