Infotheory for Statistics and Learning
Lecture 1

- Entropy
- Relative entropy
- Mutual information
- f-divergence

Mikael Skoglund

Entropy

Over $(\mathbb{R}, \mathcal{B})$, consider a discrete RV $X$ with all probability in a countable set $\mathcal{X} \in \mathcal{B}$, the alphabet of $X$. Let $p_X(x)$ be the pmf of $X$ for $x \in \mathcal{X}$. The (Shannon) entropy of $X$ is

$$H(X) = -\sum_{x \in \mathcal{X}} p_X(x) \log p_X(x)$$

- the logarithm is base-2 if not declared otherwise
- sometimes denoted $H(p_X)$ to emphasize the pmf $p_X$
- $H(X) \ge 0$, with equality only if $p_X(x) = 1$ for some $x \in \mathcal{X}$
- $H(X) \le \log|\mathcal{X}|$ (for $|\mathcal{X}| < \infty$), with equality only if $p_X(x) = 1/|\mathcal{X}|$ for all $x \in \mathcal{X}$
- $H(p_X)$ is concave in $p_X$
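
A minimal Python sketch of the entropy definition, assuming the pmf is given as a list of probabilities over a finite alphabet (the function name `entropy` is ours, not from the lecture). It uses the convention $0 \log 0 = 0$ and checks the two bounds and the concavity property listed above on small examples.

```python
import math

def entropy(pmf, base=2.0):
    """Shannon entropy H(X) = -sum_x p_X(x) log p_X(x) of a finite pmf.

    Zero-probability terms are skipped (convention 0 log 0 = 0).
    The default base 2 gives the result in bits.
    """
    if any(p < 0 for p in pmf) or not math.isclose(sum(pmf), 1.0):
        raise ValueError("pmf must be nonnegative and sum to 1")
    return sum(-p * math.log(p, base) for p in pmf if p > 0)

# Lower bound H(X) >= 0 is attained by a degenerate pmf
assert entropy([1.0, 0.0, 0.0]) == 0.0
# Upper bound H(X) <= log|X| is attained by the uniform pmf on |X| = 4 symbols
assert math.isclose(entropy([0.25] * 4), 2.0)
# A non-uniform pmf lies strictly between the bounds (H = 1.75 bits here)
h = entropy([0.5, 0.25, 0.125, 0.125])
assert 0.0 < h < 2.0 and math.isclose(h, 1.75)

# Concavity: H(lam*p + (1-lam)*q) >= lam*H(p) + (1-lam)*H(q)
p, q, lam = [0.9, 0.1], [0.2, 0.8], 0.3
mix = [lam * a + (1 - lam) * b for a, b in zip(p, q)]
assert entropy(mix) >= lam * entropy(p) + (1 - lam) * entropy(q)
```

Passing `base=math.e` gives the entropy in nats instead of bits.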

For two discrete RVs $X$ and $Y$, with alphabets $\mathcal{X}$ and $\mathcal{Y}$ and a joint pmf $p_{XY}(x, y)$, we have the joint entropy

$$H(X, Y) = -\sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p_{XY}(x, y) \log p_{XY}(x, y)$$

Conditional entropy:

$$H(Y|X) = -\sum_x p_X(x) \sum_y p_{Y|X}(y|x) \log p_{Y|X}(y|x) = \sum_x p_X(x)\, H(Y|X = x) = H(X, Y) - H(X)$$

Extension to more than two variables is straightforward.

Relative Entropy

Assume $P$ and $Q$ are two probability measures over $(\Omega, \mathcal{A})$. Emphasize expectation w.r.t. $P$ (or $Q$) as $E_P[\cdot]$ (or $E_Q[\cdot]$). The relative entropy between $P$ and $Q$ is

$$D(P\|Q) = E_P\left[\log \frac{dP}{dQ}\right]$$

if $P \ll Q$, and $D(P\|Q) = \infty$ otherwise.

- $D(P\|Q) \ge 0$, with equality only if $P = Q$ on $\mathcal{A}$
- $D(P\|Q)$ is convex in $(P, Q)$, i.e. for $\lambda \in [0, 1]$, $D(\lambda P_1 + (1-\lambda) P_2 \,\|\, \lambda Q_1 + (1-\lambda) Q_2) \le \lambda D(P_1\|Q_1) + (1-\lambda) D(P_2\|Q_2)$

Also known as divergence, or Kullback–Leibler (KL) divergence. $D(P\|Q)$ is not a metric (why?), but it is still generally considered a measure of "distance" between $P$ and $Q$.
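
The conditional-entropy identity and the discrete relative entropy can be checked numerically. The sketch below rests on our own assumptions: joint pmfs are stored as a dict `{(x, y): probability}`, and the helper names `H`, `marginal_x`, `conditional_entropy` and `kl` are hypothetical. It also shows that $D(P\|Q) \ne D(Q\|P)$ in general, which is one way $D$ fails to be a metric.

```python
import math

def H(probs):
    """Entropy in bits of a collection of probabilities (0 log 0 = 0)."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def marginal_x(pxy):
    """p_X(x) = sum_y p_XY(x, y) for a joint pmf stored as {(x, y): p}."""
    px = {}
    for (x, _y), p in pxy.items():
        px[x] = px.get(x, 0.0) + p
    return px

def conditional_entropy(pxy):
    """H(Y|X) = sum_x p_X(x) H(Y | X = x), computed from the definition."""
    total = 0.0
    for x, p_x in marginal_x(pxy).items():
        if p_x > 0:
            # conditional pmf p_{Y|X}(y|x) = p_XY(x, y) / p_X(x)
            cond = [p / p_x for (xx, _y), p in pxy.items() if xx == x]
            total += p_x * H(cond)
    return total

def kl(p, q):
    """D(p || q) in bits for pmfs on the same finite alphabet (same order)."""
    d = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue          # 0 log(0 / q) = 0
        if qi == 0:
            return math.inf   # p is not absolutely continuous w.r.t. q
        d += pi * math.log2(pi / qi)
    return d

# Example joint pmf on {0, 1} x {0, 1}
pxy = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.3}
lhs = conditional_entropy(pxy)
rhs = H(pxy.values()) - H(marginal_x(pxy).values())
assert math.isclose(lhs, rhs)          # H(Y|X) = H(X,Y) - H(X)

p, q = [0.5, 0.5], [0.9, 0.1]
print(kl(p, q), kl(q, p))              # two different values: D is not symmetric
assert kl(p, p) == 0.0 and kl(p, q) > 0
assert kl([0.5, 0.5], [1.0, 0.0]) == math.inf
```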

For discrete RVs, $P \to p_X$ and $Q \to p_Y$:

$$D(p_X\|p_Y) = \sum_x p_X(x) \log \frac{p_X(x)}{p_Y(x)}$$

For absolutely continuous RVs, $P \to P_X \to f_X$ and $Q \to P_Y \to f_Y$:

$$D(P_X\|P_Y) = D(f_X\|f_Y) = \int f_X(x) \log \frac{f_X(x)}{f_Y(x)}\, dx$$

For a discrete RV $X$ (with $|\mathcal{X}| < \infty$), note that

$$H(X) = \log|\mathcal{X}| - \sum_x p_X(x) \log \frac{p_X(x)}{1/|\mathcal{X}|} = \log|\mathcal{X}| - D(p_X\|u)$$

where $u(x) = 1/|\mathcal{X}|$ is the uniform pmf on $\mathcal{X}$. Since $D$ is convex, $H(p_X)$ is concave in $p_X$: entropy is negative distance to uniform (up to the constant $\log|\mathcal{X}|$).

Mutual Information

Consider two variables $X$ and $Y$ with joint distribution $P_{XY}$ on $(\mathbb{R}^2, \mathcal{B}^2)$ and marginals $P_X$ and $P_Y$ on $(\mathbb{R}, \mathcal{B})$. The mutual information is

$$I(X;Y) = D(P_{XY}\|P_X \otimes P_Y)$$

where $P_X \otimes P_Y$ is the product distribution on $(\mathbb{R}^2, \mathcal{B}^2)$.

Discrete:

$$I(X;Y) = \sum_{x,y} p_{XY}(x, y) \log \frac{p_{XY}(x, y)}{p_X(x)\, p_Y(y)}$$

Absolutely continuous:

$$I(X;Y) = \iint f_{XY}(x, y) \log \frac{f_{XY}(x, y)}{f_X(x)\, f_Y(y)}\, dx\, dy$$
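
For finite alphabets both statements are easy to check numerically. The following sketch assumes joint pmfs are given as nested lists with `pxy[i][j]` $= p_{XY}(x_i, y_j)$; the function names are ours. It computes $I(X;Y)$ literally as $D(P_{XY}\|P_X \otimes P_Y)$ and verifies $H(X) = \log|\mathcal{X}| - D(p_X\|u)$.

```python
import math

def kl_bits(p, q):
    """D(p || q) in bits for pmfs listed in the same order (assumes p << q)."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def entropy_bits(p):
    """Entropy in bits (0 log 0 = 0)."""
    return sum(-pi * math.log2(pi) for pi in p if pi > 0)

def mutual_information(pxy):
    """I(X;Y) = D(P_XY || P_X (x) P_Y) for a joint pmf given as a 2-D list."""
    px = [sum(row) for row in pxy]              # marginal of X (row sums)
    py = [sum(col) for col in zip(*pxy)]        # marginal of Y (column sums)
    joint = [p for row in pxy for p in row]     # flatten row by row
    product = [a * b for a in px for b in py]   # same (i, j) order as `joint`
    return kl_bits(joint, product)

# Entropy as negative distance to uniform: H(p) = log|X| - D(p || u)
p = [0.7, 0.2, 0.1]
u = [1 / 3] * 3
assert math.isclose(entropy_bits(p), math.log2(3) - kl_bits(p, u))

# Independent X and Y: the joint equals the product of marginals, so I = 0
indep = [[a * b for b in (0.3, 0.7)] for a in (0.6, 0.4)]
assert abs(mutual_information(indep)) < 1e-12

# Y = X for a fair bit: I(X;Y) = H(X) = 1 bit
assert math.isclose(mutual_information([[0.5, 0.0], [0.0, 0.5]]), 1.0)
```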

For discrete RVs, we see that

$$I(X;Y) = H(X) + H(Y) - H(X, Y) = H(X) - H(X|Y) = H(Y) - H(Y|X)$$

For absolutely continuous $P_X$, define the differential entropy as

$$h(X) = -D(P_X\|\lambda) = -\int f_X(x) \log f_X(x)\, d\lambda(x)$$

where $\lambda$ is Lebesgue measure on $(\mathbb{R}, \mathcal{B})$; then we get

$$I(X;Y) = h(X) + h(Y) - h(X, Y) = h(X) - h(X|Y) = h(Y) - h(Y|X)$$

Saying $h(X) = -D(P_X\|\lambda)$ is a slight abuse, since $\lambda$ is not a probability measure. Still, $h(X)$ can be interpreted as negative distance to "uniform".

Since $I(X;Y) = D(P_{XY}\|P_X \otimes P_Y)$, we have $I(X;Y) \ge 0$, with equality only if $P_{XY} = P_X \otimes P_Y$, i.e. $X$ and $Y$ are independent.

Furthermore, since $I(X;Y) = H(Y) - H(Y|X)$ or $I(X;Y) = h(Y) - h(Y|X)$, we get $H(Y|X) \le H(Y)$ and $h(Y|X) \le h(Y)$: conditioning reduces entropy.
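
As an illustration of the continuous case, the sketch below approximates the differential entropy of a Gaussian by a midpoint Riemann sum and compares it with the closed form $h(X) = \frac12\log(2\pi e\sigma^2)$. The Gaussian example, its closed forms (including $I(X;Y) = -\frac12\log(1-\rho^2)$ for a jointly Gaussian pair with unit variances and correlation $\rho$) and the helper names are our additions, standard facts rather than material from the lecture. All quantities are in bits.

```python
import math

def gaussian_pdf(x, sigma):
    """Density of N(0, sigma^2)."""
    return math.exp(-x * x / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def differential_entropy_numeric(pdf, lo, hi, n=100_000):
    """Midpoint-rule approximation of h(X) = -integral of f log2 f over [lo, hi]."""
    dx = (hi - lo) / n
    total = 0.0
    for k in range(n):
        fx = pdf(lo + (k + 0.5) * dx)
        if fx > 0:
            total -= fx * math.log2(fx) * dx
    return total

sigma = 2.0
h_num = differential_entropy_numeric(lambda x: gaussian_pdf(x, sigma), -30.0, 30.0)
h_closed = 0.5 * math.log2(2 * math.pi * math.e * sigma ** 2)
assert abs(h_num - h_closed) < 1e-6

# Jointly Gaussian (X, Y), unit variances, correlation rho (closed forms)
rho = 0.8
h_x = h_y = 0.5 * math.log2(2 * math.pi * math.e)
h_xy = 0.5 * math.log2((2 * math.pi * math.e) ** 2 * (1 - rho ** 2))
mi = h_x + h_y - h_xy                      # I(X;Y) = h(X) + h(Y) - h(X,Y)
assert math.isclose(mi, -0.5 * math.log2(1 - rho ** 2))
h_y_given_x = h_xy - h_x                   # chain rule: h(X,Y) = h(X) + h(Y|X)
assert h_y_given_x <= h_y                  # conditioning reduces entropy
```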

f-divergence

Let $f : (0, \infty) \to \mathbb{R}$ be convex, strictly convex at $x = 1$, with $f(1) = 0$. Consider two probability measures $P$ and $Q$ on $(\Omega, \mathcal{A})$, and let $\mu$ be any measure on $(\Omega, \mathcal{A})$ such that both $P \ll \mu$ and $Q \ll \mu$. Let

$$p(\omega) = \frac{dP}{d\mu}(\omega), \qquad q(\omega) = \frac{dQ}{d\mu}(\omega)$$

The f-divergence between $P$ and $Q$ is

$$D_f(P\|Q) = \int f\left(\frac{p(\omega)}{q(\omega)}\right) dQ = E_Q\left[f\left(\frac{p(\omega)}{q(\omega)}\right)\right]$$

When $P \ll Q$ we have $\frac{p(\omega)}{q(\omega)} = \frac{dP}{dQ}(\omega)$, and thus $D_f(P\|Q) = E_Q\left[f\left(\frac{dP}{dQ}(\omega)\right)\right]$.

When both $P$ and $Q$ are discrete, i.e. there is a countable set $K \in \mathcal{A}$ such that $P(K) = Q(K) = 1$, let $\mu$ be counting measure on $K$, i.e. $\mu(F) = |F|$ for $F \subset K$. Then $p$ and $q$ are pmfs and

$$D_f(P\|Q) = \sum_{\omega \in K} q(\omega)\, f\left(\frac{p(\omega)}{q(\omega)}\right)$$

When $(\Omega, \mathcal{A}) = (\mathbb{R}, \mathcal{B})$ and both $P$ and $Q$ have R–N derivatives w.r.t. Lebesgue measure $\mu = \lambda$ on $\mathcal{B}$, then $p$ and $q$ are pdfs and

$$D_f(P\|Q) = \int q(x)\, f\left(\frac{p(x)}{q(x)}\right) dx$$

In general, $D_f(P\|Q) \ge 0$, with equality only for $P = Q$ on $\mathcal{A}$. Also, $D_f(P\|Q)$ is convex in $(P, Q)$.
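
In the discrete case the definition is a single weighted sum. The sketch below is ours (the names `f_divergence`, `x_log_x`, `chi2_f`, `random_pmf` and `mix` are hypothetical) and assumes $q(\omega) > 0$ on the whole finite set $K$, so $P \ll Q$ holds automatically; the general definition needs extra conventions where $q(\omega) = 0$. Since $f$ is only defined on $(0,\infty)$, each generator is extended to $0$ by its limit. The loop checks nonnegativity, $D_f(Q\|Q) = 0$ and joint convexity on random pmfs.

```python
import math
import random

def f_divergence(f, p, q):
    """D_f(P || Q) = sum_w q(w) f(p(w) / q(w)) for pmfs on a common finite set K.

    Sketch assumption: q(w) > 0 for every w, so P << Q holds automatically.
    """
    if any(qi <= 0 for qi in q):
        raise ValueError("this sketch assumes q(w) > 0 for all w")
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))

def x_log_x(x):
    """Generator of relative entropy (nats), extended to x = 0 by its limit 0."""
    return x * math.log(x) if x > 0 else 0.0

def chi2_f(x):
    """Generator of the chi-squared divergence."""
    return (x - 1) ** 2

def random_pmf(n, rng):
    """Random strictly positive pmf on n points."""
    w = [rng.random() + 1e-3 for _ in range(n)]
    s = sum(w)
    return [wi / s for wi in w]

def mix(lam, a, b):
    """Pointwise mixture lam * a + (1 - lam) * b of two pmfs."""
    return [lam * ai + (1 - lam) * bi for ai, bi in zip(a, b)]

rng = random.Random(0)
for _ in range(1000):
    p1, q1, p2, q2 = (random_pmf(4, rng) for _ in range(4))
    lam = rng.random()
    for f in (x_log_x, chi2_f):
        # Nonnegativity, and D_f(Q || Q) = f(1) = 0
        assert f_divergence(f, p1, q1) >= -1e-12
        assert f_divergence(f, q1, q1) == 0.0
        # Joint convexity in (P, Q), up to floating-point tolerance
        lhs = f_divergence(f, mix(lam, p1, p2), mix(lam, q1, q2))
        rhs = lam * f_divergence(f, p1, q1) + (1 - lam) * f_divergence(f, p2, q2)
        assert lhs <= rhs * (1 + 1e-9) + 1e-12
    # With f(x) = x ln x, D_f is the relative entropy D(P || Q) in nats
    kl = sum(a * math.log(a / b) for a, b in zip(p1, q1))
    assert math.isclose(f_divergence(x_log_x, p1, q1), kl, rel_tol=1e-9, abs_tol=1e-12)
```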

Examples (assuming $P \ll Q$):

Relative entropy, $f(x) = x \log x$:

$$D_f(P\|Q) = D(P\|Q) = E_Q\left[\frac{dP}{dQ} \log \frac{dP}{dQ}\right] = E_P\left[\log \frac{dP}{dQ}\right]$$

Total variation, $f(x) = \frac{1}{2}|x - 1|$:

$$D_f(P\|Q) = TV(P, Q) = \frac{1}{2} E_Q\left[\left|\frac{dP}{dQ} - 1\right|\right] = \sup_{A \in \mathcal{A}} \left(P(A) - Q(A)\right)$$

- discrete: $TV(P, Q) = \frac{1}{2} \sum_x |p(x) - q(x)|$
- abs. continuous: $TV(P, Q) = \frac{1}{2} \int |p(x) - q(x)|\, dx$

$\chi^2$-divergence, $\chi^2(P\|Q)$, $f(x) = (x - 1)^2$

Squared Hellinger distance, $H^2(P, Q)$, $f(x) = (1 - \sqrt{x})^2$

Hellinger distance, $H(P, Q) = \sqrt{H^2(P, Q)}$

Le Cam distance, $LC(P, Q)$, $f(x) = (1 - x)^2 / (2x + 2)$

Jensen–Shannon symmetrized divergence, $f(x) = x \log \frac{2x}{x + 1} + \log \frac{2}{x + 1}$:

$$JS(P\|Q) = D\left(P \,\middle\|\, \frac{P + Q}{2}\right) + D\left(Q \,\middle\|\, \frac{P + Q}{2}\right)$$

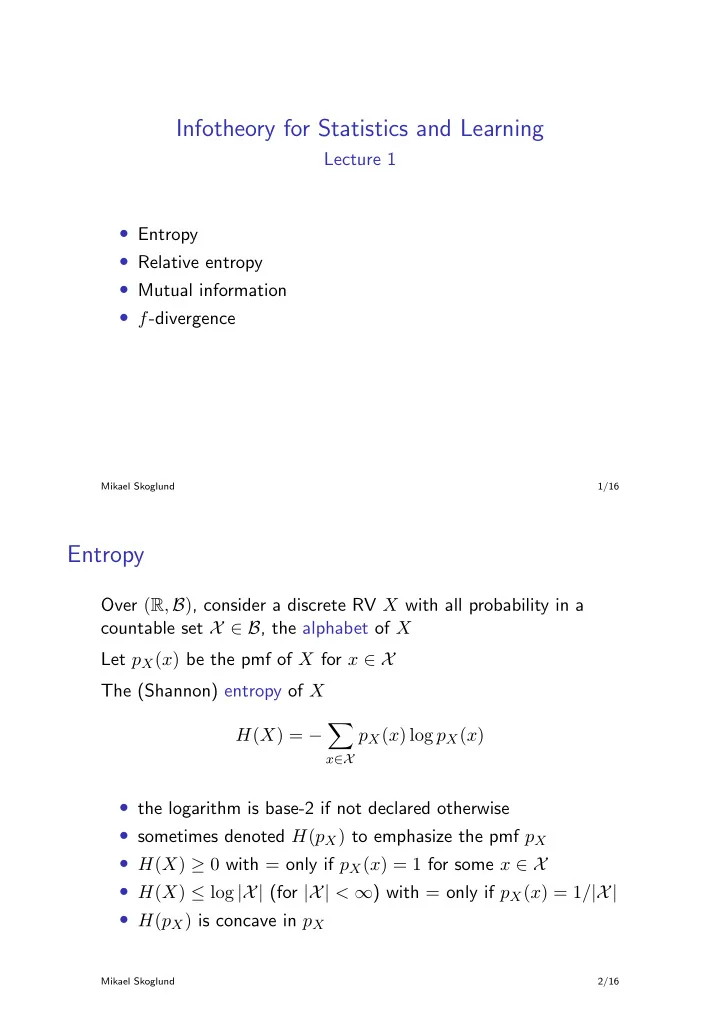
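
The generators listed above all plug into the same discrete sum. The sketch below uses natural logarithms and strictly positive pmfs; the names `f_div`, `kl` and `GENERATORS` are hypothetical. It checks that the TV generator agrees with $\frac12\sum|p-q|$ and with $\sup_A (P(A) - Q(A))$ (brute force over all subsets $A$), that the Jensen–Shannon generator reproduces $D(P\|\frac{P+Q}{2}) + D(Q\|\frac{P+Q}{2})$, and that the $\chi^2$ and Le Cam generators match the standard closed forms $\sum (p-q)^2/q$ and $\frac12\sum (p-q)^2/(p+q)$.

```python
import math
from itertools import combinations

def f_div(f, p, q):
    """Discrete D_f(P || Q) = sum_x q(x) f(p(x)/q(x)), assuming q(x) > 0."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))

def kl(p, q):
    """Relative entropy in nats, assuming p(x) > 0 implies q(x) > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Generators f for the examples above (natural logarithms)
GENERATORS = {
    "KL": lambda x: x * math.log(x),
    "TV": lambda x: 0.5 * abs(x - 1),
    "chi2": lambda x: (x - 1) ** 2,
    "H2": lambda x: (1 - math.sqrt(x)) ** 2,
    "LC": lambda x: (1 - x) ** 2 / (2 * x + 2),
    "JS": lambda x: x * math.log(2 * x / (x + 1)) + math.log(2 / (x + 1)),
}

p = [0.5, 0.3, 0.1, 0.1]
q = [0.25, 0.25, 0.25, 0.25]
d = {name: f_div(f, p, q) for name, f in GENERATORS.items()}

# Total variation: three equivalent expressions
tv_l1 = 0.5 * sum(abs(a - b) for a, b in zip(p, q))
tv_sup = max(
    sum(p[i] - q[i] for i in A)
    for r in range(len(p) + 1)
    for A in combinations(range(len(p)), r)
)
assert math.isclose(d["TV"], tv_l1) and math.isclose(d["TV"], tv_sup)

# Jensen-Shannon via its generator vs. relative entropies to the midpoint
m = [(a + b) / 2 for a, b in zip(p, q)]
assert math.isclose(d["JS"], kl(p, m) + kl(q, m))

# Closed forms of chi^2 and Le Cam for pmfs
assert math.isclose(d["chi2"], sum((a - b) ** 2 / b for a, b in zip(p, q)))
assert math.isclose(d["LC"], 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(p, q)))
print(d, "Hellinger:", math.sqrt(d["H2"]))
```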

Inequalities for f-divergences

Consider $D_f(P\|Q)$ and $D_g(P\|Q)$ for $P$ and $Q$ on $(\Omega, \mathcal{A})$. Let

$$\mathcal{R}(f, g) = \left\{ \left(D_f(P\|Q),\, D_g(P\|Q)\right) : P, Q \text{ probability measures on } (\Omega, \mathcal{A}) \right\}$$

and let $\mathcal{R}_2(f, g) = \mathcal{R}(f, g)$ for the special case $\Omega = \{0, 1\}$ and $\mathcal{A} = \sigma(\{0, 1\}) = \{\emptyset, \{0\}, \{1\}, \{0, 1\}\}$.

Theorem: For any $(\Omega, \mathcal{A})$, $\mathcal{R}$ is the convex hull of $\mathcal{R}_2$.

Let $F(x) = \inf\{y : (x, y) \in \mathcal{R}(f, g)\}$; then $D_g(P\|Q) \ge F(D_f(P\|Q))$.

Example: For $g(x) = x \ln x$ and $f(x) = |x - 1|$, it can be proved¹ that $(x, F(x))$ is obtained from

$$x = t\left(1 - \left(\coth(t) - \frac{1}{t}\right)^2\right), \qquad F = \ln \frac{t}{\sinh(t)} + t \coth(t) - \frac{t^2}{\sinh^2(t)}$$

by varying $t \in (0, \infty)$. That is, given a $t$, resulting in $(x, F)$, we have $D_g(P\|Q) = D(P\|Q) \ge F$ whenever $D_f(P\|Q) = 2\,TV(P, Q) = x$ (with $D(P\|Q)$ in nats, i.e. based on $\ln x$).

¹ See A. A. Fedotov, P. Harremoës and F. Topsøe, "Refinements of Pinsker's inequality," IEEE Trans. IT, 2003. The paper uses $V(P\|Q) = 2\,TV(P\|Q)$.
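
The parametric curve is straightforward to evaluate. The sketch below (helper names `curve` and `lower_bound` are ours) computes $(x(t), F(t))$, checks $F \ge x^2/2$ on a grid of $t$ values, and compares the bound $D(P\|Q) \ge F(D_f(P\|Q))$ against random binary pairs $P, Q$ on $\{0, 1\}$. It inverts $x(t)$ by bisection, which assumes that both $x(t)$ and $F(t)$ increase with $t$ (the computed values suggest this, but the sketch does not prove it); $t$ is capped at 50 to keep `math.sinh` from overflowing.

```python
import math
import random

def curve(t):
    """Point (x(t), F(t)) for t > 0; x is D_f with f(x) = |x - 1|, F is in nats."""
    coth = 1.0 / math.tanh(t)
    x = t * (1.0 - (coth - 1.0 / t) ** 2)
    F = math.log(t / math.sinh(t)) + t * coth - t ** 2 / math.sinh(t) ** 2
    return x, F

def lower_bound(v, lo=1e-4, hi=50.0, iters=100):
    """Evaluate F(v) by bisection on t (assumes x(t), F(t) increase on [lo, hi]).

    Returns F at the left bracket end, so the value does not exceed F(v);
    for v <= x(lo) it returns the trivial bound 0. Requires v < x(hi).
    """
    if v <= curve(lo)[0]:
        return 0.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if curve(mid)[0] < v:
            lo = mid
        else:
            hi = mid
    return curve(lo)[1]

# The refined curve lies above Pinsker's x^2 / 2
for k in range(1, 401):
    x, F = curve(0.05 * k)
    assert F >= x ** 2 / 2 - 1e-12

# Random binary pairs respect D(P || Q) >= F(V), with V = 2 TV(P, Q)
rng = random.Random(1)
for _ in range(500):
    a, b = rng.uniform(0.05, 0.95), rng.uniform(0.05, 0.95)
    P, Q = (a, 1 - a), (b, 1 - b)
    d = sum(pi * math.log(pi / qi) for pi, qi in zip(P, Q))  # D(P || Q), nats
    v = sum(abs(pi - qi) for pi, qi in zip(P, Q))           # V = 2 TV(P, Q)
    assert d >= lower_bound(v) - 1e-9
```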

[Figure: blue, the curve $(x(t), F(t))$ for $t > 0$; green, the function $x^2/2$]

Since the blue curve lies above the green one, i.e. $F(x) \ge x^2/2$, we have Pinsker's inequality

$$D(P\|Q) \ge \frac{1}{2}\left(D_f(P\|Q)\right)^2 = 2\left(TV(P, Q)\right)^2$$

or, for $D(P\|Q)$ in bits, $D(P\|Q) \ge 2 \log e\, \left(TV(P, Q)\right)^2$.

Other inequalities between f-divergences:

$$\frac{1}{2} H^2(P, Q) \le TV(P, Q) \le H(P, Q) \sqrt{1 - H^2(P, Q)/4}$$

$$D(P\|Q) \ge 2 \log \frac{2}{2 - H^2(P, Q)}$$

$$D(P\|Q) \le \log\left(1 + \chi^2(P\|Q)\right)$$

$$\frac{1}{2} H^2(P, Q) \le LC(P, Q) \le H^2(P, Q)$$

$$\chi^2(P\|Q) \ge 4 \left(TV(P, Q)\right)^2$$

For discrete $p$ and $q$, "reverse Pinsker":

$$D(p\|q) \le \log\left(1 + \frac{2}{\min_x q(x)} \left(TV(p, q)\right)^2\right) \le \frac{2 \log e}{\min_x q(x)} \left(TV(p, q)\right)^2$$
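
A randomized sanity check of the inequalities above, written as a sketch: it uses natural logarithms throughout (so $\log e = 1$), strictly positive pmfs on a five-point set, and the closed forms $TV = \frac12\sum|p-q|$, $H^2 = \sum(\sqrt p - \sqrt q)^2$, $\chi^2 = \sum (p-q)^2/q$ and $LC = \frac12\sum (p-q)^2/(p+q)$, which follow from the generators $f$ given earlier. The helper names `divergences` and `random_pmf` are ours.

```python
import math
import random

def divergences(p, q):
    """Return (D, TV, H2, chi2, LC) for strictly positive pmfs p, q (D in nats)."""
    D = sum(a * math.log(a / b) for a, b in zip(p, q))
    TV = 0.5 * sum(abs(a - b) for a, b in zip(p, q))
    H2 = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))
    chi2 = sum((a - b) ** 2 / b for a, b in zip(p, q))
    LC = 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(p, q))
    return D, TV, H2, chi2, LC

def random_pmf(n, rng):
    """Random strictly positive pmf on n points."""
    w = [rng.random() + 1e-3 for _ in range(n)]
    s = sum(w)
    return [x / s for x in w]

rng = random.Random(2)
eps = 1e-12
for _ in range(10_000):
    p, q = random_pmf(5, rng), random_pmf(5, rng)
    D, TV, H2, chi2, LC = divergences(p, q)
    H = math.sqrt(H2)
    qmin = min(q)
    assert 0.5 * H2 <= TV + eps and TV <= H * math.sqrt(1 - H2 / 4) + eps
    assert D >= 2 * math.log(2 / (2 - H2)) - eps
    assert D <= math.log(1 + chi2) + eps
    assert 0.5 * H2 <= LC + eps and LC <= H2 + eps
    assert chi2 >= 4 * TV ** 2 - eps
    assert D >= 2 * TV ** 2 - eps                                # Pinsker (nats)
    assert D <= math.log(1 + 2 * TV ** 2 / qmin) + eps           # reverse Pinsker
    assert math.log(1 + 2 * TV ** 2 / qmin) <= 2 * TV ** 2 / qmin + eps
```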
