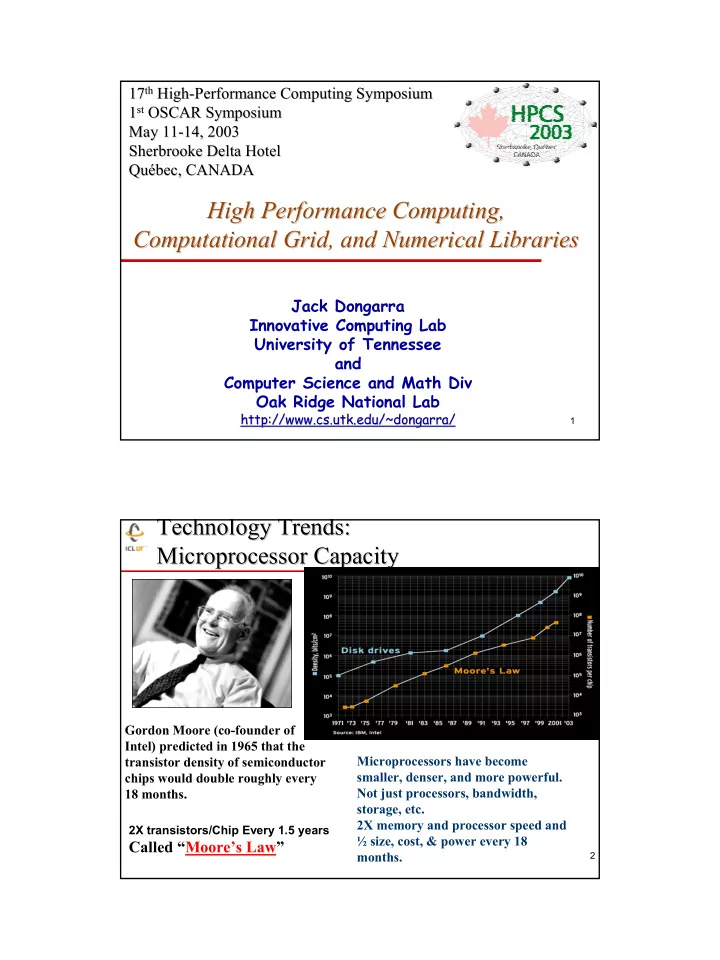

17th High-Performance Computing Symposium
1st OSCAR Symposium
May 11-14, 2003
Sherbrooke Delta Hotel, Québec, CANADA

High Performance Computing, Computational Grid, and Numerical Libraries

Jack Dongarra
Innovative Computing Lab, University of Tennessee
and
Computer Science and Math Div, Oak Ridge National Lab
http://www.cs.utk.edu/~dongarra/

Technology Trends: Microprocessor Capacity

Gordon Moore (co-founder of Intel) predicted in 1965 that the transistor density of semiconductor chips would double roughly every 18 months.

2X transistors/chip every 1.5 years. Called "Moore's Law".

Microprocessors have become smaller, denser, and more powerful. Not just processors: bandwidth, storage, etc. 2X memory and processor speed and ½ size, cost, & power every 18 months.
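As a rough illustration of the doubling rule on this slide, the sketch below turns "2X every 1.5 years" into a growth factor over an arbitrary number of years. The function name and the default period are illustrative choices, not something taken from the talk.

```python
def moore_growth_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """Growth factor after `years`, assuming one doubling per period.

    The 1.5-year default mirrors the "every 18 months" figure on the slide;
    the function itself is a hypothetical helper for illustration.
    """
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Ten doublings fit into 15 years at one doubling per 1.5 years.
    for span in (1.5, 3.0, 15.0):
        print(f"{span:5.1f} years -> x{moore_growth_factor(span):,.0f}")
```

Under this assumption, 15 years corresponds to ten doublings, that is a factor of about one thousand.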

Moore's Law

[Figure: performance of the fastest computers, 1950 to 2010, on a log scale from 1 KFlop/s to 1 PFlop/s. Eras: Scalar, Super Scalar, Vector, Parallel, Super Scalar/Vector/Parallel. Machines plotted: EDSAC 1, UNIVAC 1, IBM 7090, CDC 6600, IBM 360/195, CDC 7600, Cray 1, Cray X-MP, Cray 2, TMC CM-2, TMC CM-5, Cray T3D, ASCI Red, ASCI White Pacific, Earth Simulator.]

H. Meuer, H. Simon, E. Strohmaier, & JD

- Listing of the 500 most powerful computers in the world
- Yardstick: Rmax from LINPACK MPP (Ax=b, dense problem)
- Updated twice a year: SC'xy in the States in November, meeting in Mannheim, Germany in June
- All data available from www.top500.org

[Figure: TPP performance, rate versus size.]
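To make the yardstick concrete, here is a minimal pure-Python sketch of what a LINPACK-style measurement does: solve a dense system Ax=b and divide a nominal operation count by the elapsed time. It is not the benchmark code used for the list; the function names are hypothetical, and the operation count 2/3·n³ + 2·n² is the conventional figure for a dense solve of order n, used here as an assumption.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [row[:] for row in a]  # work on copies, leave the inputs untouched
    b = b[:]
    for k in range(n):
        # Pick the row with the largest pivot candidate in column k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_flops(n):
    """Nominal operation count for a dense solve of order n (assumed)."""
    return (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2


def measure_rate(n=100, seed=0):
    """Time one random dense solve and return (solution, flop/s)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = max(time.perf_counter() - start, 1e-12)  # avoid dividing by zero
    return x, linpack_flops(n) / elapsed


if __name__ == "__main__":
    _, rate = measure_rate(n=100)
    print(f"about {rate / 1e6:.2f} Mflop/s in pure Python")
```

An interpreted solver like this runs far below what the hardware can deliver; the point is only to show how a rate is derived from a timed dense solve.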

Fastest Computer Over Time

[Figure: scatter plot of the fastest computer by year, 1990 to 2000, y-axis 0 to 70 GFlop/s. Machines (processors): Cray Y-MP (8), TMC CM-2 (2048), Fujitsu VP-2600.]

In 1980 a computation that took 1 full year to complete can now be done in ~10 hours!

Fastest Computer Over Time

[Figure: the same plot with the y-axis extended to 700 GFlop/s. Added machines (processors): NEC SX-3 (4), TMC CM-5 (1024), Fujitsu VPP-500 (140), Intel Paragon (6788), Hitachi CP-PACS (2040).]

In 1980 a computation that took 1 full year to complete can now be done in ~16 minutes!

Fastest Computer Over Time

[Figure: the same plot with the y-axis extended to 7000 GFlop/s. Added machines (processors): Intel ASCI Red (9152), SGI ASCI Blue Mountain (5040), ASCI Blue Pacific SST (5808), Intel ASCI Red Xeon (9632), ASCI White Pacific (7424).]

In 1980 a computation that took 1 full year to complete can today be done in ~27 seconds!

Fastest Computer Over Time

[Figure: the same plot, 1990 to 2002, with the y-axis extended to 70 TFlop/s. Added machine: Japanese Earth Simulator, NEC (5120).]

In 1980 a computation that took 1 full year to complete can today be done in ~5.4 seconds!
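The "one year in 1980" comparisons on these charts each imply a speedup factor. The sketch below works that factor out from the quoted runtimes, assuming a 365-day year; the helper name is illustrative and not from the talk.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumes a 365-day year


def implied_speedup(new_runtime_seconds: float) -> float:
    """Speedup implied by shrinking a one-year run to the given runtime."""
    return SECONDS_PER_YEAR / new_runtime_seconds


if __name__ == "__main__":
    # Runtimes quoted on the successive chart builds.
    quoted = {
        "~10 hours": 10 * 3600,
        "~16 minutes": 16 * 60,
        "~27 seconds": 27,
        "~5.4 seconds": 5.4,
    }
    for label, seconds in quoted.items():
        print(f"{label:>13}: x{implied_speedup(seconds):,.0f}")
```

Read this way, the final figure of ~5.4 seconds corresponds to a speedup of several million over the 1980 baseline.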

Machines at the Top of the List

| Year | Computer | Measured Gflop/s | Factor Δ from Previous Year | Theoretical Peak Gflop/s | Factor Δ from Previous Year | Number of Processors | Efficiency |
|---|---|---|---|---|---|---|---|
| 2002 | Earth Simulator Computer, NEC | 35860 | 5.0 | 40960 | 3.7 | 5120 | 88% |
| 2001 | ASCI White-Pacific, IBM SP Power 3 | 7226 | 1.5 | 11136 | 1.0 | 7424 | 65% |
| 2000 | ASCI White-Pacific, IBM SP Power 3 | 4938 | 2.1 | 11136 | 3.5 | 7424 | 44% |
| 1999 | ASCI Red Intel Pentium II Xeon core | 2379 | 1.1 | 3207 | 0.8 | 9632 | 74% |
| 1998 | ASCI Blue-Pacific SST, IBM SP 604E | 2144 | 1.6 | 3868 | 2.1 | 5808 | 55% |
| 1997 | Intel ASCI Option Red (200 MHz Pentium Pro) | 1338 | 3.6 | 1830 | 3.0 | 9152 | 73% |
| 1996 | Hitachi CP-PACS | 368.2 | 1.3 | 614 | 1.8 | 2048 | 60% |
| 1995 | Intel Paragon XP/S MP | 281.1 | 1 | 338 | 1.0 | 6768 | 83% |
| 1994 | Intel Paragon XP/S MP | 281.1 | 2.3 | 338 | 1.4 | 6768 | 83% |
| 1993 | Fujitsu NWT | 124.5 | | 236 | | 140 | 53% |

A Tour de Force in Engineering

♦ Homogeneous, Centralized, Proprietary, Expensive!
♦ Target Application: CFD - Weather, Climate, Earthquakes
♦ 640 NEC SX/6 Nodes (mod)
  - 5120 CPUs which have vector ops
  - Each CPU 8 Gflop/s Peak
♦ 40 TFlop/s (peak)
♦ $250-$500 million for things in building
♦ Footprint of 4 tennis courts
♦ 7 MWatts
  - Say 10 cent/KWhr - $16.8K/day = $6M/year!
♦ Expect to be on top of Top500 until 60-100 TFlop ASCI machine arrives
♦ For the Top500 (November 2002)
  - Performance of ESC ≈ Σ Next Top 7 Computers
  - Σ of DOE computers (DP&OS) = 49 TFlop/s
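Two pieces of arithmetic on these slides are easy to check: the efficiency column (measured rate divided by theoretical peak) and the Earth Simulator power bill. The sketch below reproduces both from the quoted figures; the function names are illustrative, and the electricity price is the "say 10 cent/KWhr" assumption from the slide.

```python
def efficiency(measured_gflops: float, peak_gflops: float) -> float:
    """Fraction of theoretical peak achieved on the benchmark."""
    return measured_gflops / peak_gflops


def daily_energy_cost(power_mw: float, dollars_per_kwh: float) -> float:
    """Cost in dollars of running a constant load for 24 hours."""
    kwh_per_day = power_mw * 1000.0 * 24.0  # MW -> kW, then times 24 hours
    return kwh_per_day * dollars_per_kwh


if __name__ == "__main__":
    # Earth Simulator figures as quoted: 35860 of 40960 Gflop/s, 7 MW.
    print(f"efficiency: {efficiency(35860, 40960):.0%}")
    per_day = daily_energy_cost(7.0, 0.10)  # price per kWh is the slide's guess
    print(f"power: ${per_day:,.0f}/day, ${per_day * 365:,.0f}/year")
```

At 7 MW and 10 cents per kWh this gives $16,800 per day and roughly $6.1 million per year, matching the rounded figures on the slide.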

20th List: The TOP10

| Rank | Manufacturer | Computer | Rmax [TF/s] | Installation Site | Country | Year | Area of Installation | # Proc |
|---|---|---|---|---|---|---|---|---|
| 1 | NEC | Earth-Simulator | 35.86 | Earth Simulator Center | Japan | 2002 | Research | 5120 |
| 2 | HP | ASCI Q, AlphaServer SC | 7.73 | Los Alamos National Laboratory | USA | 2002 | Research | 4096 |
| 2 | HP | ASCI Q, AlphaServer SC | 7.73 | Los Alamos National Laboratory | USA | 2002 | Research | 4096 |
| 4 | IBM | ASCI White, SP Power3 | 7.23 | Lawrence Livermore National Laboratory | USA | 2000 | Research | 8192 |
| 5 | Linux NetworX | MCR Cluster | 5.69 | Lawrence Livermore National Laboratory | USA | 2002 | Research | 8192 |
| 6 | HP | AlphaServer SC ES45 1 GHz | 4.46 | Pittsburgh Supercomputing Center | USA | 2001 | Academic | 3016 |
| 7 | HP | AlphaServer SC ES45 1 GHz | 3.98 | Commissariat a l’Energie Atomique (CEA) | France | 2001 | Research | 2560 |
| 8 | HPTi | Xeon Cluster - Myrinet2000 | 3.34 | Forecast Systems Laboratory - NOAA | USA | 2002 | Research | 1536 |
| 9 | IBM | pSeries 690 Turbo | 3.16 | HPCx | UK | 2002 | Academic | 1280 |
| 10 | IBM | pSeries 690 Turbo | 3.16 | NCAR (National Center for Atmospheric Research) | USA | 2002 | Research | 1216 |

Response to the Earth Simulator: IBM Blue Gene/L and ASCI Purple

♦ Announced 11/19/02
  - One of 2 machines for LLNL
  - 360 TFlop/s
  - 130,000 proc
  - Linux
  - FY 2005

Plus ASCI Purple: IBM Power 5 based, 12K proc, 100 TFlop/s

DOE ASCI Red Storm
Sandia National Lab

♦ 10,368 compute processors, 108 cabinets
  - AMD Opteron @ 2.0 GHz
  - Cray integrator and providing the interconnect
♦ Fully connected high performance 3-D mesh interconnect
  - Topology - 27 X 16 X 24
♦ Peak of ~40 TF
  - Expected MP-Linpack >20 TF
♦ Aggregate system memory bandwidth - ~55 TB/s
♦ MPI Latency - 2 µs neighbor, 5 µs across machine
♦ Bi-Section bandwidth ~2.3 TB/s
♦ Link bandwidth ~3.0 GB/s in each direction

2004 in operation

TOP500 - Performance

[Figure: TOP500 performance from Jun-93 to Nov-02, log scale from 100 Mflop/s to 1 Pflop/s. Curves and labelled values: SUM (1.17 TF/s, 293 TF/s), N=1 (59.7 GF/s, 35.8 TF/s), N=500 (0.4 GF/s, 196 GF/s). Labelled N=1 systems: Fujitsu 'NWT' NAL, Intel ASCI Red Sandia, IBM ASCI White LLNL, NEC ES. "My Laptop" is marked for comparison.]

Performance Extrapolation

[Figure: the Sum, N=1, and N=500 curves extrapolated from Jun-93 to Jun-10, log scale from 100 MFlop/s to 10 PFlop/s. Marked points: Earth Simulator, ASCI P (12,544 proc), Blue Gene (130,000 proc). Annotations: "TFlop/s to enter the list" and "PFlop/s computer". A second build of the chart marks "My Laptop" for comparison.]
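One simple way to produce an extrapolation of this kind is to assume steady exponential growth between two points and extend the line on the log scale. The sketch below does that for the N=1 curve using the two values labelled on the TOP500 performance chart (59.7 GF/s and 35.8 TF/s). Reading those as the Jun-93 and Nov-02 endpoints is an assumption, and the talk does not say how its own trend lines were fitted.

```python
import math


def annual_growth(start_value: float, end_value: float, years: float) -> float:
    """Constant yearly growth factor linking two points on a curve."""
    return (end_value / start_value) ** (1.0 / years)


def years_to_reach(target: float, current: float, growth_per_year: float) -> float:
    """Years until `current` reaches `target` at a constant growth factor."""
    return math.log(target / current) / math.log(growth_per_year)


# Assumed endpoints for the N=1 curve, in GFlop/s (read off the chart labels).
N1_JUN_1993 = 59.7
N1_NOV_2002 = 35800.0
SPAN_YEARS = 9 + 5 / 12  # June 1993 to November 2002

n1_growth = annual_growth(N1_JUN_1993, N1_NOV_2002, SPAN_YEARS)
years_to_pflops = years_to_reach(1.0e6, N1_NOV_2002, n1_growth)  # 1 PFlop/s

if __name__ == "__main__":
    print(f"N=1 growth per year: x{n1_growth:.2f}")
    print(f"years from Nov-02 to 1 PFlop/s: {years_to_pflops:.1f}")
```

A two-point fit is the crudest possible choice; fitting a line through all list editions on the log scale would be less sensitive to a single outlier such as the Earth Simulator.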
