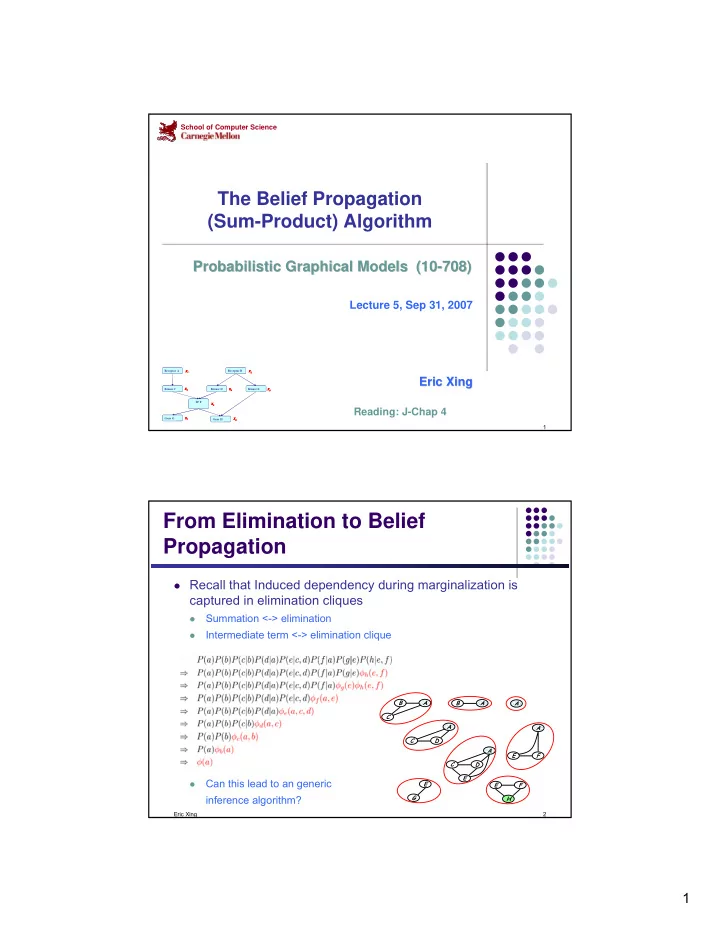

School of Computer Science

The Belief Propagation (Sum-Product) Algorithm

Probabilistic Graphical Models (10-708), Lecture 5, Sep 31, 2007

Eric Xing

Reading: J-Chap 4

[Figure: signaling network with Receptor A (X1), Receptor B (X2), Kinase C (X3), Kinase D (X4), Kinase E (X5), TF F (X6), Gene G (X7) and Gene H (X8)]

From Elimination to Belief Propagation

- Recall that the dependencies induced during marginalization are captured in elimination cliques:
  - summation ↔ elimination
  - intermediate term ↔ elimination clique
- Can this lead to a generic inference algorithm?

[Figure: elimination on a graph over nodes A to H and the resulting elimination cliques]

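Tree GMs

- Directed tree: all nodes except the root have exactly one parent.
- Undirected tree: there is a unique path between any pair of nodes.
- Poly-tree: nodes can have multiple parents. We will come back to this later.

Equivalence of directed and undirected trees

- Any undirected tree can be converted to a directed tree by choosing a root node and directing all edges away from it.
- A directed tree and the corresponding undirected tree make the same conditional independence assertions.
- Parameterizations are essentially the same:
  - Undirected tree: $p(x) = \frac{1}{Z}\prod_{i \in V}\psi(x_i)\prod_{(i,j)\in E}\psi(x_i, x_j)$
  - Directed tree: $p(x) = p(x_r)\prod_{(i \to j)\in E} p(x_j \mid x_i)$
  - Equivalence: $\psi(x_r) = p(x_r)$, $\psi(x_i, x_j) = p(x_j \mid x_i)$, $\psi(x_i) = 1$ for every non-root node $i$, and $Z = 1$
  - Evidence: for each observed node $i \in E$ with observed value $\bar{x}_i$, multiply in an indicator, $\psi^E(x_i) = \psi(x_i)\,\delta(x_i, \bar{x}_i)$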
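The equivalence can be checked numerically. The sketch below assumes a small hypothetical directed tree over three binary variables (root 0 with children 1 and 2) and made-up conditional probability tables. It sets $\psi(x_r) = p(x_r)$, $\psi(x_i, x_j) = p(x_j \mid x_i)$ and all other singleton potentials to 1, then confirms that the undirected product needs no normalization ($Z = 1$) and reproduces the directed joint.

```python
import itertools

import numpy as np

# Hypothetical directed tree: root 0 with edges 0 -> 1 and 0 -> 2, all variables binary.
p_root = np.array([0.6, 0.4])                        # p(x0)
cpts = {(0, 1): np.array([[0.7, 0.3], [0.2, 0.8]]),  # p(x1 | x0), rows indexed by x0
        (0, 2): np.array([[0.9, 0.1], [0.5, 0.5]])}  # p(x2 | x0), rows indexed by x0


def directed_joint(x):
    """p(x) = p(x_r) * prod over edges i -> j of p(x_j | x_i)."""
    value = p_root[x[0]]
    for (i, j), table in cpts.items():
        value *= table[x[i], x[j]]
    return value


# Undirected parameterization via the equivalence:
# psi(x_r) = p(x_r), psi(x_i, x_j) = p(x_j | x_i), every other singleton potential = 1.
psi_single = {0: p_root, 1: np.ones(2), 2: np.ones(2)}
psi_pair = cpts


def undirected_unnormalized(x):
    """prod_i psi(x_i) * prod_(i,j) psi(x_i, x_j), before dividing by Z."""
    value = 1.0
    for i, table in psi_single.items():
        value *= table[x[i]]
    for (i, j), table in psi_pair.items():
        value *= table[x[i], x[j]]
    return value


configs = list(itertools.product([0, 1], repeat=3))
Z = sum(undirected_unnormalized(x) for x in configs)
```

`Z` comes out as 1 up to floating-point rounding, because each conditional table sums to one over its child variable.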

From elimination to message passing

- Recall the ELIMINATION algorithm:
  - Choose an ordering Z in which the query node f is the final node.
  - Place all potentials on an active list.
  - Eliminate node i by removing all potentials containing i and taking the sum/product over $x_i$.
  - Place the resultant factor back on the list.
- For a TREE graph:
  - Choose the query node f as the root of the tree.
  - View the tree as a directed tree with edges pointing away from f.
  - Base the elimination ordering on a depth-first traversal, so that every node is eliminated after all of its descendants.
  - The elimination of each node can then be viewed as message passing (or belief propagation) directly along tree branches, rather than on some transformed graph.
  - Thus, we can use the tree itself as a data structure to do general inference!

The elimination algorithm

```
Procedure Initialize(G, Z)
  1. Let Z_1, ..., Z_k be an ordering of Z such that Z_i ≺ Z_j iff i < j
  2. Initialize F with the full set of factors

Procedure Evidence(E)
  1. for each i ∈ I_E:
         F = F ∪ δ(E_i, e_i)

Procedure Sum-Product-Variable-Elimination(F, Z, ≺)
  1. for i = 1, ..., k:
  2.     F ← Sum-Product-Eliminate-Var(F, Z_i)
  3. φ* ← ∏_{φ ∈ F} φ
  4. return φ*

Procedure Sum-Product-Eliminate-Var(F, Z)   // F: set of factors; Z: variable to be eliminated
  1. F′ ← {φ ∈ F : Z ∈ Scope[φ]}
  2. F′′ ← F − F′
  3. ψ ← ∏_{φ ∈ F′} φ
  4. τ ← ∑_Z ψ
  5. return F′′ ∪ {τ}

Procedure Normalization(φ*)
  1. P(X | E) = φ*(X) / ∑_x φ*(X)
```
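The elimination procedures above can be sketched in Python. This is a minimal illustration, not the lecture's reference code. It assumes factors are stored as `(scope, table)` pairs with one numpy axis per variable, and that every variable eliminated actually appears in some factor. The helper names `multiply`, `sum_product_eliminate_var` and `sum_product_ve` are hypothetical. Evidence is handled as in Procedure Evidence, by adding an indicator factor $\delta(E_i, e_i)$.

```python
from string import ascii_letters

import numpy as np

# A factor is a pair (scope, table): scope is a tuple of variable names and table
# is a numpy array with one axis per variable, in scope order.


def multiply(factors):
    """Product of factors, returned over the union of their scopes (via einsum)."""
    scope = []
    for s, _ in factors:
        scope += [v for v in s if v not in scope]
    letter = {v: ascii_letters[k] for k, v in enumerate(scope)}
    spec = ",".join("".join(letter[v] for v in s) for s, _ in factors)
    spec += "->" + "".join(letter[v] for v in scope)
    return tuple(scope), np.einsum(spec, *(t for _, t in factors))


def sum_product_eliminate_var(F, Z):
    """Multiply the factors that mention Z (F'), sum Z out, keep the rest (F'')."""
    F1 = [f for f in F if Z in f[0]]        # F'  = {phi in F : Z in Scope[phi]}
    F2 = [f for f in F if Z not in f[0]]    # F'' = F - F'
    if not F1:
        return F
    scope, psi = multiply(F1)               # psi = prod_{phi in F'} phi
    axis = scope.index(Z)
    tau = (scope[:axis] + scope[axis + 1:], psi.sum(axis=axis))  # tau = sum_Z psi
    return F2 + [tau]


def sum_product_ve(F, order):
    """Eliminate variables in the given order, multiply what is left, normalize."""
    for Z in order:
        F = sum_product_eliminate_var(F, Z)
    scope, phi_star = multiply(F)
    return scope, phi_star / phi_star.sum()   # Normalization(phi*)


# Hypothetical chain A -> B -> C with binary variables; query C by eliminating A, then B.
factors = [(("A",), np.array([0.6, 0.4])),                    # p(A)
           (("A", "B"), np.array([[0.7, 0.3], [0.2, 0.8]])),  # p(B | A), rows indexed by A
           (("B", "C"), np.array([[0.9, 0.1], [0.5, 0.5]]))]  # p(C | B), rows indexed by B
scope, p_c = sum_product_ve(factors, ["A", "B"])              # approximately [0.7, 0.3]

# Evidence B = 1 enters as an indicator factor delta(B, 1).
_, p_c_given_b1 = sum_product_ve(factors + [(("B",), np.array([0.0, 1.0]))], ["A", "B"])
```

Using `einsum` keeps the factor product generic over scopes, at the cost of limiting a single product to 52 distinct variables (the letters available in `ascii_letters`).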

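Message passing for trees

- Let $m_{ji}(x_i)$ denote the factor that results from eliminating $x_j$ together with all variables below $j$ (the subtree hanging from $i$ through $j$). It is a function of $x_i$ only:
  $$m_{ji}(x_i) = \sum_{x_j}\psi(x_j)\,\psi(x_i, x_j)\prod_{k \in N(j)\setminus i} m_{kj}(x_j)$$
- This is reminiscent of a message sent from $j$ to $i$.
- $m_{ji}(x_i)$ represents a "belief" about $x_i$ coming from $x_j$!

[Figure: tree rooted at f containing nodes i, j, k, l, with messages flowing from the leaves toward f]

- Elimination on trees is equivalent to message passing along tree branches!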
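The message recursion can be written directly as a recursive function. The sketch assumes a hypothetical undirected tree over binary X1 to X4 with edges 1-2, 2-3, 2-4 and arbitrary positive potentials. It treats the query node f as the root and uses the standard root marginal $p(x_f) \propto \psi(x_f)\prod_{e \in N(f)} m_{ef}(x_f)$. Calling `marginal` once per node recomputes every message each time, which is exactly the naïve approach; a brute-force sum over all configurations is included only as a check.

```python
import itertools

import numpy as np

# Hypothetical undirected tree over binary X1..X4 with edges 1-2, 2-3, 2-4;
# potentials are arbitrary positive numbers.
edges = [(1, 2), (2, 3), (2, 4)]
psi_node = {1: np.array([1.0, 2.0]), 2: np.array([1.0, 1.0]),
            3: np.array([3.0, 1.0]), 4: np.array([1.0, 4.0])}
psi_edge = {e: np.array([[2.0, 1.0], [1.0, 3.0]]) for e in edges}
nbrs = {i: [] for i in psi_node}
for i, j in edges:
    nbrs[i].append(j)
    nbrs[j].append(i)


def pair(i, j):
    """psi(x_i, x_j) as a matrix indexed [x_i, x_j]."""
    return psi_edge[(i, j)] if (i, j) in psi_edge else psi_edge[(j, i)].T


def message(j, i):
    """m_ji(x_i) = sum_{x_j} psi(x_j) psi(x_i, x_j) prod_{k in N(j) minus i} m_kj(x_j)."""
    b = psi_node[j].copy()
    for k in nbrs[j]:
        if k != i:
            b *= message(k, j)
    return pair(i, j) @ b   # sums over x_j


def marginal(f):
    """Treat f as the root: p(x_f) is proportional to psi(x_f) prod_{e in N(f)} m_ef(x_f)."""
    b = psi_node[f].copy()
    for e in nbrs[f]:
        b *= message(e, f)
    return b / b.sum()


def brute_force_marginal(f):
    """Sum the unnormalized joint over all 2^4 configurations (for checking only)."""
    p = np.zeros(2)
    for x in itertools.product([0, 1], repeat=4):
        xs = dict(zip([1, 2, 3, 4], x))
        w = np.prod([psi_node[i][xs[i]] for i in xs])
        for i, j in edges:
            w *= psi_edge[(i, j)][xs[i], xs[j]]
        p[xs[f]] += w
    return p / p.sum()
```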

The message passing protocol

- A node can send a message to its neighbors when (and only when) it has received messages from all its other neighbors.
- Computing node marginals:
  - Naïve approach: consider each node as the root and execute the message-passing algorithm.

[Figure: computing P(X1) on the tree with edges X1-X2, X2-X3, X2-X4, using messages $m_{32}(x_2)$, $m_{42}(x_2)$ and $m_{21}(x_1)$]

[Figure: computing P(X2) on the same tree, using messages $m_{12}(x_2)$, $m_{32}(x_2)$ and $m_{42}(x_2)$]

[Figure: computing P(X3) on the same tree, using messages $m_{12}(x_2)$, $m_{42}(x_2)$ and $m_{23}(x_3)$]

Computing node marginals

- Naïve approach: complexity NC
  - N is the number of nodes
  - C is the complexity of a complete message passing
- Alternative dynamic-programming approach:
  - 2-pass algorithm (described below)
  - Complexity: 2C!

The message passing protocol: a two-pass algorithm

[Figure: the two-pass algorithm on the tree with edges X1-X2, X2-X3, X2-X4; after both passes every edge carries one message in each direction: $m_{21}(x_1)$, $m_{12}(x_2)$, $m_{32}(x_2)$, $m_{42}(x_2)$, $m_{23}(x_3)$, $m_{24}(x_4)$]

Belief Propagation (SP-algorithm): Sequential implementation
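A sequential two-pass schedule can be sketched as a collect pass (leaves to root) followed by a distribute pass (root to leaves), caching each message so it is computed exactly once. The sketch assumes the same hypothetical binary tree as the naïve example (edges 1-2, 2-3, 2-4) and picks X1 as the root; the function names `send`, `collect` and `distribute` are illustrative.

```python
import itertools

import numpy as np

# Hypothetical tree: binary X1..X4, edges 1-2, 2-3, 2-4, arbitrary positive potentials.
edges = [(1, 2), (2, 3), (2, 4)]
psi_node = {1: np.array([1.0, 2.0]), 2: np.array([1.0, 1.0]),
            3: np.array([3.0, 1.0]), 4: np.array([1.0, 4.0])}
psi_edge = {e: np.array([[2.0, 1.0], [1.0, 3.0]]) for e in edges}
nbrs = {i: [] for i in psi_node}
for i, j in edges:
    nbrs[i].append(j)
    nbrs[j].append(i)
msgs = {}  # msgs[(j, i)] holds m_ji(x_i); each directed edge is computed once


def pair(i, j):
    """psi(x_i, x_j) as a matrix indexed [x_i, x_j]."""
    return psi_edge[(i, j)] if (i, j) in psi_edge else psi_edge[(j, i)].T


def send(j, i):
    """Compute m_ji from the messages j already holds from its other neighbors."""
    b = psi_node[j].copy()
    for k in nbrs[j]:
        if k != i:
            b *= msgs[(k, j)]   # a KeyError here would mean the protocol was violated
    msgs[(j, i)] = pair(i, j) @ b


def collect(i, parent):
    """Pass 1 (leaves to root): each child sends to i once its own subtree is done."""
    for c in nbrs[i]:
        if c != parent:
            collect(c, i)
            send(c, i)


def distribute(i, parent):
    """Pass 2 (root to leaves): i now holds all incoming messages, so it can send down."""
    for c in nbrs[i]:
        if c != parent:
            send(i, c)
            distribute(c, i)


root = 1
collect(root, None)
distribute(root, None)


def marginal(i):
    """p(x_i) proportional to psi(x_i) times all cached incoming messages."""
    b = psi_node[i].copy()
    for k in nbrs[i]:
        b *= msgs[(k, i)]
    return b / b.sum()


def brute_force_marginal(f):
    """Sum the unnormalized joint over all configurations (for checking only)."""
    p = np.zeros(2)
    for x in itertools.product([0, 1], repeat=4):
        xs = dict(zip([1, 2, 3, 4], x))
        w = np.prod([psi_node[i][xs[i]] for i in xs])
        for i, j in edges:
            w *= psi_edge[(i, j)][xs[i], xs[j]]
        p[xs[f]] += w
    return p / p.sum()
```

With N nodes, running the naïve approach from every root computes N(N−1) messages (N−1 per root), while the two passes compute 2(N−1), one per edge direction: 6 instead of 12 for this tree.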

Belief Propagation (SP-algorithm): Parallel synchronous implementation

- For a node of degree d, whenever messages have arrived on any subset of d−1 edges, compute the message for the remaining edge and send it!
- When the algorithm terminates, a pair of messages has been computed for each edge, one for each direction.
- All incoming messages are eventually computed for each node.

Correctness of BP on trees

- Corollary: the synchronous implementation is "non-blocking".
- Thm: The message-passing algorithm is guaranteed to obtain all marginals in the tree.
- What about non-trees?
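The "non-blocking" corollary can be illustrated by simulating only the schedule (which messages become sendable in each synchronous round), without computing message values. This is a sketch under the strict rule stated above: a node sends on an edge once it has heard from all of its other neighbors. The function `synchronous_schedule` is a hypothetical helper. On the tree it finishes with all 2|E| messages; on a 3-cycle no node can ever start, so the strict protocol blocks.

```python
def synchronous_schedule(edges):
    """Simulate the parallel protocol; return the messages sent in each round,
    or None if no further message can ever be sent (the protocol blocks)."""
    nbrs = {}
    for i, j in edges:
        nbrs.setdefault(i, set()).add(j)
        nbrs.setdefault(j, set()).add(i)
    sent = set()      # directed messages (sender, receiver) computed so far
    rounds = []
    while len(sent) < 2 * len(edges):
        # j may send to i once it has heard from all of its other d - 1 neighbors.
        ready = [(j, i) for j in nbrs for i in nbrs[j]
                 if (j, i) not in sent and all((k, j) in sent for k in nbrs[j] - {i})]
        if not ready:
            return None
        sent.update(ready)  # all ready messages go out simultaneously in this round
        rounds.append(sorted(ready))
    return rounds


tree_rounds = synchronous_schedule([(1, 2), (2, 3), (2, 4)])
cycle_rounds = synchronous_schedule([(1, 2), (2, 3), (3, 1)])
```

On the star-shaped tree, the leaves send in round 1 and the center answers all of them in round 2.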

Another view of SP: Factor Graph

- Example 1:

[Figure: a Bayesian network over X1 to X5 (left) and its factor graph with factor nodes $f_a$ to $f_e$ (right)]

$$P(X_1)\,P(X_2)\,P(X_3 \mid X_1, X_2)\,P(X_5 \mid X_1, X_3)\,P(X_4 \mid X_2, X_3) = f_a(X_1)\,f_b(X_2)\,f_c(X_3, X_1, X_2)\,f_d(X_5, X_1, X_3)\,f_e(X_4, X_2, X_3)$$

Factor Graphs

- Example 2: [Figure: X1, X2, X3 joined pairwise through factor nodes $f_a$, $f_b$, $f_c$]
  $\psi(x_1, x_2, x_3) = f_a(x_1, x_2)\,f_b(x_2, x_3)\,f_c(x_3, x_1)$
- Example 3: [Figure: X1, X2, X3 all connected to a single factor node $f_a$]
  $\psi(x_1, x_2, x_3) = f_a(x_1, x_2, x_3)$
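Example 1 follows a simple recipe: one factor node per conditional probability table, linked to the child and its parents. A minimal sketch, assuming the network is given as a hypothetical `parents` dictionary; the factor names `f_X1`, ..., `f_X5` stand in for the slide's $f_a$ to $f_e$.

```python
def bn_to_factor_graph(parents):
    """One factor node per CPT p(X_i | parents(X_i)); the factor is linked to
    every variable in its scope (the child first, then its parents)."""
    factors = {f"f_{v}": (v, *parents[v]) for v in parents}
    edges = [(f, v) for f, scope in factors.items() for v in scope]
    return factors, edges


# Example 1: P(X1) P(X2) P(X3|X1,X2) P(X5|X1,X3) P(X4|X2,X3)
parents = {"X1": (), "X2": (), "X3": ("X1", "X2"),
           "X5": ("X1", "X3"), "X4": ("X2", "X3")}
factors, fg_edges = bn_to_factor_graph(parents)
```

The result has 10 nodes (5 variables, 5 factors) and 11 edges, so this particular factor graph contains a cycle.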

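Factor Tree

- A factor graph is a factor tree if the undirected graph obtained by ignoring the distinction between variable nodes and factor nodes is an undirected tree.

[Figure: X1, X2, X3 all connected to a single factor node $f_a$, with $\psi(x_1, x_2, x_3) = f_a(x_1, x_2, x_3)$]

Message Passing on a Factor Tree

- Two kinds of messages:
  1. ν: from variables to factors
     $$\nu_{is}(x_i) = \prod_{t \in N(i)\setminus s}\mu_{ti}(x_i)$$
  2. µ: from factors to variables
     $$\mu_{si}(x_i) = \sum_{x_{N(s)\setminus i}} f_s(x_{N(s)})\prod_{j \in N(s)\setminus i}\nu_{js}(x_j)$$

[Figure: the two message types between a variable node $x_i$ and a factor node $f_s$, with other neighbors $f_1$, $f_3$, $x_j$, $x_k$]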
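The factor-tree definition translates into a short test. Ignoring node types, the bipartite graph is a tree exactly when it is connected and has one fewer edge than nodes. The sketch assumes factors are given as a hypothetical dict from factor name to scope, with factor and variable names distinct and no variable repeated within a scope.

```python
def is_factor_tree(factors):
    """factors: dict mapping factor name -> tuple of variable names (its scope)."""
    variables = {v for scope in factors.values() for v in scope}
    nodes = set(factors) | variables
    edges = [(f, v) for f, scope in factors.items() for v in scope]
    if len(edges) != len(nodes) - 1:
        return False
    adj = {n: [] for n in nodes}
    for f, v in edges:
        adj[f].append(v)
        adj[v].append(f)
    # With |nodes| - 1 edges, the graph is a tree iff it is connected (check by DFS).
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:
        for m in adj[stack.pop()]:
            if m not in seen:
                seen.add(m)
                stack.append(m)
    return len(seen) == len(nodes)


example_3 = {"fa": ("x1", "x2", "x3")}                              # one factor on three variables
example_2 = {"fa": ("x1", "x2"), "fb": ("x2", "x3"), "fc": ("x3", "x1")}  # pairwise loop
```

Example 3 is a factor tree even though its underlying undirected graph over X1, X2, X3 is a triangle. Example 2 is not, because its pairwise factors close a loop.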

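Message Passing on a Factor Tree, cont'd

- Message-passing protocol:
  - A node can send a message to a neighboring node only when it has received messages from all its other neighbors.
- Marginal probability of nodes:
  $$P(x_i) \propto \prod_{s \in N(i)}\mu_{si}(x_i) \propto \nu_{is}(x_i)\,\mu_{si}(x_i)$$

[Figure: the two message types between $x_i$ and $f_s$, as above]

BP on a Factor Tree

[Figure: factor tree over X1, X2, X3 with unary factors $f_a$, $f_b$, $f_c$ on X1, X2, X3, factor $f_d$ joining X1 and X2 and factor $f_e$ joining X2 and X3; every edge carries one ν and one µ message: $\nu_{1a}, \mu_{a1}, \nu_{1d}, \mu_{d1}, \nu_{2b}, \mu_{b2}, \nu_{2d}, \mu_{d2}, \nu_{2e}, \mu_{e2}, \nu_{3c}, \mu_{c3}, \nu_{3e}, \mu_{e3}$]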
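The ν and µ recursions can be sketched as two mutually recursive functions. The example assumes a hypothetical factor tree over binary variables, $f_a(x_1) = p(x_1)$, $f_b(x_1, x_2) = p(x_2 \mid x_1)$ and $f_c(x_2, x_3) = p(x_3 \mid x_2)$, with made-up numbers. Because it encodes a chain distribution, its marginals can be checked by hand. The last assertion checks the identity $P(x_i) \propto \nu_{is}(x_i)\,\mu_{si}(x_i)$ for one edge.

```python
import numpy as np

# Hypothetical factor tree over binary x1, x2, x3:
#   f_a(x1) = p(x1), f_b(x1, x2) = p(x2 | x1), f_c(x2, x3) = p(x3 | x2).
# Each factor is (scope, table); table axes follow the scope order.
factors = {
    "fa": (("x1",), np.array([0.3, 0.7])),
    "fb": (("x1", "x2"), np.array([[0.9, 0.1], [0.4, 0.6]])),
    "fc": (("x2", "x3"), np.array([[0.5, 0.5], [0.2, 0.8]])),
}
var_nbrs = {}  # N(i): names of the factors that mention variable i
for name, (scope, _) in factors.items():
    for v in scope:
        var_nbrs.setdefault(v, []).append(name)


def nu(i, s):
    """nu_is(x_i) = prod_{t in N(i) minus s} mu_ti(x_i)."""
    out = np.ones(2)
    for t in var_nbrs[i]:
        if t != s:
            out = out * mu(t, i)
    return out


def mu(s, i):
    """mu_si(x_i) = sum over the other variables of f_s times their nu_js messages."""
    scope, table = factors[s]
    t = table.copy()
    for axis, j in enumerate(scope):
        if j != i:
            shape = [1] * t.ndim
            shape[axis] = t.shape[axis]
            t = t * nu(j, s).reshape(shape)   # broadcast nu_js along x_j's axis
    keep = scope.index(i)
    # Sum out every axis except x_i's.
    return np.moveaxis(t, keep, 0).reshape(t.shape[keep], -1).sum(axis=1)


def marginal(i):
    """P(x_i) proportional to prod_{s in N(i)} mu_si(x_i)."""
    b = np.ones(2)
    for s in var_nbrs[i]:
        b = b * mu(s, i)
    return b / b.sum()
```

The recursion terminates only because the graph is a factor tree; on a graph with a loop, `nu` and `mu` would call each other forever.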

Why factor graph?

- Tree-like graphs to factor trees

[Figure: a tree-like undirected graph over X1 to X6 and its factor tree]

Poly-trees to factor trees

[Figure: a poly-tree over X1 to X5 and its factor tree]

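Why factor graph?

- Because factor graphs turn tree-like graphs into factor trees,
- and trees are a data structure that guarantees the correctness of BP!

[Figure: the tree-like graph over X1 to X6 and the poly-tree over X1 to X5, each shown with its factor tree]

Max-product algorithm: computing MAP probabilities

[Figure: tree rooted at f containing nodes i, j, k, l]

Replacing the sum in the sum-product message by a max gives
$$m^{\max}_{ji}(x_i) = \max_{x_j}\psi(x_j)\,\psi(x_i, x_j)\prod_{k \in N(j)\setminus i} m^{\max}_{kj}(x_j), \qquad \max_x p(x) \propto \max_{x_f}\psi(x_f)\prod_{e \in N(f)} m^{\max}_{ef}(x_f)$$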

Max-product algorithm: computing MAP configurations using a final bookkeeping backward pass

[Figure: tree rooted at f containing nodes i, j, k, l]

Summary

- The sum-product algorithm computes singleton marginal probabilities on:
  - trees
  - tree-like graphs
  - poly-trees
- Maximum a posteriori configurations can be computed by replacing sum with max in the sum-product algorithm.
  - Extra bookkeeping is required.
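Max-product and its bookkeeping pass can be sketched on the simplest tree, a chain $x_1 - x_2 - x_3$ rooted at $x_3$. The sketch assumes the hypothetical distribution $p(x_1)\,p(x_2 \mid x_1)\,p(x_3 \mid x_2)$ with made-up binary tables. The forward loop sends max-messages toward the root while recording which value of each variable achieved the max. The backward loop then reads off the MAP configuration from those records.

```python
import numpy as np

# Hypothetical chain x1 - x2 - x3 (binary): p(x1) p(x2 | x1) p(x3 | x2).
p1 = np.array([0.3, 0.7])
trans = [np.array([[0.9, 0.1], [0.4, 0.6]]),   # p(x2 | x1), rows indexed by x1
         np.array([[0.5, 0.5], [0.2, 0.8]])]   # p(x3 | x2), rows indexed by x2


def max_product_chain(p1, trans):
    """Forward max-product messages, then a backward bookkeeping pass for the argmax."""
    m = p1.copy()          # max over x_1..x_{k-1} of the partial product, as a function of x_k
    back = []              # back[k][v] = best value of x_k given x_{k+1} = v
    for T in trans:
        scores = m[:, None] * T            # scores[x_k, x_{k+1}]
        back.append(scores.argmax(axis=0))
        m = scores.max(axis=0)
    x = [int(m.argmax())]                  # best value of the root (last variable)
    for b in reversed(back):
        x.append(int(b[x[-1]]))            # follow the recorded argmaxes backward
    return float(m.max()), x[::-1]


map_prob, map_config = max_product_chain(p1, trans)
```

Here `map_prob` is the MAP probability (0.336 for these tables, from $0.7 \cdot 0.6 \cdot 0.8$) and `map_config` is the maximizing assignment $[1, 1, 1]$. Replacing `max`/`argmax` with `sum` in the forward loop would recover sum-product marginals at the root.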

Inference on general GMs

- Now, what if the GM is not a tree-like graph?
- Can we still directly run the message-passing protocol along its edges?
- For non-trees, we do not have the guarantee that message passing will be consistent!
- Then what?
  - Construct a graph data structure from P that has a tree structure, and run message passing on it!
  - → Junction tree algorithm

Elimination Clique

- Recall that the dependencies induced during marginalization are captured in elimination cliques: summation ↔ elimination, and intermediate term ↔ elimination clique.
- Can this lead to a generic inference algorithm?

[Figure: elimination on the graph over nodes A to H, repeated from the start of the lecture]

A Clique Tree

[Figure: clique tree obtained from eliminating the graph over A to H, with messages $m_b, m_c, m_d, m_e, m_f, m_g, m_h$ passed between cliques]

$$m_e(a, c, d) = \sum_e p(e \mid c, d)\,m_g(e)\,m_f(a, e)$$

From Elimination to Message Passing

- Elimination ≡ message passing on a clique tree

[Figure: successive elimination steps on the graph over A to H, shown side by side with the equivalent clique tree and its messages $m_b$ to $m_h$]

- Messages can be reused.
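The clique-tree message $m_e(a, c, d)$ is a single sum over $e$ of a product of three tables, which maps directly onto `numpy.einsum`. The sketch assumes binary variables and random placeholder tables, since the lecture gives no numbers. `p_e_cd[c, d, e]` stores $p(e \mid c, d)$, `m_g[e]` stores $m_g(e)$ and `m_f[a, e]` stores $m_f(a, e)$. An explicit loop is included to show the same sum written out.

```python
import numpy as np

rng = np.random.default_rng(0)
K = 2  # assumption: every variable is binary

# p(e | c, d) stored as p_e_cd[c, d, e], normalized over e; the messages are placeholders.
p_e_cd = rng.random((K, K, K))
p_e_cd /= p_e_cd.sum(axis=2, keepdims=True)
m_g = rng.random(K)          # m_g(e): message produced by eliminating g
m_f = rng.random((K, K))     # m_f(a, e): message produced by eliminating f

# m_e(a, c, d) = sum_e p(e | c, d) m_g(e) m_f(a, e)
m_e = np.einsum("cde,e,ae->acd", p_e_cd, m_g, m_f)

# The same sum written as explicit loops, for comparison.
m_e_loop = np.zeros((K, K, K))
for a in range(K):
    for c in range(K):
        for d in range(K):
            m_e_loop[a, c, d] = sum(p_e_cd[c, d, e] * m_g[e] * m_f[a, e] for e in range(K))
```

Once computed, `m_e` can be stored on its clique-tree edge and reused by every query that needs it, which is the point of "messages can be reused".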
