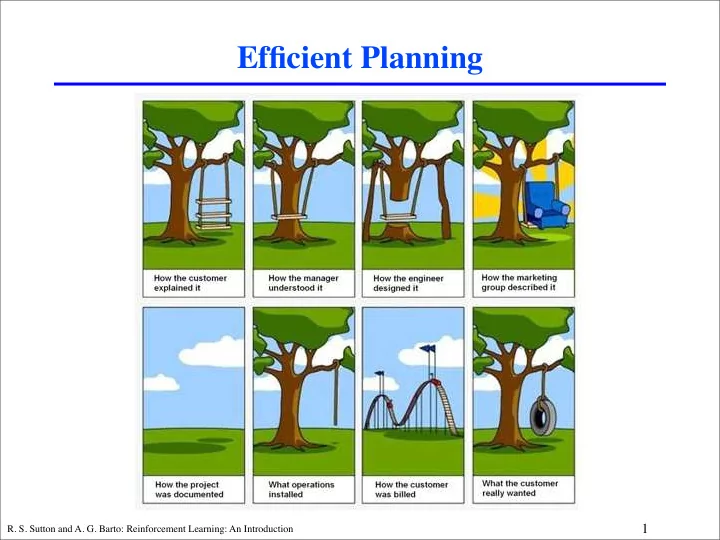

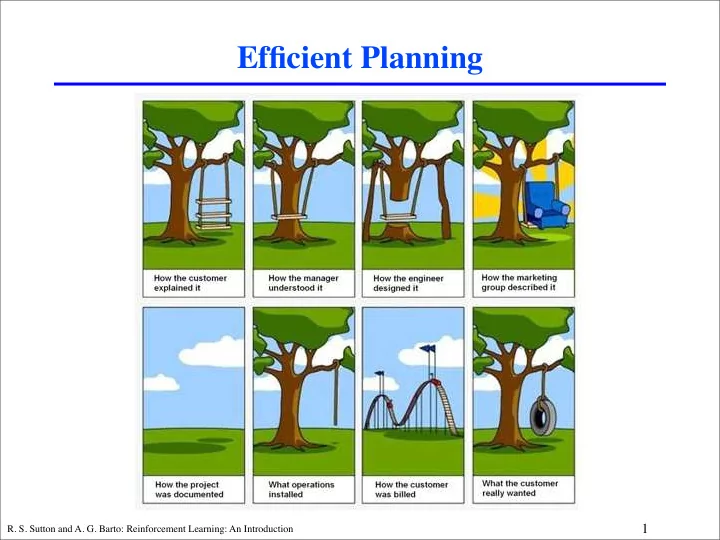

Efficient Planning

R. S. Sutton and A. G. Barto: Reinforcement Learning: An Introduction

Tuesday class summary

- Planning: any computational process that uses a model to create or improve a policy
- Dyna framework: [figure]

Questions during class

- “Why use simulated experience? Can’t you directly compute a solution based on the model?”
- “Wouldn’t it be better to plan backwards from the goal?”

How to Achieve Efficient Planning?

- What type of backup is better?
  - Sample vs. full backups
  - Incremental vs. less incremental backups
- How to order the backups?

What is Efficient Planning?

Planning algorithm A is more efficient than planning algorithm B if:

- it can compute the optimal policy (or value function) in less time.
- given the same amount of computation time, it improves the policy (or value function) more.

What backup type is best?

Full vs. Sample Backups

| Value estimated | Full backups (DP) | Sample backups (one-step TD) |
| --- | --- | --- |
| $v_\pi$ | policy evaluation | TD(0) |
| $v_*$ | value iteration | (none) |
| $q_\pi$ | Q-policy evaluation | Sarsa |
| $q_*$ | Q-value iteration | Q-learning |
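
To make the distinction concrete, here is a minimal sketch contrasting a full backup with a sample backup for a single state-action pair. The three-successor `MODEL`, the successor values in `q_next_max`, and the discount `GAMMA` are made-up illustration values, not taken from the slides.

```python
import random

# Hypothetical model of one state-action pair: (probability, reward, next_state).
MODEL = [(0.5, 1.0, "x"), (0.3, 0.0, "y"), (0.2, -1.0, "z")]
GAMMA = 0.9  # assumed discount factor


def full_backup(model, q_next_max, gamma=GAMMA):
    """Full (expected) backup: sum over every successor, weighted by probability."""
    return sum(p * (r + gamma * q_next_max[s2]) for p, r, s2 in model)


def sample_backup(q_sa, model, q_next_max, alpha, rng, gamma=GAMMA):
    """Sample backup (Q-learning style): one sampled successor, step size alpha."""
    weights = [p for p, _, _ in model]
    _, r, s2 = rng.choices(model, weights=weights, k=1)[0]
    return q_sa + alpha * (r + gamma * q_next_max[s2] - q_sa)


# max_a' Q(s', a') for each successor, assumed already correct.
q_next_max = {"x": 2.0, "y": 0.0, "z": 1.0}

target = full_backup(MODEL, q_next_max)  # exact after a single full backup

rng = random.Random(0)
q = 0.0
for t in range(1, 20001):
    # alpha = 1/t turns the sample backups into a running sample average.
    q = sample_backup(q, MODEL, q_next_max, alpha=1.0 / t, rng=rng)
```

The full backup touches all successors once and has no sampling error; the sample backup is cheaper per update but only approaches the same value as samples accumulate.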

Full vs. Sample Backups

[Figure: RMS error in value estimate (from 1 down to 0) against the number of $\max_{a'} Q(s', a')$ computations (from 0 to $2b$), comparing full backups with sample backups for branching factors $b$ = 2, 10, 100, 1000, and 10,000.]

$b$ successor states, equally likely; initial error = 1; assume all next states’ values are correct
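
As a reading aid for the sample-backup curves: under the assumptions stated for this experiment, and assuming sample averages (step size $1/t$), the textbook gives the error after $t$ sample backups as

```latex
\text{RMS error after } t \text{ sample backups} \;=\; \sqrt{\frac{b-1}{b\,t}}
```

A full backup, by contrast, needs $b$ computations and then removes the error entirely. For example, with $b = 100$ the error after $t = 10$ sample backups is $\sqrt{99/1000} \approx 0.31$, so a tenth of the cost of one full backup already removes most of the initial error.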

Small Backups

- Small backups are single-successor backups based on the model
- Small backups have the same computational complexity as sample backups
- Small backups have no sampling error
- Small backups require storage for ‘old’ values

Main Idea behind Small Backups

Consider an estimate $A$ that is constructed from a weighted sum of estimates $X_i$.

full backup:

$$A \leftarrow \sum_i w_i X_i$$

What can we do if we know that only a single successor, $X_j$, changed value since the last backup?

Let $x_j$ be the old value of $X_j$, used to construct the current value of $A$. The value $A$ can then be updated for a single successor by adding the difference between the new and the old value:

small backup:

$$A \leftarrow A + w_j (X_j - x_j)$$
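
The following sketch checks numerically that a small backup gives the same result as recomputing the full weighted sum when exactly one successor has changed. The weights and values are arbitrary illustration numbers, and the function names are hypothetical.

```python
def full_backup(w, X):
    """Recompute A from scratch: A = sum_i w_i * X_i."""
    return sum(wi * xi for wi, xi in zip(w, X))


def small_backup(A, w_j, new_xj, old_xj):
    """Correct A for one changed successor: A + w_j * (X_j - x_j)."""
    return A + w_j * (new_xj - old_xj)


w = [0.2, 0.5, 0.3]        # weights (for example, transition probabilities)
X = [1.0, 4.0, -2.0]       # current successor estimates
x_old = list(X)            # the 'old' values that A was built from

A = full_backup(w, X)      # initial full backup

# Successor j changes value; correct A using only that successor.
j = 1
X[j] = 6.0
A = small_backup(A, w[j], X[j], x_old[j])
x_old[j] = X[j]            # remember the value A now reflects

A_check = full_backup(w, X)  # recomputed from scratch, for comparison
```

The small backup costs one multiply-add regardless of the number of successors, at the price of storing `x_old`.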

Small vs. Sample Backups

[Figure: two panels plotting normalized RMS error against step-size / step-size decay (0 to 1) for three methods: sample backup with TD(0) and constant step-size α, sample backup with TD(0) and decaying step-size, and small backup. One panel is labeled with rewards r_left = +1 and r_right = −1, the other with r_left = +1 and r_right = +1.]

Small vs. Sample Backups

[Figure: example with states A, B, and C; one panel shows transition probabilities (axis from 0 to 1, with ticks at 0.333 and 0.667) and one shows state values (axis from 0 to 10) for state A and state B.]

Backup Ordering

Backup Ordering

Asynchronous Value Iteration

Do forever:

1) Select a state $s \in S$ according to some selection strategy $H$
2) Apply a full backup to $s$:

$$V(s) \leftarrow \max_a \Big[ r(s,a) + \sum_{s'} \hat{p}(s' \mid s,a)\, V(s') \Big]$$

- For every selection strategy $H$ that selects each state infinitely often, the values $V$ converge to the optimal value function $V^*$
- The rate of convergence depends strongly on the selection strategy $H$
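
A minimal sketch of asynchronous value iteration with a pluggable selection strategy. The small episodic `MODEL` (state 3 is terminal), the uniform-random strategy, and the fixed backup budget are assumptions chosen for illustration only.

```python
import random

# Hypothetical episodic model; state 3 is terminal with value 0.
# MODEL[s][a] = (expected reward, {next_state: probability})
MODEL = {
    0: {"step": (-1.0, {1: 1.0}), "jump": (-3.0, {2: 1.0})},
    1: {"step": (-1.0, {2: 1.0}), "jump": (-3.0, {3: 1.0})},
    2: {"step": (-1.0, {3: 1.0}), "jump": (-3.0, {3: 1.0})},
}


def full_backup(V, s, model):
    """max_a [ r(s,a) + sum_s' p(s'|s,a) V(s') ], undiscounted as on the slide."""
    return max(
        r + sum(p * V[s2] for s2, p in nxt.items())
        for r, nxt in model[s].values()
    )


def async_value_iteration(model, select, n_backups, terminal=3):
    """Back up one state at a time, in the order chosen by `select`."""
    V = {s: 0.0 for s in model}
    V[terminal] = 0.0
    for _ in range(n_backups):
        s = select(V)                    # selection strategy H
        V[s] = full_backup(V, s, model)  # in-place full backup
    return V


rng = random.Random(1)
states = list(MODEL)
# Uniform random selection visits every state again and again.
V = async_value_iteration(MODEL, lambda V: rng.choice(states), n_backups=200)
```

Swapping the `select` argument is all it takes to try a different ordering, which is where the convergence rate is won or lost.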

The Trade-Off

For any effective ordering strategy, the cost saved by performing fewer backups should outweigh the cost of maintaining the ordering:

cost to maintain ordering < cost savings due to fewer backups

Prioritized Sweeping

- Which states or state-action pairs should be generated during planning?
- Work backwards from states whose values have just changed:
  - Maintain a queue of state-action pairs whose values would change a lot if backed up, prioritized by the size of the change
  - When a new backup occurs, insert predecessors according to their priorities
  - Always perform backups from the first in the queue
- Moore & Atkeson 1993; Peng & Williams 1993
- Improved by McMahan & Gordon 2005; Van Seijen 2013

Moore and Atkeson’s Prioritized Sweeping

Published in 1993.

Prioritized Sweeping vs. Dyna-Q

Both use n = 5 backups per environmental interaction

Bellman Error Ordering

The Bellman error is a measure of the difference between the current value and the value after a full backup:

$$BE(s) = \Big| V(s) - \max_a \Big[ r(s,a) + \sum_{s'} \hat{p}(s' \mid s,a)\, V(s') \Big] \Big|$$

Bellman Error Ordering

    initialize V(s) arbitrarily for all s
    compute BE(s) for all s
    loop {until convergence}
        select state s' with worst Bellman error
        perform full backup of s'
        BE(s') ← 0
        for all predecessor states s̄ of s' do
            recompute BE(s̄)
        end for
    end loop

To get a positive trade-off: comp. time Bellman error << comp. time full backup
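
The loop above can be sketched in Python as follows. The toy `MODEL`, the tolerance `tol`, and the use of a plain dictionary scan to find the worst Bellman error (instead of a real priority queue) are simplifying assumptions for illustration.

```python
# Hypothetical episodic model; state 3 is terminal with value 0.
# MODEL[s][a] = (expected reward, {next_state: probability})
MODEL = {
    0: {"step": (-1.0, {1: 1.0}), "jump": (-3.0, {2: 1.0})},
    1: {"step": (-1.0, {2: 1.0}), "jump": (-3.0, {3: 1.0})},
    2: {"step": (-1.0, {3: 1.0}), "jump": (-3.0, {3: 1.0})},
}


def backup(V, s, model):
    """Value of s after a full backup (undiscounted)."""
    return max(
        r + sum(p * V[s2] for s2, p in nxt.items())
        for r, nxt in model[s].values()
    )


def bellman_error_ordering(model, terminal=3, tol=1e-9, max_backups=10_000):
    V = {s: 0.0 for s in model}
    V[terminal] = 0.0

    # Predecessors of each state, read off the model once.
    preds = {s: set() for s in V}
    for s, actions in model.items():
        for _, nxt in actions.values():
            for s2 in nxt:
                preds[s2].add(s)

    BE = {s: abs(V[s] - backup(V, s, model)) for s in model}
    n_backups = 0
    while n_backups < max_backups:
        s = max(BE, key=BE.get)          # state with the worst Bellman error
        if BE[s] <= tol:                 # converged: nothing left to fix
            break
        V[s] = backup(V, s, model)       # full backup
        BE[s] = 0.0
        n_backups += 1
        for sp in preds[s]:              # only predecessors can be affected
            BE[sp] = abs(V[sp] - backup(V, sp, model))
    return V, n_backups


V, n_backups = bellman_error_ordering(MODEL)
```

Note that recomputing `BE` for a predecessor costs as much as a full backup in this sketch, which is exactly the overhead the trade-off remark warns about.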

Prioritized Sweeping with Small Backups

    initialize V(s) arbitrarily for all s
    initialize U(s) = V(s) for all s
    initialize Q(s, a) = V(s) for all s, a
    initialize N_sa, N^{s'}_sa to 0 for all s, a, s'
    loop {over episodes}
        initialize s
        repeat {for each step in the episode}
            select action a, based on Q(s, ·)
            take action a, observe r and s'
            N_sa ← N_sa + 1;  N^{s'}_sa ← N^{s'}_sa + 1
            Q(s, a) ← [Q(s, a)(N_sa − 1) + r + γ V(s')] / N_sa
            U(s) ← max_b Q(s, b)
            p ← |V(s) − U(s)|
            if s is on queue, set its priority to p; otherwise, add it with priority p
            for a number of update cycles do
                remove top state s̄' from queue
                ΔU ← U(s̄') − V(s̄')
                V(s̄') ← U(s̄')
                for all (s̄, ā) pairs with N^{s̄'}_s̄ā > 0 do
                    Q(s̄, ā) ← Q(s̄, ā) + γ N^{s̄'}_s̄ā / N_s̄ā · ΔU
                    U(s̄) ← max_b Q(s̄, b)
                    p ← |V(s̄) − U(s̄)|
                    if s̄ is on queue, set its priority to p; otherwise, add it with priority p
                end for
            end for
            s ← s'
        until s is terminal
    end loop
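
A compact Python sketch of this scheme, under loud assumptions: the deterministic chain environment `env_step`, the ε-greedy action selection, the discount `GAMMA`, and the dictionary standing in for a priority queue are all illustrative choices, not part of the slides. Here `V` holds the values that the `Q` estimates were built from and `U` holds the up-to-date values `max_b Q(s, b)`.

```python
import random

GAMMA = 0.9          # assumed discount factor
TERMINAL = 3
ACTIONS = (0, 1)


def env_step(s, a):
    """Hypothetical chain: action 0 moves 1 state (r=-1), action 1 moves 2 (r=-3)."""
    if a == 0:
        return -1.0, min(s + 1, TERMINAL)
    return -3.0, min(s + 2, TERMINAL)


def ps_small_backups(n_episodes=200, n_cycles=5, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    V = {s: 0.0 for s in range(TERMINAL + 1)}  # values used inside Q
    U = {s: 0.0 for s in range(TERMINAL + 1)}  # current max_b Q(s, b)
    Q = {(s, a): 0.0 for s in range(TERMINAL) for a in ACTIONS}
    N = {sa: 0 for sa in Q}                    # N_sa
    Nn = {}                                    # N^{s'}_sa, keyed by (s, a, s')
    queue = {}                                 # state -> priority

    def refresh(s):
        """Recompute U(s) and put s on the queue with priority |V(s) - U(s)|."""
        U[s] = max(Q[s, b] for b in ACTIONS)
        queue[s] = abs(V[s] - U[s])

    for _ in range(n_episodes):
        s = 0
        while s != TERMINAL:
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda b: Q[s, b])
            r, s2 = env_step(s, a)
            N[s, a] += 1
            Nn[s, a, s2] = Nn.get((s, a, s2), 0) + 1
            # Running average of r + gamma * V(s') over all samples of (s, a).
            Q[s, a] = (Q[s, a] * (N[s, a] - 1) + r + GAMMA * V[s2]) / N[s, a]
            refresh(s)
            for _ in range(n_cycles):
                if not queue:
                    break
                top = max(queue, key=queue.get)   # highest-priority state
                del queue[top]
                delta = U[top] - V[top]
                V[top] = U[top]
                # Small backup of every observed predecessor pair of `top`.
                for (ps, pa, ns), count in Nn.items():
                    if ns == top:
                        Q[ps, pa] += GAMMA * count / N[ps, pa] * delta
                        refresh(ps)
            s = s2
    return Q, V


Q, V = ps_small_backups()
```

A production version would keep per-state predecessor lists and a heap with updatable priorities rather than scanning `Nn` and `queue`; the scans are kept here only to keep the sketch short.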

Empirical Comparison

[Figure: RMS error (averaged over the first 10^5 observations) against computation time per observation in seconds (scale ×10^−6). Plotted: initial error; PS, Moore & Atkeson; PS, Wiering & Schmidhuber; PS, Peng & Williams; PS, small backups; value iteration.]

Trajectory Sampling

- Trajectory sampling: perform backups along simulated trajectories
- This samples from the on-policy distribution
- Advantages when function approximation is used (Chapter 8)
- Focusing of computation: can cause vast uninteresting parts of the state space to be (usefully) ignored

[Figure: state space with regions labeled initial states, irrelevant states, and states reachable under optimal control.]

Trajectory Sampling Experiment

- one-step full tabular backups
- uniform: cycled through all state-action pairs
- on-policy: backed up along simulated trajectories
- 200 randomly generated undiscounted episodic tasks
- 2 actions for each state, each with b equally likely next states
- 0.1 probability of transition to the terminal state
- expected reward on each transition selected from a Gaussian with mean 0 and variance 1

Heuristic Search

- Used for action selection, not for changing a value function (= heuristic evaluation function)
- Backed-up values are computed, but typically discarded
- Extension of the idea of a greedy policy, only deeper
- Also suggests ways to select states to back up: smart focusing
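
As an illustration of "greedy, only deeper", the sketch below selects an action by a depth-limited full-width search over a model, using a heuristic value function only at the leaves and discarding the backed-up values afterwards. The tiny `MODEL`, the all-zero heuristic `V`, and the function names are hypothetical.

```python
# Hypothetical model: MODEL[s][a] = (expected reward, {next_state: probability}).
# "T" is a terminal state and therefore has no entry.
MODEL = {
    "s0": {"a": (1.0, {"s1": 1.0}), "b": (0.0, {"s2": 1.0})},
    "s1": {"a": (-5.0, {"T": 1.0})},
    "s2": {"a": (5.0, {"T": 1.0})},
}


def action_value(model, V, s, a, depth, gamma=1.0):
    """Backed-up value of taking a in s, searching `depth` steps ahead."""
    r, nxt = model[s][a]
    return r + gamma * sum(
        p * state_value(model, V, s2, depth - 1, gamma) for s2, p in nxt.items()
    )


def state_value(model, V, s, depth, gamma=1.0):
    """Heuristic V at the leaves, max over actions above them."""
    if depth == 0 or s not in model:     # search frontier or terminal state
        return V.get(s, 0.0)
    return max(action_value(model, V, s, a, depth, gamma) for a in model[s])


def select_action(model, V, s, depth, gamma=1.0):
    """Pick the action with the best backed-up value; nothing is stored."""
    return max(model[s], key=lambda a: action_value(model, V, s, a, depth, gamma))


V = {}  # uninformative heuristic: every state is valued at 0

greedy_choice = select_action(MODEL, V, "s0", depth=1)  # one-step greedy
deeper_choice = select_action(MODEL, V, "s0", depth=2)  # looks one step further
```

With depth 1 the immediate reward of `"a"` looks best; with depth 2 the search sees the penalty that follows and prefers `"b"`, even though the stored value function was never changed.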
