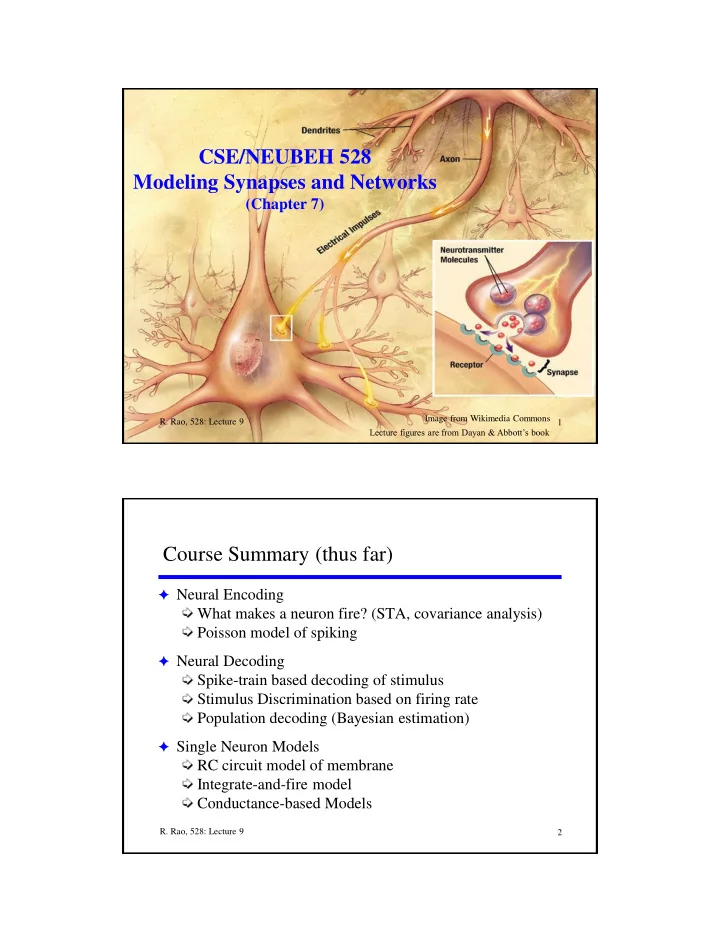

CSE/NEUBEH 528: Modeling Synapses and Networks (Chapter 7)

R. Rao, 528: Lecture 9

Image from Wikimedia Commons. Lecture figures are from Dayan & Abbott's book.

Course Summary (thus far)

- Neural Encoding
  - What makes a neuron fire? (STA, covariance analysis)
  - Poisson model of spiking
- Neural Decoding
  - Spike-train based decoding of stimulus
  - Stimulus discrimination based on firing rate
  - Population decoding (Bayesian estimation)
- Single Neuron Models
  - RC circuit model of membrane
  - Integrate-and-fire model
  - Conductance-based models

Today's Agenda

- Computation in Networks of Neurons
  - Modeling synaptic inputs
  - From spiking to firing-rate based networks
  - Feedforward networks
  - Linear recurrent networks

SYNAPSES!

Image credit: Kennedy lab, Caltech. http://www.its.caltech.edu/~mbkla

What do synapses do?

An input spike increases or decreases the postsynaptic membrane potential.

Image source: Wikimedia Commons

An Excitatory Synapse

Input spike → neurotransmitter release (e.g., glutamate) → binds to receptors → ion channels open → positive ions (e.g., Na+) enter cell → depolarization due to EPSP (excitatory postsynaptic potential)

Image source: Wikimedia Commons

An Inhibitory Synapse

Input spike → neurotransmitter release (e.g., GABA) → binds to receptors → ion channels open → positive ions (e.g., K+) leave cell → hyperpolarization due to IPSP (inhibitory postsynaptic potential)

Image source: Wikimedia Commons

Flashback: Membrane Model

$$c_m \frac{dV}{dt} = -\frac{(V - E_L)}{r_m} + \frac{I_e}{A}$$

or, equivalently,

$$\tau_m \frac{dV}{dt} = -(V - E_L) + I_e R_m$$

where $\tau_m = r_m c_m = R_m C_m$ is the membrane time constant.

Flashback! The Integrate-and-Fire Model

Models a passive leaky membrane:

$$\tau_m \frac{dV}{dt} = -(V - E_L) + I_e R_m$$

- $E_L = -70$ mV (resting potential)
- If $V > V_{threshold}$, emit a spike ($V_{threshold} = -50$ mV)
- Then reset: $V = V_{reset}$ ($V_{reset} = E_L$)

Flashback! Hodgkin-Huxley Model

$$c_m \frac{dV}{dt} = -i_m + \frac{I_e}{A}$$

$$i_m = g_{L,max}(V - E_L) + g_{K,max}\, n^4 (V - E_K) + g_{Na,max}\, m^3 h\, (V - E_{Na})$$

The three terms of $i_m$ are the leak (L), potassium (K), and sodium (Na) currents, with $E_L = -54$ mV, $E_K = -77$ mV, $E_{Na} = +50$ mV.
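The integrate-and-fire rule recalled above can be sketched as a forward-Euler loop. This is a minimal illustration, not the course's reference code: the membrane time constant (10 ms), the time step, and the two input values are assumptions chosen for the example, while the resting, threshold, and reset potentials follow the slide.

```python
def simulate_lif(i_e_r_m, t_max=0.2, dt=1e-4, tau_m=0.010,
                 e_l=-0.070, v_thresh=-0.050, v_reset=-0.070):
    """Forward-Euler integration of tau_m dV/dt = -(V - E_L) + I_e R_m.

    i_e_r_m is the constant input I_e * R_m in volts; times are in seconds.
    tau_m, dt and t_max are assumed values, not taken from the lecture.
    Returns the list of spike times.
    """
    v = e_l
    spike_times = []
    for step in range(int(round(t_max / dt))):
        v += dt / tau_m * (-(v - e_l) + i_e_r_m)
        if v > v_thresh:              # threshold crossing: record a spike
            spike_times.append((step + 1) * dt)
            v = v_reset               # then reset the membrane potential
    return spike_times

# With I_e R_m = 15 mV the steady state (-55 mV) stays below threshold.
subthreshold = simulate_lif(0.015)
# With I_e R_m = 25 mV the steady state (-45 mV) lies above threshold.
suprathreshold = simulate_lif(0.025)
```

The model fires only when $E_L + I_e R_m$ exceeds $V_{threshold}$, which is why the first input produces no spikes and the second produces a regular train.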

How do we model the effects of a synapse on the membrane potential $V$?

Modeling Synaptic Inputs

Add a synaptic conductance term to the membrane equation:

$$\tau_m \frac{dV}{dt} = -(V - E_L) - r_m g_s (V - E_s) + I_e R_m$$

$$g_s = g_{s,max}\, P_{rel}\, P_s$$

- $P_s$: probability of postsynaptic channel opening (= fraction of channels opened)
- $P_{rel}$: probability of transmitter release given an input spike

Basic Synapse Model

- Assume $P_{rel} = 1$
- Model the effect of a single spike input on $P_s$ (fraction of channels opened)
- Kinetic model of postsynaptic channels: Closed ⇌ Open, with opening rate $\alpha_s$ and closing rate $\beta_s$

$$\frac{dP_s}{dt} = \alpha_s (1 - P_s) - \beta_s P_s$$

Here $P_s$ is the fraction of channels open and $1 - P_s$ is the fraction of channels closed.

What does $P_s$ look like over time?

$$K(t) = \frac{1}{\tau_s} e^{-t/\tau_s}$$

The exponential function $K(t)$ gives a reasonable fit to biological data (other options: difference of exponentials, "alpha" function).
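To see how the kinetic model produces a roughly exponential decay, the equation for $P_s$ can be integrated numerically. The sketch below assumes that transmitter is present only for a brief pulse after the spike, so that the opening rate is nonzero during the pulse and zero afterwards; the pulse duration and both rate values are hypothetical numbers chosen for illustration.

```python
def simulate_ps(alpha_s=1000.0, beta_s=200.0, pulse=0.001,
                t_max=0.02, dt=1e-5):
    """Euler integration of dP_s/dt = alpha_s (1 - P_s) - beta_s P_s.

    Assumption: the opening rate alpha_s (1/s) acts only while t < pulse,
    modeling a brief transmitter pulse; afterwards only the closing rate
    beta_s (1/s) acts. All numeric values are illustrative.
    """
    p = 0.0
    trace = []
    for step in range(int(round(t_max / dt))):
        t = step * dt
        alpha = alpha_s if t < pulse else 0.0
        p += dt * (alpha * (1.0 - p) - beta_s * p)
        trace.append(p)
    return trace

trace = simulate_ps()
peak = max(trace)   # reached around the end of the transmitter pulse
```

After the pulse ends, the equation reduces to $dP_s/dt = -\beta_s P_s$, so $P_s$ decays exponentially with time constant $1/\beta_s$, which matches the exponential shape of $K(t)$.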

Linear Filter Model of Synaptic Input to a Neuron

- Synaptic weight: $w_b$
- Input spike train: $\rho_b(t) = \sum_i \delta(t - t_i)$ ($t_i$ are the input spike times)
- Filter for synapse $b$:

$$K(t) = \frac{1}{\tau_s} e^{-t/\tau_s}$$

- Synaptic current for $b$:

$$I_b(t) = w_b \sum_{t_i < t} K(t - t_i) = w_b \int_{-\infty}^{t} K(t - \tau)\, \rho_b(\tau)\, d\tau$$

Modeling Networks of Neurons

- Option 1: Use spiking neurons
  - Advantages: model computation and learning based on spike timing and on spike correlations/synchrony between neurons
  - Disadvantages: computationally expensive
- Option 2: Use neurons with firing-rate outputs (real-valued outputs)
  - Advantages: greater efficiency, scales well to large networks
  - Disadvantages: ignores spike timing issues
- Question: How are these two approaches related?
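The linear filter model above translates directly into a sum over past spikes. The following is a small sketch; the spike times, weight, and time constant are made-up values, and the function names are chosen for this example only.

```python
import math

def exp_kernel(t, tau_s):
    """K(t) = (1/tau_s) exp(-t/tau_s) for t >= 0, and 0 for t < 0."""
    return math.exp(-t / tau_s) / tau_s if t >= 0 else 0.0

def synaptic_current(t, spike_times, w_b, tau_s):
    """I_b(t) = w_b * sum of K(t - t_i) over the input spike times t_i."""
    return w_b * sum(exp_kernel(t - t_i, tau_s) for t_i in spike_times)

spikes = [0.010, 0.020, 0.050]   # hypothetical input spike times (s)

# Before the first spike no kernel has been triggered yet.
i_before = synaptic_current(0.005, spikes, w_b=0.5, tau_s=0.010)
# At t = 20 ms the first spike has decayed by e^-1 and the second just arrived.
i_after = synaptic_current(0.020, spikes, w_b=0.5, tau_s=0.010)
```

Because the kernel is zero for negative arguments, spikes that have not yet occurred contribute nothing, which is what the upper limit $t$ of the integral expresses.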

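From Spiking to Firing Rate Models

A neuron receives spike trains $\rho_1(t), \ldots, \rho_N(t)$ through synaptic weights $w_1, \ldots, w_N$.

Total synaptic current:

$$I_s(t) = \sum_b I_b(t)$$

With spike trains $\rho_b(t)$:

$$I_s(t) = \sum_b w_b \int_{-\infty}^{t} K(t - \tau)\, \rho_b(\tau)\, d\tau$$

Replacing each spike train by its firing rate $u_b(t)$:

$$I_s(t) \approx \sum_b w_b \int_{-\infty}^{t} K(t - \tau)\, u_b(\tau)\, d\tau$$

Synaptic Current Dynamics in Firing Rate Model

- Suppose the synaptic kernel $K$ is exponential:

$$K(t) = \frac{1}{\tau_s} e^{-t/\tau_s}$$

- Differentiating $I_s(t) = \sum_b w_b \int_{-\infty}^{t} K(t - \tau)\, u_b(\tau)\, d\tau$ with respect to time $t$, we get

$$\tau_s \frac{dI_s}{dt} = -I_s + \sum_b w_b u_b = -I_s + \mathbf{w} \cdot \mathbf{u}$$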
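The differentiation step can be spelled out as follows. This is a sketch using the Leibniz rule for an integral with a variable upper limit, together with two properties of the exponential kernel: $K(0) = 1/\tau_s$ and $\partial K(t - \tau)/\partial t = -K(t - \tau)/\tau_s$.

$$
\begin{aligned}
\frac{dI_s}{dt}
&= \sum_b w_b \left[ K(0)\, u_b(t) + \int_{-\infty}^{t} \frac{\partial K(t - \tau)}{\partial t}\, u_b(\tau)\, d\tau \right] \\
&= \sum_b w_b \left[ \frac{u_b(t)}{\tau_s} - \frac{1}{\tau_s} \int_{-\infty}^{t} K(t - \tau)\, u_b(\tau)\, d\tau \right] \\
&= \frac{1}{\tau_s} \left( \sum_b w_b u_b(t) - I_s(t) \right)
\end{aligned}
$$

Multiplying both sides by $\tau_s$ gives $\tau_s\, dI_s/dt = -I_s + \mathbf{w} \cdot \mathbf{u}$.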

Output Firing-Rate Dynamics

- How is the output firing rate $v$ related to synaptic inputs?

$$\tau_r \frac{dv}{dt} = -v + F(I_s(t)), \qquad \tau_s \frac{dI_s}{dt} = -I_s + \mathbf{w} \cdot \mathbf{u}$$

- Looks very much like the membrane equation:

$$\tau_m \frac{dV}{dt} = -(V - E_L) + I_e R_m$$

- On-board derivations of special cases obtained from comparing the relative magnitudes of $\tau_r$ and $\tau_s$ (see also pages 234-236 in the text)

How good are Firing Rate Models?

Input: $I(t) = I_0 + I_1 \cos(\omega t)$

[Figure: two example responses. The firing-rate model $v(t) = F(I(t))$ describes the first case well but not the second.]

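Feedforward versus Recurrent Networks

$$\tau \frac{d\mathbf{v}}{dt} = -\mathbf{v} + F(W \mathbf{u} + M \mathbf{v})$$

The terms are: output decay ($-\mathbf{v}$), input ($W \mathbf{u}$), and feedback ($M \mathbf{v}$). For feedforward networks, the matrix $M = 0$.

Example: Linear Feedforward Network

Dynamics:

$$\tau \frac{d\mathbf{v}}{dt} = -\mathbf{v} + W \mathbf{u}$$

Steady state (set $d\mathbf{v}/dt$ to 0):

$$\mathbf{v}_{ss} = W \mathbf{u}$$

$$
W = \begin{pmatrix}
1 & 0 & 0 & 0 & -1 \\
-1 & 1 & 0 & 0 & 0 \\
0 & -1 & 1 & 0 & 0 \\
0 & 0 & -1 & 1 & 0 \\
0 & 0 & 0 & -1 & 1 \\
1 & 0 & 0 & 0 & -1
\end{pmatrix},
\qquad
\mathbf{u} = \begin{pmatrix} 1 \\ 2 \\ 2 \\ 2 \\ 1 \end{pmatrix}
$$

What is $\mathbf{v}_{ss}$?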

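Linear Feedforward Network

$$
\mathbf{v}_{ss} = W \mathbf{u} =
\begin{pmatrix}
1 & 0 & 0 & 0 & -1 \\
-1 & 1 & 0 & 0 & 0 \\
0 & -1 & 1 & 0 & 0 \\
0 & 0 & -1 & 1 & 0 \\
0 & 0 & 0 & -1 & 1 \\
1 & 0 & 0 & 0 & -1
\end{pmatrix}
\begin{pmatrix} 1 \\ 2 \\ 2 \\ 2 \\ 1 \end{pmatrix}
=
\begin{pmatrix} 0 \\ 1 \\ 0 \\ 0 \\ -1 \\ 0 \end{pmatrix}
$$

What is the network doing?

Linear Filtering for Edge Detection

Filter: $[\,0 \;\; {-1} \;\; 1 \;\; 0 \;\; 0\,]$ (and shifted versions in $W$)

[Figure: input and output plotted against position. The output is nonzero only where the input changes.]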
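The steady state of the linear feedforward example can be checked with a few lines of code. This sketch assumes the sign pattern of $W$ in which every row holds one $+1$ and one $-1$ (a shifted copy of the difference filter, with wrap-around in the first and last rows); the time constant and step size in `relax` are arbitrary illustrative values.

```python
W = [
    [ 1,  0,  0,  0, -1],
    [-1,  1,  0,  0,  0],
    [ 0, -1,  1,  0,  0],
    [ 0,  0, -1,  1,  0],
    [ 0,  0,  0, -1,  1],
    [ 1,  0,  0,  0, -1],
]
u = [1, 2, 2, 2, 1]

def matvec(matrix, vector):
    """Matrix-vector product using plain Python lists."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

# Steady state of tau dv/dt = -v + W u is v_ss = W u.
v_ss = matvec(W, u)   # -> [0, 1, 0, 0, -1, 0]

def relax(W, u, tau=0.010, dt=0.001, t_max=0.1):
    """Euler integration of tau dv/dt = -v + W u, starting from v = 0."""
    drive = matvec(W, u)
    v = [0.0] * len(W)
    for _ in range(int(round(t_max / dt))):
        v = [vi + dt / tau * (-vi + di) for vi, di in zip(v, drive)]
    return v

v_final = relax(W, u)   # approaches v_ss after several time constants
```

The output is $+1$ where the input steps up from 1 to 2, $-1$ where it steps back down, and 0 wherever neighboring inputs are equal.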

Example of Edge Detection in a 2D Image

[Figure: edge detection applied to a 2D image. Source: http://www.alexandria.nu/ai/blog/entry.asp?E=51]

Edge detectors in the visual system

[Figure: examples of receptive fields in primary visual cortex (V1). From Nicholls et al., 1992.]

Filtering network is computing derivatives!

First derivative, filter $[\,0 \;\; {-1} \;\; 1 \;\; 0 \;\; 0\,]$:

$$\frac{df}{dx} = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}$$

Discrete approximation: $f(x + 1) - f(x)$

Second derivative, filter $[\,0 \;\; 1 \;\; {-2} \;\; 1 \;\; 0\,]$:

$$\frac{d^2 f}{dx^2} = \lim_{h \to 0} \frac{f'(x + h) - f'(x)}{h}$$

Discrete approximation: $[f(x + 1) - f(x)] - [f(x) - f(x - 1)] = f(x + 1) - 2 f(x) + f(x - 1)$

Feedforward Networks: Example 2

Coordinate Transformation

- Output: premotor cortex neuron with body-based tuning curves
- Input: area 7a neurons with gaze-dependent tuning curves

(From Section 7.3 in Dayan & Abbott)
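The two discrete derivative approximations above can be tried on a sampled function. In this sketch the helper `apply_filter` and its `offset` argument are names introduced for the example, and $f(x) = x^2$ is an arbitrary test function.

```python
def apply_filter(signal, weights, offset):
    """Slide `weights` along `signal`.

    weights[k] multiplies signal[i + k - offset], so `offset` is the index
    within `weights` that lines up with position i. Only positions where
    the filter fits entirely inside the signal are returned.
    """
    n, m = len(signal), len(weights)
    out = []
    for i in range(offset, n - (m - 1 - offset)):
        out.append(sum(w * signal[i + k - offset]
                       for k, w in enumerate(weights)))
    return out

f = [x * x for x in range(6)]   # f(x) = x^2 sampled at x = 0..5

# First difference: f(x+1) - f(x)
first = apply_filter(f, [-1, 1], offset=0)       # -> [1, 3, 5, 7, 9]

# Second difference: f(x+1) - 2 f(x) + f(x-1)
second = apply_filter(f, [1, -2, 1], offset=1)   # -> [2, 2, 2, 2]
```

For $f(x) = x^2$ the second difference equals the true second derivative, 2, at every interior point, while the first difference gives $2x + 1$ rather than $2x$ because it uses a step of $h = 1$ instead of the limit $h \to 0$.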

Output of Coordinate Transformation Network

[Figure: head fixed; gaze shifted to $g_1$, $g_2$, $g_3$. The tuning curve is the same regardless of gaze angle.]

The premotor cortex neuron responds to stimulus location relative to the body, not to retinal image location. (See Section 7.3 in Dayan & Abbott for details.)

Linear Recurrent Networks

$$\tau \frac{d\mathbf{v}}{dt} = -\mathbf{v} + W \mathbf{u} + M \mathbf{v}$$

The terms are: output decay ($-\mathbf{v}$), input ($W \mathbf{u}$), and feedback ($M \mathbf{v}$).
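The linear recurrent equation can be integrated in the same way as the feedforward one. The sketch below uses a hypothetical two-neuron network; the matrices, input, time constant, and step size are all illustrative choices rather than values from the lecture.

```python
def simulate_recurrent(W, M, u, tau=0.010, dt=0.0005, t_max=0.5):
    """Euler integration of tau dv/dt = -v + W u + M v, starting from v = 0."""
    drive = [sum(w * x for w, x in zip(row, u)) for row in W]
    v = [0.0] * len(M)
    for _ in range(int(round(t_max / dt))):
        feedback = [sum(m * x for m, x in zip(row, v)) for row in M]
        v = [vi + dt / tau * (-vi + di + fi)
             for vi, di, fi in zip(v, drive, feedback)]
    return v

W = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical feedforward weights (identity)
M = [[0.0, 0.5], [0.5, 0.0]]   # hypothetical feedback, eigenvalues +0.5 and -0.5
u = [1.0, 0.0]                 # input drives only the first neuron

v = simulate_recurrent(W, M, u)
```

Setting $d\mathbf{v}/dt = 0$ gives $\mathbf{v} = W\mathbf{u} + M\mathbf{v}$, which for these values has the solution $v_1 = 4/3$, $v_2 = 2/3$. The feedback amplifies the driven neuron's response above its feedforward value of 1 and spreads activity to the undriven neuron. The simulation settles only because the eigenvalues of this $M$ are smaller than 1.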

Next Class: Recurrent Networks

To do:

- Homework 2
- Find a final project topic and partner(s)
