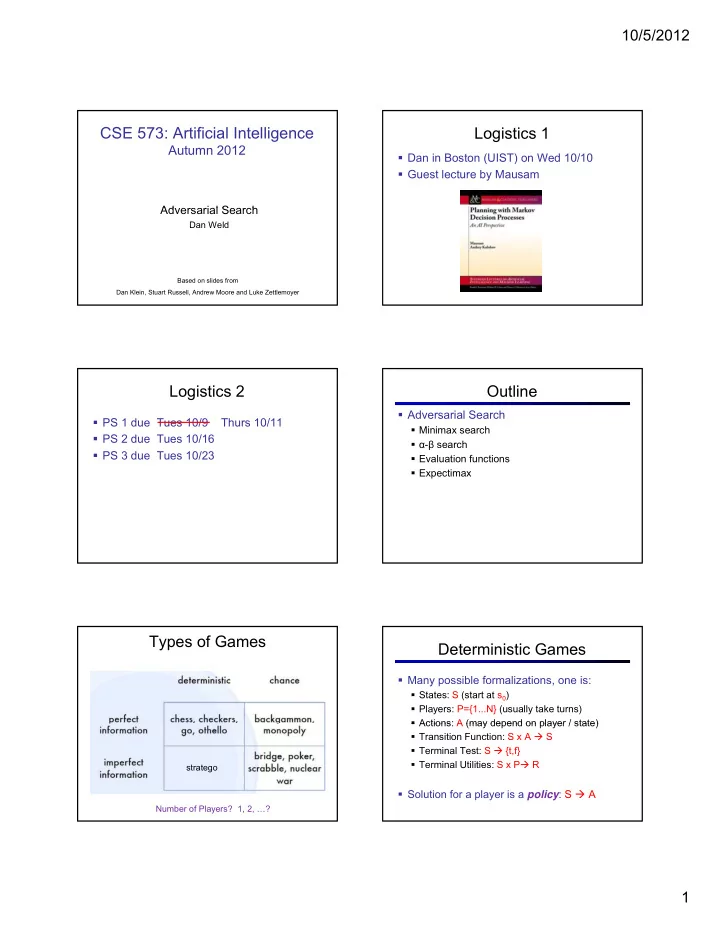

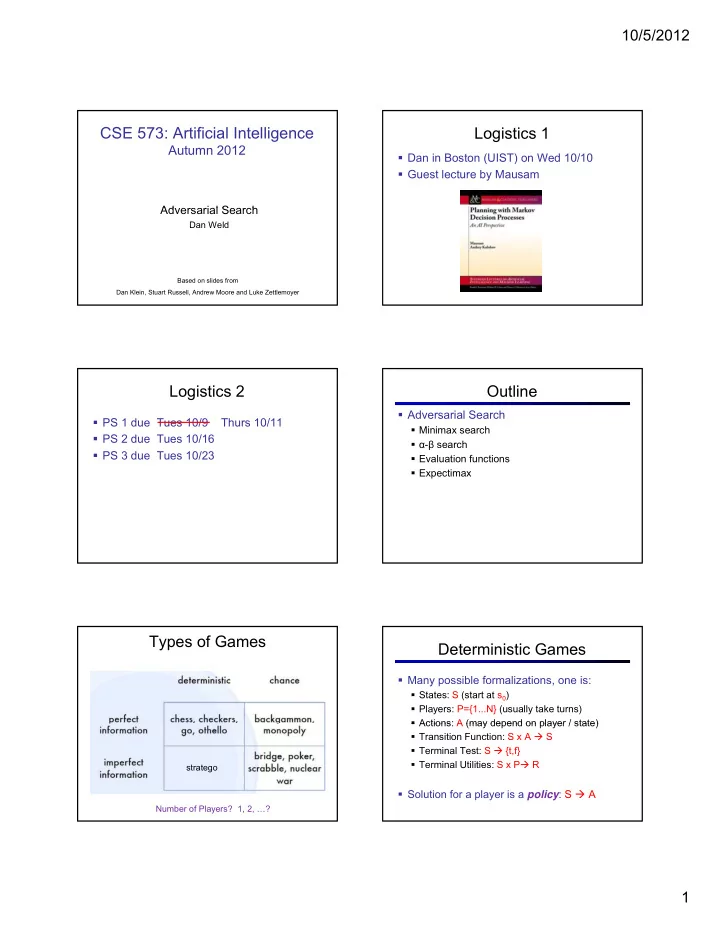

10/5/2012

CSE 573: Artificial Intelligence
Autumn 2012
Adversarial Search
Dan Weld
Based on slides from Dan Klein, Stuart Russell, Andrew Moore and Luke Zettlemoyer

Logistics 1
- Dan in Boston (UIST) on Wed 10/10
- Guest lecture by Mausam

Logistics 2
- PS 1 due Tues 10/9 Thurs 10/11
- PS 2 due Tues 10/16
- PS 3 due Tues 10/23

Outline
- Adversarial Search
- Minimax search
- α-β search
- Evaluation functions
- Expectimax

Types of Games
- Number of Players? 1, 2, …?

[Figure: Stratego]

Deterministic Games
- Many possible formalizations, one is:
  - States: S (start at s_0)
  - Players: P = {1...N} (usually take turns)
  - Actions: A (may depend on player / state)
  - Transition Function: S × A → S
  - Terminal Test: S → {t, f}
  - Terminal Utilities: S × P → R
- Solution for a player is a policy: S → A
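One way to make the formalization concrete is to write each component as a function. The sketch below does this for a hypothetical toy game that is not from the slides: two players (numbered 0 and 1) alternately remove 1 or 2 stones from a pile, and whoever takes the last stone wins. All names (`start_state`, `actions`, `transition`, `is_terminal`, `utility`) are illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical toy game (an assumption, not from the slides).
# A state is (stones remaining, player to move); players are 0 and 1.
State = Tuple[int, int]


def start_state(stones: int = 4) -> State:
    """s_0: a full pile, player 0 moves first."""
    return (stones, 0)


def actions(state: State) -> List[int]:
    """A: legal moves depend on the state (cannot take more than remain)."""
    stones, _ = state
    return [k for k in (1, 2) if k <= stones]


def transition(state: State, action: int) -> State:
    """Transition function S x A -> S: remove stones, pass the turn."""
    stones, player = state
    return (stones - action, 1 - player)


def is_terminal(state: State) -> bool:
    """Terminal test S -> {t, f}: the game ends when the pile is empty."""
    return state[0] == 0


def utility(state: State, player: int) -> float:
    """Terminal utilities S x P -> R: +1 for the winner, -1 for the loser.

    At a terminal state the player to move did NOT take the last stone,
    so that player lost.
    """
    stones, to_move = state
    if stones != 0:
        raise ValueError("utility is only defined on terminal states")
    return -1.0 if player == to_move else 1.0


s = transition(start_state(3), 2)   # player 0 takes 2 of 3 stones
s = transition(s, 1)                # player 1 takes the last stone
print(is_terminal(s), utility(s, 1), utility(s, 0))
```

Because the two utilities always sum to zero, this toy game is also an instance of the zero-sum setting.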

Deterministic Two-Player
- E.g. tic-tac-toe, chess, checkers
- Zero-sum games
  - One player maximizes result
  - The other minimizes result
- Minimax search
  - A state-space search tree
  - Players alternate
  - Choose move to position with highest minimax value = best achievable utility against best play

[Figure: game tree with a max level above a min level; leaf values 8, 2, 5, 6]

Tic-tac-toe Game Tree

[Figure: tic-tac-toe game tree]

Minimax Example

[Figure: step-by-step minimax on a tree with alternating max and min levels; backed-up values shown include 3 and 2]
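A minimal sketch of the minimax value computation, assuming the game tree is given explicitly as nested Python lists whose leaves are utilities. The tree shape used here (a max root over two min nodes with leaves 8, 2 and 5, 6) is an assumption about how the leaf values above are grouped.

```python
def minimax(node, maximizing=True):
    """Return the minimax value of an explicit game tree.

    A leaf is a number (its utility); an internal node is a list of children.
    Levels alternate between the maximizing and the minimizing player.
    """
    if not isinstance(node, list):
        return node  # terminal state: return its utility
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


# Assumed shape: max root, two min children.
tree = [[8, 2], [5, 6]]
print(minimax(tree))  # min(8, 2) = 2, min(5, 6) = 5, max(2, 5) = 5
```

The explicit-tree representation keeps the example short; a real game would generate successors from a transition function instead of storing the tree.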

Minimax Example

[Figure: completed minimax tree; max root with value 3 over min nodes with values 3, 2, 2]

Minimax Search

Minimax Properties
- Optimal? Yes, against perfect player. Otherwise?
- Time complexity? O(b^m)
- Space complexity? O(bm)
- For chess, b ≈ 35, m ≈ 100
  - Exact solution is completely infeasible
  - But, do we need to explore the whole tree?

Do We Need to Evaluate Every Node?

[Figure: max root over min nodes; leaf values shown include 10, 10, 9, 100]

α-β Pruning Example

[Figure: partially searched tree with values 3, 2, 3 and an unexplored node marked "?"; caption: Progress of search…]

α-β Pruning
- α is the best value that MAX can get at any choice point along the current path
- If n becomes worse than α, MAX will avoid it, so can stop considering n's other children
- Define β similarly for MIN

[Figure: path alternating between Player and Opponent levels, with α marked at a Player node and node n further down]
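As a rough worked estimate of why the exact solution is infeasible, plugging the chess figures above into the time bound gives (using $\log_{10} 35 \approx 1.544$):

$$
b^m \approx 35^{100} = 10^{100 \log_{10} 35} \approx 10^{154}
$$

This is an order-of-magnitude figure only, since b and m are themselves approximations.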

Alpha-Beta Pseudocode

    inputs: state, current game state
            α, value of best alternative for MAX on path to state
            β, value of best alternative for MIN on path to state
    returns: a utility value

    function MAX-VALUE(state, α, β)
        if TERMINAL-TEST(state) then return UTILITY(state)
        v ← −∞
        for a, s in SUCCESSORS(state) do
            v ← MAX(v, MIN-VALUE(s, α, β))
            if v ≥ β then return v
            α ← MAX(α, v)
        return v

    function MIN-VALUE(state, α, β)
        if TERMINAL-TEST(state) then return UTILITY(state)
        v ← +∞
        for a, s in SUCCESSORS(state) do
            v ← MIN(v, MAX-VALUE(s, α, β))
            if v ≤ α then return v
            β ← MIN(β, v)
        return v

Alpha-Beta Pruning Example
- At max node: prune if v ≥ β; update α
- At min node: prune if v ≤ α; update β
- α is MAX's best alternative here or above
- β is MIN's best alternative here or above

[Figure: alpha-beta example tree; values shown: 0, 7, 4, 2, 1, 5, 6, 2, 3, 5, 9]

Alpha-Beta Pruning Properties
- This pruning has no effect on final result at the root
- Values of intermediate nodes might be wrong!
  - but, they are bounds
- Good child ordering improves effectiveness of pruning
- With "perfect ordering":
  - Time complexity drops to O(b^(m/2))
  - Doubles solvable depth!
  - Full search of, e.g. chess, is still hopeless…

Resource Limits
- Cannot search to leaves
- Depth-limited search
  - Instead, search a limited depth of tree
  - Replace terminal utilities with heuristic eval function for non-terminal positions
- Guarantee of optimal play is gone
- Example:
  - Suppose we have 100 seconds, can explore 10K nodes / sec
  - So can check 1M nodes per move
  - α-β reaches about depth 8: decent chess program

[Figure: depth-limited tree; max root with value 4, min nodes with values -2 and 4, leaf values -1, -2, 4, 9, unexplored subtrees marked "?"]

Heuristic Evaluation Function
- Function which scores non-terminals
- Ideal function: returns the utility of the position
- In practice: typically weighted linear sum of features: Eval(s) = w_1 f_1(s) + w_2 f_2(s) + … + w_n f_n(s)
- e.g. f_1(s) = (num white queens - num black queens), etc.

Evaluation for Pacman
- What features would be good for Pacman?
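The alpha-beta pseudocode can be sketched in Python as follows, assuming the game tree is given explicitly as nested lists with numeric leaves. The example tree and the `visited` bookkeeping list are illustrative assumptions (the tree is a common textbook-style example, not the figure from these slides).

```python
import math


def max_value(node, alpha, beta, visited):
    """Value of a MAX node; alpha/beta are the best alternatives on the path."""
    if not isinstance(node, list):
        visited.append(node)  # bookkeeping only: record evaluated leaves
        return node
    v = -math.inf
    for child in node:
        v = max(v, min_value(child, alpha, beta, visited))
        if v >= beta:
            return v  # MIN above would never allow this node: prune
        alpha = max(alpha, v)
    return v


def min_value(node, alpha, beta, visited):
    """Value of a MIN node; mirror image of max_value."""
    if not isinstance(node, list):
        visited.append(node)
        return node
    v = math.inf
    for child in node:
        v = min(v, max_value(child, alpha, beta, visited))
        if v <= alpha:
            return v  # MAX above already has something at least as good: prune
        beta = min(beta, v)
    return v


# Assumed example: max root over three min nodes.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
visited = []
print(max_value(tree, -math.inf, math.inf, visited))  # 3
print(visited)  # [3, 12, 8, 2, 14, 5, 2]: leaves 4 and 6 were pruned
```

The root value matches what plain minimax would return; only the number of leaves evaluated changes (7 of 9 here). Reordering the children can change how much is pruned, which is the child-ordering effect noted above.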

Which algorithm?
- α-β, depth 4, simple eval fun

[Animation not available]

Why Pacman Starves
- He knows his score will go up by eating the dot now
- He knows his score will go up just as much by eating the dot later on
- There are no point-scoring opportunities after eating the dot
- Therefore, waiting seems just as good as eating

Which algorithm?
- α-β, depth 4, better eval fun

[Animation not available]

Which Algorithm?
- Minimax: no point in trying
- 3 ply look ahead, ghosts move randomly

[Animation not available]

Which Algorithm?
- Expectimax: wins some of the time
- 3 ply look ahead, ghosts move randomly

[Animation not available]

Stochastic Single-Player
- What if we don't know what the result of an action will be? E.g.,
  - In solitaire, shuffle is unknown
  - In minesweeper, mine locations
- Can do expectimax search
  - Chance nodes, like actions except the environment controls the action chosen
  - Max nodes as before
  - Chance nodes take average (expectation) of value of children
- Soon, we'll generalize this problem to a Markov Decision Process

[Figure: max root over "average" (chance) nodes; leaf values 10, 4, 5, 7]
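A minimal expectimax sketch for the single-player case, assuming an explicit tree whose internal nodes are tagged `"max"` or `"chance"` and whose chance outcomes are equally likely. The tags, the uniform-probability assumption, and the grouping of the leaves 10, 4 and 5, 7 under two chance nodes are all assumptions made for illustration.

```python
def expectimax(node):
    """Return the expectimax value of an explicit tree.

    A leaf is a number. An internal node is a pair (kind, children) where
    kind is "max" (the agent chooses) or "chance" (the environment chooses,
    assumed here to pick each child with equal probability).
    """
    if isinstance(node, (int, float)):
        return node
    kind, children = node
    values = [expectimax(child) for child in children]
    if kind == "max":
        return max(values)
    if kind == "chance":
        return sum(values) / len(values)  # expectation under uniform outcomes
    raise ValueError(f"unknown node kind: {kind!r}")


# Assumed shape: max root over two chance nodes.
tree = ("max", [("chance", [10, 4]), ("chance", [5, 7])])
print(expectimax(tree))  # averages are 7.0 and 6.0, so the max is 7.0
```

With non-uniform outcomes, the chance case would become a probability-weighted sum instead of a plain average.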

Reminder: Probabilities
- A random variable models an event with unknown outcome
- A probability distribution assigns weights to outcomes
- Example: traffic on freeway?
  - Random variable: T = whether there's traffic
  - Outcomes: T in {none, light, heavy}
  - Distribution: P(T=none) = 0.25, P(T=light) = 0.55, P(T=heavy) = 0.20
- Some laws of probability (read ch 13):
  - Probabilities are always non-negative
  - Probabilities over all possible outcomes sum to one
- As we get more evidence, probabilities may change:
  - P(T=heavy) = 0.20, P(T=heavy | Hour=5pm) = 0.60
  - We'll talk about methods for reasoning and updating probabilities later

Maximum Expected Utility
- Why should we average utilities? Why not minimax?
- Principle of maximum expected utility: an agent should choose the action which maximizes its expected utility, given its knowledge
  - General principle for decision making
  - Often taken as the definition of rationality
  - We'll see this idea over and over in this course!
- Let's decompress this definition…

What are Probabilities?
- Objectivist / frequentist answer:
  - Averages over repeated experiments
  - E.g. empirically estimating P(rain) from historical observation
  - E.g. pacman's estimate of what the ghost will do, given what it has done in the past
  - Assertion about how future experiments will go (in the limit)
  - Makes one think of inherently random events, like rolling dice
- Subjectivist / Bayesian answer:
  - Degrees of belief about unobserved variables
  - E.g. an agent's belief that it's raining, given the temperature
  - E.g. pacman's belief that the ghost will turn left, given the state
  - Often learn probabilities from past experiences (more later)
  - New evidence updates beliefs (more later)

Uncertainty Everywhere
- Not just for games of chance!
  - I'm sick: will I sneeze this minute?
  - Email contains "FREE!": is it spam?
  - Tummy hurts: have appendicitis?
  - Robot rotated wheel three times: how far did it advance?
- Sources of uncertainty in random variables:
  - Inherently random process (dice, opponent, etc)
  - Insufficient or weak evidence
  - Ignorance of underlying processes
  - Unmodeled variables
  - The world's just noisy: it doesn't behave according to plan!

Review: Expectations
- Real valued functions of random variables: f : X → R
- Expectation of a function of a random variable: E[f(X)] = Σ_x f(x) P(x)
- Example: Expected value of a fair die roll

| X | P   | f |
|---|-----|---|
| 1 | 1/6 | 1 |
| 2 | 1/6 | 2 |
| 3 | 1/6 | 3 |
| 4 | 1/6 | 4 |
| 5 | 1/6 | 5 |
| 6 | 1/6 | 6 |

Utilities
- Utilities are functions from outcomes (states of the world) to real numbers that describe an agent's preferences
- Where do utilities come from?
  - In a game, may be simple (+1/-1)
  - Utilities summarize the agent's goals
  - Theorem: any set of preferences between outcomes can be summarized as a utility function (provided the preferences meet certain conditions)
- In general, we hard-wire utilities and let actions emerge (why don't we let agents decide their own utilities?)
- More on utilities soon…
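The fair-die example can be checked with a short sketch. It assumes a distribution is represented as a dictionary from outcomes to probabilities; the helper name `expectation` is illustrative, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def expectation(dist, f=lambda x: x):
    """E[f(X)]: sum over outcomes x of f(x) * P(x).

    dist maps each outcome to its probability (assumed to sum to one).
    """
    return sum(f(x) * p for x, p in dist.items())


# Fair six-sided die: each face has probability 1/6, and f(x) = x.
die = {face: Fraction(1, 6) for face in range(1, 7)}
print(expectation(die))  # 7/2, i.e. 3.5
```

Passing a different `f` gives the expectation of any real-valued function of the roll, for example `expectation(die, lambda x: x * x)`.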
