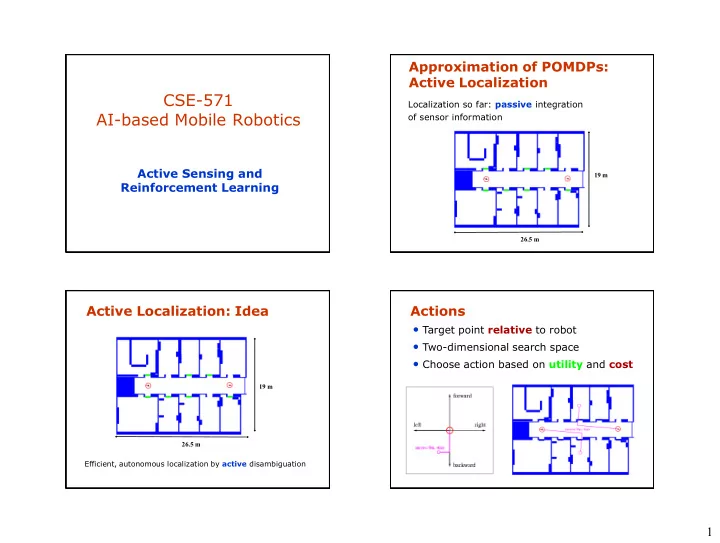

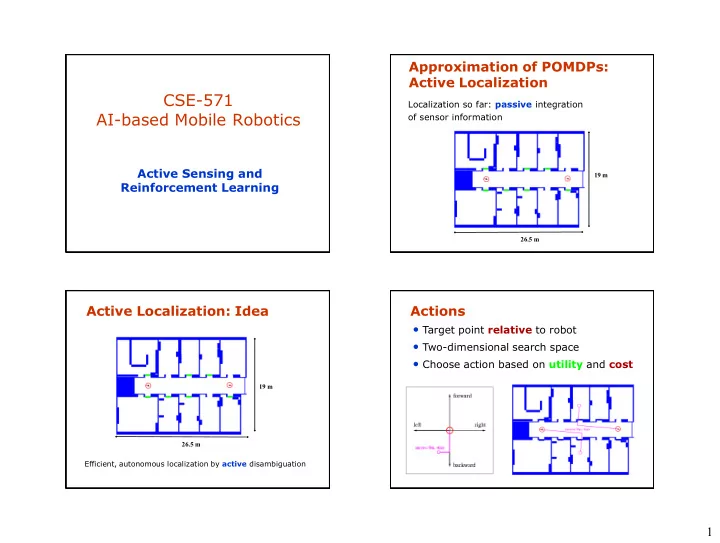

CSE-571 AI-based Mobile Robotics
Active Sensing and Reinforcement Learning

Approximation of POMDPs: Active Localization
• Localization so far: passive integration of sensor information
[Figure: map of the environment, 19 m × 26.5 m]

Active Localization: Idea
• Efficient, autonomous localization by active disambiguation
[Figure: map of the environment, 19 m × 26.5 m]

Actions
• Target point relative to robot
• Two-dimensional search space
• Choose action based on utility and cost

Utilities
• Given by change in uncertainty
• Uncertainty measured by entropy

$$H(X) = -\sum_x Bel(x) \log Bel(x)$$

$$U(a) = H(X) - E_a[H(X)]$$

$$E_a[H(X)] = -\sum_{z,x} p(z \mid x)\, Bel(x \mid a) \log \frac{p(z \mid x)\, Bel(x \mid a)}{p(z \mid a)}$$

Costs: Occupancy Probabilities
• Costs are based on occupancy probabilities

$$p_{occ}(a) = \sum_x Bel(x)\, p_{occ}(f_a(x))$$

Costs: Optimal Path
• Given by cost-optimal path to the target
• Cost-optimal path determined through value iteration

$$C(a) = p_{occ}(a) + \min_b [C(b)]$$

Action Selection
• Choose action based on expected utility and costs

$$a^* = \arg\max_a \big( U(a) - C(a) \big)$$

• Execution:
  • cost-optimal path
  • reactive collision avoidance
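The utility and action-selection formulas on these slides can be illustrated with a minimal sketch over a discrete belief. Everything concrete here is an assumption made for illustration: the four-cell world, the two actions `stay` and `move`, the binary sensor model, and the cost values are hypothetical and do not come from the lecture.

```python
import numpy as np

def entropy(bel):
    """H(X) = -sum_x Bel(x) log Bel(x), skipping zero-probability cells."""
    bel = np.asarray(bel, dtype=float)
    nz = bel > 0
    return float(-np.sum(bel[nz] * np.log(bel[nz])))

def expected_entropy(bel_a, p_z_given_x):
    """E_a[H(X)] for a predicted belief Bel(x|a) and a sensor model p(z|x).

    p_z_given_x has shape (num_observations, num_cells).
    """
    bel_a = np.asarray(bel_a, dtype=float)
    joint = np.asarray(p_z_given_x, dtype=float) * bel_a   # p(z|x) Bel(x|a)
    p_z = joint.sum(axis=1, keepdims=True)                 # p(z|a)
    nz = joint > 0
    posterior = joint[nz] / np.broadcast_to(p_z, joint.shape)[nz]
    return float(-np.sum(joint[nz] * np.log(posterior)))

def select_action(bel, predicted, p_z_given_x, costs):
    """Return argmax_a (U(a) - C(a)) together with the per-action scores."""
    h_now = entropy(bel)
    scores = {
        a: h_now - expected_entropy(bel_a, p_z_given_x) - costs[a]
        for a, bel_a in predicted.items()
    }
    return max(scores, key=scores.get), scores

# Hypothetical toy world: the robot is in cell 0 or cell 2, which look alike.
bel = [0.5, 0.0, 0.5, 0.0]
predicted = {
    "stay": [0.5, 0.0, 0.5, 0.0],   # Bel(x | stay)
    "move": [0.0, 0.5, 0.0, 0.5],   # Bel(x | move): shifted by one cell
}
# Rows are observations z, columns are cells x; cells 1 and 3 look different.
p_z_given_x = [[1.0, 0.9, 1.0, 0.1],
               [0.0, 0.1, 0.0, 0.9]]
costs = {"stay": 0.0, "move": 0.1}   # assumed path costs C(a)

best, scores = select_action(bel, predicted, p_z_given_x, costs)
print(best, scores)
```

In this toy setting, staying in place yields no expected entropy reduction because both candidate cells produce the same observation, while moving leads to cells the sensor can tell apart, so the move wins despite its higher cost.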

Experimental Results
• Random navigation failed in 9 out of 10 test runs
• Active localization succeeded in all 20 test runs

RL for Active Sensing

Active Sensing
• Sensors have limited coverage & range
• Question: Where to move / point sensors?
• Typical scenario: Uncertainty in only one type of state variable
  • Robot location [Fox et al., 98; Kroese & Bunschoten, 99; Roy & Thrun 99]
  • Object / target location(s) [Denzler & Brown, 02; Kreuchner et al., 04, Chung et al., 04]
• Predominant approach: Minimize expected uncertainty (entropy)

Active Sensing in Multi-State Domains
• Uncertainty in multiple, different state variables
  • Robocup: robot & ball location, relative goal location, …
• Which uncertainties should be minimized?
  • Importance of uncertainties changes over time.
  • Ball location has to be known very accurately before a kick.
  • Accuracy not important if ball is on other side of the field.
• Has to consider sequence of sensing actions!
• RoboCup: typically use hand-coded strategies.

Converting Beliefs to Augmented States
[Figure labels: Belief; Augmented state; State variables; Uncertainty variables]

Projected Uncertainty (Goal Orientation)
[Figure: panels (a) to (d); labels: Goal, r, g]

Why Reinforcement Learning?
• No accurate model of the robot and the environment.
• Particularly difficult to assess how (projected) entropies evolve over time.
• Possible to simulate robot and noise in actions and observations.

Least-squares Policy Iteration
• Model-free approach
• Approximates Q-function by linear function of state features

$$Q^{\pi}(s, a) \approx \hat{Q}(s, a; w) = \sum_{j=1}^{k} \phi_j(s, a)\, w_j$$

• No discretization needed
• No iterative procedure needed for policy evaluation
• Off-policy: can re-use samples
[Lagoudakis and Parr ’01,’03]
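The claim that policy evaluation needs no iterative procedure can be made concrete with a small sketch of an LSTDQ-style solve: the weights of the linear Q-function come from one linear system built from samples. This is a simplified illustration, not the lecture's implementation. The two-state MDP, the one-hot features `phi`, the discount `gamma`, and the small `ridge` term (added so the matrix stays invertible) are all assumptions.

```python
import numpy as np

def lstdq(samples, phi, policy, k, gamma=0.9, ridge=1e-6):
    """Solve A w = b for the weights of Q_hat(s, a; w) = phi(s, a) . w.

    samples: (s, a, r, s_next) tuples; they may come from any behavior
    policy, which is what makes sample re-use (off-policy) possible.
    """
    A = ridge * np.eye(k)          # assumed regularizer, keeps A invertible
    b = np.zeros(k)
    for s, a, r, s_next in samples:
        f = phi(s, a)
        f_next = phi(s_next, policy(s_next))   # features under evaluated policy
        A += np.outer(f, f - gamma * f_next)
        b += r * f
    return np.linalg.solve(A, b)

# Hypothetical 2-state, 2-action MDP: action a moves to state a,
# and arriving in state 1 pays reward 1.
def phi(s, a):
    f = np.zeros(4)
    f[2 * s + a] = 1.0             # one-hot feature per (state, action) pair
    return f

samples = [(s, a, float(a == 1), a) for s in (0, 1) for a in (0, 1)]
always_one = lambda s: 1           # policy being evaluated
w = lstdq(samples, phi, always_one, k=4)
print(np.round(w, 3))              # approximately [9, 10, 9, 10]
```

With one-hot features the solve recovers the tabular Q-values of the evaluated policy: choosing action 1 forever is worth 1 / (1 - 0.9) = 10, and a single detour through action 0 is worth 0.9 × 10 = 9.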

Least-squares Policy Iteration
• Initialize $\pi' \leftarrow \pi_0$
• Repeat
  • $\pi \leftarrow \pi'$
  • Estimate Q-function from samples $S$

$$w \leftarrow \mathrm{LSTD}Q(S, \phi, \pi)$$

$$\hat{Q}(s, a; w) = \sum_{j=1}^{k} \phi_j(s, a)\, w_j$$

  • Update policy

$$\pi'(s) = \arg\max_{a \in A} \hat{Q}(s, a; w)$$

• Until $(\pi \approx \pi')$

Application: Active Sensing for Goal Scoring
• Task: AIBO trying to score goals
• Sensing actions: looking at ball, or the goals, or the markers
• Fixed motion control policy: Uses most likely states to dock the robot to the ball, then kicks the ball into the goal.
• Find sensing strategy that “best” supports the given control policy.
[Figure labels: Robot, Ball, Goal, Marker]

Augmented State Space and Features
• State variables:
  • Distance to ball
  • Ball orientation
• Uncertainty variables:
  • Ent. of ball location
  • Ent. of robot location
  • Ent. of goal orientation
• Features:

$$\phi(s, a) = \langle d_b,\ \theta_b,\ H_b,\ H_r,\ H_{\theta_g},\ a,\ 1 \rangle$$

[Figure labels: Robot, Ball, Goal, $\theta_b$, $\theta_g$]

Experiments
• Strategy learned from simulation
• Episode ends when:
  • Scores (reward +5)
  • Misses (reward 1.5 – 0.1)
  • Loses track of the ball (reward -5)
  • Fails to dock / accidentally kicks the ball away (reward -5)
• Applied to real robot
• Compared with 2 hand-coded strategies
  • Panning: robot periodically scans
  • Pointing: robot periodically looks up at markers/goals
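The repeat/until loop of least-squares policy iteration can be sketched end to end on a toy problem. This is a minimal illustration under stated assumptions rather than the setup used for the AIBO experiments: the three-state chain, the one-hot features, the discount factor, the ridge term, and the choice to stop when the weights stop changing (a common stand-in for testing that the policy no longer changes) are all hypothetical.

```python
import numpy as np

ACTIONS = (0, 1)                   # 0 = left, 1 = right (hypothetical chain)
K = 6                              # 3 states x 2 actions, one-hot features

def phi(s, a):
    f = np.zeros(K)
    f[2 * s + a] = 1.0
    return f

def step(s, a):
    """Deterministic chain with states 0..2; reward 1 for landing in state 2."""
    s_next = min(max(s + (1 if a == 1 else -1), 0), 2)
    return (1.0 if s_next == 2 else 0.0), s_next

def lstdq(samples, policy, gamma, ridge=1e-6):
    """Estimate weights of the linear Q-function for the given policy."""
    A, b = ridge * np.eye(K), np.zeros(K)
    for s, a, r, s_next in samples:
        f = phi(s, a)
        A += np.outer(f, f - gamma * phi(s_next, policy(s_next)))
        b += r * f
    return np.linalg.solve(A, b)

def greedy(w):
    """Policy update: pi'(s) = argmax_a Q_hat(s, a; w)."""
    return lambda s: max(ACTIONS, key=lambda a: float(phi(s, a) @ w))

def lspi(samples, gamma=0.9, tol=1e-6, max_iter=50):
    w = np.zeros(K)                # initial policy: greedy w.r.t. all-zero weights
    for _ in range(max_iter):
        w_new = lstdq(samples, greedy(w), gamma)
        if np.linalg.norm(w_new - w) < tol:   # assumed stopping test
            return w_new
        w = w_new
    return w

# The same fixed batch of samples is re-used in every iteration.
samples = [(s, a, *step(s, a)) for s in range(3) for a in ACTIONS]
w = lspi(samples)
policy = greedy(w)
print([policy(s) for s in range(3)])
```

On this chain the learned policy moves right in every state, since state 2 is the only source of reward.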

[Figure: Rewards (simulation). Average rewards vs. episodes (0 to 700) for the Learned, Pointing, and Panning strategies]

[Figure: Success Ratio (simulation). Success ratio vs. episodes (0 to 700) for the Learned, Pointing, and Panning strategies]

Learned Strategy
• Initially, robot learns to dock (only looks at ball)
• Then, robot learns to look at goal and markers
• Robot looks at ball when docking
• Briefly before docking, adjusts by looking at the goal
• Prefers looking at the goal instead of markers for location information

Results on Real Robots
• 45 episodes of goal kicking

| Strategy | Goals | Misses | Avg. Miss Distance | Kick Failures |
|----------|-------|--------|--------------------|---------------|
| Learned  | 31    | 10     | 6 ± 0.3 cm         | 4             |
| Pointing | 22    | 19     | 9 ± 2.2 cm         | 4             |
| Panning  | 15    | 21     | 22 ± 9.4 cm        | 9             |

Adding Opponents
• Additional features: ball velocity, knowledge about other robots
[Figure labels: Robot, Opponent, Ball, Goal]

Learning With Opponents
[Figure: Lost Ball Ratio vs. episodes (0 to 700) for three curves: Learned with pre-trained data, Learned from scratch, Pre-trained]
• Robot learned to look at ball when opponent is close to it. Thereby avoids losing track of it.

Summary
• Learned effective sensing strategies that make good trade-offs between uncertainties
• Results on a real robot show improvements over carefully tuned, hand-coded strategies
• Augmented-MDP (with projections) good approximation for RL
• LSPI well suited for RL on augmented state spaces
