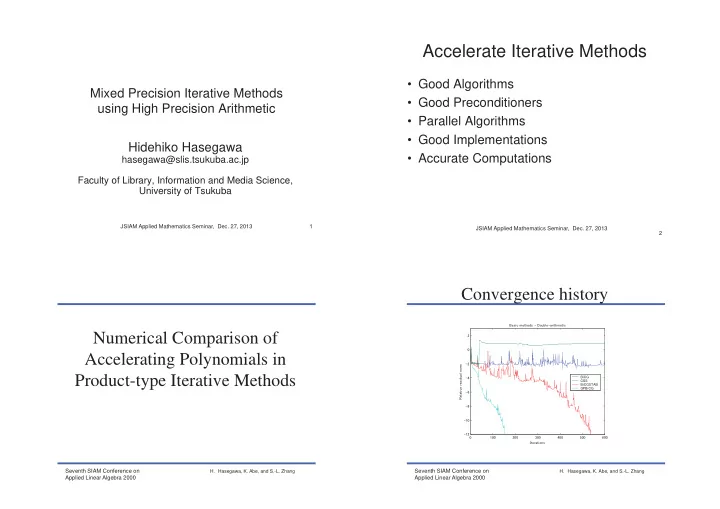

Mixed Precision Iterative Methods using High Precision Arithmetic

Hidehiko Hasegawa
hasegawa@slis.tsukuba.ac.jp
Faculty of Library, Information and Media Science, University of Tsukuba
JSIAM Applied Mathematics Seminar, Dec. 27, 2013

Accelerate Iterative Methods

• Good Algorithms
• Good Preconditioners
• Parallel Algorithms
• Good Implementations
• Accurate Computations

Numerical Comparison of Accelerating Polynomials in Product-type Iterative Methods

H. Hasegawa, K. Abe, and S.-L. Zhang
Seventh SIAM Conference on Applied Linear Algebra 2000

Convergence history

[Figure: convergence history]
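As background for the comparison, the residual of a product-type method is usually written as a product of two polynomials in the coefficient matrix. The notation below ($R_k$, $H_k$, $\omega_k$, $\zeta_k$, $\eta_k$) follows common textbook usage and is an assumption; it does not appear on the slides.

$$
\begin{aligned}
r_k &= H_k(A)\,R_k(A)\,r_0, \\
\text{CGS:}\quad H_k(\lambda) &= R_k(\lambda), \\
\text{Bi-CGSTAB:}\quad H_{k+1}(\lambda) &= (1 - \omega_k \lambda)\,H_k(\lambda), \\
\text{GPBi-CG:}\quad H_{k+1}(\lambda) &= (1 + \eta_k - \zeta_k \lambda)\,H_k(\lambda) - \eta_k\,H_{k-1}(\lambda).
\end{aligned}
$$

Here $R_k$ is the Bi-CG residual polynomial (the "Bi-CG part") and $H_k$ is the accelerating polynomial. In CGS the Bi-CG polynomial is applied twice, which is why it is described as "squared".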

Convergence history of Bi-CG part
(Bi-CG reconstructed using the alpha and beta of each method)

[Figure: convergence history of the Bi-CG part]

Convergence of Bi-CG part: Quadruple
(Bi-CG reconstructed using the alpha and beta of each method)

[Figure: convergence history of the Bi-CG part, quadruple arithmetic]

Convergence history based on one Bi-CG
(the alpha and beta of Bi-CG are used in all methods)

[Figure: convergence history based on one Bi-CG]

How does the Bi-CG part work?

• Bi-CGSTAB converges through the effect of the MR part (the Bi-CG part is still unstable).
• GPBi-CG makes the Bi-CG part stable.
• CGS did not converge in quadruple arithmetic.
• In quadruple arithmetic, simple Bi-CG is the best (Bi-CG is strongly affected by rounding errors).
• In quadruple arithmetic, the Bi-CG part of Bi-CGSTAB converges poorly even when Bi-CG itself converges.
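For reference, the Bi-CG recurrence whose alpha and beta coefficients are discussed above can be sketched as follows. This is a minimal textbook version of unpreconditioned Bi-CG in plain double precision, not the authors' code; the function name `bicg`, the choice of shadow residual (equal to the initial residual), and the stopping rule are assumptions made for illustration.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def matvec(A: Matrix, x: Vector) -> Vector:
    return [dot(row, x) for row in A]


def matvec_t(A: Matrix, x: Vector) -> Vector:
    # Product with the transpose of A, needed for the shadow sequence.
    return [sum(A[i][j] * x[i] for i in range(len(A))) for j in range(len(A[0]))]


def bicg(A: Matrix, b: Vector, tol: float = 1e-10,
         max_iter: int = 100) -> Tuple[Vector, List[float], List[float]]:
    """Unpreconditioned Bi-CG from x0 = 0; returns x and the alpha/beta histories."""
    x = [0.0] * len(b)
    r = list(b)            # residual r0 = b - A*x0 = b
    rt = list(r)           # shadow residual (assumed equal to r0)
    p, pt = list(r), list(rt)
    rho = dot(rt, r)
    b_norm = dot(b, b) ** 0.5
    alphas: List[float] = []
    betas: List[float] = []
    for _ in range(max_iter):
        q, qt = matvec(A, p), matvec_t(A, pt)
        denom = dot(pt, q)
        if rho == 0.0 or denom == 0.0:
            break          # breakdown: the recurrence cannot continue
        alpha = rho / denom
        alphas.append(alpha)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, q)]
        rt = [ri - alpha * qi for ri, qi in zip(rt, qt)]
        if dot(r, r) ** 0.5 <= tol * b_norm:
            break          # converged
        rho_new = dot(rt, r)
        beta = rho_new / rho
        betas.append(beta)
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        pt = [ri + beta * pi for ri, pi in zip(rt, pt)]
        rho = rho_new
    return x, alphas, betas
```

Recording `alphas` and `betas` is what makes it possible to replay the Bi-CG part on its own, or to feed one method's coefficients into another, in the spirit of the experiments described on these slides.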

Convergence history based on one Bi-CG
(Quadruple arithmetic is used for ALL)

[Figure: convergence history, quadruple arithmetic used throughout]

Convergence history based on one Bi-CG
(Quadruple arithmetic is used for Bi-CG)

[Figure: convergence history, quadruple arithmetic used for the Bi-CG part only]

How the accelerating polynomial works

• Quadruple arithmetic works very well.
• If enough accuracy was provided, Bi-CG was the best.
• Bi-CGSTAB and GPBi-CG work well.
• In quadruple arithmetic, the accelerating polynomial sometimes acts as a brake rather than an accelerator.
• GPBi-CG is robust under both conditions.
• CGS does not work under either condition because of “squared”.

Utilizing Quadruple-Precision Floating Point Arithmetic Operation for the Krylov Subspace Methods

SIAM Conference on Applied Linear Algebra 2003

[Figure: BiCG, Gamma = 1.3]

[Figure: CGS, Gamma = 1.3]

[Figure: BiCG, Gamma = 2.5]

[Figure: BiCGSTAB, Gamma = 1.3]

[Figure: BiCGSTAB, Gamma = 2.5]

[Figure: GPBiCG, Gamma = 2.5]

Observations

• Fast and smooth convergence is gained from more accurate computations.
• Required mantissa depends on the problem (BiCG):
  – 53 bit for Gamma = 1.3
  – 100 bit for Gamma = 1.7
  – 200 bit for Gamma = 2.1
  – 200 bit for Gamma = 2.5
• Required mantissa depends on the algorithm (Gamma = 2.5):
  – BiCG: 200 bit and 190 iterations
  – CGS: 300 bit and 160 x
  – BiCGSTAB: 1500 bit and 210 x
  – GPBiCG: 300 bit and 310

High Precision Arithmetic

• Reducing round-off errors
• Accelerating algorithms mathematically
• Not easy to use
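The observation that the required mantissa length depends on the problem can be illustrated with a toy example. The sketch below uses Python's standard `decimal` module as a stand-in for variable-length arithmetic; it works in decimal digits rather than bits, and the helper names `bits_to_digits` and `sum_with_mantissa` are hypothetical. It is not the experiment reported on the slides.

```python
import math
from decimal import Context, Decimal
from typing import Iterable


def bits_to_digits(bits: int) -> int:
    """Decimal digits needed to cover a mantissa of the given bit length."""
    return math.ceil(bits * math.log10(2))


def sum_with_mantissa(values: Iterable[str], bits: int) -> Decimal:
    """Sum the values left to right, rounding every addition to `bits` of mantissa."""
    ctx = Context(prec=bits_to_digits(bits))
    total = Decimal(0)
    for v in values:
        total = ctx.add(total, Decimal(v))
    return total


# A cancellation-prone sum: the exact answer is 1.
values = ["1e30", "1", "-1e30"]
low = sum_with_mantissa(values, 53)    # roughly double precision
high = sum_with_mantissa(values, 200)  # a longer mantissa
```

With about 53 bits the small term is rounded away before the cancellation happens, while a longer mantissa retains it. A problem with stronger cancellation needs a correspondingly longer mantissa.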

High Precision Arithmetic without any Special Hardware

• Symbolic Computation (Computer Algebra)
• Variable length Multiple Precision
  – GMP
  – MBLAS
  – exflib
• Fixed length Multiple Precision
  – FORTRAN REAL*16
  – IEEE
  – Double-double

Important points!

• Full or Partial: One Precision or Mixed Precisions
• Computing Environment: Compiler/Emulation/Interpreter
• Program Interface, API

Our Solution: Utilize Accurate Computations for Iterative Methods

• Use Double-double
• Use D-D vectors and Double Matrices (Mixed Precision Arithmetic Operations)
• Accelerate by SIMD
• Restart with different Precision
• Automatic Tuning

Advantages

• Tough for round-off errors
• Small Additional Memory
• Small Additional Communications
• Much Computations
• Applicable for ALL Iterative Methods (even if serial computation such as ILU)
• Good tools
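The idea of combining double-double (D-D) vectors with a double-precision matrix can be sketched as follows. This is a minimal illustration built on the standard error-free transformations (Knuth's two-sum and a Veltkamp/Dekker split product); the function names are hypothetical, the code is not the authors' implementation, and overflow and SIMD concerns are ignored.

```python
from typing import List, Tuple

DD = Tuple[float, float]  # (hi, lo): the represented value is hi + lo


def two_sum(a: float, b: float) -> DD:
    """Error-free addition: s = fl(a + b) and s + e == a + b exactly."""
    s = a + b
    bb = s - a
    e = (a - (s - bb)) + (b - bb)
    return s, e


def split(a: float) -> DD:
    """Veltkamp split of a double into two non-overlapping halves."""
    c = 134217729.0 * a  # 2**27 + 1
    hi = c - (c - a)
    return hi, a - hi


def two_prod(a: float, b: float) -> DD:
    """Error-free product: p = fl(a * b) and p + e == a * b exactly."""
    p = a * b
    ah, al = split(a)
    bh, bl = split(b)
    e = ((ah * bh - p) + ah * bl + al * bh) + al * bl
    return p, e


def dd_add(x: DD, y: DD) -> DD:
    """Double-double + double-double."""
    s, e = two_sum(x[0], y[0])
    e += x[1] + y[1]
    return two_sum(s, e)


def dd_mul_d(x: DD, a: float) -> DD:
    """Double-double * double: the mixed-precision product."""
    p, e = two_prod(x[0], a)
    e += x[1] * a
    return two_sum(p, e)


def matvec_d_dd(A: List[List[float]], x: List[DD]) -> List[DD]:
    """y = A x with a double matrix A and a double-double vector x."""
    y: List[DD] = []
    for row in A:
        acc: DD = (0.0, 0.0)
        for a_ij, x_j in zip(row, x):
            acc = dd_add(acc, dd_mul_d(x_j, a_ij))
        y.append(acc)
    return y
```

For example, `dd_add(dd_add((1.0, 0.0), (1e-20, 0.0)), (-1.0, 0.0))` keeps the small term that plain double arithmetic loses in `(1.0 + 1e-20) - 1.0`. Because the matrix stays in double precision, only the vectors need the extra word of storage, which matches the "small additional memory" point on the slide.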
