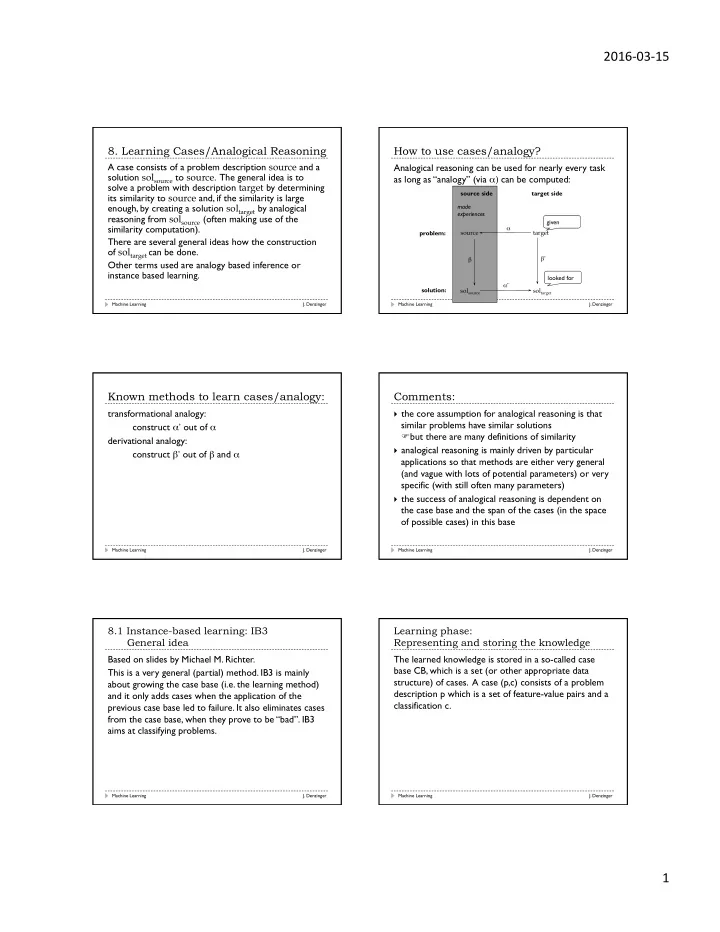

2016-03-15 8. Learning Cases/Analogical Reasoning How to use cases/analogy? A case consists of a problem description source and a Analogical reasoning can be used for nearly every task solution sol source to source . The general idea is to as long as “analogy” (via α ) can be computed: solve a problem with description target by determining source side target side its similarity to source and, if the similarity is large enough, by creating a solution sol target by analogical made experiences reasoning from sol source (often making use of the given similarity computation). α source target problem: There are several general ideas how the construction of sol target can be done. β ’ β Other terms used are analogy based inference or instance based learning. looked for α ’ solution: sol source sol target Machine Learning J. Denzinger Machine Learning J. Denzinger Known methods to learn cases/analogy: Comments: transformational analogy: } the core assumption for analogical reasoning is that similar problems have similar solutions construct α ’ out of α F but there are many definitions of similarity derivational analogy: } analogical reasoning is mainly driven by particular construct β ’ out of β and α applications so that methods are either very general (and vague with lots of potential parameters) or very specific (with still often many parameters) } the success of analogical reasoning is dependent on the case base and the span of the cases (in the space of possible cases) in this base Machine Learning J. Denzinger Machine Learning J. Denzinger 8.1 Instance-based learning: IB3 Learning phase: General idea Representing and storing the knowledge Based on slides by Michael M. Richter. The learned knowledge is stored in a so-called case base CB, which is a set (or other appropriate data This is a very general (partial) method. IB3 is mainly structure) of cases. A case (p,c) consists of a problem about growing the case base (i.e. the learning method) description p which is a set of feature-value pairs and a and it only adds cases when the application of the classification c. previous case base led to failure. It also eliminates cases from the case base, when they prove to be “bad”. IB3 aims at classifying problems. Machine Learning J. Denzinger Machine Learning J. Denzinger 1

2016-03-15 Learning phase: Learning phase: What or whom to learn from Learning method We learn from a sequence F 1 ,...,F n of training cases IB3 creates iteratively the case base CB in the following (although training can always be continued with every manner; it also computes for each element F in CB the new application of the method): measure CQ(F) = # of problems correctly classified with F / # of all problems classified with F: F i = (p i ,c i ) CB := {} For i := 1 to n do CB acep := {F ∈ CB| Acceptable(F)} If CB acep ≠ {} then F source := F‘ = (p‘,c‘) such that sim(F i ,F‘) is maximal else j := random(1,|CB|) F source := F‘ = (p‘,c‘) such that sim(F i ,F‘) is the j-largest for all F ∈ CB Machine Learning J. Denzinger Machine Learning J. Denzinger Learning phase: Application phase: Learning method (cont.) How to detect applicable knowledge If c i ≠ c‘ then CB := CB ∪ {F i } During the learning phase Acceptable is responsible for For all F* =(p*,c*) ∈ CB with sim(p i ,p*) ≥ sim(p i ,p’) do the detection of applicable cases. When trying to apply update CQ for F* a case base (without intent to update it) then the If CQ(F*) is significantly bad then similarity measure is used. CB := CB \ {F*} There are several possibilities to define Acceptable(F) and when CQ(F) is significantly bad. The most simple ones are to simply provide threshold values thresh acc and thresh bad , so that Acceptable(F) iff CQ(F) > thresh acc CQ(F) sign. bad iff CQ(F) < thresh bad Machine Learning J. Denzinger Machine Learning J. Denzinger Application phase: Application phase: How to apply knowledge Detect/deal with misleading knowledge We select the most similar case to a given problem The learning of the case base includes the identification description and as class the class of this case. of bad cases (via the significantly bad evaluation) and this can naturally be continued indefinitely. Machine Learning J. Denzinger Machine Learning J. Denzinger 2

2016-03-15 General questions: General questions: Generalize/detect similarities? Dealing with knowledge from other sources Obviously, the similarity measure is central to the This is not part of this approach, although the similarity success of analogy-based approaches. While rather measure is a potential access point for doing this. general ones like the ones mentioned in 6.1 might work, even more often than in clustering application specific measures are needed. Also, analogy-based reasoning is especially not very interested in generalization! Machine Learning J. Denzinger Machine Learning J. Denzinger (Conceptual) Example (Conceptual) Example (cont.) Problem descriptions contain values for two features CQ((1,1),C) = 1. CB is not changed. (each a number between 1 and 4). We have 4 classes: For ((3,2),D) we have to use ((1,1),C) as case which A,B,C,D. We use Manhattan distance as similarity results in a wrong classification. We add ((3,2),D) to CB measure. thresh acc is set to 0.9 and thresh bad is set to and set CQ((1,1),C) = 2/3 (CQ((3,2),D)=1). 0.5. For ((4,2),D) we have CB acep = {((3,2),D)} and ((3,2),D)‘s The sequence of training examples is classification is correct, so that we do not change CB, ((1,1),C), ((2,2),C), ((3,2),D), ((4,2),D), ((2,3),A), and still CQ((3,2),D)=1. ((3,3),B), ((2,3),A), ((2,2),C) For ((2,3),A) we have CB acep = {((3,2),D)}, which results Since CB is empty, we add ((1,1),C) to it. in a wrong prediction, so that ((2,3),A) is added to CB. CQ((1,1),C) = 1. Therefore we have for ((2,2),C) that CQ((2,3),A) = 1, CQ((3,2),D) = 2/3 and CQ((1,1),C) is CB acep = {((1,1),C)} and use this only element as case, still 2/3. which results in a correct classification and Machine Learning J. Denzinger Machine Learning J. Denzinger (Conceptual) Example (cont.) (Conceptual) Example (cont.) For ((3,3),B) we have to use ((2,3),A) as case which We add ((2,2),C) to CB with CQ((2,2),C)=1 and results in a wrong classification. We add ((2,3),A) to CB change CQ((2,3),A) = 0.5 and CQ((3,2),D) = 1/3, which and set CQ((2,3),A) = 0.5 and CQ((3,3),B)=1. ((3,2),D) results in eliminating ((3,2),D) from CB. is as similar as ((2,3),A) , so that CQ((3,2),D) = 0.5 This leaves us rather far away from the optimal CB = For ((2,3),A) we have CB acep = {((3,3),B)} resulting in the {((2,2),C),((3,2),D),((2,3),A),((3,3),B)}. wrong classification. CB is not updated, since ((2,3),A) is Note that this is not an easy example due to the large already in it. CQ((3,3),B)=0.5, but CQ((2,3),A) = 2/3. overlap (similarity-wise) of the cases. For ((2,2),C) we have CB acep = {}, so that we need a j. Let us assume that j=2. (2,3) and (3,2) have the same similarity to (2,2), so that we need a tiebreaker to decide which is second similar. Assume that this results in (3,2), which leads to the wrong classification. Machine Learning J. Denzinger Machine Learning J. Denzinger 3

Recommend

More recommend