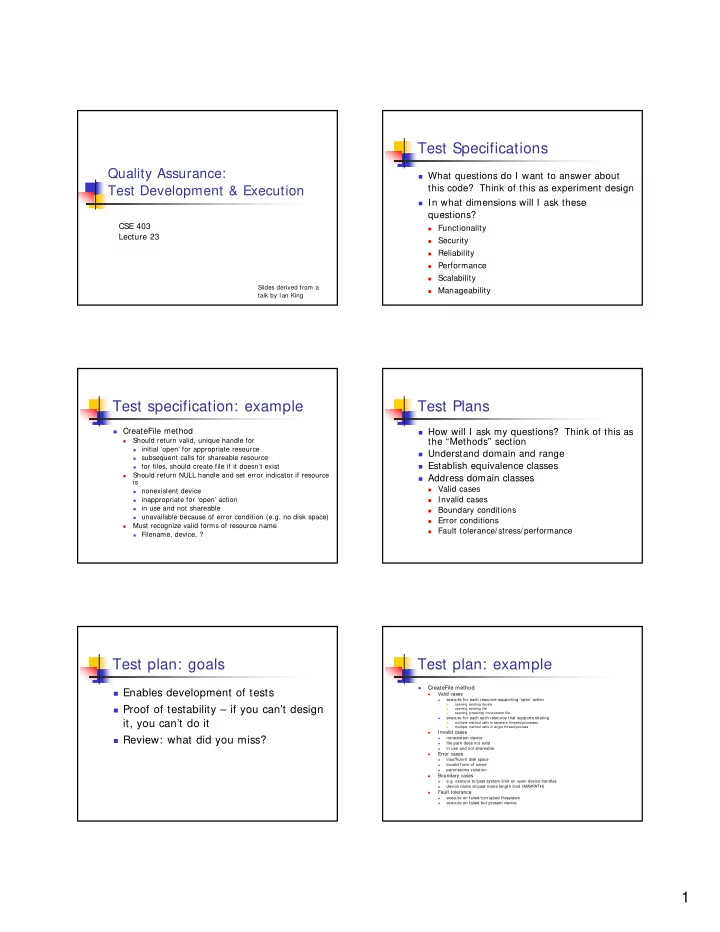

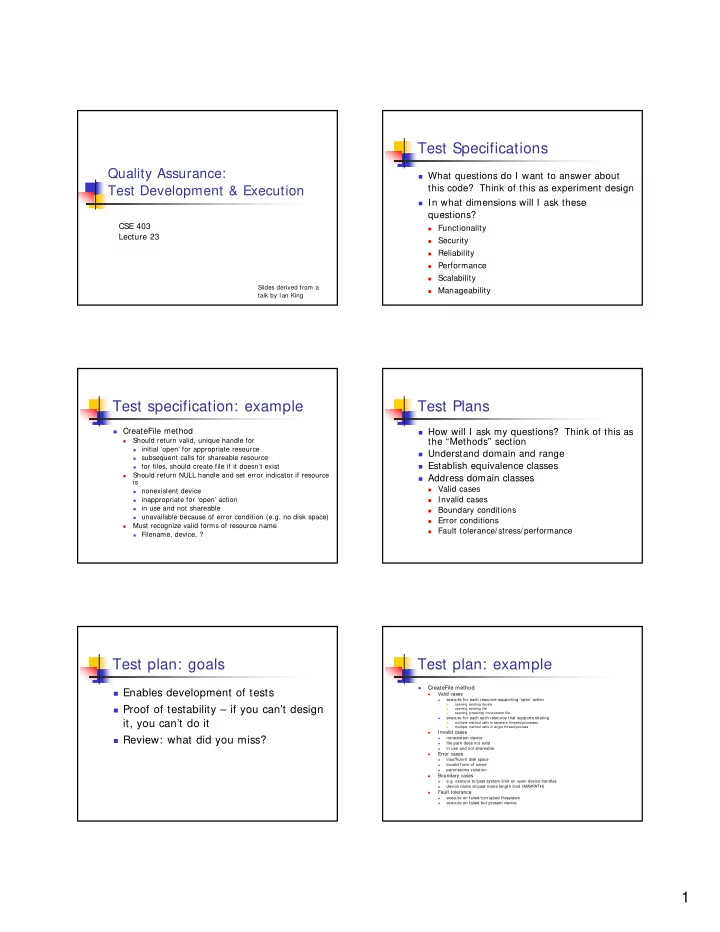

Quality Assurance: Test Development & Execution
CSE 403, Lecture 23
Slides derived from a talk by Ian King

Test Specifications
- What questions do I want to answer about this code? Think of this as experiment design
- In what dimensions will I ask these questions?
  - Functionality
  - Security
  - Reliability
  - Performance
  - Scalability
  - Manageability

Test specification: example
- CreateFile method
  - Should return valid, unique handle for
    - initial ‘open’ for appropriate resource
    - subsequent calls for shareable resource
    - for files, should create file if it doesn’t exist
  - Should return NULL handle and set error indicator if resource is
    - nonexistent device
    - inappropriate for ‘open’ action
    - in use and not shareable
    - unavailable because of error condition (e.g. no disk space)
  - Must recognize valid forms of resource name
    - Filename, device, ?

Test Plans
- How will I ask my questions? Think of this as the “Methods” section
- Understand domain and range
- Establish equivalence classes
- Address domain classes
  - Valid cases
  - Invalid cases
  - Boundary conditions
  - Error conditions
  - Fault tolerance/stress/performance

Test plan: goals
- Enables development of tests
- Proof of testability: if you can’t design it, you can’t do it
- Review: what did you miss?

Test plan: example
- CreateFile method
  - Valid cases
    - execute for each resource supporting ‘open’ action
      - opening existing device
      - opening existing file
      - opening (creating) nonexistent file
    - execute for each such resource that supports sharing
      - multiple method calls in separate threads/processes
      - multiple method calls in single thread/process
  - Invalid cases
    - nonexistent device
    - file path does not exist
    - in use and not shareable
  - Error cases
    - insufficient disk space
    - invalid form of name
    - permissions violation
  - Boundary cases
    - e.g. execute to/past system limit on open device handles
    - device name at/past name length limit (MAXPATH)
  - Fault tolerance
    - execute on failed/corrupted filesystem
    - execute on failed but present device
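As a rough illustration of how a test plan's equivalence classes can turn into runnable tests, the sketch below drives one test per class from a table. It is only a sketch under stated assumptions: `create_file` is a hypothetical stand-in built on Python's `os.open`, not the real CreateFile method, and it returns `None` on failure rather than a NULL handle plus an error indicator. The case names and the 5000-character name used for the boundary case are likewise illustrative.

```python
import os
import tempfile


def create_file(path):
    """Hypothetical stand-in for the CreateFile method under test.

    Returns an integer handle on success, or None on failure.
    """
    try:
        return os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    except OSError:
        return None


def run_plan(root):
    """Run one test per equivalence class; return {case name: passed}."""
    existing = os.path.join(root, "existing.txt")
    with open(existing, "w") as f:
        f.write("data")

    # (class, case name, path, is a valid handle expected?)
    cases = [
        ("valid", "open existing file", existing, True),
        ("valid", "create nonexistent file",
         os.path.join(root, "new.txt"), True),
        ("invalid", "file path does not exist",
         os.path.join(root, "missing", "x.txt"), False),
        ("boundary", "name past length limit",
         os.path.join(root, "n" * 5000), False),
    ]

    results = {}
    for kind, name, path, expect_handle in cases:
        handle = create_file(path)
        results[f"{kind}: {name}"] = (handle is not None) == expect_handle
        if handle is not None:
            os.close(handle)  # do not leak handles between cases
    return results


with tempfile.TemporaryDirectory() as root:
    results = run_plan(root)

for name, passed in results.items():
    print("PASS" if passed else "FAIL", name)
```

Keeping the cases in a table makes the review step easier: a missing class (for example, error cases such as insufficient disk space) shows up as a missing row.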

Performance testing
- Test for performance behavior
  - Does it meet requirements?
    - Customer requirements
    - Definitional requirements (e.g. Ethernet)
- Test for resource utilization
  - Understand resource requirements
- Test performance early
  - Avoid costly redesign to meet performance requirements

Security Testing
- Is data/access safe from those who should not have it?
- Is data/access available to those who should have it?
- How is privilege granted/revoked?
- Is the system safe from unauthorized control?
  - Example: denial of service
- Collateral data that compromises security
  - Example: network topology

Stress testing
- Working stress: sustained operation at or near maximum capability
  - Goal: resource leak detection
- Breaking stress: operation beyond expected maximum capability
  - Goal: understand failure scenario(s)
  - “Failing safe” vs. unrecoverable failure or data loss

Globalization
- Localization
  - UI in the customer’s language
  - German overruns the buffers
  - Japanese tests extended character sets
- Globalization
  - Data in the customer’s language
  - Non-US values ($ vs. Euro, ips vs. cgs)
  - Mars Climate Orbiter: mixed metric and SAE

Test Cases
- Actual “how to” for individual tests
- Expected results
- One level deeper than the Test Plan
- Automated or manual?
- Environmental/platform variables

Test case: example
- CreateFile method
  - Valid cases
    - English
      - open existing disk file with arbitrary name and full path, file permissions allowing access
        - create directory ‘c:\foo’
        - copy file ‘bar’ to directory ‘c:\foo’ from test server; permissions are ‘Everyone: full access’
        - execute CreateFile(‘c:\foo\bar’, etc.)
        - expected: non-null handle returned
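The two kinds of stress testing described above can be sketched in a few lines. This is a minimal illustration, not a real stress tool: `HandleTable` is a hypothetical system under test with a fixed capacity, and the cycle count and capacity are arbitrary. Working stress runs near the maximum for a sustained period and then checks for leaked handles; breaking stress goes past the maximum and checks that the system fails safe and remains usable.

```python
class HandleTable:
    """Hypothetical system under test: a fixed-capacity table of handles."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._open = set()
        self._next = 1

    def open(self):
        """Return a new handle, or None when the table is full."""
        if len(self._open) >= self.capacity:
            return None
        handle = self._next
        self._next += 1
        self._open.add(handle)
        return handle

    def close(self, handle):
        self._open.discard(handle)

    def in_use(self):
        return len(self._open)


def working_stress(table, cycles):
    """Sustained operation near maximum capability; return leaked count."""
    near_max = table.capacity - 1
    for _ in range(cycles):
        handles = [table.open() for _ in range(near_max)]
        assert all(h is not None for h in handles)
        for h in handles:
            table.close(h)
    return table.in_use()


def breaking_stress(table):
    """Operate past the maximum; return (refused count, usable afterwards)."""
    handles = [table.open() for _ in range(table.capacity + 10)]
    refused = sum(1 for h in handles if h is None)
    for h in handles:
        if h is not None:
            table.close(h)
    recovered = table.open() is not None
    return refused, recovered


leaked = working_stress(HandleTable(capacity=64), cycles=1000)
refused, recovered = breaking_stress(HandleTable(capacity=64))
print("leaked:", leaked, "refused:", refused, "recovered:", recovered)
```

Against a real system the leak check would read an operating-system counter (open handles, memory, sockets) instead of `in_use()`, but the shape of the test stays the same.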

Test Harness/Architecture
- Test automation is nearly always worth the time and expense
- How to automate?
  - Commercial harnesses
  - Roll-your-own
  - Record/replay tools
  - Scripted harness
- Logging/Evaluation

Test Schedule
- Phases of testing
  - Unit testing (may be done by developers)
  - Component testing
  - Integration testing
  - System testing
- Dependencies: when are features ready?
  - Use of stubs and harnesses
- When are tests ready?
  - Automation requires lead time
- The long pole: how long does a test pass take?

Where The Wild Things Are: Challenges and Pitfalls
- “Everyone knows”: hallway design
- “We won’t know until we get there”
- “I don’t have time to write docs”
- Feature creep/design “bugs”
- Dependency on external groups

Test Schedule
- Phases of testing
  - Unit testing (may be done by developers)
  - Component testing
  - Integration testing
  - System testing
  - Usability testing

What makes a good tester?
- Analytical
  - Ask the right questions
  - Develop experiments to get answers
- Methodical
  - Follow experimental procedures precisely
  - Document observed behaviors, their precursors and environment
- Brutally honest
  - You can’t argue with the data

How do test engineers fail?
- Desire to “make it work”
  - Impartial judge, not “handyman”
- Trust in opinion or expertise
  - Trust no one: the truth (data) is in there
- Failure to follow defined test procedure
  - How did we get here?
- Failure to document the data
- Failure to believe the data
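One way to read "use of stubs" in the schedule discussion: when a feature depends on a component that is not ready, a stub with canned responses lets the tests be written and run ahead of time. The sketch below assumes a hypothetical storage component and a hypothetical `word_count` feature; neither comes from the slides.

```python
class StorageStub:
    """Stub standing in for a storage component that is not ready yet."""

    def __init__(self, canned):
        self.canned = dict(canned)  # canned responses keyed by document name
        self.calls = []             # record of calls, for later inspection

    def read(self, name):
        self.calls.append(name)
        if name not in self.canned:
            raise KeyError(name)
        return self.canned[name]


def word_count(storage, name):
    """Hypothetical feature under test: count words in a stored document."""
    return len(storage.read(name).split())


stub = StorageStub({"doc1": "the quick brown fox"})
count = word_count(stub, "doc1")
print(count, stub.calls)
```

When the real component arrives, the same test can be rerun against it, which is one reason to keep the stub's interface identical to the planned one.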

Testability
- Can all of the feature’s code paths be exercised through APIs, events/messages, etc.?
  - Unreachable internal states
- Can the feature’s behavior be programmatically verified?
- Is the feature too complex to test?
  - Consider configurations, locales, etc.
- Can the feature be tested timely with available resources?
  - Long test latency = late discovery of faults

What color is your box?
- Black box testing
  - Treats the SUT as atomic
  - Study the gazinta’s and gozouta’s
  - Best simulates the customer experience
- White box testing
  - Examine the SUT internals
  - Trace data flow directly (in the debugger)
  - Bug report contains more detail on source of defect
  - May obscure timing problems (race conditions)

Designing Good Tests
- Well-defined inputs and outputs
  - Consider environment as inputs
  - Consider ‘side effects’ as outputs
- Clearly defined initial conditions
- Clearly described expected behavior
- Specific: small granularity provides greater precision in analysis
- Test must be at least as verifiable as SUT

Types of Test Cases
- Valid cases
  - What should work?
- Invalid cases
  - Ariane V: data conversion error (http://www.cs.york.ac.uk/hise/safety-critical-archive/1996/0055.html)
- Boundary conditions
  - Fails in September?
  - Null input
- Error conditions
  - Distinct from invalid input

Manual Testing
- Definition: test that requires direct human intervention with SUT
- Necessary when:
  - GUI is present
  - Behavior is premised on physical activity (e.g. card insertion)
- Advisable when:
  - Automation is more complex than SUT
  - SUT is changing rapidly (early development)

Automated Testing
- Good: replaces manual testing
- Better: performs tests difficult for manual testing (e.g. timing related issues)
- Best: enables other types of testing (regression, perf, stress, lifetime)
- Risks:
  - Time investment to write automated tests
  - Tests may need to change when features change
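The "Fails in September?" boundary condition and the advice to treat the environment as an input can be shown together. The sketch below is one plausible reading, not something stated in the slides: it assumes a hypothetical `month_field` feature that writes the month name into an 8-character column, so the longest English month name overflows. Passing the date in explicitly, rather than reading the system clock, lets the test cover every month on any day of the year.

```python
import datetime

MONTHS = (
    "January", "February", "March", "April", "May", "June", "July",
    "August", "September", "October", "November", "December",
)
FIELD_WIDTH = 8  # hypothetical fixed-width report column


def month_field(today):
    """Hypothetical feature under test: month name padded to a fixed width."""
    name = MONTHS[today.month - 1]
    if len(name) > FIELD_WIDTH:
        raise ValueError(f"{name!r} does not fit in {FIELD_WIDTH} characters")
    return name.ljust(FIELD_WIDTH)


def failing_months(year=2024):
    """Run the feature with every month as explicit input; return failures."""
    failures = []
    for month in range(1, 13):
        try:
            field = month_field(datetime.date(year, month, 1))
            assert len(field) == FIELD_WIDTH
        except ValueError:
            failures.append(MONTHS[month - 1])
    return failures


print(failing_months())  # ['September']
```

A test that called `datetime.date.today()` inside the feature would pass for eleven months of the year, which is exactly the kind of hidden environmental input the slide warns about.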

Types of Automation Tools: Record/Playback
- Record “proper” run through test procedure (inputs and outputs)
- Play back inputs, compare outputs with recorded values
- Advantage: requires little expertise
- Disadvantage: little flexibility - easily invalidated by product change
- Disadvantage: update requires manual involvement

Types of Automation Tools: Scripted Record/Playback
- Fundamentally same as simple record/playback
- Record of inputs/outputs during manual test input is converted to script
- Advantage: existing tests can be maintained as programs
- Disadvantage: requires more expertise
- Disadvantage: fundamental changes can ripple through MANY scripts

Types of Automation Tools: Script Harness
- Tests are programmed as modules, then run by harness
- Harness provides control and reporting
- Advantage: tests can be very flexible
- Disadvantage: requires considerable expertise and abstract process

Test Corpus
- Body of data that generates known results
- Can be obtained from
  - Real world: demonstrates customer experience
  - Test generator: more deterministic
- Caveats
  - Bias in data generation
  - Don’t share test corpus with developers!

Instrumented Code: Test Hooks
- Code that enables non-invasive testing
- Code remains in shipping product
- May be enabled through
  - Special API
  - Special argument or argument value
  - Registry value or environment variable
- Example: Windows CE IOCTLs
- Risk: silly customers….

Instrumented Code: Diagnostic Compilers
- Creates ‘instrumented’ SUT for testing
  - Profiling: where does the time go?
  - Code coverage: what code was touched?
    - Really evaluates testing, NOT code quality
  - Syntax/coding style: discover bad coding
    - lint, the original syntax checker
  - Complexity
    - Very esoteric, often disputed (religiously)
    - Example: function point counting
