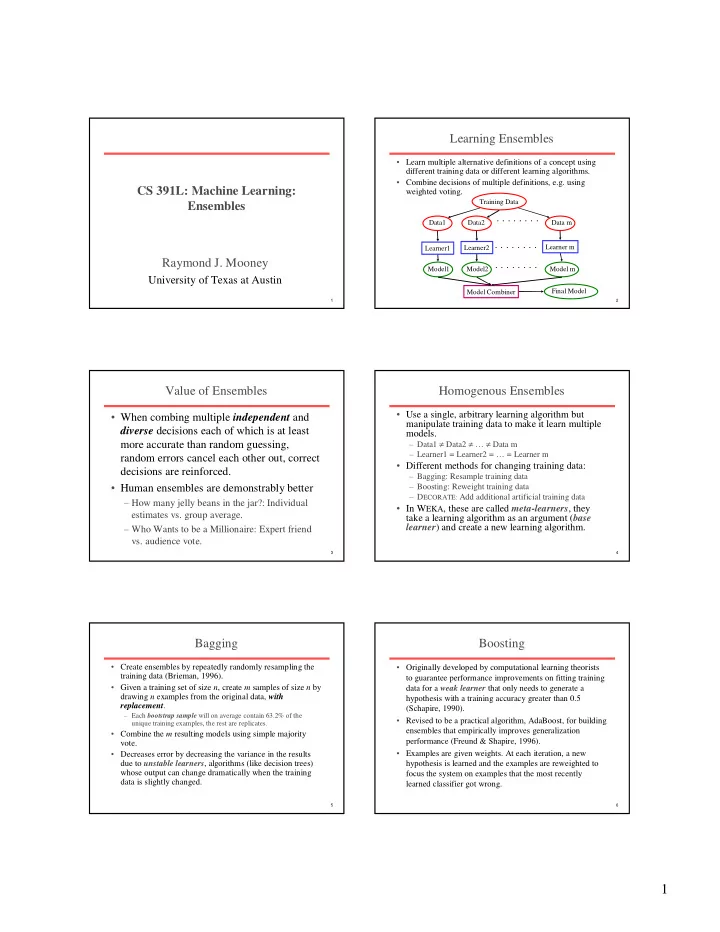

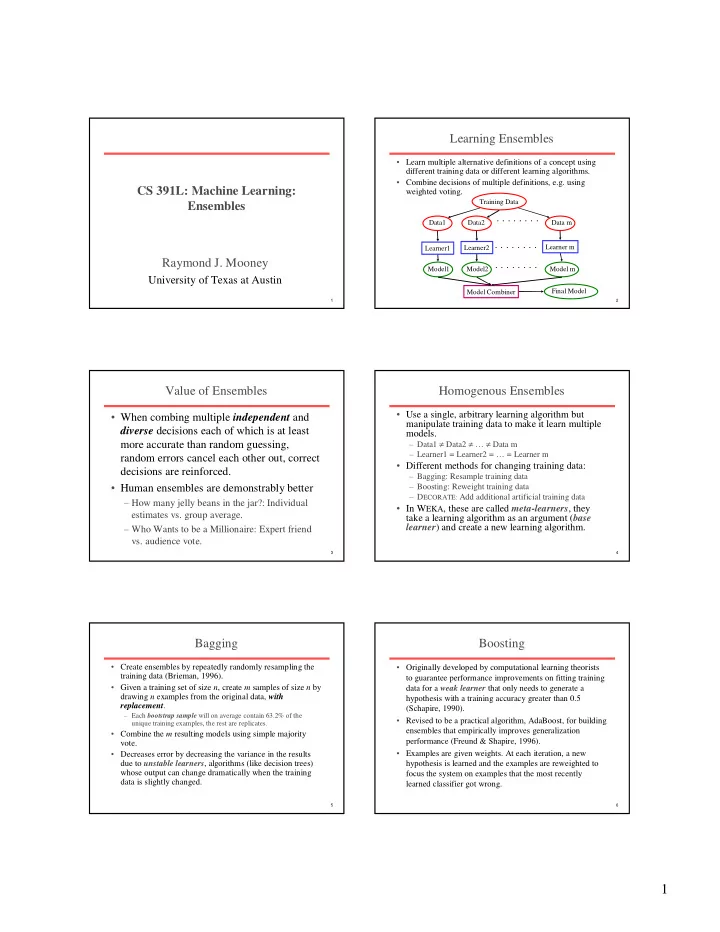

Learning Ensembles • Learn multiple alternative definitions of a concept using different training data or different learning algorithms. • Combine decisions of multiple definitions, e.g. using CS 391L: Machine Learning: weighted voting. Ensembles Training Data ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ Data1 Data2 Data m ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ Learner2 Learner m Learner1 Raymond J. Mooney ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ ⋅ Model1 Model2 Model m University of Texas at Austin Final Model Model Combiner 1 2 Value of Ensembles Homogenous Ensembles • When combing multiple independent and • Use a single, arbitrary learning algorithm but manipulate training data to make it learn multiple diverse decisions each of which is at least models. – Data1 ≠ Data2 ≠ … ≠ Data m more accurate than random guessing, – Learner1 = Learner2 = … = Learner m random errors cancel each other out, correct • Different methods for changing training data: decisions are reinforced. – Bagging: Resample training data • Human ensembles are demonstrably better – Boosting: Reweight training data – D ECORATE: Add additional artificial training data – How many jelly beans in the jar?: Individual • In W EKA , these are called meta-learners , they estimates vs. group average. take a learning algorithm as an argument ( base learner ) and create a new learning algorithm. – Who Wants to be a Millionaire: Expert friend vs. audience vote. 3 4 Bagging Boosting • Create ensembles by repeatedly randomly resampling the • Originally developed by computational learning theorists training data (Brieman, 1996). to guarantee performance improvements on fitting training • Given a training set of size n , create m samples of size n by data for a weak learner that only needs to generate a drawing n examples from the original data, with hypothesis with a training accuracy greater than 0.5 replacement . (Schapire, 1990). – Each bootstrap sample will on average contain 63.2% of the • Revised to be a practical algorithm, AdaBoost, for building unique training examples, the rest are replicates. ensembles that empirically improves generalization • Combine the m resulting models using simple majority performance (Freund & Shapire, 1996). vote. • Decreases error by decreasing the variance in the results • Examples are given weights. At each iteration, a new due to unstable learners , algorithms (like decision trees) hypothesis is learned and the examples are reweighted to whose output can change dramatically when the training focus the system on examples that the most recently data is slightly changed. learned classifier got wrong. 5 6 1

Boosting: Basic Algorithm AdaBoost Pseudocode TrainAdaBoost(D, BaseLearn) • General Loop: For each example d i in D let its weight w i =1/| D | Let H be an empty set of hypotheses Set all examples to have equal uniform weights. For t from 1 to T do: For t from 1 to T do: Learn a hypothesis, h t , from the weighted examples: h t =BaseLearn( D ) Learn a hypothesis, h t , from the weighted examples Add h t to H Decrease the weights of examples h t classifies correctly Calculate the error, ε t , of the hypothesis h t as the total sum weight of the • Base (weak) learner must focus on correctly examples that it classifies incorrectly. If ε t > 0.5 then exit loop, else continue. classifying the most highly weighted examples Let β t = ε t / (1 – ε t ) while strongly avoiding over-fitting. Multiply the weights of the examples that h t classifies correctly by β t • During testing, each of the T hypotheses get a Rescale the weights of all of the examples so the total sum weight remains 1. Return H weighted vote proportional to their accuracy on TestAdaBoost( ex , H ) the training data. Let each hypothesis, h t , in H vote for ex ’s classification with weight log(1/ β t ) Return the class with the highest weighted vote total. 7 8 Experimental Results on Ensembles Learning with Weighted Examples (Freund & Schapire, 1996; Quinlan, 1996) • Generic approach is to replicate examples in the • Ensembles have been used to improve training set proportional to their weights (e.g. 10 generalization accuracy on a wide variety of replicates of an example with a weight of 0.01 and problems. 100 for one with weight 0.1). • On average, Boosting provides a larger increase in • Most algorithms can be enhanced to efficiently accuracy than Bagging. incorporate weights directly in the learning • Boosting on rare occasions can degrade accuracy. algorithm so that the effect is the same (e.g. implement the WeightedInstancesHandler • Bagging more consistently provides a modest interface in W EKA ). improvement. • For decision trees, for calculating information • Boosting is particularly subject to over-fitting gain, when counting example i , simply increment when there is significant noise in the training data. the corresponding count by w i rather than by 1. 9 10 D ECORATE Overview of D ECORATE (Melville & Mooney, 2003) • Change training data by adding new Current Ensemble Training Examples artificial training examples that encourage + - C1 diversity in the resulting ensemble. - + + • Improves accuracy when the training set is Base Learner small, and therefore resampling and + + - reweighting the training set has limited + - ability to generate diverse alternative Artificial Examples hypotheses. 11 12 2

Overview of D ECORATE Overview of D ECORATE Current Ensemble Current Ensemble Training Examples Training Examples + + - - C1 C1 - - + + + + Base Learner C2 Base Learner C2 + - - - - + + - + C3 - - + + + - Artificial Examples Artificial Examples 13 14 Active-D ECORATE Ensembles and Active Learning Unlabeled Examples Utility = 0.1 • Ensembles can be used to actively select good new training examples. Current Ensemble • Select the unlabeled example that causes the most disagreement amongst the members of Training Examples + C1 + the ensemble. - + C2 - D ECORATE • Applicable to any ensemble method: + C3 + - C4 + – QueryByBagging – QueryByBoosting – ActiveD ECORATE 16 15 16 Active-D ECORATE Issues in Ensembles Unlabeled Examples Utility = 0.1 0.9 • Parallelism in Ensembles: Bagging is easily 0.3 0.2 parallelized, Boosting is not. 0.5 Current Ensemble • Variants of Boosting to handle noisy data. • How “weak” should a base-learner for Boosting Training Examples + C1 + be? - C2 + - • What is the theoretical explanation of boosting’s D ECORATE + C3 - ability to improve generalization? - + C4 - • Exactly how does the diversity of ensembles affect Acquire Label their generalization performance. • Combining Boosting and Bagging. 17 18 17 3

Recommend

More recommend