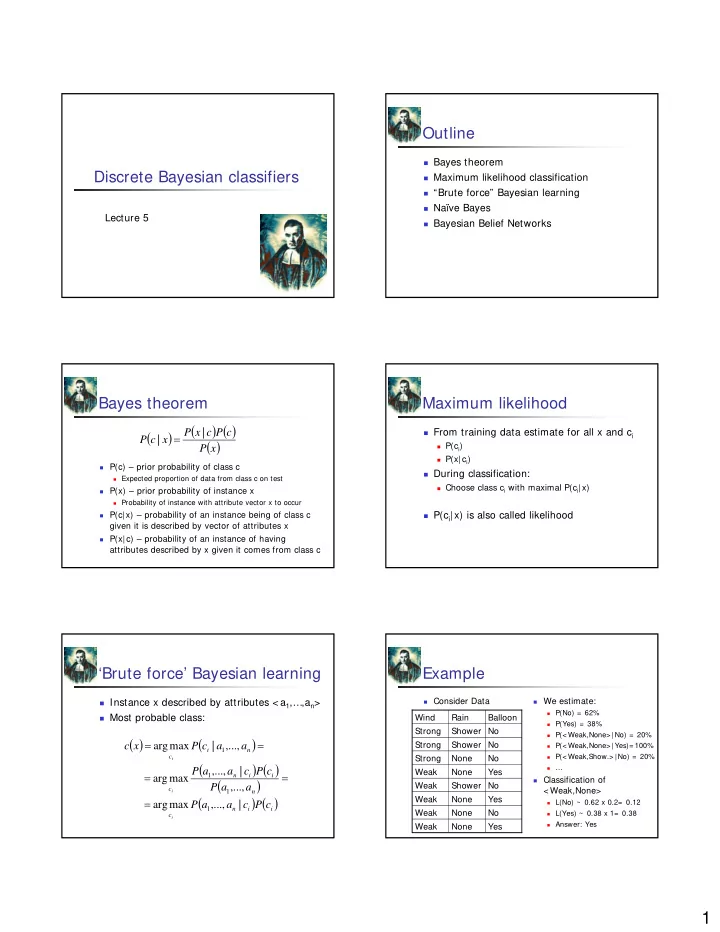

Lecture 5

Outline
- Bayes theorem
- Discrete Bayesian classifiers
  - Maximum likelihood classification
  - "Brute force" Bayesian learning
  - Naïve Bayes
- Bayesian Belief Networks

Bayes theorem

$$P(c_i \mid x) = \frac{P(x \mid c_i)\,P(c_i)}{P(x)}$$

- $P(c)$ – prior probability of class $c$: the expected proportion of data from class $c$ on test
- $P(x)$ – prior probability of instance $x$: the probability that an instance with attribute vector $x$ occurs
- $P(c \mid x)$ – probability of an instance being of class $c$, given that it is described by the vector of attributes $x$
- $P(x \mid c)$ – probability of an instance having the attributes described by $x$, given that it comes from class $c$

Maximum likelihood
- From the training data, estimate $P(c_i)$ and $P(x \mid c_i)$ for all $x$ and $c_i$
- During classification, choose the class $c_i$ with maximal $P(c_i \mid x)$
- Here $P(c_i \mid x)$ is also called the likelihood (in standard terminology it is the posterior probability, and $P(x \mid c_i)$ is the likelihood)

"Brute force" Bayesian learning
- An instance $x$ is described by attributes $\langle a_1, \ldots, a_n \rangle$
- Most probable class (the denominator $P(a_1, \ldots, a_n)$ is the same for every class, so it can be dropped):

$$c(x) = \arg\max_{c_i} P(c_i \mid a_1, \ldots, a_n) = \arg\max_{c_i} \frac{P(a_1, \ldots, a_n \mid c_i)\,P(c_i)}{P(a_1, \ldots, a_n)} = \arg\max_{c_i} P(a_1, \ldots, a_n \mid c_i)\,P(c_i)$$

Example

Consider the data:

| Wind | Rain | Balloon |
|---|---|---|
| Strong | Shower | No |
| Strong | Shower | No |
| Strong | None | No |
| Weak | None | Yes |
| Weak | Shower | No |
| Weak | None | Yes |
| Weak | None | No |
| Weak | None | Yes |

- We estimate:
  - $P(\text{No}) = 5/8 = 62.5\%$
  - $P(\text{Yes}) = 3/8 = 37.5\%$
  - $P(\langle \text{Weak}, \text{None} \rangle \mid \text{No}) = 20\%$
  - $P(\langle \text{Weak}, \text{None} \rangle \mid \text{Yes}) = 100\%$
  - $P(\langle \text{Weak}, \text{Shower} \rangle \mid \text{No}) = 20\%$
  - …
- Classification of $\langle \text{Weak}, \text{None} \rangle$:
  - $L(\text{No}) \propto 0.625 \times 0.2 = 0.125$
  - $L(\text{Yes}) \propto 0.375 \times 1 = 0.375$
  - Answer: Yes
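The brute-force computation on the Example slide can be sketched in Python as follows. This is a minimal illustration, not the lecture's own code: the function names (`brute_force_scores`, `posterior`, `classify`) are hypothetical, and each probability is estimated by plain counting of whole attribute vectors, as on the slide.

```python
from collections import Counter

# Training data from the "Example" slide: (Wind, Rain) -> Balloon
DATA = [
    (("Strong", "Shower"), "No"),
    (("Strong", "Shower"), "No"),
    (("Strong", "None"), "No"),
    (("Weak", "None"), "Yes"),
    (("Weak", "Shower"), "No"),
    (("Weak", "None"), "Yes"),
    (("Weak", "None"), "No"),
    (("Weak", "None"), "Yes"),
]


def brute_force_scores(data, x):
    """Return P(x | c) * P(c) for each class c, counting whole attribute vectors."""
    class_counts = Counter(c for _, c in data)            # n_c
    vector_counts = Counter((a, c) for a, c in data)      # occurrences of each (vector, class)
    scores = {}
    for c, n_c in class_counts.items():
        prior = n_c / len(data)                           # P(c)
        likelihood = vector_counts[(x, c)] / n_c          # P(<a_1,...,a_n> | c)
        scores[c] = likelihood * prior
    return scores


def posterior(scores):
    """Bayes theorem: divide by P(x) = sum over classes of P(x | c) P(c)."""
    p_x = sum(scores.values())
    return {c: s / p_x for c, s in scores.items()}


def classify(data, x):
    scores = brute_force_scores(data, x)
    return max(scores, key=scores.get)                    # arg max over classes


scores = brute_force_scores(DATA, ("Weak", "None"))
print(scores)                            # {'No': 0.125, 'Yes': 0.375}
print(posterior(scores))                 # {'No': 0.25, 'Yes': 0.75}
print(classify(DATA, ("Weak", "None")))  # Yes
```

Because every estimate counts whole vectors, an attribute vector never seen in training gets a score of 0 for every class (and `posterior` would divide by zero), which is the generalization problem raised on the following slide.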

Problem with "brute force"
- It cannot generalize to unseen examples $x_{new}$, because it has no estimates of $P(c_i \mid x_{new})$
- This makes it useless in practice
- Brute force does not have any bias
- So, to make learning possible, we have to introduce a bias

Naïve Bayes
- Brute force: $c(x) = \arg\max_{c_i} P(a_1, \ldots, a_n \mid c_i)\,P(c_i)$
- Naïve Bayes assumes that attributes are independent for instances from a given class: $P(a_1, \ldots, a_n \mid c_i) = \prod_j P(a_j \mid c_i)$
- Which gives: $c(x) = \arg\max_{c_i} P(c_i) \prod_j P(a_j \mid c_i)$
- The assumption of independence is often violated, but Naïve Bayes works surprisingly well anyway

Example

Recall the "advanced ballooning" set:

| Sky | Temper. | Rain | Wind | Fly Balloon |
|---|---|---|---|---|
| Sunny | Cold | None | Strong | Yes |
| Cloudy | Cold | Shower | Weak | Yes |
| Cloudy | Cold | Shower | Strong | No |
| Sunny | Hot | Shower | Strong | No |

- Classify: $x = \langle \text{Cloudy}, \text{Hot}, \text{Shower}, \text{Strong} \rangle$
  - $P(Y \mid x) \propto P(Y)\,P(Cl \mid Y)\,P(H \mid Y)\,P(Sh \mid Y)\,P(St \mid Y) = 0.5 \times 0.5 \times 0 \times 0.5 \times 0.5 = 0$
  - $P(N \mid x) \propto 0.5 \times 0.5 \times 0.5 \times 1 \times 1 = 0.125$

Missing estimates
- What if none of the training instances of class $c_i$ has attribute value $a_j$? Then $P(a_j \mid c_i) = 0$, and $P(a_1, \ldots, a_n \mid c_i) = \prod_j P(a_j \mid c_i) = 0$, no matter what the values of the other attributes are
- For example, for $x = \langle \text{Sunny}, \text{Hot}, \text{None}, \text{Weak} \rangle$: $P(\text{Hot} \mid \text{Yes}) = 0$, hence $P(\text{Yes} \mid x) = 0$

Solution
- Let $m$ denote the number of possible values of attribute $a_j$
- For each class, consider adding $m$ "virtual examples", one with each value of $a_j$
- The Bayesian estimate for $P(a_j \mid c_i)$ becomes $P(a_j \mid c_i) = \dfrac{n_{c_i a_j} + 1}{n_{c_i} + m}$, where:
  - $n_{c_i}$ – number of training examples with class $c_i$
  - $n_{c_i a_j}$ – number of training examples with class $c_i$ and attribute value $a_j$

Learning to classify text
- For example: is an e-mail spam?
- Represent each document by the words it contains (a "bag of words": repeated words are counted)
- Independence assumptions:
  - The order of words does not matter
  - Co-occurrences of words do not matter
- Learning: from the training documents, estimate
  - $P(c_i)$ for every class $c_i$
  - $P(w \mid c_i)$ for every word $w$ and class $c_i$
- Classification: maximum likelihood
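A minimal Python sketch of Naïve Bayes on the "advanced ballooning" data, with and without the "virtual examples" estimate from the Solution slide. The names `train` and `nb_scores` are hypothetical, and m is assumed to be the number of values of each attribute observed in training (here 2 for every attribute), which matches the slide only when every possible value appears in the training set.

```python
from collections import Counter, defaultdict

# "Advanced ballooning" data: (Sky, Temper., Rain, Wind) -> Fly Balloon
DATA = [
    (("Sunny", "Cold", "None", "Strong"), "Yes"),
    (("Cloudy", "Cold", "Shower", "Weak"), "Yes"),
    (("Cloudy", "Cold", "Shower", "Strong"), "No"),
    (("Sunny", "Hot", "Shower", "Strong"), "No"),
]


def train(data):
    """Collect the counts n_c and n_{c,a_j} that the estimates need."""
    n_c = Counter(c for _, c in data)
    n_c_aj = defaultdict(Counter)   # (attribute index j, class c) -> counts of each value
    values = defaultdict(set)       # attribute index j -> values seen in training
    for attrs, c in data:
        for j, a in enumerate(attrs):
            n_c_aj[(j, c)][a] += 1
            values[j].add(a)
    return n_c, n_c_aj, values


def nb_scores(model, x, smooth=False):
    """P(c) * prod_j P(a_j | c) for every class c.

    smooth=False: P(a_j | c) = n_{c,a_j} / n_c (can be exactly 0).
    smooth=True:  P(a_j | c) = (n_{c,a_j} + 1) / (n_c + m), the "virtual examples" estimate.
    """
    n_c, n_c_aj, values = model
    total = sum(n_c.values())
    scores = {}
    for c, count in n_c.items():
        s = count / total                   # P(c)
        for j, a in enumerate(x):
            n = n_c_aj[(j, c)][a]           # Counter returns 0 for unseen values
            if smooth:
                m = len(values[j])          # assumption: m = number of values seen in training
                s *= (n + 1) / (count + m)
            else:
                s *= n / count
        scores[c] = s
    return scores


model = train(DATA)
x = ("Cloudy", "Hot", "Shower", "Strong")
print(nb_scores(model, x))                # {'Yes': 0.0, 'No': 0.125}
print(nb_scores(model, x, smooth=True))   # {'Yes': 0.015625, 'No': 0.0703125}

x2 = ("Sunny", "Hot", "None", "Weak")
print(nb_scores(model, x2))               # {'Yes': 0.0, 'No': 0.0}
print(nb_scores(model, x2, smooth=True))  # {'Yes': 0.015625, 'No': 0.0078125}
```

The unsmoothed scores reproduce the Example slide (0 for Yes, 0.125 for No). For the Missing estimates query, both classes get a score of exactly 0 under this data, so the arg max carries no information; with the $+1$ / $+m$ estimate every factor is positive and the comparison becomes meaningful again.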

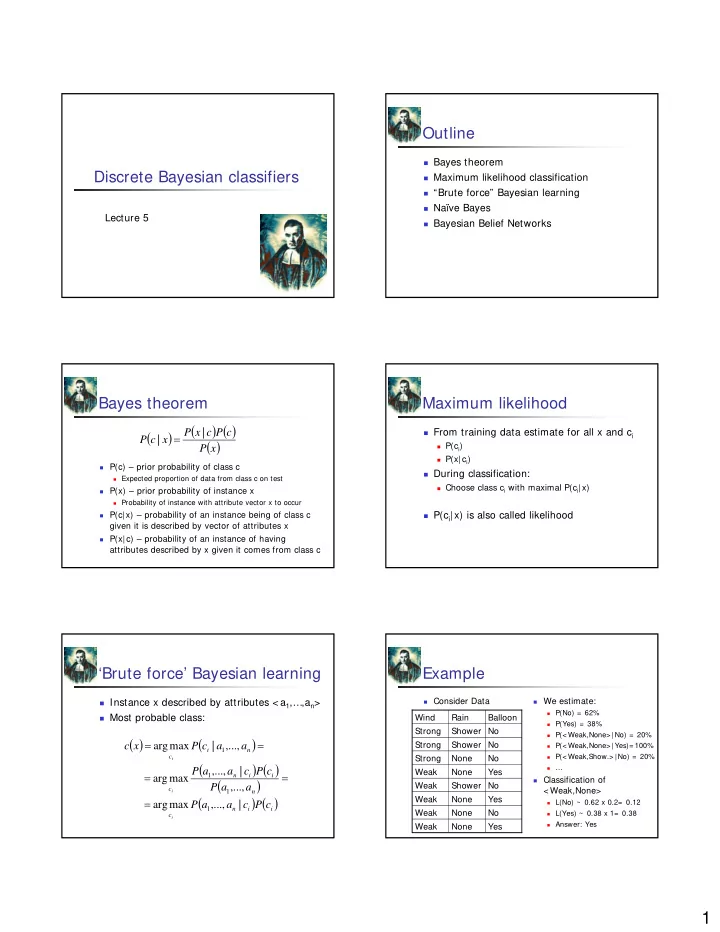

Learning in detail
- Vocabulary = all distinct words in the training text
- For each class $c_i$:
  - $P(c_i) = \dfrac{\text{number of documents of class } c_i}{\text{total number of documents}}$
  - $Text_{c_i}$ = concatenated documents of class $c_i$
  - $n_{c_i}$ = total number of words in $Text_{c_i}$ (duplicates counted multiple times)
  - For each word $w_j$ in Vocabulary:
    - $n_{c_i w_j}$ = number of times word $w_j$ occurs in $Text_{c_i}$
    - $P(w_j \mid c_i) = \dfrac{n_{c_i w_j} + 1}{n_{c_i} + |Vocabulary|}$

Classification in detail
- Index all words in the document to classify by $j$, i.e. denote the $j$-th word in the document by $w_j$
- Classify: $c(\text{document}) = \arg\max_{c_i} P(c_i) \prod_j P(w_j \mid c_i)$
- In practice the $P(w_j \mid c_i)$ are small, so their product is very close to 0; since log is monotonic, it is better to use:

$$c(\text{document}) = \arg\max_{c_i} \log\Big[P(c_i) \prod_j P(w_j \mid c_i)\Big] = \arg\max_{c_i} \Big[\log P(c_i) + \sum_j \log P(w_j \mid c_i)\Big]$$

Pre-processing
- Allows adding background knowledge
- May dramatically increase accuracy
- Sample techniques:
  - Lemmatisation – converts words to their basic form
  - Stop-list – removes the 100 most frequent words

Understanding Naïve Bayes
- Although Naïve Bayes is considered to be subsymbolic, the estimated probabilities may give insight into the classification process
- For example, in spam filtering, the words with the highest $P(w_j \mid \text{spam})$ relative to $P(w_j \mid \text{not spam})$ are the words whose presence most strongly predicts that an e-mail is spam

Bayesian Belief Networks
- The Naïve Bayes assumption of conditional independence of attributes is too restrictive for some problems
- But some assumptions need to be made to allow generalization
- Bayesian Belief Networks assume conditional independence among subsets of attributes
- This allows combining prior knowledge about (in)dependencies among attributes

Conditional independence
- $X$ is conditionally independent of $Y$ given $Z$ if $\forall x, y, z: P(X = x \mid Y = y, Z = z) = P(X = x \mid Z = z)$
- Usually written: $P(X \mid Y, Z) = P(X \mid Z)$
- Example: $P(Thunder \mid Rain, Lightning) = P(Thunder \mid Lightning)$
- Used by Naïve Bayes: $P(A_1, A_2 \mid C) = P(A_1 \mid A_2, C)\,P(A_2 \mid C) = P(A_1 \mid C)\,P(A_2 \mid C)$
  - The first equality is always true; the second is only true if $A_1$ and $A_2$ are conditionally independent given $C$
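The "Learning in detail" and "Classification in detail" recipes can be sketched as a small text classifier in Python. This is an illustration under stated assumptions: the corpus is a hypothetical toy example, documents are already tokenised into word lists, and words that never occur in the training vocabulary are simply skipped at classification time (the slides do not say how to treat them). The names `learn` and `classify` are likewise hypothetical.

```python
import math
from collections import Counter


def learn(docs):
    """docs: list of (words, class). Follows the 'Learning in detail' recipe."""
    vocabulary = {w for words, _ in docs for w in words}
    prior, cond = {}, {}
    for c in sorted({cls for _, cls in docs}):
        docs_c = [words for words, cls in docs if cls == c]
        prior[c] = len(docs_c) / len(docs)                  # P(c_i)
        text_c = [w for words in docs_c for w in words]     # Text_ci, duplicates kept
        n_c = len(text_c)                                   # n_ci
        counts = Counter(text_c)                            # n_ciwj for each word
        cond[c] = {w: (counts[w] + 1) / (n_c + len(vocabulary)) for w in vocabulary}
    return prior, cond, vocabulary


def classify(words, prior, cond, vocabulary):
    """arg max_c [log P(c) + sum_j log P(w_j | c)].

    Assumption: words not in the training vocabulary are skipped.
    """
    def log_score(c):
        return math.log(prior[c]) + sum(math.log(cond[c][w]) for w in words if w in vocabulary)
    return max(prior, key=log_score)


# Hypothetical toy corpus, for illustration only.
TRAIN = [
    ("win money now".split(), "spam"),
    ("cheap money offer".split(), "spam"),
    ("meeting agenda for monday".split(), "ham"),
    ("lunch meeting today".split(), "ham"),
]
prior, cond, vocabulary = learn(TRAIN)
print(classify("money offer today".split(), prior, cond, vocabulary))     # spam
print(classify("lunch meeting monday".split(), prior, cond, vocabulary))  # ham
```

Summing logarithms instead of multiplying probabilities avoids underflow: in Python `0.01 ** 400` evaluates to `0.0`, whereas `400 * math.log(0.01)` is about -1842 and compares without trouble. Because log is monotonic, the arg max is unchanged.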

Bayesian Belief Network

Network structure: Flu → feVer, Flu → Headache, Flu → Weakness, feVer → Weakness

- Connections describe dependence and causality
- Each node is conditionally independent of its nondescendants, given its immediate predecessors (parents)
- Examples:
  - feVer and Headache are independent given Flu
  - feVer and Weakness are not independent given Flu

Learning Bayesian Network
- Probabilities of attribute values given their parents can be estimated from the training set

$P(F) = 0.1$, $P(\neg F) = 0.9$

| Weakness | F, V | ¬F, V | F, ¬V | ¬F, ¬V |
|---|---|---|---|---|
| W | 0.9 | 0.8 | 0.5 | 0.05 |
| ¬W | 0.1 | 0.2 | 0.5 | 0.95 |

| feVer | F | ¬F |
|---|---|---|
| V | 0.8 | 0.1 |
| ¬V | 0.2 | 0.9 |

| Headache | F | ¬F |
|---|---|---|
| H | 0.6 | 0.1 |
| ¬H | 0.4 | 0.9 |

Inference
- During Bayesian classification we compute: $c(x) = \arg\max_{c_i} P(a_1, \ldots, a_n \mid c_i)\,P(c_i) = \arg\max_{c_i} P(a_1, \ldots, a_n, c_i)$
- In general, in a Bayesian network with nodes $Y_i$: $P(y_1, \ldots, y_n) = \prod_{i=1}^{n} P(y_i \mid Parents(Y_i))$
- Thus, when the class node has no parents: $P(a_1, \ldots, a_n, c) = P(c) \prod_{j=1}^{n} P(a_j \mid Parents(A_j))$
- Example: classify the patient $W, V, \neg H$:
  - $P(W, V, \neg H, F) = P(W \mid V, F)\,P(V \mid F)\,P(\neg H \mid F)\,P(F) = 0.9 \times 0.8 \times 0.4 \times 0.1 = 0.0288$

Naïve Bayes network

Network structure: Flu → Sore throat, Flu → feVer, Flu → Headache

- In the case of this network: $P(a_1, \ldots, a_n, c) = P(c) \prod_{j=1}^{n} P(a_j \mid c)$

Extensions to Bayesian nets
- Networks with hidden states
- Learning the structure of the network from data

Summary
- Inductive bias of Naïve Bayes: attributes are independent
  - Although this assumption is often violated, it provides a very efficient tool that is often used, e.g. for spam filtering
- Applicable to data:
  - with many attributes (possibly missing)
  - which take discrete values (e.g. words)
- Bayesian belief networks:
  - Allow prior knowledge about dependencies
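The inference example can be checked numerically with a short Python sketch that builds the joint distribution of the flu network from its conditional probability tables, one factor per node given its parents. The names (`joint`, `marginal`, the `P_*` tables) are hypothetical, and the column order of the Weakness table, and hence which of 0.8 and 0.5 is $P(W \mid V, \neg F)$, is our reading of the slide, an assumption; swapping those two entries does not change the classification below.

```python
from itertools import product

# CPTs of the flu network. F = Flu, V = feVer, H = Headache, W = Weakness; True = present.
P_F = 0.1
P_V_GIVEN_F = {True: 0.8, False: 0.1}
P_H_GIVEN_F = {True: 0.6, False: 0.1}
# Keys are (v, f). Assumption: column order of the Weakness table as reconstructed above.
P_W_GIVEN_VF = {(True, True): 0.9, (True, False): 0.8,
                (False, True): 0.5, (False, False): 0.05}


def p(p_true, value):
    """Probability that a binary variable takes `value`, given P(variable = True)."""
    return p_true if value else 1.0 - p_true


def joint(w, v, h, f):
    """P(w, v, h, f) = P(w | v, f) P(v | f) P(h | f) P(f)."""
    return p(P_W_GIVEN_VF[(v, f)], w) * p(P_V_GIVEN_F[f], v) * p(P_H_GIVEN_F[f], h) * p(P_F, f)


def marginal(**fixed):
    """Sum the joint over every variable not fixed, e.g. marginal(v=True, f=True)."""
    total = 0.0
    for w, v, h, f in product((True, False), repeat=4):
        assignment = {"w": w, "v": v, "h": h, "f": f}
        if all(assignment[k] == val for k, val in fixed.items()):
            total += joint(w, v, h, f)
    return total


# Classify the patient W, V, not H: compare P(W, V, not H, F) with P(W, V, not H, not F).
scores = {f: joint(True, True, False, f) for f in (True, False)}
print(scores)                       # approximately {True: 0.0288, False: 0.0648}
print(max(scores, key=scores.get))  # False, i.e. "no flu" is the more probable class

pf = marginal(f=True)
# feVer and Headache are independent given Flu: both values are about 0.48
print(marginal(v=True, h=True, f=True) / pf,
      (marginal(v=True, f=True) / pf) * (marginal(h=True, f=True) / pf))
# feVer and Weakness are not: about 0.72 versus about 0.656
print(marginal(w=True, v=True, f=True) / pf,
      (marginal(w=True, f=True) / pf) * (marginal(v=True, f=True) / pf))
```

Under this reading of the tables, $P(W, V, \neg H, \neg F) = 0.8 \times 0.1 \times 0.9 \times 0.9 = 0.0648$ exceeds $P(W, V, \neg H, F) = 0.0288$, so the patient is more probably classified as not having flu. The last two prints illustrate the slide's examples: $P(V, H \mid F) = P(V \mid F)\,P(H \mid F)$, whereas $P(W, V \mid F) = 0.72$ differs from $P(W \mid F)\,P(V \mid F) = 0.82 \times 0.8 = 0.656$.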
