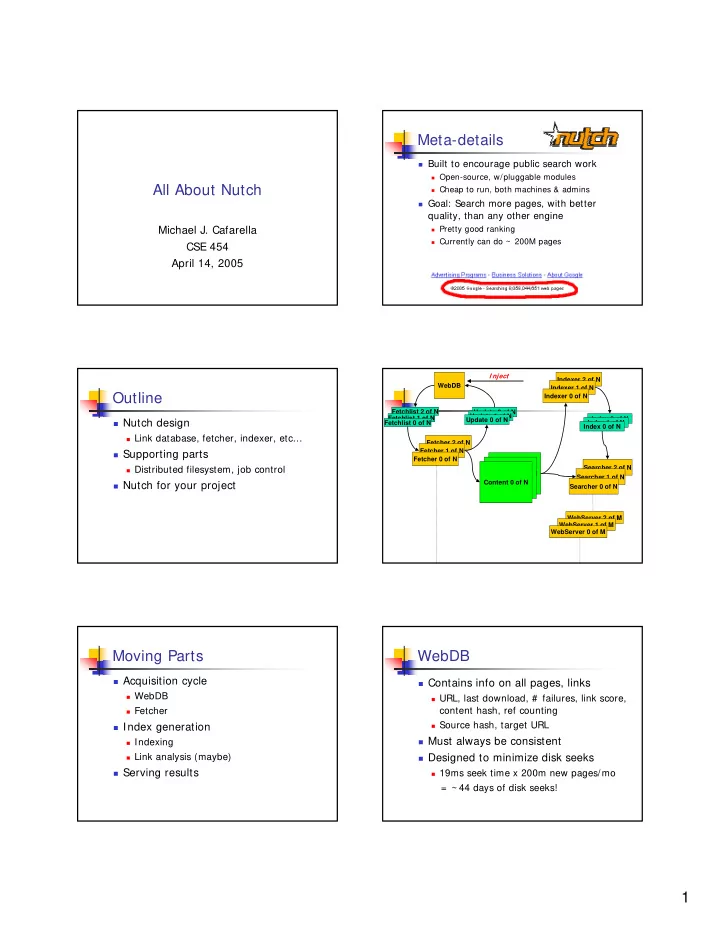

All About Nutch
Michael J. Cafarella
CSE 454
April 14, 2005

Meta-details
- Built to encourage public search work
  - Open-source, w/pluggable modules
  - Cheap to run, both machines & admins
- Goal: Search more pages, with better quality, than any other engine
  - Pretty good ranking
  - Currently can do ~200M pages

[Figure: Nutch architecture diagram. Components shown: Inject, WebDB, Fetchlist 0..2 of N, Fetcher 0..2 of N, Content 0 of N, Update 0..2 of N, Indexer 0..2 of N, Index 0..2 of N, Searcher 0..2 of N, WebServer 0..2 of M]

Outline
- Nutch design
  - Link database, fetcher, indexer, etc…
- Supporting parts
  - Distributed filesystem, job control
- Nutch for your project

Moving Parts
- Acquisition cycle
  - WebDB
  - Fetcher
- Index generation
  - Indexing
  - Link analysis (maybe)
- Serving results

WebDB
- Contains info on all pages, links
  - URL, last download, # failures, link score, content hash, ref counting
  - Source hash, target URL
- Must always be consistent
- Designed to minimize disk seeks
  - 19ms seek time x 200M new pages/mo = ~44 days of disk seeks!
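The disk-seek estimate on the WebDB slide can be checked with a few lines of arithmetic. This is a minimal sketch that assumes exactly one random seek per new page; the `seeks_per_page` parameter and the function name are illustrative and not part of Nutch.

```python
# Back-of-the-envelope check of the slide's disk-seek estimate.
SEEK_TIME_S = 0.019                 # 19 ms per seek (from the slide)
NEW_PAGES_PER_MONTH = 200_000_000   # 200M new pages per month (from the slide)
SECONDS_PER_DAY = 24 * 60 * 60


def seek_days(pages: int, seek_time_s: float, seeks_per_page: int = 1) -> float:
    """Days spent purely on disk seeks, assuming `seeks_per_page` random seeks per page."""
    return pages * seeks_per_page * seek_time_s / SECONDS_PER_DAY


days = seek_days(NEW_PAGES_PER_MONTH, SEEK_TIME_S)
print(f"{days:.1f} days of seeking per month")
```

With one seek per page the total is 3.8 million seconds, which is why a design that touches the database at random for every page cannot keep up with a monthly crawl.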

Fetcher
- Fetcher is very stupid. Not a "crawler"
- Divide "to-fetch list" into k pieces, one for each fetcher machine
- URLs for one domain go to same list, otherwise random
  - "Politeness" w/o inter-fetcher protocols
  - Can observe robots.txt similarly
  - Better DNS, robots caching
  - Easy parallelism
- Two outputs: pages, WebDB edits

WebDB/Fetcher Updates
[Figure: worked example of applying fetcher edits to the WebDB]
WebDB (before):
  URL: http://www.cnn.com/index.html; LastUpdated: Never; ContentHash: None
  URL: http://www.yahoo/index.html; LastUpdated: 4/07/05; ContentHash: MD5_toewkekqmekkalekaa
  URL: http://www.cs.washington.edu/index.html; LastUpdated: 3/22/05; ContentHash: MD5_sdflkjweroiwelksd
Fetcher edits:
  Edit: DOWNLOAD_CONTENT; URL: http://www.cnn.com/index.html; ContentHash: MD5_balboglerropewolefbag
  Edit: DOWNLOAD_CONTENT; URL: http://www.yahoo/index.html; ContentHash: MD5_toewkekqmekkalekaa
  Edit: NEW_LINK; URL: http://www.flickr.com/index.html; ContentHash: None
WebDB (after):
  URL: http://www.cnn.com/index.html; LastUpdated: Today!; ContentHash: MD5_balboglerropewolefbag
  URL: http://www.flickr/com/index.html; LastUpdated: Never; ContentHash: None
  URL: http://www.yahoo.com/index.html; LastUpdated: Today!; ContentHash: MD5_toewkekqmekkalekaa
1. Write down fetcher edits
2. Sort edits (externally, if necessary)
3. Read streams in parallel, emitting new database
4. Repeat for other tables

Indexing
- Iterate through all k page sets in parallel, constructing inverted index
- Creates a "searchable document" of:
  - URL text
  - Content text
  - Incoming anchor text
- Other content types might have different document fields
  - E.g., email has sender/receiver
  - Any searchable field end-user will want
- Uses Lucene text indexer

Link analysis
- A page's relevance depends on both intrinsic and extrinsic factors
  - Intrinsic: page title, URL, text
  - Extrinsic: anchor text, link graph
- PageRank is most famous of many
- Others include:
  - HITS
  - Simple incoming link count
- Link analysis is sexy, but importance generally overstated

Query Processing
[Figure: the query "britney" is sent to five index partitions, and each returns its matching documents]
  Docs 0-1M: Ds 1, 29
  Docs 1-2M: Ds 1.2M, 1.7M
  Docs 2-3M: Ds 2.3M, 2.9M
  Docs 3-4M: Ds 3.1M, 3.2M
  Docs 4-5M: Ds 4.4M, 4.5M
  Merged result for "britney": 1.2M, 4.4M, 29, …

Link analysis (2)
- Nutch performs analysis in WebDB
  - Emit a score for each known page
  - At index time, incorporate score into inverted index
- Extremely time-consuming
  - In our case, disk-consuming, too (because we want to use low-memory machines)
- 0.5 * log(# incoming links)
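If the closing expression is read as the score emitted for each page (an assumption; the slide does not label it), it can be computed directly from the link records. The sketch below is not Nutch code. It assumes a natural logarithm, counts each (source, target) pair once, and leaves pages with no incoming links out of the result; the function name and the sample links are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple


def link_scores(links: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Return 0.5 * ln(# incoming links) for every page that is a link target.

    `links` holds (source, target) pairs. Duplicate pairs are counted once.
    Pages with no incoming links do not appear in the result (assumption).
    """
    incoming = Counter(target for _source, target in set(links))
    return {url: 0.5 * math.log(count) for url, count in incoming.items()}


# Hypothetical link graph: "c" has two distinct incoming links, "b" has one.
links = [("a", "c"), ("b", "c"), ("a", "b"), ("a", "c")]
scores = link_scores(links)
```

A page with a single incoming link scores 0 under this reading, and the logarithm keeps heavily linked pages from dominating the ranking.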

Administering Nutch
- Admin costs are critical
  - It's a hassle when you have 25 machines
  - Google has maybe > 100k
- Files
  - WebDB content, working files
  - Fetchlists, fetched pages
  - Link analysis outputs, working files
  - Inverted indices
- Jobs
  - Emit fetchlists, fetch, update WebDB
  - Run link analysis
  - Build inverted indices

Administering Nutch (2)
- Admin sounds boring, but it's not!
  - Really
  - I swear
- Large-file maintenance
  - Google File System (Ghemawat, Gobioff, Leung)
  - Nutch Distributed File System
- Job Control
  - Map/Reduce (Dean and Ghemawat)

Nutch Distributed File System
- Similar, but not identical, to GFS
- Requirements are fairly strange
  - Extremely large files
  - Most files read once, from start to end
  - Low admin costs per GB
- Equally strange design
  - Write-once, with delete
  - Single file can exist across many machines
  - Wholly automatic failure recovery

NDFS (2)
- Data divided into blocks
- Blocks can be copied, replicated
- Datanodes hold and serve blocks
- Namenode holds metainfo
  - Filename → block list
  - Block → datanode-location
- Datanodes report in to namenode every few seconds

NDFS File Read
[Figure: a client, the Namenode, and Datanodes 0-5. Namenode entry for "crawl.txt": (block-33 / datanodes 1, 4), (block-95 / datanodes 0, 2), (block-65 / datanodes 1, 4, 5)]
1. Client asks namenode for filename info
2. Namenode responds with blocklist, and location(s) for each block
3. Client fetches each block, in sequence, from a datanode

NDFS Replication
[Figure: Namenode and Datanodes 0-5 with the blocks they hold. Datanode 0: (33, 95); Datanode 1: (46, 95); Datanode 2: (33, 104); Datanode 3: (21, 33, 46); Datanode 4: (90); Datanode 5: (21, 90, 104). Namenode order: (Blk 90 to dn 0)]
1. Always keep at least k copies of each blk
2. Imagine datanode 4 dies; blk 90 lost
3. Namenode loses heartbeat, decrements blk 90's reference count. Asks datanode 5 to replicate blk 90 to datanode 0
4. Choosing replication target is tricky

Map/Reduce
- Map/Reduce is programming model from Lisp (and other places)
  - Easy to distribute across nodes
  - Nice retry/failure semantics
- map(key, val) is run on each item in set
  - emits key/val pairs
- reduce(key, vals) is run for each unique key emitted by map()
  - emits final output
- Many problems can be phrased this way

Map/Reduce (2)
- Task: count words in docs
  - Input consists of (url, contents) pairs
  - map(key=url, val=contents):
    - For each word w in contents, emit (w, "1")
  - reduce(key=word, values=uniq_counts):
    - Sum all "1"s in values list
    - Emit result "(word, sum)"

Map/Reduce (3)
- Task: grep
  - Input consists of (url+offset, single line)
  - map(key=url+offset, val=line):
    - If contents matches regexp, emit (line, "1")
  - reduce(key=line, values=uniq_counts):
    - Don't do anything; just emit line
- We can also do graph inversion, link analysis, WebDB updates, etc

Map/Reduce (4)
- How is this distributed?
  1. Partition input key/value pairs into chunks, run map() tasks in parallel
  2. After all map()s are complete, consolidate all emitted values for each unique emitted key
  3. Now partition space of output map keys, and run reduce() in parallel
- If map() or reduce() fails, reexecute!

Map/Reduce Job Processing
[Figure: a client submitting the "grep" job to the JobTracker, which coordinates TaskTrackers 0-5]
1. Client submits "grep" job, indicating code and input files
2. JobTracker breaks input file into k chunks (in this case 6). Assigns work to tasktrackers.
3. After map(), tasktrackers exchange map-output to build reduce() keyspace
4. JobTracker breaks reduce() keyspace into m chunks (in this case 6). Assigns work.
5. reduce() output may go to NDFS

Searching webcams
- Index size will be small
- Need all the hints you can get
  - Page text, anchor text
  - URL sources like Yahoo or DMOZ entries
- Webcam-only content types
  - Avoid processing images at query time
  - Take a look at Nutch pluggable content types (current examples include PDF, MS Word, etc.). Might work.
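For reference, the word-count and grep tasks from the Map/Reduce slides can be run through a single-process stand-in for the three phases (map, consolidate by key, reduce). This is a sketch of the programming model only, with no distribution or retry; `run_mapreduce`, the helper names, and the example URLs are assumptions made for illustration.

```python
import re
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

KV = Tuple[str, str]


def run_mapreduce(
    inputs: Iterable[KV],
    map_fn: Callable[[str, str], Iterator[KV]],
    reduce_fn: Callable[[str, List[str]], Iterator[KV]],
) -> List[KV]:
    """Run map(), group emitted values by key, then run reduce() per unique key."""
    # Phase 1: run map() on every input pair.
    emitted = [kv for key, val in inputs for kv in map_fn(key, val)]
    # Phase 2: consolidate all emitted values under each unique key.
    grouped: Dict[str, List[str]] = defaultdict(list)
    for key, val in emitted:
        grouped[key].append(val)
    # Phase 3: run reduce() once per unique key (keys sorted for stable output).
    return [out for key in sorted(grouped) for out in reduce_fn(key, grouped[key])]


def wc_map(url: str, contents: str) -> Iterator[KV]:
    for word in contents.split():
        yield (word, "1")


def wc_reduce(word: str, counts: List[str]) -> Iterator[KV]:
    yield (word, str(sum(int(c) for c in counts)))


def make_grep_map(pattern: str) -> Callable[[str, str], Iterator[KV]]:
    regexp = re.compile(pattern)

    def grep_map(url_offset: str, line: str) -> Iterator[KV]:
        if regexp.search(line):
            yield (line, "1")

    return grep_map


def grep_reduce(line: str, counts: List[str]) -> Iterator[KV]:
    yield (line, "")  # nothing to compute; just emit the matching line


docs = [("http://a.example/", "nutch is open source"),
        ("http://b.example/", "nutch is cheap")]
word_counts = run_mapreduce(docs, wc_map, wc_reduce)

lines = [("http://a.example/+0", "nutch design"),
         ("http://a.example/+13", "supporting parts"),
         ("http://b.example/+0", "nutch for your project")]
matches = run_mapreduce(lines, make_grep_map(r"nutch"), grep_reduce)
```

Because map() and reduce() only see their own arguments, each phase could be split into chunks and rerun after a failure, which is the property the distributed version relies on.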

Searching webcams (2)
- Annotate Lucene document with new fields
  - "Image qualities" might contain "indoors" or "daylight" or "flesh tones"
  - Parse text for city names to fill "location" field
  - Multiple downloads to compute "latitude" field
  - Others?
- Will require new search procedure, too

Conclusion
- http://www.nutch.org/
  - Partial documentation
  - Source code
  - Developer discussion board
- "Lucene in Action" by Hatcher, Gospodnetic (you can borrow mine)
- Questions?
