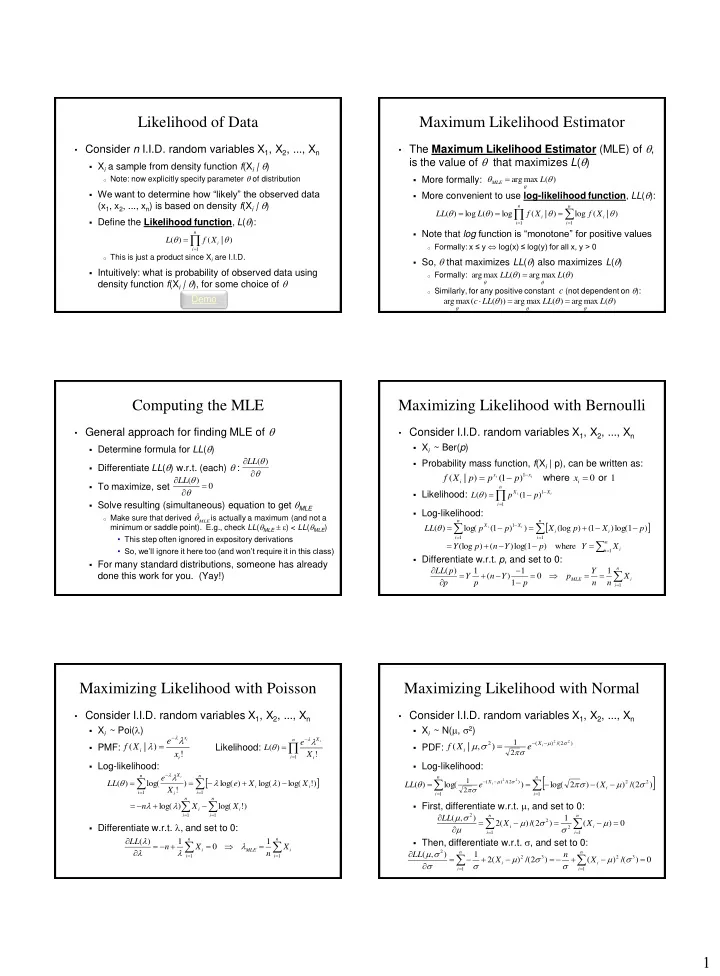

Likelihood of Data Maximum Likelihood Estimator • The Maximum Likelihood Estimator (MLE) of , • Consider n I.I.D. random variables X 1 , X 2 , ..., X n is the value of that maximizes L ( ) X i a sample from density function f (X i | ) MLE o Note: now explicitly specify parameter of distribution More formally: arg max ( ) L We want to determine how “likely” the observed data More convenient to use log-likelihood function , LL ( ): (x 1 , x 2 , ..., x n ) is based on density f (X i | ) n n LL ( ) log L ( ) log f ( X | ) log f ( X | ) Define the Likelihood function , L ( ): i i i 1 i 1 Note that log function is “monotone” for positive values n L ( ) f ( X | ) i o Formally: x ≤ y log(x) ≤ log(y) for all x, y > 0 i 1 o This is just a product since X i are I.I.D. So, that maximizes LL ( ) also maximizes L ( ) Intuitively: what is probability of observed data using o Formally: arg max LL ( ) arg max L ( ) density function f (X i | ), for some choice of o Similarly, for any positive constant c (not dependent on ): Demo arg max ( ( )) arg max ( ) arg max ( ) c LL LL L Computing the MLE Maximizing Likelihood with Bernoulli • General approach for finding MLE of • Consider I.I.D. random variables X 1 , X 2 , ..., X n Determine formula for LL ( ) X i ~ Ber( p ) LL ( ) Probability mass function, f (X i | p), can be written as: Differentiate LL ( ) w.r.t. (each) : x 1 x f ( X | p ) p ( 1 p ) where x 0 or 1 i i LL ( ) i i To maximize, set 0 n ( Likelihood: X 1 X L ) p ( 1 p ) i i Solve resulting (simultaneous) equation to get MLE i 1 Log-likelihood: ˆ o Make sure that derived is actually a maximum (and not a MLE n n minimum or saddle point). E.g., check LL ( MLE ) < LL ( MLE ) ( X 1 X LL ) log( p ( 1 p ) ) X (log p ) ( 1 X ) log( 1 p ) i i i i • This step often ignored in expository derivations i 1 i 1 n (log ) ( ) log( 1 ) where Y p n Y p Y X • So, we’ll ignore it here too (and won’t require it in this class) i i 1 Differentiate w.r.t. p , and set to 0: For many standard distributions, someone has already n LL ( p ) 1 1 Y 1 done this work for you. (Yay!) Y ( n Y ) 0 p X MLE i p p 1 p n n i 1 Maximizing Likelihood with Poisson Maximizing Likelihood with Normal • Consider I.I.D. random variables X 1 , X 2 , ..., X n • Consider I.I.D. random variables X 1 , X 2 , ..., X n X i ~ Poi( l ) X i ~ N( m , 2 ) l l x l e l X i n e i m 1 m 2 2 l 2 ( X ) /( 2 ) ( | ) ( | , ) PMF: f X Likelihood: ( ) PDF: f X e i L i i 2 x ! ! X 1 i i i Log-likelihood: Log-likelihood: l l X n n e i n n l l 1 m 2 2 m ( ) log( ) log( ) log( ) log( ! ) ( X ) /( 2 ) 2 2 LL e X X LL ( ) log( e ) log( 2 ) ( X ) /( 2 ) i i i ! i X 2 1 1 i i i i 1 i 1 n n First, differentiate w.r.t. m , and set to 0: l log( l ) log( ! ) n X X i i m 1 1 2 i i LL ( , ) n 1 n m m 2 2 ( X ) /( 2 ) ( X ) 0 Differentiate w.r.t. l , and set to 0: m i 2 i i 1 i 1 l n n Then, differentiate w.r.t. , and set to 0: LL ( ) 1 1 l n X 0 X l l i MLE i m n ( , 2 ) n 1 n LL n i 1 i 1 m m 2 ( ) 2 /( 2 3 ) ( ) 2 /( 3 ) 0 X X i i i 1 i 1 1

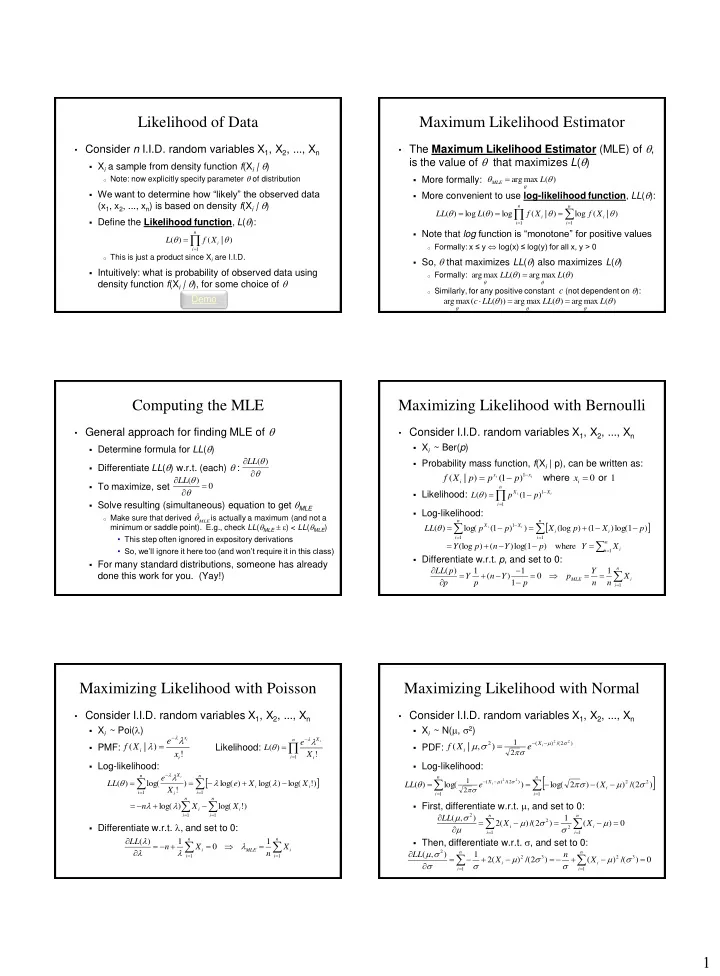

Being Normal, Simultaneously Maximizing Likelihood with Uniform Now have two equations, two unknowns: • Consider I.I.D. random variables X 1 , X 2 , ..., X n 1 n n n X i ~ Uni( a , b ) m m 2 3 ( X ) 0 ( X ) /( ) 0 1 2 i i a x b b a i 1 i 1 PDF: f ( X | a , b ) i i 0 otherwise First, solve for m MLE : n 1 a x , x ,..., x b n n n 1 1 Likelihood: b a ( ) 1 2 n m m m L ( X ) 0 X n X 2 i i MLE i n 0 otherwise i 1 i 1 i 1 o Constraint a < x 1 , x 2 , …, x n < b makes differentiation tricky Then, solve for 2MLE : o Intuition: want interval size (b – a) to be as small as possible to n n n m 2 3 2 m 2 ( ) /( ) 0 ( ) X n X maximize likelihood function for each data point i i 1 1 i i n o But need to make sure all observed data contained in interval 1 m 2 2 ( X ) MLE i MLE • If all observed data not in interval, then L ( ) = 0 n i 1 Note: m MLE unbiased, but 2 Solution: a MLE = min(x 1 , …, x n ) b MLE = max(x 1 , …, x n ) MLE biased (same as MOM) Understanding MLE with Uniform Once Again, Small Samples = Problems • Consider I.I.D. random variables X 1 , X 2 , ..., X n • How do small samples effect MLE? 1 n X i ~ Uni(0, 1) m In many cases, = sample mean X MLE i n 1 Observe data: i o Unbiased. Not too shabby… o 0.15, 0.20, 0.30, 0.40, 0.65, 0.70, 0.75 1 n m 2 2 As seen with Normal, ( X ) MLE i MLE n Likelihood: L( a, 1) Likelihood: L(0, b ) i 1 o Biased. Underestimates for small n (e.g., 0 for n = 1) As seen with Uniform, a MLE ≥ a and b MLE ≤ b L(a, 1 ) L( 0, b) o Biased. Problematic for small n (e.g., a = b when n = 1) Small sample phenomena intuitively make sense: o Maximum likelihood best explain data we’ve seen o Does not attempt to generalize to unseen data a b Properties of MLE Maximizing Likelihood with Multinomial • Maximum Likelihood Estimators are generally: • Consider I.I.D. random variables Y 1 , Y 2 , ..., Y n m ˆ p 1 Consistent: for > 0 lim (| | ) 1 P Y k ~ Multinomial(p 1 , p 2 , ..., p m ), where i n i 1 m X n X i = number of trials with outcome i where i Potentially biased (though asymptotically less so) i 1 n ! x x x Asymptotically optimal PDF: f ( X ,..., X | p ,..., p ) p 1 p 2 ... p m ! ! ! 1 m 1 m x x x 1 2 m 1 2 m o Has smallest variance of “good” estimators for large samples m m ( Log-likelihood: ) log( ! ) log( ! ) log( ) LL n X X p Often used in practice where sample size is large i i i 1 1 i i relative to parameter space m Account for constraint when differentiating LL ( ) p 1 i o But be careful, there are some very large parameter spaces i 1 Use Lagrange multipliers (drop non-p i terms): o Joint distributions of several variables can cause problems m m • Parameter space grows exponentially Rock on, dog! l ( ) log( ) ( 1 ) A X p p i i i • Parameter space for 10 dependent binary variables 2 10 1 1 i i Joseph-Louis Lagrange (1736-1813) 2

Recommend

More recommend