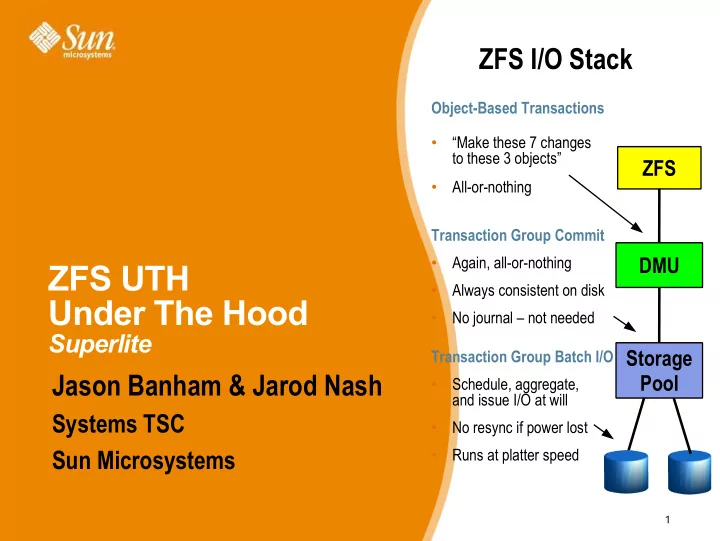

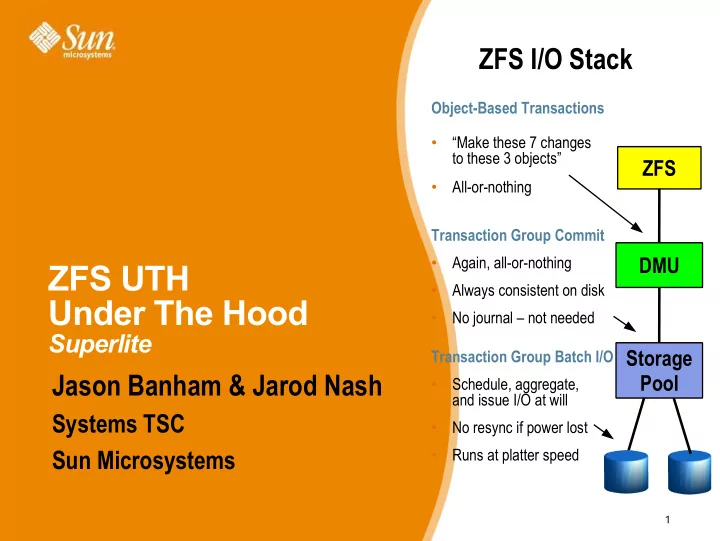

ZFS I/O Stack Object-Based Transactions • “Make these 7 changes to these 3 objects” ZFS • All-or-nothing Transaction Group Commit Again, all-or-nothing DMU • ZFS UTH Always consistent on disk • Under The Hood • No journal – not needed Superlite Storage Transaction Group Batch I/O Jason Banham & Jarod Nash Pool Schedule, aggregate, • and issue I/O at will Systems TSC • No resync if power lost Sun Microsystems Runs at platter speed • 1

ZFS Elevator Pitch “To create a reliable storage system from inherently unreliable components” • Data Integrity > Historically considered “too expensive” > Turns out, no it isn't > Real world evidence shows silent corruption a reality > Alternative is unacceptable • Ease of Use > Combined filesystem and volume management > Underlying storage managed as Pools which simply admin > Two commands: zpool & zfs > zpool: manage storage pool (aka volume management) > zfs: manage filesystems 2

ZFS Data Integrity 2 Aspects 1.Always consistent on disk format > Everything is copy-on-write (COW) > Never overwrite live data > On-disk state always valid – no “windows of vulnerability” > Provides snapshots “for free” > Everything is transactional > Related changes succeed or fail as a whole – AKA Transaction Group (TXG) > No need for journaling 2.End to End checksums > Filesystem metadata and file data protected using checksums > Protects End to End across interconnect, handling failings between storage and host 3

ZFS COW: Copy On Write 1. Initial block tree 2. COW some blocks 3. COW indirect blocks 4. Rewrite uberblock (atomic) 4

FS/Volume Model vs. ZFS FS/Volume I/O Stack ZFS I/O Stack Object-Based Transactions ZFS Block Device Interface FS • “Make these 7 changes “Write this block, • to these 3 objects” then that block, ...” • All-or-nothing • Loss of power = loss of on- disk consistency Transaction Group Commit DMU Workaround: journaling, • • Again, all-or-nothing which is slow & complex • Always consistent on disk Volume No journal – not needed • Block Device Interface Storage Transaction Group Batch I/O Pool • Write each block to each disk immediately to keep mirrors in Schedule, aggregate, • sync and issue I/O at will • Loss of power = resync No resync if power lost • • Synchronous and slow Runs at platter speed • 5

ZFS End to End Checksums ZFS Data Authentication Disk Block Checksums • Checksum stored with data block Checksum stored in parent block pointer • • Any self-consistent block will pass • Fault isolation between data and checksum • Can't even detect stray writes • Entire storage pool is a • Inherent FS/volume interface limitation Address Address self-validating Merkle tree Checksum Checksum Address Address Data Data Checksum Checksum Checksum Checksum Data Data ZFS validates the entire I/O path Disk checksum only validates media ✔ Bit rot ✔ Bit rot ✗ Phantom writes ✔ Phantom writes ✗ Misdirected reads and writes ✔ Misdirected reads and writes ✗ DMA parity errors ✔ DMA parity errors ✗ Driver bugs ✔ Driver bugs ✗ Accidental overwrite ✔ Accidental overwrite 6

Traditional Mirroring 1. Application issues a read. 2. Volume manager passes 3. Filesystem returns bad data Mirror reads the first disk, bad block up to filesystem. to the application. which has a corrupt block. If it's a metadata block, the It can't tell. filesystem panics. If not... Application Application Application FS FS FS xxVM mirror xxVM mirror xxVM mirror 7

Self-Healing Data in ZFS 1. Application issues a read. 2. ZFS tries the second disk. 3. ZFS returns good data ZFS mirror tries the first disk. Checksum indicates that the to the application and block is good. repairs the damaged block. Checksum reveals that the block is corrupt on disk. Application Application Application ZFS mirror ZFS mirror ZFS mirror 8

ZFS Administration • Pooled storage – no more volumes! > All storage is shared – no wasted space, no wasted bandwidth • Hierarchical filesystems with inherited properties > Filesystems become administrative control points – Per-dataset policy: snapshots, compression, backups, privileges, etc. – Who's using all the space? du(1) takes forever, but df(1M) is instant! > Manage logically related filesystems as a group > Control compression, checksums, quotas, reservations, and more > Mount and share filesystems without /etc/vfstab or /etc/dfs/dfstab > Inheritance makes large-scale administration a snap • Online everything 9

FS/Volume Model vs. ZFS Traditional Volumes ZFS Pooled Storage • Abstraction: virtual disk • Abstraction: malloc/free • Partition/volume for each FS • No partitions to manage • Grow/shrink by hand • Grow/shrink automatically • Each FS has limited bandwidth • All bandwidth always available • Storage is fragmented, stranded • All storage in the pool is shared FS FS FS ZFS ZFS ZFS Volume Volume Volume Storage Pool 10

Dynamic Striping • Automatically distributes load across all devices • Writes: striped across all four mirrors • Writes: striped across all five mirrors • Reads: wherever the data was written • Reads: wherever the data was written • Block allocation policy considers: • No need to migrate existing data > Old data striped across 1-4 > Capacity > New data striped across 1-5 > Performance (latency, BW) > COW gently reallocates old data > Health (degraded mirrors) ZFS ZFS ZFS ZFS ZFS ZFS Storage Pool Storage Pool Add Mirror 5 Add Mirror 5 1 1 2 3 4 1 2 3 4 5 2 3 4 1 2 3 4 5 11

Snapshots for Free • The combination of COW and TXGs means constant time snapshots fall out for free* • At end of TXG, don't free COWed blocks > Actually cheaper to take a snapshot than not! Snapshot Live root root *Nothing is ever free, old COWed blocks of course consume space 12

Disk Scrubbing • Finds latent errors while they're still correctable > ECC memory scrubbing for disks • Verifies the integrity of all data > Traverses pool metadata to read every copy of every block > Verifies each copy against its 256-bit checksum > Self-healing as it goes • Provides fast and reliable resilvering > Traditional resilver: whole-disk copy, no validity check > ZFS resilver: live-data copy, everything checksummed > All data-repair code uses the same reliable mechanism – Mirror resilver, RAIDZ resilver, attach, replace, scrub 13

ZFS Commands • zfs(1m) used to administer ZFS ZFS ZFS filesystems, zvols, and dataset properties Storage Pool • zpool(1m) used to control the storage pool 14

ZFS Live Demo 15

ZFS Availability • OpenSolaris > Open Source version of latest Solaris in development (nevada) > Available via: > Solaris Express Developer Edition > Solaris Express Community Edition > OpenSolaris Developer Preview 2 (Project Indiana) > Other distros (Belenix, Nexenta, Schilix, MarTux) • Solaris 10 > Since Update 2 (latest is Update 4) • OpenSolaris versions will always have the latest and greatest bits and therefore best version to play with and explore the potential of ZFS 16

ZFS Under The Hood • Full day of ZFS Presentations and Talks > Covering: > Overview – more of this presentation and “manager safe” > Issues – known issues around current ZFS implementation > Under The Hood – how ZFS does what it does • If you are seriously interested in ZFS and want to know more, or have discussions or just plainly interested in how it works, then drop us a line: > Jarod.Nash@sun.com > Jason.Banham@sun.com 17

Recommend

More recommend