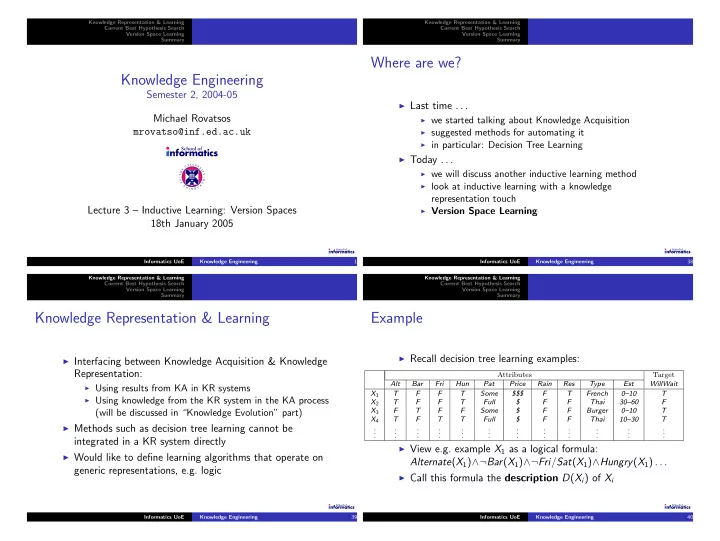

Knowledge Engineering
Semester 2, 2004-05
Lecture 3 – Inductive Learning: Version Spaces
Michael Rovatsos, mrovatso@inf.ed.ac.uk
Informatics UoE (The University of Edinburgh), Knowledge Engineering
18th January 2005

Outline: Knowledge Representation & Learning · Current Best Hypothesis Search · Version Space Learning · Summary

Where are we?
◮ Last time . . .
  ◮ we started talking about Knowledge Acquisition
  ◮ suggested methods for automating it
  ◮ in particular: Decision Tree Learning
◮ Today . . .
  ◮ we will discuss another inductive learning method
  ◮ look at inductive learning with a knowledge representation touch
  ◮ Version Space Learning

Knowledge Representation & Learning
◮ Interfacing between Knowledge Acquisition & Knowledge Representation:
  ◮ Using results from KA in KR systems
  ◮ Using knowledge from the KR system in the KA process (will be discussed in the "Knowledge Evolution" part)
◮ Methods such as decision tree learning cannot be integrated in a KR system directly
◮ Would like to define learning algorithms that operate on generic representations, e.g. logic

Example
◮ Recall the decision tree learning examples (columns Alt to Est are the attributes, WillWait is the target):

| Example | Alt | Bar | Fri | Hun | Pat | Price | Rain | Res | Type | Est | WillWait |
|---|---|---|---|---|---|---|---|---|---|---|---|
| X1 | T | F | F | T | Some | \$\$\$ | F | T | French | 0–10 | T |
| X2 | T | F | F | T | Full | \$ | F | F | Thai | 30–60 | F |
| X3 | F | T | F | F | Some | \$ | F | F | Burger | 0–10 | T |
| X4 | T | F | T | T | Full | \$ | F | F | Thai | 10–30 | T |
| … | … | … | … | … | … | … | … | … | … | … | … |

◮ View e.g. example X1 as a logical formula:

$$Alternate(X_1) \land \lnot Bar(X_1) \land \lnot Fri/Sat(X_1) \land Hungry(X_1) \land \ldots$$

◮ Call this formula the description $D(X_i)$ of $X_i$
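As a minimal sketch of how a row of the table becomes its description $D(X_i)$, the Python below turns each attribute value into a literal: Boolean attributes become unary literals (negated when false) and multi-valued attributes become binary predicates such as $Patrons(X_1, Some)$. Only rows X1 to X4 are encoded. The predicate names `Price`, `Raining`, `Reservation` and `WaitEstimate`, the `ROWS` layout and the helper names `literal` and `description` are illustrative assumptions, not notation fixed by the lecture.

```python
# Rows X1-X4 of the example table; True/False stand for T/F.
ATTRS = ("Alt", "Bar", "Fri", "Hun", "Pat", "Price", "Rain", "Res", "Type", "Est")
# Predicate name per column. Alternate, Bar, Fri/Sat, Hungry, Patrons and Type appear
# in the slides; Price, Raining, Reservation and WaitEstimate are assumed names.
PREDICATES = ("Alternate", "Bar", "Fri/Sat", "Hungry", "Patrons", "Price",
              "Raining", "Reservation", "Type", "WaitEstimate")
ROWS = {
    "X1": (True, False, False, True, "Some", "$$$", False, True, "French", "0-10"),
    "X2": (True, False, False, True, "Full", "$", False, False, "Thai", "30-60"),
    "X3": (False, True, False, False, "Some", "$", False, False, "Burger", "0-10"),
    "X4": (True, False, True, True, "Full", "$", False, False, "Thai", "10-30"),
}


def literal(predicate, name, value):
    """Boolean attribute -> unary literal (negated if false); otherwise a binary predicate."""
    if isinstance(value, bool):
        return f"{predicate}({name})" if value else f"¬{predicate}({name})"
    return f"{predicate}({name}, {value})"


def description(name):
    """D(X_i): the conjunction of one literal per attribute of example X_i."""
    return " ∧ ".join(literal(p, name, v) for p, v in zip(PREDICATES, ROWS[name]))


print(description("X1"))
# Alternate(X1) ∧ ¬Bar(X1) ∧ ¬Fri/Sat(X1) ∧ Hungry(X1) ∧ Patrons(X1, Some) ∧ Price(X1, $$$)
#   ∧ ¬Raining(X1) ∧ Reservation(X1) ∧ Type(X1, French) ∧ WaitEstimate(X1, 0-10)
```

The output begins exactly like the formula on the slide. Keeping each value as its own literal is what lets later steps add or drop single conditions.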

Example: Describing DTL in First-Order Logic
◮ Classification: $WillWait(X_1)$
◮ Use the generalised notation $Q(X_i)$ / $\lnot Q(X_i)$ for the classification of positive/negative examples
◮ Training set = conjunction of all description and classification sentences:

$$D(X_1) \land Q(X_1) \land D(X_2) \land \lnot Q(X_2) \land D(X_3) \land Q(X_3) \land \ldots$$

◮ Each hypothesis $H_i$ is equivalent to a candidate definition $C_i(x)$ such that $\forall x\, Q(x) \Leftrightarrow C_i(x)$

Example
Recall the decision tree from last lecture:
[Figure: decision tree from last lecture. Root test Patrons? (None → F, Some → T, Full → WaitEstimate?); deeper tests on Alternate?, Hungry?, Reservation?, Fri/Sat?, Bar? and Raining?]

Example
This is equivalent to the disjunction of all branches that lead to a "true" node (the formula for each branch is the conjunction of the attribute values on that branch):

$$
\begin{aligned}
\forall r\; WillWait(r) \Leftrightarrow\; & Patrons(r, Some) \\
\lor\; & (Patrons(r, Full) \land Hungry(r) \land Type(r, French)) \\
\lor\; & (Patrons(r, Full) \land Hungry(r) \land Type(r, Thai) \land Fri/Sat(r)) \\
\lor\; & (Patrons(r, Full) \land Hungry(r) \land Type(r, Burger))
\end{aligned}
$$

Here the left-hand side $WillWait(r)$ is $Q(r)$ and the right-hand side is the candidate definition $C_i(r)$.

Hypotheses and Hypothesis Spaces
◮ The set of examples that satisfy a candidate definition is the extension of the respective hypothesis
◮ In the learning process, we can rule out hypotheses that are not consistent with the examples
◮ Two cases:
  ◮ False negative: hypothesis predicts a negative outcome, but the classification of the example is positive
  ◮ False positive: hypothesis predicts a positive outcome, but the classification of the example is negative
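To make extensions, false positives and false negatives concrete, here is a small Python sketch that treats each candidate definition $C_i(r)$ as a Boolean function of an example's attributes. `c_tree` encodes the right-hand side of the $WillWait$ formula. `c_some` and `c_hungry` are hypothetical, deliberately simpler hypotheses added only for comparison. Only rows X1 to X4 of the example table are used, restricted to the attributes the formula mentions. All function names are illustrative.

```python
# X1-X4 from the example table, keeping only the attributes the formula uses.
EXAMPLES = {
    "X1": {"Fri": False, "Hun": True, "Pat": "Some", "Type": "French"},
    "X2": {"Fri": False, "Hun": True, "Pat": "Full", "Type": "Thai"},
    "X3": {"Fri": False, "Hun": False, "Pat": "Some", "Type": "Burger"},
    "X4": {"Fri": True, "Hun": True, "Pat": "Full", "Type": "Thai"},
}
WILL_WAIT = {"X1": True, "X2": False, "X3": True, "X4": True}  # Q(X_i)


def c_tree(r):
    """C_i(r): right-hand side of the WillWait formula."""
    full_hungry = r["Pat"] == "Full" and r["Hun"]
    return (r["Pat"] == "Some"
            or (full_hungry and r["Type"] == "French")
            or (full_hungry and r["Type"] == "Thai" and r["Fri"])
            or (full_hungry and r["Type"] == "Burger"))


def c_some(r):
    """Hypothetical simpler hypothesis: Patrons(r, Some)."""
    return r["Pat"] == "Some"


def c_hungry(r):
    """Hypothetical simpler hypothesis: Hungry(r)."""
    return r["Hun"]


def extension(h):
    """Names of the examples that satisfy the candidate definition h."""
    return sorted(n for n, r in EXAMPLES.items() if h(r))


def errors(h):
    """(false positives, false negatives) of hypothesis h on the examples."""
    fp = sorted(n for n, r in EXAMPLES.items() if h(r) and not WILL_WAIT[n])
    fn = sorted(n for n, r in EXAMPLES.items() if not h(r) and WILL_WAIT[n])
    return fp, fn


for h in (c_tree, c_some, c_hungry):
    print(h.__name__, errors(h))
# c_tree ([], [])
# c_some ([], ['X4'])
# c_hungry (['X2'], ['X3'])
```

On these four examples `c_tree` is consistent. `c_some` misses X4 (a false negative), and `c_hungry` makes one error of each kind, so a learner would rule both of them out.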

Hypotheses and Hypothesis Spaces
◮ Learning algorithm believes that one of its hypotheses is true, i.e. $H_1 \lor H_2 \lor H_3 \lor \ldots$
◮ Each false positive/false negative could be used to rule out inconsistent hypotheses from the hypothesis space: a general model of inductive learning
◮ But not practicable if the hypothesis space is vast, e.g. all formulae of first-order logic
◮ Have to look for simpler methods:
  ◮ Current-best hypothesis search
  ◮ Version space learning

Current-Best Hypothesis Search
◮ Idea very simple: adjust hypothesis to maintain consistency with examples
◮ Uses specialisation/generalisation of current hypothesis to exclude false positives/include false negatives
[Figure, panels (a)–(e): positive (+) and negative (–) examples and the region covered by the current hypothesis as it is generalised and specialised]
◮ Assumes "more general than" and "more specific than" relations to search hypothesis space efficiently

Current-Best Hypothesis Search

    Current-Best-Learning(examples)
      H ← any hypothesis consistent with the first example in examples
      for each remaining example e in examples do
        if e is a false positive for H then
          H ← choose a specialisation of H consistent with examples
        else if e is a false negative for H then
          H ← choose a generalisation of H consistent with examples
        if no consistent specialisation/generalisation can be found then fail
      return H

Things to note:
◮ Non-deterministic choice of specialisation/generalisation
◮ Does not provide rules for specialisation/generalisation
  ◮ One possibility: add/drop conditions

Version Space Learning
◮ Problems of current-best learning:
  ◮ Have to check all examples again after each modification
  ◮ Involves a great deal of backtracking
◮ Alternative: maintain the set of all hypotheses consistent with the examples
◮ Version space = set of remaining hypotheses
◮ Algorithm:

    Version-Space-Learning(examples)
      V ← set of all hypotheses
      for each example e in examples do
        if V is not empty then V ← {h ∈ V : h is consistent with e}
      return V
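A minimal Python sketch of Current-Best-Learning follows. It takes the "add/drop conditions" option from the notes: a hypothesis is a conjunction of attribute = value conditions stored as a dict, where `{}` means True. The two rules used here are assumptions of this sketch. Generalising drops every condition a false negative violates. Specialising adds one condition that all positives seen so far share and the false positive violates. Further simplifications:

- The choice is deterministic (first candidate), not non-deterministic.
- Consistency is checked against the examples seen so far.
- There is no backtracking.
- The first example is assumed to be positive.

All helper names are hypothetical.

```python
# Rows X1-X4 of the restaurant table, all ten attributes (True/False stand for T/F).
ATTRS = ("Alt", "Bar", "Fri", "Hun", "Pat", "Price", "Rain", "Res", "Type", "Est")
X1 = dict(zip(ATTRS, (True, False, False, True, "Some", "$$$", False, True, "French", "0-10")))
X2 = dict(zip(ATTRS, (True, False, False, True, "Full", "$", False, False, "Thai", "30-60")))
X3 = dict(zip(ATTRS, (False, True, False, False, "Some", "$", False, False, "Burger", "0-10")))
X4 = dict(zip(ATTRS, (True, False, True, True, "Full", "$", False, False, "Thai", "10-30")))


def covers(h, x):
    """A conjunctive hypothesis covers x if x satisfies every condition in it."""
    return all(x[a] == v for a, v in h.items())


def consistent(h, seen):
    """No false positives or false negatives among the (example, label) pairs seen."""
    return all(covers(h, x) == label for x, label in seen)


def generalise(h, x):
    """Assumed rule: drop every condition that the false negative x violates."""
    return {a: v for a, v in h.items() if x[a] == v}


def specialise(h, x, seen):
    """Assumed rule: add one condition shared by all positives seen so far that x violates."""
    positives = [p for p, label in seen if label]
    for a in ATTRS:
        if a in h:
            continue
        values = {p[a] for p in positives}
        if len(values) == 1 and x[a] not in values:
            return {**h, a: values.pop()}
    return None  # no single added condition excludes x


def current_best_learning(examples):
    """examples: list of (attribute dict, bool label); returns a dict, or None on failure."""
    first, label = examples[0]
    if not label:
        raise ValueError("this sketch assumes the first example is positive")
    h = dict(first)  # the full description of the first example is consistent with it
    seen = [examples[0]]
    for x, label in examples[1:]:
        seen.append((x, label))
        if covers(h, x) and not label:      # false positive -> specialise
            h = specialise(h, x, seen)
        elif not covers(h, x) and label:    # false negative -> generalise
            h = generalise(h, x)
        if h is None or not consistent(h, seen):
            return None                     # "fail": this sketch does not backtrack
    return h


print(current_best_learning([(X1, True), (X2, False), (X3, True)]))
# {'Fri': False, 'Pat': 'Some', 'Rain': False, 'Est': '0-10'}
print(current_best_learning([(X1, True), (X2, False), (X3, True), (X4, True)]))
# None
```

On X1 to X3 the hypothesis is generalised once, when X3 turns out to be a false negative. Adding X4 makes the sketch fail: the only condition shared by X1, X3 and X4 is Rain = F, and X2 also has Rain = F. So no conjunction of attribute values separates these examples. This is where backtracking, or a richer hypothesis language, would be needed.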

Version Space Learning
◮ Advantages:
  ◮ incremental approach (don't have to consider old examples again)
  ◮ least-commitment algorithm
◮ Problem: how to write down the disjunction of all hypotheses? (think of interval notation [1, 2])
◮ Exploit the ordering on hypotheses and boundary sets:
  ◮ G-set: most general boundary (no more general hypotheses are consistent with all examples)
  ◮ S-set: most specific boundary (no more specific hypotheses are consistent with all examples)

[Figure: the version space lies between the G-set $G_1, G_2, G_3, \ldots, G_m$ (more general) and the S-set $S_1, S_2, \ldots, S_n$ (more specific); the regions above G and below S are all inconsistent]

Version Space Learning
◮ Everything between G and S (the version space) is consistent with the examples and represented by the boundary sets
◮ Initially: $G = \{True\}$, $S = \{False\}$
◮ How to prove that this is a reasonable representation?
◮ Need to show two properties:
  ◮ Every consistent H not in the boundary sets is more specific than some $G_i$ and more general than some $S_j$ (follows from the definition)
  ◮ Every H more specific than some $G_i$ and more general than some $S_j$ is consistent: any such H rejects all negative examples rejected by each member of G and accepts all positive examples accepted by any member of S

Version space learning
There are no known examples "between" S and G, i.e. outside S but inside G:
[Figure: boundary hypotheses $G_1$, $G_2$ and $S_1$ with positive (+) and negative (–) examples; a consistent hypothesis H lies between S and G]
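The boundary-set representation can be checked by brute force on a deliberately tiny hypothesis space. The sketch below makes its own assumptions:

- Hypotheses are conjunctions over only the Fri, Hun and Pat attributes.
- `?` is a wildcard for an unconstrained attribute.
- A separate `FALSE` hypothesis covers nothing.

It implements Version-Space-Learning by filtering, takes the G-set and S-set to be the maximally general and maximally specific members of $V$, and tests both properties from the slide. Because it enumerates the whole space, it illustrates the representation rather than an efficient boundary-update algorithm. All names are hypothetical.

```python
from itertools import product

ANY = "?"        # wildcard: attribute unconstrained
FALSE = "FALSE"  # the hypothesis that covers no example

# Assumed hypothesis space: conjunctions over (Fri, Hun, Pat), plus FALSE.
DOMAINS = ((True, False), (True, False), ("None", "Some", "Full"))
HYPOTHESES = list(product(*(dom + (ANY,) for dom in DOMAINS))) + [FALSE]

# X1-X3 from the example table, projected onto (Fri, Hun, Pat).
X1 = (False, True, "Some")   # WillWait = T
X2 = (False, True, "Full")   # WillWait = F
X3 = (False, False, "Some")  # WillWait = T


def covers(h, x):
    if h == FALSE:
        return False
    return all(hv == ANY or hv == xv for hv, xv in zip(h, x))


def more_general_or_equal(h1, h2):
    """h1 covers every example h2 covers (exact syntactic test for these conjunctions)."""
    if h2 == FALSE:
        return True
    if h1 == FALSE:
        return False
    return all(a == ANY or a == b for a, b in zip(h1, h2))


def strictly_more_general(h1, h2):
    return h1 != h2 and more_general_or_equal(h1, h2)


def version_space_learning(examples):
    """Filter the full hypothesis space, one (example, label) pair at a time."""
    V = list(HYPOTHESES)
    for x, label in examples:
        if V:
            V = [h for h in V if covers(h, x) == label]
    return V


def boundaries(V):
    """G-set: maximally general members of V; S-set: maximally specific members."""
    G = [h for h in V if not any(strictly_more_general(g, h) for g in V)]
    S = [h for h in V if not any(strictly_more_general(h, s) for s in V)]
    return G, S


def boundaries_represent(V, G, S):
    """Both properties: every h in V lies between G and S, and everything between is in V."""
    def between(h):
        return (any(more_general_or_equal(g, h) for g in G)
                and any(more_general_or_equal(h, s) for s in S))
    return all(between(h) for h in V) and all(h in V for h in HYPOTHESES if between(h))


print(boundaries(version_space_learning([])))
# ([('?', '?', '?')], ['FALSE'])
V = version_space_learning([(X1, True), (X2, False), (X3, True)])
print(boundaries(V))
# ([('?', '?', 'Some')], [(False, '?', 'Some')])
print(boundaries_represent(V, *boundaries(V)))
# True
```

With no examples the boundaries are G = {True} (all wildcards) and S = {False}, as on the slide. After X1 to X3 only two hypotheses remain: Pat = Some forms the G-set and Fri = F ∧ Pat = Some forms the S-set, so the two boundary hypotheses describe the whole version space.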
