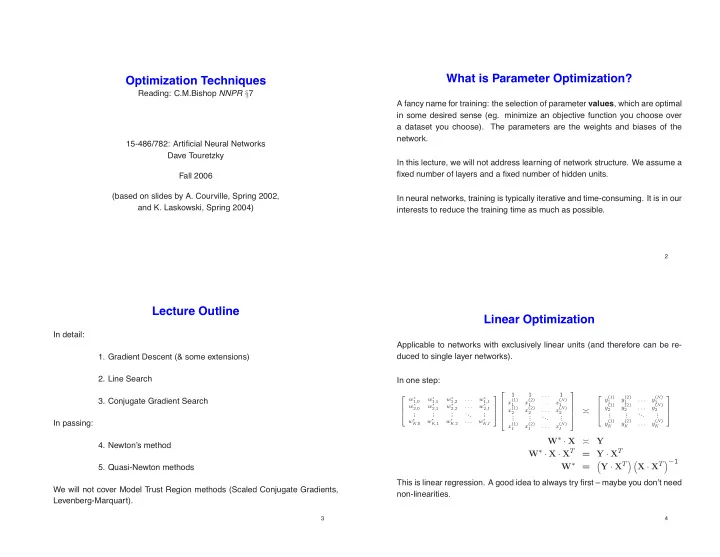

What is Parameter Optimization? Optimization Techniques Reading: C.M.Bishop NNPR § 7 A fancy name for training: the selection of parameter values , which are optimal in some desired sense (eg. minimize an objective function you choose over a dataset you choose). The parameters are the weights and biases of the network. 15-486/782: Arti fi cial Neural Networks Dave Touretzky In this lecture, we will not address learning of network structure. We assume a fi xed number of layers and a fi xed number of hidden units. Fall 2006 (based on slides by A. Courville, Spring 2002, In neural networks, training is typically iterative and time-consuming. It is in our and K. Laskowski, Spring 2004) interests to reduce the training time as much as possible. 2 Lecture Outline Linear Optimization In detail: Applicable to networks with exclusively linear units (and therefore can be re- duced to single layer networks). 1. Gradient Descent (& some extensions) 2. Line Search In one step: 1 1 . . . 1 y (1) y (2) y ( N ) w ∗ w ∗ w ∗ w ∗ . . . . . . 3. Conjugate Gradient Search x (1) x (2) x ( N ) 1 , 0 1 , 1 1 , 2 1 ,I . . . 1 1 1 w ∗ w ∗ w ∗ . . . w ∗ 1 1 1 y (1) y (2) y ( N ) . . . x (1) x (2) x ( N ) 2 , 0 2 , 1 2 , 2 2 ,I . . . ≍ 2 2 2 . . . ... . . . . 2 2 2 ... . . . . . . . . . . . . . . ... . . . . . . . . . w ∗ w ∗ w ∗ w ∗ . . . In passing: y (1) y (2) y ( N ) . . . K, 0 K, 1 K, 2 K,I x (1) x (2) x ( N ) . . . K K K I I I W ∗ · X ≍ Y 4. Newton’s method W ∗ · X · X T = Y · X T � Y · X T � � X · X T � − 1 W ∗ = 5. Quasi-Newton methods This is linear regression. A good idea to always try fi rst – maybe you don’t need We will not cover Model Trust Region methods (Scaled Conjugate Gradients, non-linearities. Levenberg-Marquart). 3 4

� � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � Non-linear Optimization Try It In Matlab >> W = [1 2 3]; Given a fi xed neural network architecture with non-linearities, we seek iterative algorithms which implement a search in parameter space: >> x = [ ones(1,50); rand(2,50)]; >> y = W*x; = w ( τ ) + ∆ w ( τ ) w ( τ +1) ℜ W w ∈ >> W_star = (y*x’) * inv(x*x’) W_star = At each timestep τ , ∆ w ( τ ) is chosen to reduce an objective (error) function 1.0000 2.0000 3.0000 E ( { x , t } ; w ) . For example, for a network with K linear output units, the appro- priate choice is the sum-of-squares error: >> W_hat = y * pinv(x) N K 1 � � � y k ( x n ; w ) − t n � 2 W_hat = E = k 2 n =1 k =1 where N is the number of patterns. 1.0000 2.0000 3.0000 5 6 Approximating Error Surface Behaviour The Parameter Space Holding the dataset { x , t } fi xed, consider a second order Taylor series expan- ���� ���� ���� ���� ���� ���� w (2) w (2) w (2) · · · 0 , 1 1 , 1 J, 1 y 1 y k y K sion of E ( w ) about a point w 0 : · · · · · · . . ... . = � ������������������������������� � ���������������������������������������������� � ������������������������������� W 2 . . . � � � � � ����������������� � � � ����������������� � � � � � � ����������������� . . . � � ������������ � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � w (2) w (2) w (2) � � � � � � � � � � � � � � � � � � � · · · � � � � � � � � � � � � � � � � � � � � � � E ( w 0 ) + ( w − w 0 ) T b + 1 � � � � � � � � � � � � � � � � � � � � 0 ,K 1 ,K J,K � � � � � � � � � � � � � � � � � � � � � � 2( w − w 0 ) T H ( w − w 0 ) � � � � � � � � � � � � � � � � � E ( w ) = (1) � � � � � � � � � � � � � � � � � � � � ℜ ( K,J +1) ���� ���� � ���� ���� � � � � ���� ���� � ���� ���� � � ���� ���� ���� ���� � � � � � � � � � � � � � � � � � � � � � ∈ � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � z 1 � z 2 � z 3 z 4 z j z J 1 � · · · · · · � ���������������������������������������������� � ������������������������������� � ��������������������������������������� � ������������������������������� � � � � � � ����������������� � ������������������������ � ������������������ � ������������������������ � � � � � � � � ������������ � ������������ � � ������������ � � � � ������������ � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � where b is the gradient of E | w 0 and H is the Hessian of E | w 0 : � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � w (1) w (1) w (1) � � � � � � � � � � � � � � � � � � � � � � � � � � · · · � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � 0 , 1 1 , 1 I, 1 � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � . . ... . � � � � � � � � � � � � � � W 1 = � ���� ���� � � � ���� ���� � � � � � � � ���� ���� � � ���� ���� � . . . � � � � � � � � � � � . . . � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � � x 1 � x 2 � · · · x i · · · x I w (1) w (1) w (1) 1 b ≡ ∇ E | w 0 ∇ 2 E | w 0 · · · H ≡ 0 ,J 1 ,J I,J ℜ ( J,I +1) ∂ E ∂ 2 E ∂ 2 E ∈ · · · ∂ w 1 ∂ w 2 ∂ w 1 ∂ w W . = . 1 . . ... . = . . . . ∂ E ∂ 2 E ∂ 2 E Want to think of (and operate on) weight matrices as a single vector: ∂ w W w 0 · · · ∂ w 2 ∂ w W ∂ w 1 w 0 W w = mapping ( W 1 , W 2 ) In a similar way, we can de fi ne a fi rst order approximation to the gradient: ℜ W , ∈ W = J ( I + 1) + K ( J + 1) ∇ E | w = b + H ( w − w 0 ) (2) Doesn’t matter what mapping is, as long as we can reverse it when necessary. 7 8

Recommend

More recommend