Verifying Electronic Voting Protocols in the Applied Pi Calculus
Mark Ryan, University of Birmingham
Joint work with Stéphanie Delaune and Steve Kremer, LSV Cachan, France
SoCS Seminar, November 2007

Outline

Electronic voting

Electronic voting has the potential to provide:
- more efficient elections with higher voter participation, greater accuracy and lower costs compared to manual methods;
- better security than manual methods, such as
  - vote-privacy, even in the presence of corrupt election authorities;
  - voter verification, i.e. the ability of voters and observers to check the declared outcome against the votes cast.

Governments the world over have been trialling e-voting, e.g. USA, UK, Canada, Brazil, the Netherlands and Estonia.

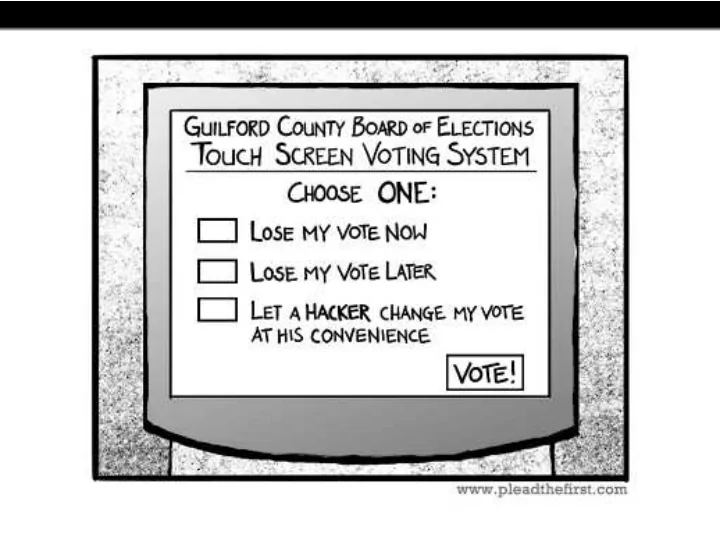

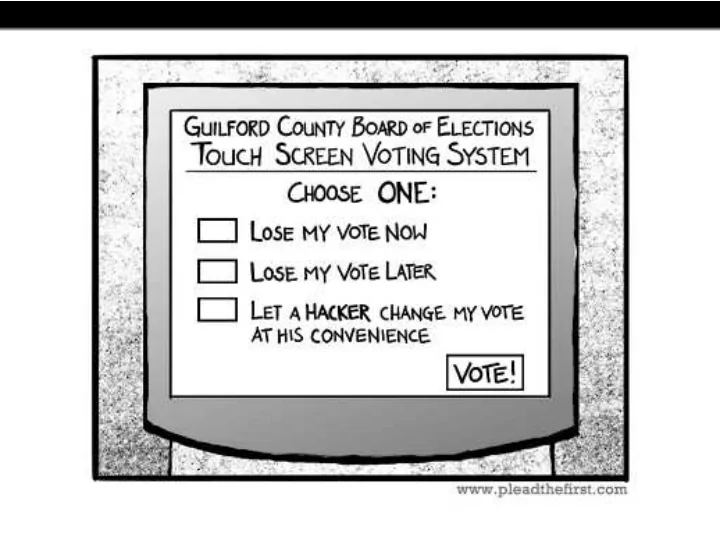

Current situation

The potential benefits have turned out to be hard to realise.

In the UK: the May 2007 elections included 5 local authorities that piloted a range of electronic voting machines.
- The Electoral Commission report concluded that the implementation and security risk was significant and unacceptable, and recommended that no further e-voting take place until a sufficiently secure and transparent system is available.

In the USA: Diebold controversy since 2003, when code leaked on the internet.
- The Kohno/Stubblefield/Rubin/Wallach analysis concluded that the Diebold system is far below even the most minimal security standards.
- Voters without insider privileges can cast unlimited votes without being detected.

Current situation in USA, continued

In 2007, the Secretary of State for California commissioned a “top-to-bottom” review, by computer science academics, of the four machines certified for use in the state. The result is a catalogue of vulnerabilities, including:
- appalling software engineering practices, such as hardcoding crypto keys in source code; bypassing OS protection mechanisms, . . .
- susceptibility of voting machines to viruses that propagate from machine to machine, and that could maliciously cause votes to be recorded incorrectly or miscounted;
- “weakness-in-depth”: architecturally unsound systems in which, even as known flaws are fixed, new ones are discovered.

In response to these reports, she decertified all four types of voting machine for regular use in California, on 3 August 2007.

Voting system: desired properties

- Eligibility: only legitimate voters can vote, and only once. (This also implies that the voting authorities cannot insert votes.)
- Fairness: no early results can be obtained which could influence the remaining voters.
- Privacy: the fact that a particular voter voted in a particular way is not revealed to anyone.
- Receipt-freeness: a voter cannot later prove to a coercer that she voted in a certain way.
- Coercion-resistance: a voter cannot interactively cooperate with a coercer to prove that she voted in a certain way.
- Individual verifiability: a voter can verify that her vote was really counted.
- Universal verifiability: a voter can verify that the published outcome really is the sum of all the votes.

. . . and all this even in the presence of corrupt election authorities!

Are these properties even simultaneously satisfiable?

Contradiction?

- Eligibility: only legitimate voters can vote, and only once.
- Effectiveness: the number of votes for each candidate is published after the election.
- Privacy: the fact that a particular voter voted in a particular way is not revealed to anyone (not even the election authorities).
- Receipt-freeness: a voter cannot later prove to a coercer that she voted in a certain way.
- Individual verifiability: a voter can verify that her vote was really counted.
- Individual verifiability (stronger): . . . , and if her vote wasn’t counted, she can prove that.

How could it be secure?

Security by trusted client software

[Figure: two components connected by arrows]
- First component: trusted by user; does not need to be trusted by authorities or other voters.
- Second component: not trusted by user; doesn’t need to be trusted by anyone.

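FOO 92 protocol [FujiokaOkamotoOhta92]

Participants: Alice, aDministrator, Collector. $\{m\}_{X^{-1}}$ denotes $m$ signed by $X$.

Phase I
- Alice → Administrator: $\{\mathrm{blind}(\mathrm{commit}(v,c),b)\}_{A^{-1}}$
- Administrator → Alice: $\{\mathrm{blind}(\mathrm{commit}(v,c),b)\}_{D^{-1}}$
- Alice: $\mathrm{unblind}(\ldots) = \{\mathrm{commit}(v,c)\}_{D^{-1}}$

Phase II
- Alice → Collector: $\{\mathrm{commit}(v,c)\}_{D^{-1}}$
- Collector: publ. $(l, \mathrm{commit}(v,c))$

Phase III
- Alice → Collector: $(l, c)$
- Collector: $\mathrm{open}(\ldots) = v$; publ. $v$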

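LBDKYY’03 protocol [LeeBoydDawsonKimYangYoo]

Participants: Alice, Administrator, Collector. Messages, in order:
1. Alice → Administrator: $\mathrm{Sign}_{Alice}(\{v\}^{c_1}_{Coll})$
2. Administrator (after reencrypt) → Alice: $\mathrm{Sign}_{Admin}(\{v\}^{c_2}_{Coll})$, $\mathrm{DVP}(\{v\}^{c_1}_{Coll} = \{v\}^{c_2}_{Coll})$
3. To the Collector: $\mathrm{Sign}_{Admin}(\{v\}^{c_2}_{Coll})$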

Attacker model

Ideally, we want to model a very powerful attacker:
- It has “Dolev-Yao” capabilities, i.e.
  - it completely controls the communication channels, so it is able to record, alter, delete, insert, redirect, reorder, and reuse past or current messages, and inject new messages (the network is the attacker);
  - it can manipulate data in arbitrary ways, including applying crypto operations, provided it has the necessary keys.
- It includes the election authorities.
- It includes the other voters.
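The "provided it has the necessary keys" clause can be illustrated with a small sketch. The term encoding below (nested tuples, a `pair` constructor, and the function name `analyze`) is a hypothetical choice made for this illustration and is not part of the talk. It covers only the decomposition half of a Dolev-Yao attacker: starting from observed messages, it repeatedly splits pairs and decrypts ciphertexts whose key is already known.

```python
# Terms are nested tuples ("f", arg1, ...); atoms are strings.
# Assumed constructors for this sketch: ("pair", a, b), ("encrypt", m, ("pk", k)).

def analyze(knowledge):
    """Close a set of terms under pair projection and decryption with known keys."""
    known = set(knowledge)
    changed = True
    while changed:
        changed = False
        for t in list(known):
            derived = []
            if isinstance(t, tuple) and t[0] == "pair":
                # The attacker can split any pair it has seen.
                derived = [t[1], t[2]]
            elif (isinstance(t, tuple) and t[0] == "encrypt"
                  and isinstance(t[2], tuple) and t[2][0] == "pk"
                  and t[2][1] in known):
                # Decryption works only if the matching secret key is known.
                derived = [t[1]]
            for d in derived:
                if d not in known:
                    known.add(d)
                    changed = True
    return known


ciphertext = ("encrypt", "vote", ("pk", "k"))
print("vote" in analyze({ciphertext}))        # attacker lacks k
print("vote" in analyze({ciphertext, "k"}))   # attacker knows k
```

A full Dolev-Yao model also lets the attacker build new messages (pairing, encrypting, signing with known keys); that synthesis step is left out here to keep the sketch short.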

Where are we?

The applied π-calculus

Applied pi-calculus: [Abadi & Fournet, 01]
- basic programming language with constructs for concurrency and communication
- based on the π-calculus [Milner et al., 92]
- in some ways similar to the spi-calculus [Abadi & Gordon, 98], but more general w.r.t. cryptography

Advantages:
- naturally models a Dolev-Yao attacker
- allows us to model less classical cryptographic primitives
- both reachability and equivalence-based specification of properties
- automated proofs using the ProVerif tool [Blanchet]
- powerful proof techniques for hand proofs
- successfully used to analyze a variety of security protocols



How to verify protocols in the applied-pi framework

1. Create equations to model the cryptography.

Examples:

(1) Encryption and signatures

    decrypt( encrypt(m,pk(k)), k ) = m
    checksign( sign(m,k), m, pk(k) ) = ok

(2) Blind signatures

    unblind( sign( blind(m,r), sk ), r ) = sign(m,sk)

(3) Designated verifier proof of re-encryption

The term dvp(x,rencrypt(x,r),r,pkv) represents a proof, designated for the owner of pkv, that x and rencrypt(x,r) have the same plaintext.

    checkdvp( dvp(x,rencrypt(x,r),r,pkv), x, rencrypt(x,r), pkv ) = ok
    checkdvp( dvp(x,y,z,skv), x, y, pk(skv) ) = ok
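To make the equations above concrete, here is a minimal Python sketch that treats them as left-to-right rewrite rules on symbolic terms. The tuple encoding and the "stuck term" convention for non-matching inputs are assumptions of this sketch; ProVerif uses its own syntax and a more general treatment of equational theories.

```python
# Terms are nested tuples ("f", arg1, ...); atoms are strings.

def pk(k): return ("pk", k)
def encrypt(m, pub): return ("encrypt", m, pub)
def sign(m, k): return ("sign", m, k)
def blind(m, r): return ("blind", m, r)
def rencrypt(x, r): return ("rencrypt", x, r)
def dvp(x, y, z, key): return ("dvp", x, y, z, key)

def is_app(t, f):
    """True if t is an application of the function symbol f."""
    return isinstance(t, tuple) and t[0] == f

def decrypt(c, k):
    # decrypt(encrypt(m, pk(k)), k) = m
    if is_app(c, "encrypt") and c[2] == pk(k):
        return c[1]
    return ("decrypt", c, k)            # no equation applies: stuck term

def checksign(s, m, pub):
    # checksign(sign(m, k), m, pk(k)) = ok
    if is_app(s, "sign") and s[1] == m and pub == pk(s[2]):
        return "ok"
    return ("checksign", s, m, pub)

def unblind(s, r):
    # unblind(sign(blind(m, r), sk), r) = sign(m, sk)
    if is_app(s, "sign") and is_app(s[1], "blind") and s[1][2] == r:
        return sign(s[1][1], s[2])
    return ("unblind", s, r)

def checkdvp(p, x, y, pkv):
    if is_app(p, "dvp") and p[1] == x and p[2] == y:
        # Genuine proof: y really is rencrypt(x, r), designated for pkv.
        if y == rencrypt(x, p[3]) and p[4] == pkv:
            return "ok"
        # Second equation: the owner of skv can produce a proof for any x, y.
        if pkv == pk(p[4]):
            return "ok"
    return ("checkdvp", p, x, y, pkv)


signed = unblind(sign(blind("m", "r"), "sk"), "r")
print(signed == sign("m", "sk"))
print(checksign(signed, "m", pk("sk")))
```

The second `checkdvp` case mirrors the second equation: a proof built with the designated verifier's own secret key also checks, which is what makes the proof unconvincing to anyone other than the owner of pkv.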

How to verify protocols in the applied-pi framework

1. Create equations to model the cryptography.
2. For each property to be verified, decide who
   - is protected, i.e. for whom the property will be verified;
   - must be honest in order to guarantee the property;
   - may be dishonest, i.e. may be controlled by the DY attacker.

Examples:

| Protocol | Property | Protected | Must be honest | May be dishonest |
|---|---|---|---|---|
| FOO | eligibility | voters | other voters | admin, collector |
| FOO | fairness | voters | other voters | admin, collector |
| FOO | privacy | voters | same voters | admin, collector |
| Lee et al. | privacy | voters | same voters, admin | collector |
| Lee et al. | receipt-freeness | voters | same voters, admin, collector | other voters |

How to verify protocols in the applied-pi framework

1. Create equations to model the cryptography.
2. For each property to be verified, decide who is protected / must be honest / may be dishonest.
3. Code the honest parties as processes.

Example ([FOO’92]), the voter process, shown on the slide next to the protocol diagram:

    processV =
      new b; new c;
      let bcv = blind(commit(v,c),b) in
      out(ch, (sign(bcv, skv)));
      in(ch,m2);
      if getMess(m2,pka)=bcv then
      let scv = unblind(m2,b) in
      phase 1;
      out(ch, scv);
      in(ch,(l, =scv));
      phase 2;
      out(ch,(l,c)).
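The voter process can be traced by hand with a small symbolic simulation. The Python sketch below plays one honest run of the three phases, with an honest administrator and collector inlined as plain code. Two equations it relies on are assumptions, since the slides use `getMess` and `open` without stating them: `getMess(sign(m,k), pk(k)) = m` and `open(commit(v,c), c) = v`. The function names `get_mess`, `open_commit` and `foo_run` are likewise hypothetical.

```python
# Terms are nested tuples ("f", arg1, ...); atoms are strings.

def pk(k): return ("pk", k)
def sign(m, k): return ("sign", m, k)
def blind(m, r): return ("blind", m, r)
def commit(v, c): return ("commit", v, c)

def is_app(t, f):
    return isinstance(t, tuple) and t[0] == f

def unblind(s, r):
    # unblind(sign(blind(m, r), sk), r) = sign(m, sk)
    if is_app(s, "sign") and is_app(s[1], "blind") and s[1][2] == r:
        return sign(s[1][1], s[2])
    return None

def get_mess(s, pub):
    # Assumed equation: getMess(sign(m, k), pk(k)) = m
    if is_app(s, "sign") and pub == pk(s[2]):
        return s[1]
    return None

def open_commit(x, c):
    # Assumed equation: open(commit(v, c), c) = v
    if is_app(x, "commit") and x[2] == c:
        return x[1]
    return None

def foo_run(v, skv="skv", ska="ska"):
    """One honest run; returns the vote the collector publishes, or None."""
    b, c = "b", "c"                       # new b; new c
    bcv = blind(commit(v, c), b)
    msg1 = sign(bcv, skv)                 # voter -> administrator
    if get_mess(msg1, pk(skv)) != bcv:    # administrator checks the voter's signature
        return None
    m2 = sign(bcv, ska)                   # administrator -> voter
    if get_mess(m2, pk(ska)) != bcv:      # voter checks the administrator's signature
        return None
    scv = unblind(m2, b)
    # phase 1: voter sends scv; collector publishes it under a label l
    l = 1
    board = {l: get_mess(scv, pk(ska))}   # the signed commitment, commit(v, c)
    # phase 2: voter sends (l, c); collector opens the commitment
    return open_commit(board[l], c)


print(foo_run("yes"))
```

This only exercises the honest path. It says nothing about security: the point of the applied-pi model is that ProVerif explores all behaviours of the attacker, which a single simulated run does not.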

How to verify protocols in the applied-pi framework

1. Create equations to model the cryptography.
2. For each property to be verified, decide who is protected / must be honest / may be dishonest.
3. Code the honest parties as processes.
4. Code the intended property, as a reachability property or an observational equivalence property.

Examples:

| Property | Type | Intuition |
|---|---|---|
| Eligibility | reachab. | ineligible vote not published |
| Fairness | reachab. | without last phase, no votes published |
| Privacy | obs. eq. | undetectable whether A,B swap votes |
| Receipt-freeness | obs. eq. | even if A cooperates with attacker, undetectable whether A,B swap votes |
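The "swap" intuition behind the privacy property can be shown with a toy comparison. This is a deliberately crude stand-in for observational equivalence: it compares only the final published board of two hypothetical publication schemes (`naive_board` and `anonymous_board`, both invented for this sketch), whereas the real definition quantifies over every interaction the attacker can have with the protocol.

```python
def naive_board(votes):
    """Hypothetical scheme that publishes each voter's name next to their vote."""
    return sorted(votes.items())

def anonymous_board(votes):
    """Hypothetical scheme that publishes only the votes, in a canonical order."""
    return sorted(votes.values())

def swap_indistinguishable(publish, va, vb):
    """Does the published board look the same whether A,B vote (va,vb) or (vb,va)?"""
    return publish({"A": va, "B": vb}) == publish({"A": vb, "B": va})


print(swap_indistinguishable(naive_board, "yes", "no"))
print(swap_indistinguishable(anonymous_board, "yes", "no"))
```

The first scheme lets an observer tell the two situations apart, so it fails the swap test; the second does not. In the applied pi calculus the same idea is stated as an equivalence between two processes that differ only in which of A and B casts which vote.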
