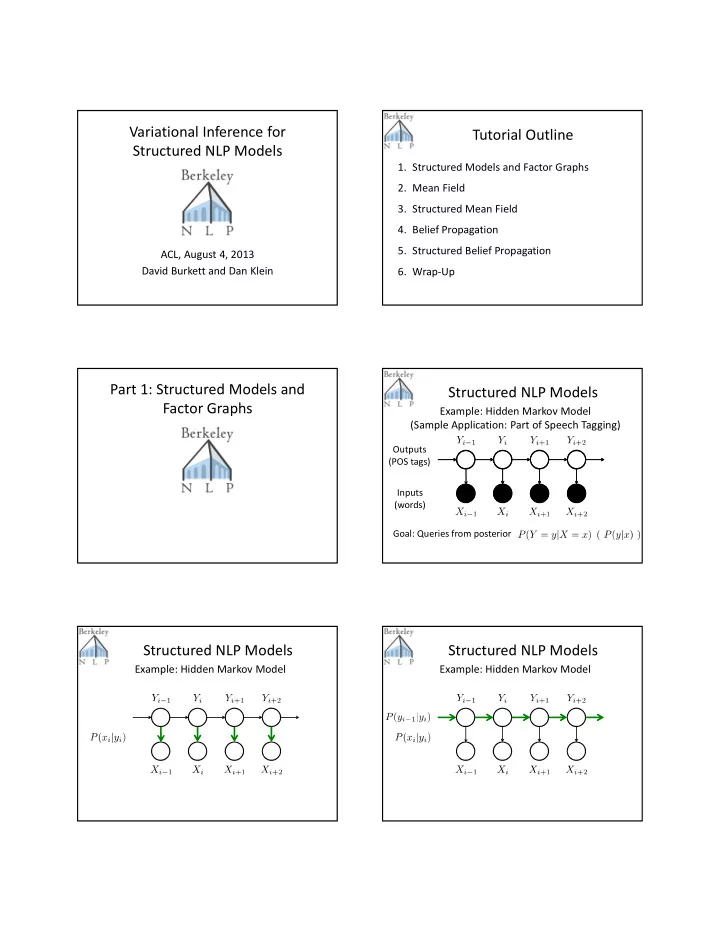

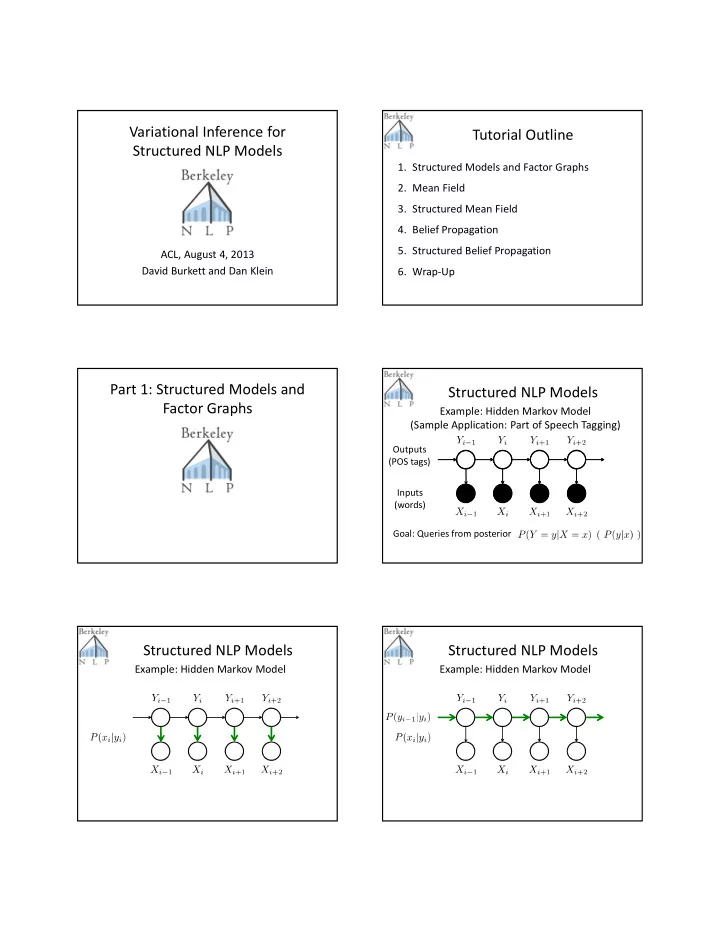

Variational Inference for Structured NLP Models
David Burkett and Dan Klein
ACL, August 4, 2013

Tutorial Outline
1. Structured Models and Factor Graphs
2. Mean Field
3. Structured Mean Field
4. Belief Propagation
5. Structured Belief Propagation
6. Wrap-Up

Part 1: Structured Models and Factor Graphs

Structured NLP Models
Example: Hidden Markov Model (Sample Application: Part of Speech Tagging)
Inputs (words)
Outputs (POS tags)
Goal: Queries from posterior

Factor Graph Notation
Variables Y_i
Factors: Unary Factor, Binary Factor
Cliques

Factor Graph Notation
Variables Y_i
Factors
Cliques
Variables have factor (clique) neighbors; factors have variable neighbors.

Structured NLP Models
Example: Conditional Random Field (Sample Application: Named Entity Recognition) (Lafferty et al., 2001)

Structured NLP Models
Example: Edge-Factored Dependency Parsing (McDonald et al., 2005)
[Figure: grid of edge variables over "the cat ate the rat", each labeled L or O]
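The neighbor relations in the factor graph notation can be made concrete with a small data structure. The following is a minimal sketch, not the tutorial's own code: the class name `FactorGraph`, its method names, and the factor names such as `unary_0` are all hypothetical, chosen only to illustrate how each variable keeps a list of the factors that touch it and each factor keeps the variables in its clique.

```python
from collections import defaultdict


class FactorGraph:
    """Minimal factor graph: stores which variables each factor touches."""

    def __init__(self):
        self.factor_vars = {}                  # factor name -> tuple of variable names
        self.var_factors = defaultdict(list)   # variable name -> list of factor names

    def add_factor(self, name, variables):
        self.factor_vars[name] = tuple(variables)
        for v in variables:
            self.var_factors[v].append(name)

    def factor_neighbors(self, variable):
        """Factors (cliques) that touch the given variable."""
        return list(self.var_factors[variable])

    def variable_neighbors(self, factor):
        """Variables that belong to the given factor's clique."""
        return list(self.factor_vars[factor])


# A chain over three tag variables, shaped like an HMM or linear-chain CRF:
# one unary factor per variable and one binary factor per adjacent pair.
g = FactorGraph()
for i in range(3):
    g.add_factor(f"unary_{i}", [f"Y{i}"])
for i in range(2):
    g.add_factor(f"binary_{i}_{i + 1}", [f"Y{i}", f"Y{i + 1}"])

print(g.factor_neighbors("Y1"))
print(g.variable_neighbors("binary_0_1"))
```

The middle variable `Y1` has three factor neighbors (its unary factor and the two binary factors on either side), while each binary factor has exactly two variable neighbors.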

Structured NLP Models
Example: Edge-Factored Dependency Parsing
[Figure: grid of edge variables over "the cat ate the rat", each labeled L, R, or O]

Inference
Input: Factor Graph
Output: Marginals

Inference
Typical NLP Approach: Dynamic Programs!
Examples:
Sequence Models (Forward/Backward)
Phrase Structure Parsing (CKY, Inside/Outside)
Dependency Parsing (Eisner algorithm)
ITG Parsing (Bitext Inside/Outside)

Complex Structured Models
Joint POS Tagging and Named Entity Recognition (Sutton et al., 2004)
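As an illustration of the "factor graph in, marginals out" view for a sequence model, here is a minimal forward-backward sketch. The function names, the representation of the chain as `unary[i][s]` and a shared `pair[s][t]` table, and the example scores are all assumptions made for this sketch. It works with raw (unscaled) scores, so it is only suitable for short chains, and it is checked against brute-force enumeration.

```python
from itertools import product


def chain_marginals(unary, pair):
    """Forward-backward on a chain with scores unary[i][s] and pair[s][t]."""
    n, k = len(unary), len(unary[0])
    fwd = [[0.0] * k for _ in range(n)]
    bwd = [[1.0] * k for _ in range(n)]
    fwd[0] = list(unary[0])
    for i in range(1, n):
        for t in range(k):
            fwd[i][t] = unary[i][t] * sum(fwd[i - 1][s] * pair[s][t] for s in range(k))
    for i in range(n - 2, -1, -1):
        for s in range(k):
            bwd[i][s] = sum(pair[s][t] * unary[i + 1][t] * bwd[i + 1][t] for t in range(k))
    z = sum(fwd[n - 1])  # partition function
    return [[fwd[i][s] * bwd[i][s] / z for s in range(k)] for i in range(n)]


def brute_force_marginals(unary, pair):
    """Same marginals by summing over every assignment (exponential time)."""
    n, k = len(unary), len(unary[0])
    marg = [[0.0] * k for _ in range(n)]
    z = 0.0
    for y in product(range(k), repeat=n):
        score = 1.0
        for i in range(n):
            score *= unary[i][y[i]]
            if i > 0:
                score *= pair[y[i - 1]][y[i]]
        z += score
        for i in range(n):
            marg[i][y[i]] += score
    return [[m / z for m in row] for row in marg]


# Hypothetical scores for a 4-position chain with 2 states per position.
unary = [[1.0, 2.0], [3.0, 1.0], [1.0, 1.0], [2.0, 5.0]]
pair = [[4.0, 1.0], [1.0, 3.0]]
fast = chain_marginals(unary, pair)
slow = brute_force_marginals(unary, pair)
for f_row, s_row in zip(fast, slow):
    print([round(p, 4) for p in f_row], [round(p, 4) for p in s_row])
```

The dynamic program touches each position once, while the brute-force version enumerates every assignment; the two agree because the chain has no cycles.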

Complex Structured Models
Dependency Parsing with Second Order Features (McDonald & Pereira, 2006) (Carreras, 2007)

Complex Structured Models
Word Alignment (Taskar et al., 2005)
[Figure: alignment grid between "vi el gato frío" and "I saw the cold cat"]

Variational Inference
Approximate inference techniques that can be applied to any graphical model
This tutorial:
Mean Field: Approximate the joint distribution with a product of marginals
Belief Propagation: Apply tree inference algorithms even if your graph isn’t a tree
Structure: What changes when your factor graph has tractable substructures

Part 2: Mean Field

Mean Field Warmup
Wanted:
Idea: coordinate ascent
Key object: assignments
Iterated Conditional Modes (Besag, 1986)

Mean Field Warmup
Wanted:
Approximate Result:

Iterated Conditional Modes
Example
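Iterated Conditional Modes can be sketched as coordinate ascent over hard assignments: each variable in turn is set to its best value with every other variable held fixed. The function name `icm`, its signature, and the toy model are assumptions for illustration. The 2x2 score table reuses the numbers 9, 1, 1, 5 that appear in a later mean field example; pairing that table with ICM is an assumption made here, not something taken from the slides.

```python
def icm(score, domains, init, max_sweeps=100):
    """Iterated Conditional Modes: coordinate ascent over hard assignments.

    score(y) returns an unnormalized score for a full assignment y (a list);
    domains[i] lists the values that variable i may take.
    """
    y = list(init)
    for _ in range(max_sweeps):
        changed = False
        for i, values in enumerate(domains):
            # Best value for variable i with all other variables held fixed.
            best = max(values, key=lambda v: score(y[:i] + [v] + y[i + 1:]))
            if score(y[:i] + [best] + y[i + 1:]) > score(y):
                y[i] = best
                changed = True
        if not changed:
            break  # no single-variable change improves the score
    return y


# Hypothetical two-variable model with a single pairwise score table.
table = [[9.0, 1.0], [1.0, 5.0]]


def score(y):
    return table[y[0]][y[1]]


domains = [[0, 1], [0, 1]]
print(icm(score, domains, [0, 0]))  # stays at [0, 0], the global optimum (score 9)
print(icm(score, domains, [1, 1]))  # stays at [1, 1], a local optimum (score 5)
```

Starting from `[1, 1]`, no single-variable change improves the score, so the procedure stops at a local optimum even though `[0, 0]` scores higher. The result depends on initialization.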


Mean Field Intro
Mean Field is coordinate ascent, just like Iterated Conditional Modes, but with soft assignments to each variable!

Mean Field Intro
Wanted:
Idea: coordinate ascent
Key object: (approx) marginals

Mean Field Procedure
Wanted:

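Example Results

Mean Field Derivation
Goal:
Approximation:
Constraint:
Objective:
Procedure: Coordinate ascent on each
What’s the update?

Mean Field Update
1), 2), 3-9) Lots of algebra, 10)

Approximate Expectations
[Figure: factor f and variable Y_i]
General:

General Update *
Exponential Family:
Generic:

Mean Field Inference Example
[Figure: two binary variables with unary factors all 1 and a binary factor with rows (2, 5) and (2, 1). True joint: .2 .5 / .2 .1, with row marginals .7, .3 and column marginals .4, .6. Initial approximate marginals: .5, .5 for both variables.]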
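The formulas on the derivation slides did not survive extraction. For orientation, the standard textbook form of naive mean field is sketched below. The notation is an assumption and may differ from the slides: $\tilde{p}$ is the unnormalized model score, $q_{-i}$ is the product of all approximate marginals except $q_i$, and $N(i)$ is the set of factors neighboring variable $i$.

```latex
\begin{align*}
\text{Goal:} \quad & p(\mathbf{y} \mid \mathbf{x}) \\
\text{Approximation:} \quad & q(\mathbf{y}) = \prod_i q_i(y_i) \\
\text{Constraint:} \quad & q_i(y_i) \ge 0, \qquad \sum_{y_i} q_i(y_i) = 1 \\
\text{Objective:} \quad & \min_q \; \mathrm{KL}(q \,\|\, p)
  = \min_q \sum_{\mathbf{y}} q(\mathbf{y}) \log \frac{q(\mathbf{y})}{p(\mathbf{y} \mid \mathbf{x})} \\
\text{Update:} \quad & q_i(y_i) \propto
  \exp\Big( \mathbb{E}_{q_{-i}} \big[ \log \tilde{p}(y_i, \mathbf{y}_{-i} \mid \mathbf{x}) \big] \Big)
  \propto \exp\Big( \sum_{f \in N(i)} \mathbb{E}_{q_{-i}} \big[ \log \phi_f(\mathbf{y}_f) \big] \Big)
\end{align*}
```

Each update holds every other $q_j$ fixed and solves for $q_i$ exactly, which is why the procedure is coordinate ascent and why only factors touching $Y_i$ enter the update.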

Mean Field Inference Example
[Figure: successive coordinate updates of the approximate marginals for the model with binary factor rows (2, 5) and (2, 1); true row marginals .7, .3 and column marginals .4, .6.
Row variable updated: .69, .31
Column variable updated: .40, .60
Row variable updated: .73, .27
Column variable updated: .38, .62
Resulting approximate joint: .28 .45 / .10 .17, versus true joint .2 .5 / .2 .1]
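The numbers in this example can be reproduced with a few lines of code. The sketch below assumes the reading of the figure in which the two variables share a single binary factor with rows (2, 5) and (2, 1), all unary factors equal 1, and the column variable starts at (.5, .5). The function name `mean_field_2var` is hypothetical.

```python
import math


def mean_field_2var(phi, q_col, rounds):
    """Naive mean field for two binary variables joined by one pairwise factor.

    phi[a][b] is the factor value for row value a and column value b.
    q_col is the initial approximate marginal of the column variable.
    Returns a list of (q_row, q_col) pairs, one per full round of updates.
    """
    history = []
    for _ in range(rounds):
        # Row update: q_row(a) is proportional to exp(sum_b q_col(b) * log phi[a][b]).
        w = [math.exp(sum(q_col[b] * math.log(phi[a][b]) for b in range(2)))
             for a in range(2)]
        q_row = [x / sum(w) for x in w]
        # Column update, using the freshly updated q_row.
        w = [math.exp(sum(q_row[a] * math.log(phi[a][b]) for a in range(2)))
             for b in range(2)]
        q_col = [x / sum(w) for x in w]
        history.append((q_row, q_col))
    return history


phi = [[2.0, 5.0], [2.0, 1.0]]
history = mean_field_2var(phi, [0.5, 0.5], rounds=2)
for q_row, q_col in history:
    print([round(p, 2) for p in q_row], [round(p, 2) for p in q_col])
```

Two rounds give row marginals of about .69/.31 and then .73/.27, and column marginals of about .40/.60 and then .38/.62, matching the sequence in the example. The approximation overshoots the true row marginal of .7 and undershoots the true column marginal of .4.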

Mean Field Inference Example
[Figure: two further examples. First: unary factors (2, 1) on each variable and a binary factor of all 1s; true marginals .67, .33 with joint .44 .22 / .22 .11, and the approximate marginals and joint are identical. Second: unary factors all 1 and binary factor rows (9, 1) and (1, 5); true marginals .62, .38 with joint .56 .06 / .06 .31, but approximate marginals .82, .18 with joint .67 .15 / .15 .03.]

Mean Field Q&A
Are the marginals guaranteed to converge to the right thing, like in sampling? No
Is the algorithm at least guaranteed to converge to something? Yes
So it’s just like EM? Yes

Why Only Local Optima?!
Variables:
Discrete distributions: e.g. P(0,1,0,…0) = 1
All distributions (all convex combos)
Mean field approximable (can represent all discrete ones, but not all)

Part 3: Structured Mean Field

Mean Field Approximation
Model:
Approximate Graph:

Structured Mean Field Approximation
Model:
Approximate Graph:
(Xing et al., 2003)

Computing Structured Updates
Updating […] consists of computing all marginals […].
Marginal probability of […] under […]: computed with forward-backward.
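To illustrate how a structured update reduces to running forward-backward inside one component, here is a minimal sketch on a hypothetical model: two chains A and B of equal length, each with its own unary and transition scores, coupled position by position through a positive `cross[s][t]` table. The model, all names, and all numbers are assumptions for illustration and are not taken from the slides. Each chain is updated by folding the expected log cross score under the other chain's current marginals into its unary scores.

```python
import math


def chain_marginals(unary, pair):
    """Forward-backward marginals for a chain (raw scores, short chains only)."""
    n, k = len(unary), len(unary[0])
    fwd = [list(unary[0])] + [[0.0] * k for _ in range(n - 1)]
    bwd = [[1.0] * k for _ in range(n)]
    for i in range(1, n):
        for t in range(k):
            fwd[i][t] = unary[i][t] * sum(fwd[i - 1][s] * pair[s][t] for s in range(k))
    for i in range(n - 2, -1, -1):
        for s in range(k):
            bwd[i][s] = sum(pair[s][t] * unary[i + 1][t] * bwd[i + 1][t] for t in range(k))
    z = sum(fwd[n - 1])
    return [[fwd[i][s] * bwd[i][s] / z for s in range(k)] for i in range(n)]


def structured_mean_field(unary_a, pair_a, unary_b, pair_b, cross, rounds=20):
    """Alternate chain-level updates; cross[s][t] couples A_i = s with B_i = t."""
    n, k = len(unary_a), len(unary_a[0])
    mu_b = [[1.0 / k] * k for _ in range(n)]  # start chain B at uniform marginals
    mu_a = None
    for _ in range(rounds):
        # Effective unaries for A: original score times exp(E_{q_B}[log cross]).
        eff_a = [[unary_a[i][s] * math.exp(sum(mu_b[i][t] * math.log(cross[s][t])
                                               for t in range(k)))
                  for s in range(k)] for i in range(n)]
        mu_a = chain_marginals(eff_a, pair_a)
        # Same for B, using the freshly computed marginals of A.
        eff_b = [[unary_b[i][t] * math.exp(sum(mu_a[i][s] * math.log(cross[s][t])
                                               for s in range(k)))
                  for t in range(k)] for i in range(n)]
        mu_b = chain_marginals(eff_b, pair_b)
    return mu_a, mu_b


# Hypothetical scores: two 3-position chains whose states prefer to agree.
unary_a = [[2.0, 1.0], [1.0, 1.0], [1.0, 3.0]]
unary_b = [[1.0, 1.0], [1.0, 2.0], [1.0, 1.0]]
pair_a = [[3.0, 1.0], [1.0, 3.0]]
pair_b = [[2.0, 1.0], [1.0, 2.0]]
cross = [[3.0, 1.0], [1.0, 3.0]]
mu_a, mu_b = structured_mean_field(unary_a, pair_a, unary_b, pair_b, cross)
print([[round(p, 3) for p in row] for row in mu_a])
print([[round(p, 3) for p in row] for row in mu_b])
```

Within each chain the transition structure is handled exactly by the dynamic program; only the cross-chain factors are approximated. When `cross` is all 1s the two chains decouple and the result equals each chain's exact marginals.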

Structured Mean Field Notation
Connected Components
Neighbors:

Structured Mean Field Updates
Naïve Mean Field:
Structured Mean Field:
Expected Feature Counts

Component Factorizability *
Condition
Example Feature

Component Factorizability * (Abridged)
Generic Condition
Use conjunctive indicator features (pointwise product)

Joint Parsing and Alignment (Burkett et al., 2010)
Input: Sentences
[Figure: sentence pair "High levels of product and project" / "高 水平 项目 、 产品"]
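The advice to use conjunctive indicator features can be motivated by a short calculation. The notation below is an assumption, not recovered from the slides: $q = \prod_c q_c$ factorizes over components, and $f$ is a feature that multiplies an indicator on a substructure $a$ of component $c$ with an indicator on a substructure $b$ of a different component $c'$.

```latex
\begin{align*}
f(\mathbf{y}) &= \mathbb{1}[a \in \mathbf{y}_c] \cdot \mathbb{1}[b \in \mathbf{y}_{c'}],
  \qquad c \neq c' \\
\mathbb{E}_q\big[f(\mathbf{y})\big]
  &= \mathbb{E}_{q_c}\big[\mathbb{1}[a \in \mathbf{y}_c]\big] \cdot
     \mathbb{E}_{q_{c'}}\big[\mathbb{1}[b \in \mathbf{y}_{c'}]\big]
   = q_c(a) \, q_{c'}(b)
\end{align*}
```

Because the components are independent under $q$, the expected count of a cross-component feature is a product of per-component marginals, and each of those marginals comes directly from that component's dynamic program.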

Joint Parsing and Alignment
Output: Trees contain Nodes

Joint Parsing and Alignment
Output: Alignments contain Bispans

Joint Parsing and Alignment
Variables

Joint Parsing and Alignment
Factors

Notational Abuse
Subscript Omission:
Shorthand:
Skip Nonexistent Substructures:
Structural factors are implicit

Model Form

Training
Expected Feature Counts
Marginals

Structured Mean Field Approximation

Approximate Component Scores
Monolingual parser: Score for […]
If we knew […]: Score for […]
To compute […]: Score for […]

Expected Feature Counts

Inference Procedure
Initialize:
For fixed […]:
Marginals computed with bitext inside-outside
Marginals computed with inside-outside

Inference Procedure
Iterate marginal updates:
…until convergence!

Approximate Marginals

Decoding
(Minimum Risk)

Structured Mean Field Summary
Split the model into pieces you have dynamic programs for
Substitute expected feature counts for actual counts in cross-component factors
Iterate computing marginals until convergence

Structured Mean Field Tips
Try to make sure cross-component features are products of indicators
You don’t have to run all the way to convergence; marginals are usually pretty good after just a few rounds
Recompute marginals for fast components more frequently than for slow ones, e.g. for joint parsing and alignment, the two monolingual tree marginals were updated until convergence between each update of the ITG marginals

Break Time!
