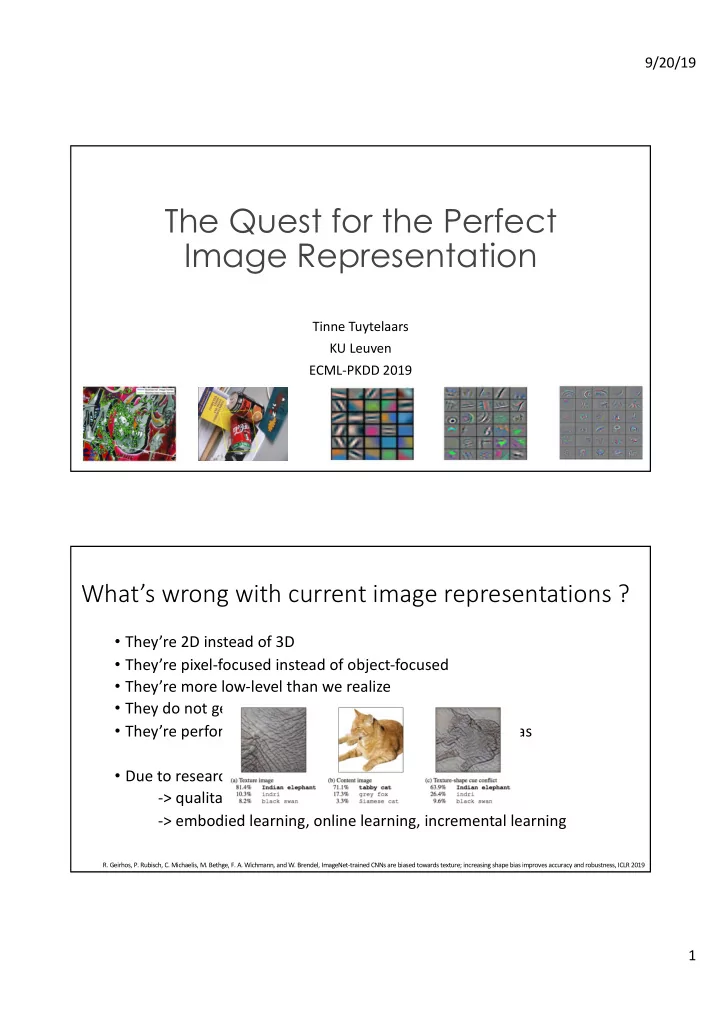

9/20/19

The Quest for the Perfect Image Representation
Tinne Tuytelaars
KU Leuven
ECML-PKDD 2019

What’s wrong with current image representations?
• They’re 2D instead of 3D
• They’re pixel-focused instead of object-focused
• They’re more low-level than we realize
• They do not generalize well
• They’re performant because they (over)exploit dataset bias
• Due to research being too dataset-focused?
-> qualitative evaluation, network interpretation
-> embodied learning, online learning, incremental learning

R. Geirhos, P. Rubisch, C. Michaelis, M. Bethge, F. A. Wichmann, and W. Brendel, ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness, ICLR 2019

Overview
• Network interpretation/visualization (ICLR19)
• Disentangled representations (ICIP19)

Network interpretation
“Interpretability is the degree to which a human can understand the cause of a decision” (Miller, 2017)
• Interpretation = explaining the model, introspection
• Explanation = explaining a specific decision, for a given input

Interpretation: related work
• Visualizing pre-images
But: subjective
• Visualizing node activations
But: too many nodes
• Link to proxy tasks
But: biased

Explanation: related work
• Natural language explanations
But: typically trained using human explanations / descriptions; causal assumption not satisfied
• Heatmaps

Heatmap evaluation
But: bias towards human interpretation
But: subjective; bias towards human interpretation

Tackling some of these challenges
1. Interpretation: too many neurons
2. Explanation: visualizing and evaluating heatmaps

J. Oramas, K. Wang, T. Tuytelaars, Visual Explanation by Interpretation: Improving Visual Feedback Capabilities of Deep Neural Networks, ICLR 2019

1. Interpretation: too many neurons

Identifying relevant features (neurons)

Interpretation visualization: ImageNet
For every identified relevant feature of each class:
- Select top-k images with highest response
- Crop every image based on the receptive field
- Compute average image

Interpretation visualization: Cats
(blurriness and artifacts due to poor alignment of receptive fields)
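The three visualization steps listed for ImageNet (top-k selection, receptive-field crop, averaging) can be sketched as follows. This is a minimal illustration and not the authors' code: the function name `average_top_k_crops`, the `(top, left, size)` box format, and the assumption that all receptive fields are equally sized squares are hypothetical choices made for the example.

```python
import numpy as np

def average_top_k_crops(images, responses, boxes, k=3):
    """Average the receptive-field crops of the k highest-responding images.

    images:    array of shape (N, H, W, C)
    responses: array of shape (N,), response of one relevant feature per image
    boxes:     list of (top, left, size) squares, one per image
               (assumed format; all crops are assumed to share the same size)
    """
    images = np.asarray(images, dtype=np.float64)
    responses = np.asarray(responses)

    # Step 1: indices of the k images with the highest response.
    top_idx = np.argsort(responses)[::-1][:k]

    # Step 2: crop each selected image to its receptive field.
    crops = []
    for i in top_idx:
        top, left, size = boxes[i]
        crops.append(images[i, top:top + size, left:left + size])

    # Step 3: pixel-wise average of the crops.
    return np.mean(crops, axis=0)
```

Because the crops are averaged pixel by pixel without any alignment, features whose receptive fields land on differently posed objects yield a blurry mean image, which is consistent with the remark on the Cats slide.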

Interpretation visualization: Fashion 144k

2. Explanation: visualizing heatmaps

Explanation visualization

Evaluation of methods for interpretability
• Evaluation is problematic
• Interpretability vs. correctness / accuracy
• Interpretability evaluation needs humans in the loop?
“Interpretability is the degree to which a human can understand the cause of a decision” (Miller, 2017)

3. Explanation: evaluation

Generated interpretations

Overview
• Network interpretation/visualization (ICLR19)
• Disentangled representations (ICIP19)

Translating shape, preserving style
L. Bollens, T. Tuytelaars, J. Oramas-Mogrovejo, Towards Object Shape Translation Through Unsupervised Generative Deep Models, ICIP 2019, pp. 4220-4224

Model: step 1: VAE for domain A
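The slide shows the VAE only as a diagram. As a reminder of what a standard VAE optimizes, here is a minimal sketch, assuming a squared-error reconstruction term and a standard-normal prior on the latent code. Neither choice is confirmed by the slides, and the function name `vae_loss` is illustrative only.

```python
import numpy as np

def vae_loss(x, x_recon, mu, log_var):
    """Standard VAE objective: reconstruction error plus KL divergence.

    x, x_recon:  input and its reconstruction (same shape)
    mu, log_var: mean and log-variance of the diagonal Gaussian
                 posterior q(z|x) produced by the encoder
    """
    # Reconstruction term (assumed here: sum of squared errors).
    recon = np.sum((x - x_recon) ** 2)

    # Closed-form KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian.
    kl = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var))

    return recon + kl
```

In a two-domain setup such as the one outlined in steps 1 and 2, one such objective would presumably be used per domain, each with its own encoder and decoder.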

Model: step 2: VAE for domain B

Model: step 3: GAN losses

Model: step 3: Cycle GAN loss

Model: step 3: similarity loss
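The cycle loss in step 3 is only named on the slide. The sketch below shows the usual CycleGAN-style cycle-consistency term (L1 distance between an input and its round-trip translation). It is an assumption that the model uses this exact form, and the translator arguments `a_to_b` and `b_to_a` are hypothetical stand-ins for the encoder/decoder paths between the two domains.

```python
import numpy as np

def cycle_consistency_loss(x_a, x_b, a_to_b, b_to_a):
    """L1 cycle-consistency loss over both translation directions.

    x_a, x_b: batches of samples from domain A and domain B
    a_to_b:   callable translating A -> B (hypothetical placeholder)
    b_to_a:   callable translating B -> A (hypothetical placeholder)
    """
    # A -> B -> A should reproduce the original A sample.
    loss_a = np.mean(np.abs(b_to_a(a_to_b(x_a)) - x_a))

    # B -> A -> B should reproduce the original B sample.
    loss_b = np.mean(np.abs(a_to_b(b_to_a(x_b)) - x_b))

    return loss_a + loss_b
```

The loss is zero only when the two translators invert each other on the given samples, which is what discourages a translator from discarding the appearance of its input.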

Results
(figure; panel label: input)

K. Wang, L. Ma, J. Oramas, L. Van Gool, T. Tuytelaars, Integrated unpaired appearance-preserving shape translation across domains, arXiv preprint arXiv:1812.02134, 2019

Model

Conclusions
• Still lots of room for improvement
• Embodied learning, online learning, incremental learning
• 3D instead of 2D
• Multimodal learning
• Self-supervised learning
• …
