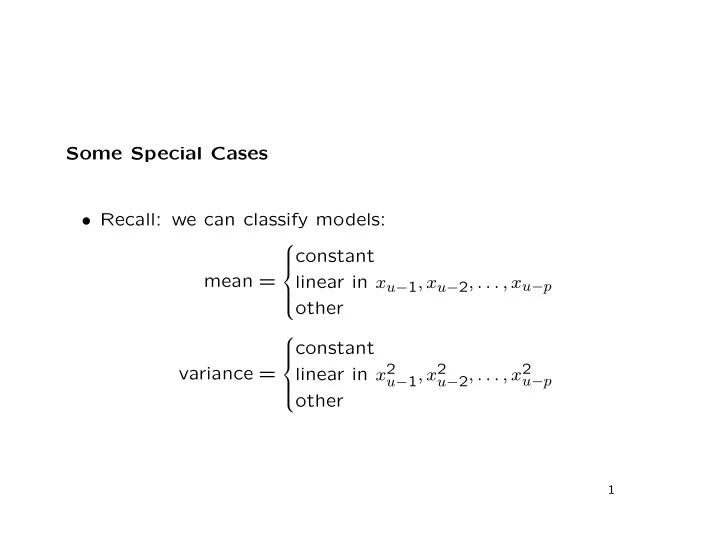

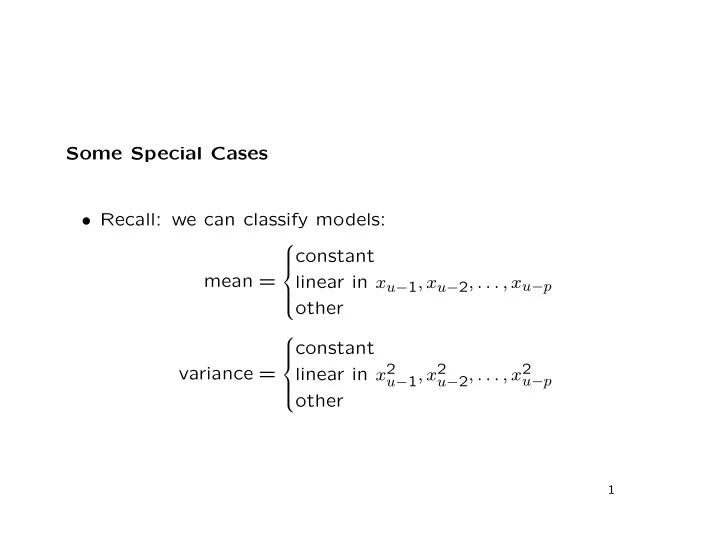

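Some Special Cases

• Recall: we can classify models by the form of the mean and of the variance:

$$
\text{mean} = \begin{cases} \text{constant} \\ \text{linear in } x_{t-1}, x_{t-2}, \dots, x_{t-p} \\ \text{other} \end{cases}
\qquad
\text{variance} = \begin{cases} \text{constant} \\ \text{linear in } x_{t-1}^2, x_{t-2}^2, \dots, x_{t-p}^2 \\ \text{other} \end{cases}
$$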

Autoregressions

• The simplest special case is the autoregression.

• If

$$
\mu_t = \text{linear function of } x_{t-1}, x_{t-2}, \dots, x_{t-p} = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \dots + \phi_p x_{t-p}
$$

and

$$
\sigma_t^2 = \text{constant} = \tau^2,
$$

then $X_t$ is called autoregressive of order $p$ (AR($p$)).

• We usually define

$$
\epsilon_t = X_t - E\left[X_t \mid X_{t-1}, X_{t-2}, \dots\right] = X_t - \left(\phi_1 X_{t-1} + \phi_2 X_{t-2} + \dots + \phi_p X_{t-p}\right).
$$

• We then write the model as

$$
X_t = \phi_1 X_{t-1} + \phi_2 X_{t-2} + \dots + \phi_p X_{t-p} + \epsilon_t,
$$

where

$$
E\left[\epsilon_t \mid X_{t-1}, X_{t-2}, \dots\right] = 0
\quad\text{and}\quad
\operatorname{Var}\left[\epsilon_t \mid X_{t-1}, X_{t-2}, \dots\right] = \tau^2.
$$
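The AR($p$) recursion above can be sketched as a short simulation. This is only an illustration: the function name `simulate_ar`, the Gaussian innovations, and the zero pre-sample values are assumptions made here, not part of the slides.

```python
import random

def simulate_ar(phi, tau, n, seed=0):
    """Simulate X_t = phi_1 X_{t-1} + ... + phi_p X_{t-p} + eps_t.

    Assumptions (not from the slides): eps_t is i.i.d. Gaussian with
    mean 0 and standard deviation tau, and the p pre-sample values are 0.
    """
    rng = random.Random(seed)
    p = len(phi)
    x = [0.0] * p            # pre-sample values X_{-p}, ..., X_{-1}
    eps = []
    for _ in range(n):
        e = rng.gauss(0.0, tau)
        # phi[k] multiplies the value k + 1 steps back
        mean = sum(phi[k] * x[-1 - k] for k in range(p))
        x.append(mean + e)
        eps.append(e)
    return x[p:], eps        # drop the pre-sample zeros

# Example: an AR(2) path with illustrative coefficients
x, eps = simulate_ar([0.5, -0.3], tau=1.0, n=200)
```

Returning the innovations alongside the path makes it easy to check that $X_t$ minus its conditional mean is exactly $\epsilon_t$.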

• If in addition the shape of the conditional density is fixed, then the $\epsilon$'s are independent and identically distributed.

• The polynomial

$$
\phi(z) = 1 - \left(\phi_1 z + \phi_2 z^2 + \dots + \phi_p z^p\right),
$$

where we view $z$ as a complex variable, plays an important role in the theory of autoregressions.

• In particular, if the zeros of $\phi(z)$ are outside the unit circle, then:

– $1/\phi(z)$ has a Taylor series expansion

$$
\frac{1}{\phi(z)} = 1 + \psi_1 z + \psi_2 z^2 + \dots
$$

which converges for $|z| \le 1$;

– $X_t$ is a linear combination of $\epsilon_t, \epsilon_{t-1}, \dots$:

$$
X_t = \epsilon_t + \psi_1 \epsilon_{t-1} + \psi_2 \epsilon_{t-2} + \dots
$$
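The coefficients $\psi_j$ can be computed by matching powers of $z$ in $\phi(z)\,(1 + \psi_1 z + \psi_2 z^2 + \dots) = 1$, which gives $\psi_0 = 1$ and $\psi_j = \sum_{k=1}^{\min(j,p)} \phi_k \psi_{j-k}$. A minimal sketch of that recursion follows; the helper name `psi_weights` is hypothetical.

```python
def psi_weights(phi, n_terms):
    """Return [psi_0, ..., psi_{n_terms-1}] for 1/phi(z).

    Uses psi_0 = 1 and psi_j = sum_{k=1}^{min(j,p)} phi_k * psi_{j-k},
    obtained by matching powers of z in phi(z) * psi(z) = 1.
    """
    p = len(phi)
    psi = [1.0]
    for j in range(1, n_terms):
        psi.append(sum(phi[k - 1] * psi[j - k]
                       for k in range(1, min(j, p) + 1)))
    return psi

# AR(1) with phi_1 = 0.5: the weights are the geometric sequence 0.5**j
print(psi_weights([0.5], 5))
```

For an AR(1) the recursion reduces to $\psi_j = \phi_1^j$, which is a quick sanity check on the code.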

• These equations are often written in terms of the back-shift operator $B$, defined by

$$
B X_t = X_{t-1}, \quad B \epsilon_t = \epsilon_{t-1}, \quad \dots
$$

• Then

$$
\begin{aligned}
\epsilon_t &= X_t - \left(\phi_1 X_{t-1} + \phi_2 X_{t-2} + \dots + \phi_p X_{t-p}\right) \\
&= X_t - \left(\phi_1 B X_t + \phi_2 B^2 X_t + \dots + \phi_p B^p X_t\right) \\
&= \phi(B) X_t.
\end{aligned}
$$

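• So it is natural to write

$$
\begin{aligned}
X_t &= \frac{1}{\phi(B)} \epsilon_t \\
&= \left(1 + \psi_1 B + \psi_2 B^2 + \dots\right) \epsilon_t \\
&= \epsilon_t + \psi_1 \epsilon_{t-1} + \psi_2 \epsilon_{t-2} + \dots
\end{aligned}
$$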

Conditional Heteroscedasticity: ARCH

• Another special case is Engle's AutoRegressive Conditionally Heteroscedastic, or ARCH, model.

• If

$$
\mu_t = \text{constant}
$$

and

$$
\sigma_t^2 = \text{linear function of } x_{t-1}^2, x_{t-2}^2, \dots, x_{t-q}^2 = \omega + \alpha_1 x_{t-1}^2 + \alpha_2 x_{t-2}^2 + \dots + \alpha_q x_{t-q}^2,
$$

then $X_t$ is called AutoRegressive Conditionally Heteroscedastic of order $q$ (ARCH($q$)).

• ARCH models are important in finance, because many financial time series show variances that fluctuate over time, while usually having constant, essentially zero, conditional means.

[Figure: Logarithmic returns on the S&P500 Index, `diff(log(spx$Close))`, 1950 to 2010]
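To see how an ARCH($q$) variance equation produces fluctuating volatility, here is a minimal simulation sketch. It assumes a zero conditional mean and writes $X_t = \sigma_t Z_t$ with $Z_t$ i.i.d. standard normal; the slides fix only the conditional mean and variance, so the Gaussian shape, the zero pre-sample values, and the name `simulate_arch` are assumptions for illustration.

```python
import math
import random

def simulate_arch(omega, alpha, n, seed=0):
    """Simulate an ARCH(q) series with conditional mean 0.

    sigma_t^2 = omega + alpha_1 x_{t-1}^2 + ... + alpha_q x_{t-q}^2
    Assumptions (not from the slides): X_t = sigma_t * Z_t with Z_t
    standard normal, and the q pre-sample values are 0.
    """
    rng = random.Random(seed)
    q = len(alpha)
    x = [0.0] * q            # pre-sample values
    sigma2 = []
    for _ in range(n):
        # alpha[k] multiplies the squared value k + 1 steps back
        s2 = omega + sum(alpha[k] * x[-1 - k] ** 2 for k in range(q))
        x.append(math.sqrt(s2) * rng.gauss(0.0, 1.0))
        sigma2.append(s2)
    return x[q:], sigma2

# Example: ARCH(1) with illustrative parameters
x, sigma2 = simulate_arch(omega=0.2, alpha=[0.5], n=500)
```

With $\omega > 0$ and nonnegative $\alpha_k$, every conditional variance is at least $\omega$, so the square root is always defined.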

A Modest Generalization

• In practice, large values of $p$ or $q$ are sometimes needed to get a good fit with the AR($p$) and ARCH($q$) models.

• That introduces many parameters to be estimated, which is problematic.

• We need models that allow large $p$ with few parameters.

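• Suppose for instance that

$$
X_t = \theta X_{t-1} - \theta^2 X_{t-2} + \theta^3 X_{t-3} - \dots + \epsilon_t
$$

for some $\theta$, $-1 < \theta < 1$.

• That is, $p = \infty$, but $\phi_r = -(-\theta)^r$ $\Rightarrow$ only one parameter, $\theta$.

• Then

$$
\epsilon_t = X_t - \theta X_{t-1} + \theta^2 X_{t-2} - \dots = \frac{1}{1 + \theta B} X_t,
$$

or

$$
X_t = (1 + \theta B)\,\epsilon_t = \epsilon_t + \theta \epsilon_{t-1}.
$$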
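The equivalence between the infinite autoregression and $X_t = \epsilon_t + \theta\epsilon_{t-1}$ can be checked numerically. The sketch below builds $X_t$ from known innovations, then recovers them with the weights $(-\theta)^r$. It assumes $\epsilon_{-1} = 0$, so the sum truncated at the start of the sample is exact; the helper name `invert_ma1` is hypothetical.

```python
import random

def invert_ma1(x, theta):
    """Recover eps_t = sum_{r=0}^{t} (-theta)^r * x_{t-r}.

    Exact when the series started with eps_{-1} = 0 (an assumption
    made for this illustration).
    """
    return [sum((-theta) ** r * x[t - r] for r in range(t + 1))
            for t in range(len(x))]

theta = 0.6
rng = random.Random(0)
eps = [rng.gauss(0.0, 1.0) for _ in range(100)]

# X_t = eps_t + theta * eps_{t-1}, with eps_{-1} = 0
x = [eps[t] + (theta * eps[t - 1] if t > 0 else 0.0) for t in range(100)]

recovered = invert_ma1(x, theta)
max_err = max(abs(a - b) for a, b in zip(eps, recovered))
```

Here `max_err` should be at the level of floating-point rounding, since the sum telescopes back to $\epsilon_t$.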

• This is called a Moving Average model; specifically, the first-order Moving Average, MA(1).

• The general MA($q$) model has $q$ terms:

$$
\begin{aligned}
X_t &= \epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q} \\
&= \left(1 + \theta_1 B + \theta_2 B^2 + \dots + \theta_q B^q\right) \epsilon_t \\
&= \theta(B)\,\epsilon_t.
\end{aligned}
$$

• We can mix AR and MA structure:

$$
X_t = \phi_1 X_{t-1} + \phi_2 X_{t-2} + \dots + \phi_p X_{t-p} + \epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q}
$$

or

$$
X_t - \left(\phi_1 X_{t-1} + \phi_2 X_{t-2} + \dots + \phi_p X_{t-p}\right) = \epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q}
$$

or

$$
\phi(B) X_t = \theta(B)\,\epsilon_t.
$$

• This is the AutoRegressive Moving Average model of order $(p, q)$ (ARMA($p, q$)).
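A minimal sketch of the ARMA($p, q$) recursion, under assumptions not stated in the slides: Gaussian innovations with standard deviation `tau`, and all values before the start of the sample set to zero. The function name `simulate_arma` is hypothetical.

```python
import random

def simulate_arma(phi, theta, n, tau=1.0, seed=0):
    """Simulate phi(B) X_t = theta(B) eps_t.

    Assumptions (not from the slides): eps_t is i.i.d. Gaussian, and
    X_t and eps_t are treated as 0 for t < 0.
    """
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, tau) for _ in range(n)]
    x = []
    for t in range(n):
        # AR part: phi_1 X_{t-1} + ... + phi_p X_{t-p}
        ar = sum(phi[k] * x[t - 1 - k]
                 for k in range(len(phi)) if t - 1 - k >= 0)
        # MA part: theta_1 eps_{t-1} + ... + theta_q eps_{t-q}
        ma = sum(theta[k] * eps[t - 1 - k]
                 for k in range(len(theta)) if t - 1 - k >= 0)
        x.append(ar + eps[t] + ma)
    return x, eps

# Example: ARMA(1, 1) with illustrative parameters
x, eps = simulate_arma(phi=[0.7], theta=[0.4], n=300)
```

Passing an empty `theta` gives a pure AR($p$), and an empty `phi` gives a pure MA($q$), which mirrors how the two structures combine in the single equation above.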

Integrated Models

• Sometimes we cannot find an ARMA($p, q$) that fits the data for reasonably small orders $p$ and $q$.

• For instance, a random walk

$$
X_t = X_{t-1} + \epsilon_t
$$

is like an AR(1) but with $\phi = 1$.

• But the first differences $X_t - X_{t-1}$ are simple:

$$
X_t - X_{t-1} = \epsilon_t,
$$

a trivial model with $p = q = 0$.

• More generally, we might find that $X_t - X_{t-1}$ is ARMA($p, q$).

• More generally yet, we might have to difference $X_t$ more than once.

• Note that $X_t - X_{t-1} = (1 - B) X_t$.

• Define the $d$th difference by $(1 - B)^d X_t$, $d = 1, 2, \dots$.

• If the $d$th difference of $X_t$ is ARMA($p, q$), we say that $X_t$ is AutoRegressive Integrated Moving Average of order $(p, d, q)$ (ARIMA($p, d, q$)).
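The $d$th difference $(1 - B)^d X_t$ amounts to applying the first difference $d$ times. A minimal sketch, with the hypothetical helper name `difference` and Gaussian innovations assumed for the random-walk example:

```python
import random

def difference(x, d=1):
    """Apply (1 - B) to the series d times; each pass drops one value."""
    for _ in range(d):
        x = [x[t] - x[t - 1] for t in range(1, len(x))]
    return x

# A random walk X_t = X_{t-1} + eps_t, started at X_0 = eps_0
rng = random.Random(0)
eps = [rng.gauss(0.0, 1.0) for _ in range(50)]
walk = []
level = 0.0
for e in eps:
    level += e
    walk.append(level)

# First differences recover the innovations eps_1, eps_2, ...
d1 = difference(walk, 1)

# A quadratic trend needs two differences to become constant
d2 = difference([t * t for t in range(6)], 2)
```

Each pass shortens the series by one observation, so a $d$th difference of $n$ values has $n - d$ values.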
