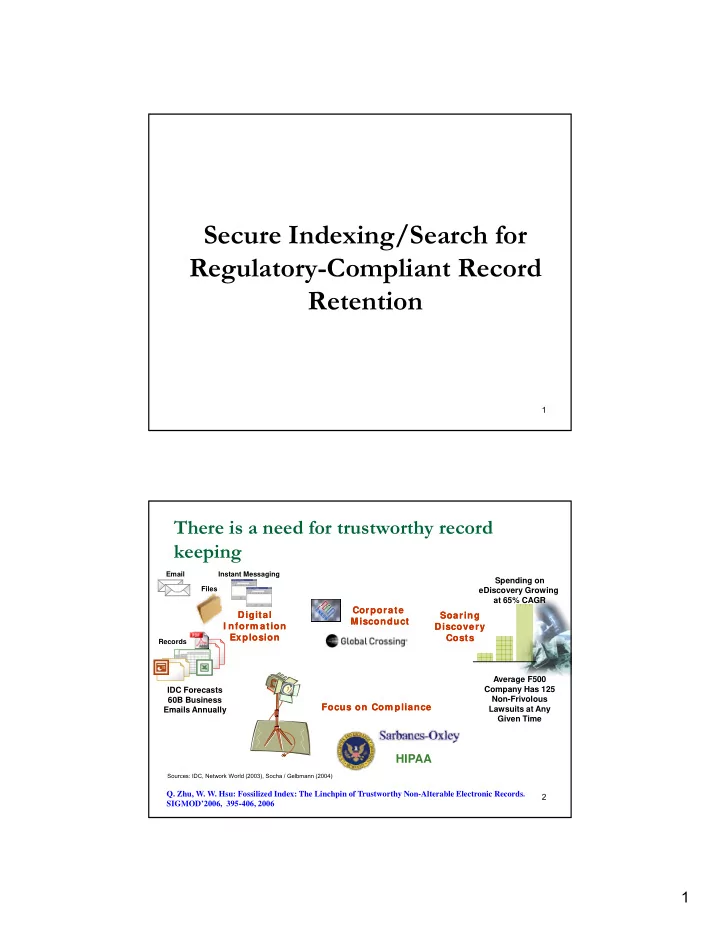

Secure Indexing/Search for Regulatory-Compliant Record Retention 1 There is a need for trustworthy record keeping Email Instant Messaging Spending on Files eDiscovery Growing at 65% CAGR Corporate C Corporate Digital Digital Soaring Soaring Misconduct Misconduct I nform ation I nform ation Discovery Discovery Explosion Explosion Costs Costs Records Average F500 Company Has 125 IDC Forecasts Non-Frivolous 60B Business Focus on Com pliance Focus on Com pliance Lawsuits at Any y Emails Annually Emails Annually Given Time HIPAA Sources: IDC, Network World (2003), Socha / Gelbmann (2004) Q. Zhu, W. W. Hsu: Fossilized Index: The Linchpin of Trustworthy Non-Alterable Electronic Records. 2 SIGMOD’2006, 395-406, 2006 1

What is trustworthy record keeping? Establish solid proof of events that have occurred Storage Storage tim e tim e Device Com m it Record Regret Query Alice Bob Adversary Bob should get back Alice’s data 3 This leads to a unique threat model tim e Query is Commit is Adversary has trustworthy trustworthy trustworthy trustworthy super user privileges super-user privileges • Access to storage device Record is created Record is • Access to any keys properly queried properly Adversary could be Alice herself 4 2

Traditional schemes do not work tim e t e Cannot rely on Alice’s signature 5 WORM storage helps address the problem Record Overwrite/ New Record Delete Delete Adversary cannot delete Alice s record delete Alice’s record Write Once Read Many (WORM) 6 3

WORM storage helps address the problem Record Overwrite/ New Record Delete Delete Build on top of conventional Adversary cannot rewritable magnetic delete Alice’s record delete Alice s record disk, with write-once semantics enforced through software, with file modification Write Once Read Many and premature deletion operations disallowed. 7 Index required due to high volume of records Index tim e Com m it Record Query from Update I ndex I ndex Regret Bob Alice Adversary 8 4

In effect, records can be hidden/altered by modifying the index Or replace B Hide record B with B’ from the A A B B B B B B’ index index The index must also be secured (fossilized) 9 Btree for increasing sequence can be created on WORM 23 13 7 31 2 4 29 31 7 11 19 23 13 10 5

B+tree index is insecure, even on WORM 23 25 7 13 31 27 4 4 7 11 7 11 13 19 13 19 23 29 23 29 31 31 25 26 25 26 30 30 2 2 Path to an element depends on elements inserted later – Adversary can attack it 11 Is this a real threat? Would someone want to delete a record after a day its created? d it t d? Intrusion detection logging Once adversary gain control, he would like to delete records of his initial attack Record regretted moments after creation g Email best practice - Must be committed before its delivered 12 6

Several levels of indexing … 1 …query … …query … 3 … data … … base … base …index … Keywords Query 1 3 11 17 Data 3 9 Base 3 19 Posting Lists Worm 7 36 Worm Index 3 To find documents containing keywords “Query” and “Data” and “Base” * Retrieve lists for Query, Data and Base, and intersect the document ids in the list 13 GHT: A Generalized Hash Tree Fossilized Index Tree grows from the root down to the leaves without relocating committed entries without relocating committed entries “Balanced” without requiring dynamic adjustments to its structure For hash-based scheme, dynamic hashing scheme that do not require rehashing 14 7

GHT Defined by {M,K, H} M = {m 0 m 1 M {m 0 , m 1 , …}, m i is } m i is size of a tree node (number of buckets) at level i K = {k 0 , k 1 ,…}, k i is the growth factor for level i A tree has k i times as many nodes at level (i+1) y ( ) as at level i H = {h 0 , h 1 ,…}, h i is a m 0 = m 1 … = 4 hash function for level I k 0 = k 1 … = 2 Different H values lead to different GHT variants 15 Standard (Default) GHT – Thin Tree h 0 Defined by {M,K, H} M = {m 0 m 1 M {m 0 , m 1 , …}, m i is } m i is size of a tree node h 1 (number of buckets) at level i K = {k 0 , k 1 ,…}, k i is the growth factor for level i h 2 h 2 A tree has k i times as many nodes at level (i+1) y ( ) as at level i H = {h 0 , h 1 ,…}, h i is a m 0 = m 1 … = 4 hash function for level i k 0 = k 1 … = 2 16 8

Standard (Default) GHT – Thin Tree h 0 Defined by {M,K, H} M {m 0 , m 1 , …}, m i is M = {m 0 m 1 } m i is size of a tree node h 1 (number of buckets) at level i K = {k 0 , k 1 ,…}, k i is the growth factor for level i h 2 h 2 A tree has k i times as many nodes at level (i+1) y ( ) as at level i H = {h 0 , h 1 ,…}, h i is a m 0 = m 1 … = 4 hash function for level i k 0 = k 1 … = 2 What about h 2 ? x mod 16? h 0 = x mod 4 h 1 = x mod 8 17 Standard (Default) GHT – Thin Tree h 0 Defined by {M,K, H} M = {m 0 m 1 M {m 0 , m 1 , …}, m i is } m i is size of a tree node h 1 (number of buckets) at level i K = {k 0 , k 1 ,…}, k i is the growth factor for level i h 2 h 2 A tree has k i times as many nodes at level (i+1) y ( ) as at level i H = {h 0 , h 1 ,…}, h i is a m 0 = m 1 … = 4 hash function for level i k 0 = k 1 … = 2 h 0 = x mod 4 h 1 = x mod 8 h 2 = h 3 = … = x mod 8 18 9

GHT Variant (Fat Tree) Can tolerate non-ideal hash functions better h 0 because there are many more potential target buckets at each level h 1 Hashing at different levels is independent h 2 Can allocate different levels to different disks and access them in parallel ll l m 0 = m 1 … = 4 h 0 = x mod 4 Expensive to maintain k 0 = k 1 … = 2 h 1 = x mod 8 children pointers in each h 2 = x mod 16 node – number of h i = x mod 4*2 i pointers grow exponentially 19 GHT (Standard) Insertion Bucket = (Level, Child – left or right, Entry within bucket) (0, 0, 1) (1, 1, 2) (2, 0, 1) 20 10

GHT Insertion Insert whose hash values at the various levels are shown. (0, 0, 1) Occupied/ h0(key) h0(key) = 1 1 collision lli i (1, 1, 2) h1(key) = 6 (2, 0, 1) h2(key) = 1 ( y) h3(key) = 3 21 GHT Insertion Insert whose hash values at the various levels are shown. (0, 0, 1) Occupied/ h0(key) h0(key) = 1 1 collision lli i (1, 1, 2) h1(key) = 6 (2, 0, 1) h2(key) = 1 ( y) h3(key) = 3 (3, 0, 3) If hash functions are uniform, tree grows top-down in a balanced fashion 22 11

GHT Search Search for whose hash values at the various levels are shown - Similar to insertion - Need to deal with duplicate key values (0, 0, 1) h0(key) h0(key) = 1 1 (1, 1, 2) h1(key) = 6 (2, 0, 1) h2(key) = 1 ( y) h3(key) = 3 (3, 0, 3) Only for point queries Cannot support range search 23 Summary Trustworthy record keeping is important However, need to also ensure efficient retrieval Existing indexing structures may be manipulated GHT is a “trustworthy” index structure GHT is a trustworthy index structure Once record is committed, it cannot be manipulated! 24 12

Most business records are unstructured, searched by inverted index Keywords Posting Lists Query 1 3 11 17 Data 3 9 Base 3 19 Worm 7 36 3 Index One WORM file for each posting list 25 S. Mitra, W. W. Hsu, M. Winslett: Trustworthy Keyword Search for Regulatory-Compliant Record Retention. VLDB’2006, 1001-1012, 2006 Index must be updated as new documents arrive Keywords Posting Lists D Doc: 79 79 Query 1 3 11 17 79 Data 3 9 79 Query Base 3 19 Data Worm 7 36 Index Index 3 79 500 keywords = 500 disk seeks ~1 sec per document 26 13

Amortize cost by updating in batch Buffer Keywords Posting Lists Doc: 79 Query 79 81 83 Query 1 3 11 17 Doc: 80 Data 3 9 Doc: 81 Base 3 19 Query Worm 7 36 Index 3 Doc: 82 Doc: 83 1 seek per keyword in batch Query Large buffer to benefit infrequent terms Over 100,000 documents to achieve 2 docs/sec 27 Index is not updated immediately Index Alice Com m it tim e Record Alter Omit Buffer Buffer Adversary Prevailing practice – email must be committed before it is delivered 28 14

Can storage server cache help? Storage servers have huge cache Data committed into cache is effectively on disk Is battery backed-up Inside the WORM box, so is trustworthy 29 Caching works in blocks (One block per posting list) Cache Hit Cache Miss Doc: 79 Doc: 79 Q Query 1 3 11 17 79 80 3 9 Data Query Cache Miss 79 Base 3 19 Base Worm 7 36 Index Index 3 79 Cache Miss Doc: 80 Query Query Caching does not benefit infrequent terms (number of posting lists >> number of cache blocks) 30 15

Simulation results show caching is not enough Cache Misses Per Doc 500 450 400 350 I/O Per Doc 300 250 200 Cache Miss 150 100 100 50 0 0 4 8 6 2 4 8 6 2 4 8 6 GB 1 3 6 2 5 1 2 4 9 1 2 5 0 0 0 1 2 4 Cache Size 31 Simulation results show caching is not enough What if number posting lists ≤ Number of cache blocks? Each update will hit the cache 32 16

Recommend

More recommend