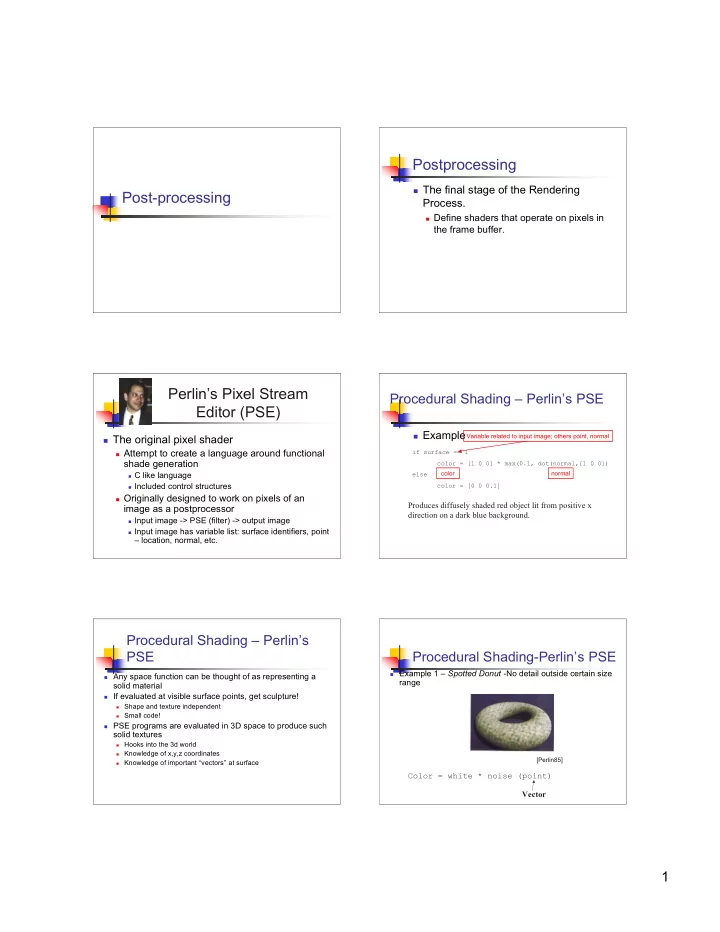

Postprocessing: the final stage of the rendering process (Rendering -> Post-processing). Define shaders that operate on pixels in the frame buffer.

Procedural Shading – Perlin's Pixel Stream Editor (PSE): the original pixel shader. PSE was an attempt to create a language around functional shade generation: a C-like language that included control structures. It was originally designed to work on the pixels of an image as a postprocessor: Input image -> PSE (filter) -> output image. The input image carries a list of variables: surface identifiers, point (location), normal, etc.

Procedural Shading – Perlin's PSE, example: `surface` is a variable related to the input image; the others are `point` and `normal`. The program `if surface == 1 color = [1 0 0] * max(0.1, dot(normal, [1 0 0])) else color = [0 0 0.1]` produces a diffusely shaded red object lit from the positive x direction on a dark blue background.

Procedural Shading – Perlin's PSE: any space function can be thought of as representing a solid material. If it is evaluated at visible surface points, you get a sculpture! Shape and texture are independent. PSE programs are evaluated in 3D space to produce such solid textures, with hooks into the 3D world: knowledge of the x, y, z coordinates and of important "vectors" at the surface.

Procedural Shading – Perlin's PSE, Example 1 – Spotted Donut [Perlin85]: `color = white * noise(point)`. Small code! The noise has no detail outside a certain size range.
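The PSE example program can be read as a per-pixel filter from input records to output colors. The sketch below is an illustration under stated assumptions, not Perlin's implementation: the `PixelRecord` type and the `shade`/`pse_filter` names are hypothetical, and each pixel is assumed to carry only `surface` and a unit `normal`.

```python
# Sketch of Perlin's PSE example as a per-pixel filter.
# Assumption: each input pixel is a hypothetical PixelRecord carrying the PSE
# variables `surface` and `normal`; the real PSE input format is not shown here.
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PixelRecord:
    surface: int   # surface identifier from the input image
    normal: Vec3   # unit surface normal at the visible point


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shade(px: PixelRecord) -> Vec3:
    """Red diffuse object lit from +x; dark blue background elsewhere."""
    if px.surface == 1:
        # color = [1 0 0] * max(0.1, dot(normal, [1 0 0])); 0.1 acts as an ambient floor
        k = max(0.1, dot(px.normal, (1.0, 0.0, 0.0)))
        return (1.0 * k, 0.0, 0.0)
    return (0.0, 0.0, 0.1)  # color = [0 0 0.1]


def pse_filter(image: List[PixelRecord]) -> List[Vec3]:
    """Input image -> PSE (filter) -> output image."""
    return [shade(px) for px in image]
```

For example, `pse_filter([PixelRecord(1, (1.0, 0.0, 0.0)), PixelRecord(0, (0.0, 0.0, 1.0))])` returns `[(1.0, 0.0, 0.0), (0.0, 0.0, 0.1)]`. A surface pixel facing away from the light still gets 0.1 red because of the `max`.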

Procedural Shading – Perlin's PSE, Example 2 – Bozo's Donut [Perlin85]: `color = Colorful(noise(k * point))`, where k is a constant multiplier.

Procedural Shading – Perlin's PSE: Dnoise is the vector-valued differential of the noise signal, i.e., the gradient (derivative) of the noise function, $\mathrm{Dnoise}(x,y,z) = \left(\frac{\partial \mathrm{Noise}}{\partial x}, \frac{\partial \mathrm{Noise}}{\partial y}, \frac{\partial \mathrm{Noise}}{\partial z}\right)$. It is good for modifying the normal vector (bump mapping).

Dnoise example – Bumpy Donut [Perlin85]: `normal += Dnoise(point)`.

Creating Wrinkles: add successive noise at different but regular frequencies, with amplitude falling off as 1/f, to get a self-similar quality (fractal-like; more on fractals later) [Perlin85]:

$$\mathrm{NOISE}(x) = \sum_{i=0}^{N-1} \frac{\mathrm{Noise}(b^i x)}{a^i}$$

Procedural Shading – Perlin's PSE, Creating Wrinkles: Perlin example, Wrinkled Donut [Perlin85].

Perlin turbulence example [Perlin85]: turbulence perturbs the layers of the marble. `function marble(point) x = point[1] + turbulence(point); return marble_color(sin(x))`

RenderMan imager shader: manipulates the final pixel color after all of the geometric and shading processing has concluded. In the context of an imager shader, P is the position of the surface closest to the camera in that pixel (i.e., the viewing-ray intersection or the Z-buffer entry).

RenderMan imager shaders, global variables:

| Name | Type | Description |
|---|---|---|
| P | point | Surface position |
| Ci | color | Pixel color |
| Oi | color | Pixel opacity |
| alpha | float | Fractional pixel coverage |
| ncomps | | Number of color components |
| time | | Current shutter time |

Outputs: Ci (color varying), the output pixel color, and Oi (color varying), the output pixel opacity.

RenderMan imager shader: use of imager shaders is not in vogue, as evidenced by the fact that prman does not support them. Instead, use a tool optimized for image manipulation: Photoshop, GIMP, etc.

"Imager shaders" in GLSL: GLSL knows only about vertices and fragments, so "imager shading" must be done by a fragment shader, which needs access to the frame buffer. This is achieved via in-memory textures: the fragment shader takes the texture as a parameter, and texture coordinates are set up at the vertices of the frame buffer and passed through to the fragment phase.

Frame buffer access – Render to texture, aka copy-to-texture (CTT): render as usual, then copy the frame buffer to a texture with glCopyTexSubImage*(). The texture can be passed in to a shader, and the shader writes back into the frame buffer. Pixel copies are done in video memory.

Frame buffer access – Pbuffers: an advanced feature for rendering to an off-screen buffer. Pbuffers look and act like the frame buffer, but a context switch is needed to get back to the screen frame buffer.
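The copy-to-texture flow (render, copy the frame buffer to a texture, run a fragment shader that samples it, write back) can be emulated on the CPU to show the data flow. This is a sketch, not OpenGL code: the frame buffer is assumed to be a 2D list of RGBA tuples, `copy_to_texture` only plays the role of glCopyTexSubImage2D, and `background_imager` is a hypothetical imager-style shader that composites a background color behind partially covered pixels (premultiplied colors assumed).

```python
# CPU emulation of one copy-to-texture (CTT) post-processing pass.
# Assumptions: the frame buffer is a 2D list of RGBA tuples, `sample` is a
# nearest-neighbour lookup, and `background_imager` is a hypothetical
# imager-style shader operating on premultiplied colors.
from typing import Callable, List, Tuple

RGBA = Tuple[float, float, float, float]
Image = List[List[RGBA]]
Shader = Callable[[Image, float, float], RGBA]


def copy_to_texture(fb: Image) -> Image:
    """Plays the role of glCopyTexSubImage2D: snapshot the frame buffer into a texture."""
    return [list(row) for row in fb]


def sample(tex: Image, s: float, t: float) -> RGBA:
    """Nearest-neighbour texture lookup with (s, t) in [0, 1]."""
    h, w = len(tex), len(tex[0])
    x = min(int(s * w), w - 1)
    y = min(int(t * h), h - 1)
    return tex[y][x]


def background_imager(tex: Image, s: float, t: float) -> RGBA:
    """Composite a background color behind partially covered pixels."""
    bg = (0.2, 0.2, 0.2)  # hypothetical background color
    r, g, b, alpha = sample(tex, s, t)
    # Ci += (1 - alpha) * background; the pixel then becomes fully opaque.
    k = 1.0 - alpha
    return (r + k * bg[0], g + k * bg[1], b + k * bg[2], 1.0)


def post_process(fb: Image, shader: Shader) -> Image:
    """Render as usual (fb), copy it to a texture, then run the shader on every pixel."""
    tex = copy_to_texture(fb)
    h, w = len(fb), len(fb[0])
    for y in range(h):
        for x in range(w):
            # Texture coordinates at pixel centers, as a screen-filling quad would interpolate them.
            s, t = (x + 0.5) / w, (y + 0.5) / h
            fb[y][x] = shader(tex, s, t)  # the shader writes back into the frame buffer
    return fb
```

The copy matters: the shader samples the snapshot `tex` while writing `fb`, so no pixel ever reads a value that the same pass has already overwritten.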

Frame buffer access – Frame Buffer Object (FBO) extension: introduced by nVidia in 2005. It allows easy management of "in-memory textures" and requires only a single rendering context. http://www.gamedev.net/reference/articles/article2331.asp

Chaining fragment shaders: Example Demo1. Raun Krisch (M.S., Computer Science, RIT): Real-time Photographic Tone Reproduction for Video Games.

Why use pixel shaders? HDRI and tone mapping; non-photorealistic rendering (image: Katsuaki HIRAMITSU, using BMRT).

HDRI (Wikipedia): "High dynamic range imaging is a set of techniques that allow a far greater dynamic range of exposures than normal digital imaging techniques. The intention is to accurately represent the wide range of intensity levels found in real scenes, ranging from direct sunlight to the deepest shadows."
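Chaining fragment shaders usually means alternating between two off-screen targets ("ping-pong"): each pass reads one target as a texture and writes the other, then the roles swap. The sketch below emulates that pattern on the CPU; grayscale images as 2D lists of floats are an assumption, and `scale_pass` and `blur_x` are hypothetical example passes, not part of any API.

```python
# Sketch of chaining fragment passes through two off-screen targets ("ping-pong").
# Assumptions: grayscale images as 2D lists of floats; `scale_pass` and `blur_x`
# are hypothetical example passes.
from typing import Callable, List

Image = List[List[float]]
Fragment = Callable[[Image, int, int], float]


def run_chain(image: Image, passes: List[Fragment]) -> Image:
    """Apply passes in order; each pass reads one target as a texture and writes the other."""
    src = [row[:] for row in image]  # target A, initially the rendered frame
    dst = [row[:] for row in image]  # target B
    for fragment in passes:
        for y in range(len(src)):
            for x in range(len(src[0])):
                dst[y][x] = fragment(src, x, y)
        src, dst = dst, src  # this pass's output becomes the next pass's input texture
    return src


def scale_pass(k: float) -> Fragment:
    def fragment(tex: Image, x: int, y: int) -> float:
        return tex[y][x] * k
    return fragment


def blur_x(tex: Image, x: int, y: int) -> float:
    """3-tap horizontal box blur with clamp-to-edge addressing."""
    w = len(tex[0])
    return (tex[y][max(x - 1, 0)] + tex[y][x] + tex[y][min(x + 1, w - 1)]) / 3.0
```

For example, `run_chain([[0.0, 3.0, 0.0]], [blur_x])` gives `[[1.0, 1.0, 1.0]]`. Writing into the same target being read would let `blur_x` see its own partial output, which is why two targets are used.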

Tone mapping / tone reproduction: compresses the high dynamic range (HDR) of the scene to the low dynamic range (LDR) of the display, for optimal viewing [Ward 2001].

HDR in computer graphics: why photography was invented.

HDR in Computer Graphics – Photosphere example: http://www.anyhere.com (Greg Ward's software company).

HDR in Computer Graphics: http://www.debevec.org/RNL/ [Debevec 1998]

HDR in Games: http://www.gamespot.com/features/6147127/p-4.html

HDR and Bloom: Adam Lake and Cody Northrop, http://www.gamedev.net/reference/articles/article2208.asp
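A tone-mapping operator can be made concrete with a small global example. The slides do not prescribe an operator; the sketch below swaps in Reinhard et al.'s global photographic operator as one well-known choice, assuming linear RGB input, Rec. 709 luminance weights, and a key value of 0.18. It maps scaled luminance L to L / (1 + L), so display luminance lands in [0, 1) while individual channels of saturated colors may still exceed 1.

```python
# Sketch of a global tone-mapping operator: Reinhard et al.'s photographic
# operator, used as one example (the slides do not prescribe an operator).
# Assumptions: linear RGB input, Rec. 709 luminance weights, key value 0.18.
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def luminance(c: RGB) -> float:
    return 0.2126 * c[0] + 0.7152 * c[1] + 0.0722 * c[2]


def tone_map(pixels: List[RGB], key: float = 0.18, delta: float = 1e-4) -> List[RGB]:
    """Compress HDR pixels so that display luminance lies in [0, 1)."""
    lum = [luminance(p) for p in pixels]
    # Log-average luminance of the scene; delta avoids log(0) for black pixels.
    log_avg = math.exp(sum(math.log(delta + lw) for lw in lum) / len(lum))
    out = []
    for p, lw in zip(pixels, lum):
        if lw <= 0.0:
            out.append((0.0, 0.0, 0.0))
            continue
        scaled = key / log_avg * lw      # map the scene's average to the chosen key
        ld = scaled / (1.0 + scaled)     # compress: very bright values approach 1
        s = ld / lw                      # rescale color so its luminance becomes ld
        out.append((p[0] * s, p[1] * s, p[2] * s))
    return out
```

In a shader pipeline the log-average would typically be computed in a separate reduction pass over the frame, and the per-pixel compression would run in a fragment shader.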

Media Based Framework (diagram): stages Image, Camera, Media, Presentation, Output Device, Image, with device simulation, appearance, and color management.

HDR and You: techniques and software are available today to create HDR images from digital cameras (exposure series). Photography of the future? In CG, we can simulate lighting to create HDR. We will always need tone mapping, so we will always need "imager" shaders.

About the Lab: imager shader support is problematic. prman doesn't have it; Aqsis does (but a new version will be released tomorrow!); GLSL requires the FBO extension.

Questions?

One last order of business: a note on official course evaluations. Ways to improve the course: written comments welcomed.
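Creating an HDR image from an exposure series amounts to estimating, per pixel, a radiance that explains every exposure. The sketch below assumes a linear camera response (pixel value = radiance times exposure time, clipped to [0, 1]) and known exposure times; real pipelines first recover a nonlinear response curve (e.g., Debevec and Malik's method). The hat weighting and the function names are illustrative choices, not a standard API.

```python
# Sketch of merging an exposure series into one HDR radiance estimate per pixel.
# Assumptions: linear camera response (value = radiance * time, clipped to [0, 1])
# and known exposure times; real pipelines first recover a response curve.
from typing import List, Optional


def hat_weight(z: float) -> float:
    """Trust mid-range values most; clipped values (0 or 1) get zero weight."""
    return min(z, 1.0 - z) if 0.0 <= z <= 1.0 else 0.0


def merge_exposures(values: List[float], times: List[float]) -> Optional[float]:
    """Weighted average of value / time over the series; None if every sample is clipped."""
    num = den = 0.0
    for z, t in zip(values, times):
        w = hat_weight(z)
        num += w * z / t
        den += w
    return num / den if den > 0.0 else None


def merge_image(series: List[List[float]], times: List[float]) -> List[Optional[float]]:
    """series[j][k] is pixel k in exposure j; returns one radiance estimate per pixel."""
    return [merge_exposures([img[k] for img in series], times) for k in range(len(series[0]))]
```

For a pixel of radiance 2.0 shot at 0.1, 0.25, 0.4 and 4.0 seconds, the first three values (0.2, 0.5, 0.8) each imply 2.0, and the clipped fourth value contributes nothing. The merged result still needs tone mapping before display.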
