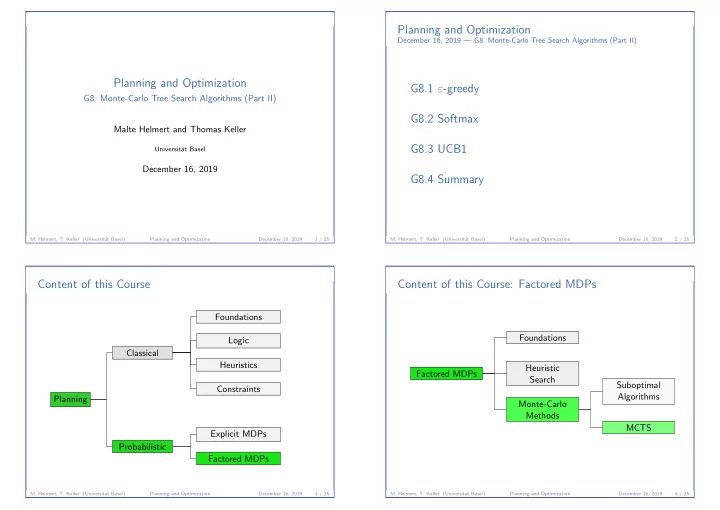

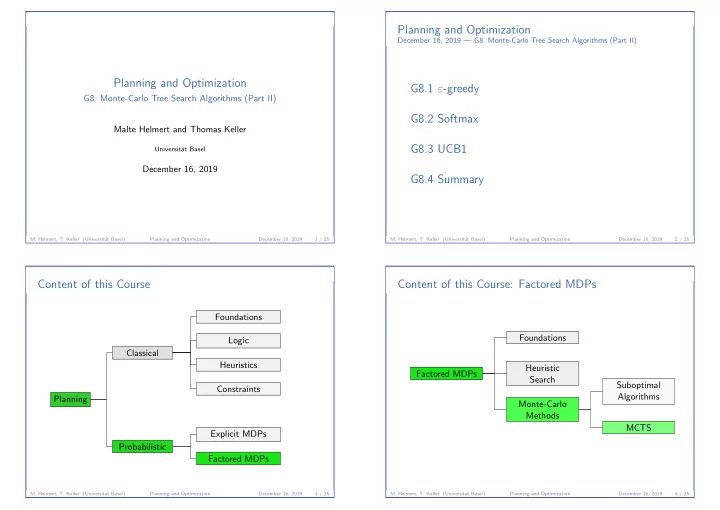

Planning and Optimization
G8. Monte-Carlo Tree Search Algorithms (Part II)

Malte Helmert and Thomas Keller
Universität Basel
December 16, 2019

G8.1 ε-greedy
G8.2 Softmax
G8.3 UCB1
G8.4 Summary

Content of this Course
[Figure: course overview diagram. Planning: Classical (Foundations, Logic, Heuristics, Constraints) and Probabilistic (Explicit MDPs, Factored MDPs)]

Content of this Course: Factored MDPs
[Figure: Factored MDPs part of the course (Foundations, Heuristic Search, Monte-Carlo Methods: Suboptimal Algorithms, MCTS)]

G8.1 ε-greedy

ε-greedy: Idea
◮ tree policy parametrized with constant parameter $\varepsilon$
◮ with probability $1 - \varepsilon$, pick one of the greedy actions uniformly at random
◮ otherwise, pick a non-greedy successor uniformly at random

ε-greedy Tree Policy

$$
\pi(a \mid d) =
\begin{cases}
\dfrac{1 - \varepsilon}{|L_k^\star(d)|} & \text{if } a \in L_k^\star(d),\\[1ex]
\dfrac{\varepsilon}{|L(s(d)) \setminus L_k^\star(d)|} & \text{otherwise,}
\end{cases}
$$

with $L_k^\star(d) = \{\, a(c) \in L(s(d)) \mid c \in \arg\min_{c' \in \mathrm{children}(d)} \hat{Q}_k(c') \,\}$.

ε-greedy: Example
Decision node $d$ has children $c_1, \dots, c_4$ with $\hat{Q}(c_1) = 6$, $\hat{Q}(c_2) = 12$, $\hat{Q}(c_3) = 6$ and $\hat{Q}(c_4) = 9$.
Assuming $a(c_i) = a_i$ and $\varepsilon = 0.2$, we get:
◮ $\pi(a_1 \mid d) = 0.4$
◮ $\pi(a_3 \mid d) = 0.4$
◮ $\pi(a_2 \mid d) = 0.1$
◮ $\pi(a_4 \mid d) = 0.1$

ε-greedy: Asymptotic Optimality
Asymptotic Optimality of ε-greedy
◮ explores forever
◮ not greedy in the limit
⇒ not asymptotically optimal

An asymptotically optimal variant uses a decaying $\varepsilon$, e.g., $\varepsilon = \frac{1}{k}$.
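The ε-greedy tree policy can be sketched in Python as below. This is a minimal illustration, not the course's implementation: the names `epsilon_greedy_policy` and `decaying_epsilon` are hypothetical, actions are plain strings, and estimates are treated as costs (lower is better), as in the formula. It reproduces the distribution from the example.

```python
from typing import Dict, Hashable


def epsilon_greedy_policy(q_hat: Dict[Hashable, float], epsilon: float) -> Dict[Hashable, float]:
    """Return pi(a | d) for every action a applicable in s(d).

    q_hat maps each action a(c) to the estimate Q̂_k(c) of the child c it
    leads to. Estimates are costs, so greedy actions have the minimal estimate.
    """
    best = min(q_hat.values())
    greedy = {a for a, q in q_hat.items() if q == best}  # L_k^*(d)
    non_greedy = set(q_hat) - greedy                      # L(s(d)) \ L_k^*(d)
    if not non_greedy:
        # Assumption: if every action is greedy, the second case of the
        # formula never applies, so we fall back to a uniform distribution.
        return {a: 1.0 / len(greedy) for a in q_hat}
    return {
        a: (1.0 - epsilon) / len(greedy) if a in greedy else epsilon / len(non_greedy)
        for a in q_hat
    }


def decaying_epsilon(k: int) -> float:
    """Hypothetical helper for the decaying schedule epsilon = 1/k (k >= 1)."""
    return 1.0 / k


q = {"a1": 6.0, "a2": 12.0, "a3": 6.0, "a4": 9.0}
probs = epsilon_greedy_policy(q, epsilon=0.2)
late = epsilon_greedy_policy(q, epsilon=decaying_epsilon(100))
print(probs)
print(late)
```

Ties such as $a_1$ and $a_3$ share the $1 - \varepsilon$ mass equally. With the decaying schedule, the non-greedy mass $1/k$ vanishes as $k$ grows, which is what makes that variant greedy in the limit.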

ε-greedy: Weakness
Problem: when ε-greedy explores, all non-greedy actions are treated equally.

Decision node $d$ has children $c_1, c_2, c_3, \dots, c_{\ell+2}$ with $\hat{Q}(c_1) = 8$, $\hat{Q}(c_2) = 9$ and $\hat{Q}(c_3) = \dots = \hat{Q}(c_{\ell+2}) = 50$ (the $\ell$ nodes $c_3, \dots, c_{\ell+2}$).
Assuming $a(c_i) = a_i$, $\varepsilon = 0.2$ and $\ell = 9$, we get:
◮ $\pi(a_1 \mid d) = 0.8$
◮ $\pi(a_2 \mid d) = \pi(a_3 \mid d) = \dots = \pi(a_{11} \mid d) = 0.02$

The nearly optimal $a_2$ is explored no more often than the clearly bad actions $a_3, \dots, a_{11}$.

G8.2 Softmax

Softmax: Idea
◮ tree policy with constant parameter $\tau$
◮ select actions with probabilities that depend on their action-value estimates (the lower the estimated cost, the higher the probability)
◮ most popular softmax tree policy uses Boltzmann exploration
◮ ⇒ selects actions proportionally to $e^{-\hat{Q}_k(c)/\tau}$

Tree Policy based on Boltzmann Exploration

$$
\pi(a(c) \mid d) = \frac{e^{-\hat{Q}_k(c)/\tau}}{\sum_{c' \in \mathrm{children}(d)} e^{-\hat{Q}_k(c')/\tau}}
$$

Softmax: Example
Same tree as in the ε-greedy weakness example: $\hat{Q}(c_1) = 8$, $\hat{Q}(c_2) = 9$ and $\hat{Q}(c_3) = \dots = \hat{Q}(c_{\ell+2}) = 50$.
Assuming $a(c_i) = a_i$, $\tau = 10$ and $\ell = 9$, we get:
◮ $\pi(a_1 \mid d) \approx 0.49$
◮ $\pi(a_2 \mid d) \approx 0.44$
◮ $\pi(a_3 \mid d) = \dots = \pi(a_{11} \mid d) \approx 0.007$
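A corresponding sketch of Boltzmann exploration, again in Python and again assuming cost estimates stored in a plain dictionary (`boltzmann_policy` is a hypothetical name). Subtracting the minimum estimate before exponentiating is a standard numerical-stability trick added here. It cancels in the normalization, so the probabilities are exactly those of the formula above.

```python
import math
from typing import Dict, Hashable


def boltzmann_policy(q_hat: Dict[Hashable, float], tau: float) -> Dict[Hashable, float]:
    """pi(a(c) | d) proportional to exp(-Q̂_k(c) / tau); estimates are costs."""
    best = min(q_hat.values())
    # Shifting every estimate by the same constant cancels in the
    # normalization but keeps exp() from underflowing for large costs.
    weights = {a: math.exp(-(q - best) / tau) for a, q in q_hat.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# The example tree: Q̂(c1) = 8, Q̂(c2) = 9 and l = 9 children with Q̂ = 50.
q_hat = {"a1": 8.0, "a2": 9.0}
q_hat.update({f"a{i}": 50.0 for i in range(3, 12)})

probs = boltzmann_policy(q_hat, tau=10.0)
print(round(probs["a1"], 2), round(probs["a2"], 2), round(probs["a3"], 3))  # prints 0.49 0.44 0.007
```

Unlike ε-greedy on the same tree, the near-optimal $a_2$ now receives almost as much probability as $a_1$, while each clearly bad action receives very little.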

Boltzmann Exploration: Asymptotic Optimality
Asymptotic Optimality of Boltzmann Exploration
◮ explores forever
◮ not greedy in the limit:
  ◮ state- and action-value estimates converge to finite values
  ◮ therefore, probabilities also converge to positive, finite values
⇒ not asymptotically optimal

An asymptotically optimal variant uses a decaying $\tau$, e.g., $\tau = \frac{1}{\log k}$.
Careful: $\tau$ must not decay faster than logarithmically (i.e., we must have $\tau \geq \frac{\text{const}}{\log k}$) to explore infinitely.

Boltzmann Exploration: Weakness
[Figure: two scenarios, each showing the cost distributions P of actions $a_1$, $a_2$, $a_3$]
◮ Boltzmann exploration and ε-greedy only consider the mean of the sampled action-values
◮ as we sample the same node many times, we can also gather information about the variance (how reliable the information is)
◮ Boltzmann exploration ignores the variance, treating the two scenarios equally

G8.3 UCB1

Upper Confidence Bounds: Idea
Balance exploration and exploitation by preferring actions that
◮ have been successful in earlier iterations (exploit)
◮ have been selected rarely (explore)
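The weakness of Boltzmann exploration that motivates UCB1 can be made concrete with a small Python sketch. The sample values are hypothetical. In both scenarios $a_2$ has mean cost 9, but its samples are constant in one scenario and widely spread in the other. The policy only sees $\hat{Q}_k(c)$, the mean, so it returns identical distributions.

```python
import math
import statistics
from typing import Dict, List


def boltzmann_from_samples(samples: Dict[str, List[float]], tau: float) -> Dict[str, float]:
    """Boltzmann exploration where Q̂_k(c) is the mean of the sampled costs."""
    q_hat = {a: statistics.mean(xs) for a, xs in samples.items()}
    best = min(q_hat.values())
    weights = {a: math.exp(-(q - best) / tau) for a, q in q_hat.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# Hypothetical samples: a2 has mean 9 in both scenarios, but its samples are
# consistent in the first scenario and widely spread in the second.
reliable = {"a1": [10.0, 10.0, 10.0, 10.0], "a2": [9.0, 9.0, 9.0, 9.0]}
unreliable = {"a1": [10.0, 10.0, 10.0, 10.0], "a2": [0.0, 18.0, 0.0, 18.0]}

same = boltzmann_from_samples(reliable, tau=1.0) == boltzmann_from_samples(unreliable, tau=1.0)
print(same)  # prints True
print(statistics.pstdev(reliable["a2"]), statistics.pstdev(unreliable["a2"]))  # prints 0.0 9.0
```

A tree policy that also accounts for how many samples support an estimate, as UCB1 does through its bonus term, can distinguish such scenarios.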

Upper Confidence Bounds: Idea
◮ select the successor $c$ of $d$ that minimizes $\hat{Q}_k(c) - E_k(d) \cdot B_k(c)$
◮ based on
  ◮ the action-value estimate $\hat{Q}_k(c)$,
  ◮ the exploration factor $E_k(d)$, and
  ◮ the bonus term $B_k(c)$
◮ select $B_k(c)$ such that
  $\hat{Q}_k(c) - E_k(d) \cdot B_k(c) \leq Q^\star(s(c), a(c))$
  with high probability
◮ Idea: $\hat{Q}_k(c) - E_k(d) \cdot B_k(c)$ is a lower confidence bound on $Q^\star(s(c), a(c))$ under the collected information

Bonus Term of UCB1
◮ use $B_k(c) = \sqrt{\frac{2 \cdot \ln N_k(d)}{N_k(c)}}$ as bonus term
◮ the bonus term is derived from the Chernoff-Hoeffding bound, which
  ◮ gives the probability that a sampled value (here: $\hat{Q}_k(c)$)
  ◮ is far from its true expected value (here: $Q^\star(s(c), a(c))$)
  ◮ in dependence of the number of samples (here: $N_k(c)$)
◮ picks the optimal action exponentially more often
◮ the concrete MCTS algorithm that uses UCB1 is called UCT
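A minimal Python sketch of the UCB1 selection rule for a single decision node follows. It assumes cost estimates (so the rule minimizes) and explicit visit counts. The `Child` class and the `ucb1_select` function are hypothetical names. The slides leave $B_k(c)$ undefined for $N_k(c) = 0$, so the sketch tries unvisited children first. That is a common convention assumed here, not something the slides specify.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Child:
    action: str
    q_hat: float  # Q̂_k(c): average sampled cost of child c
    visits: int   # N_k(c)


def ucb1_bonus(parent_visits: int, child_visits: int) -> float:
    """B_k(c) = sqrt(2 ln N_k(d) / N_k(c))."""
    return math.sqrt(2.0 * math.log(parent_visits) / child_visits)


def ucb1_select(children: List[Child], parent_visits: int, exploration: float) -> Child:
    """Return the child minimizing Q̂_k(c) - E_k(d) * B_k(c)."""
    # Assumption: unvisited children are tried first, since B_k(c) is
    # undefined for N_k(c) = 0 (a common convention, not from the slides).
    for child in children:
        if child.visits == 0:
            return child
    return min(
        children,
        key=lambda c: c.q_hat - exploration * ucb1_bonus(parent_visits, c.visits),
    )


children = [Child("a1", q_hat=0.4, visits=10), Child("a2", q_hat=0.5, visits=2)]
print(ucb1_select(children, parent_visits=12, exploration=1.0).action)  # prints a2
print(ucb1_select(children, parent_visits=12, exploration=0.0).action)  # prints a1
```

With $E_k(d) = 1$, the rarely tried $a_2$ wins despite its higher estimate ($0.5 - 1.58 < 0.4 - 0.70$). With $E_k(d) = 0$, the rule reduces to greedy selection and picks $a_1$.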
Exploration Factor (1)
Exploration factor $E_k(d)$ serves two roles in SSPs:
◮ UCB1 is designed for MABs with rewards in $[0, 1]$
  ⇒ $\hat{Q}_k(c) \in [0, 1]$ for all $k$ and $c$
◮ the bonus term $B_k(c) = \sqrt{\frac{2 \cdot \ln N_k(d)}{N_k(c)}}$ is always $\geq 0$
◮ when $d$ is visited,
  ◮ $B_{k+1}(c) > B_k(c)$ if $a(c)$ is not selected
  ◮ $B_{k+1}(c) < B_k(c)$ if $a(c)$ is selected
◮ if $B_k(c) \geq 2$ for some $c$, UCB1 must explore
◮ hence, $\hat{Q}_k(c)$ and $B_k(c)$ are always of similar size
⇒ set $E_k(d)$ to a value that depends on $\hat{V}_k(d)$

Exploration Factor (2)
Exploration factor $E_k(d)$ serves two roles in SSPs:
◮ $E_k(d)$ allows adjusting the balance between exploration and exploitation
◮ search with $E_k(d) = \hat{V}_k(d)$ is very greedy
◮ in practice, $E_k(d)$ is often multiplied with a constant $> 1$
◮ UCB1 often requires a hand-tailored $E_k(d)$ to work well
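The following small Python simulation shows how the exploration factor shifts the balance. It runs on a hypothetical three-armed bandit (not a full UCT search tree) with Bernoulli costs in $[0, 1]$, the setting UCB1 was designed for. `run_ucb1`, the cost means and the two values of $E$ are illustrative assumptions. $E$ is kept constant here rather than derived from $\hat{V}_k(d)$.

```python
import math
import random
from typing import List


def run_ucb1(mean_costs: List[float], iterations: int, exploration: float, seed: int = 0) -> List[int]:
    """Run UCB1 on a bandit with Bernoulli costs; return how often each arm was chosen."""
    rng = random.Random(seed)
    n_arms = len(mean_costs)
    counts = [0] * n_arms    # N_k(c) for each arm
    q_hat = [0.0] * n_arms   # running mean of sampled costs, Q̂_k(c)
    for k in range(1, iterations + 1):
        if k <= n_arms:
            arm = k - 1      # try every arm once first
        else:
            visits = k - 1   # N_k(d): the root has been visited k - 1 times so far
            arm = min(
                range(n_arms),
                key=lambda i: q_hat[i] - exploration * math.sqrt(2.0 * math.log(visits) / counts[i]),
            )
        cost = 1.0 if rng.random() < mean_costs[arm] else 0.0
        counts[arm] += 1
        q_hat[arm] += (cost - q_hat[arm]) / counts[arm]  # incremental mean update
    return counts


costs = [0.1, 0.5, 0.9]  # hypothetical expected costs; arm 0 is optimal
counts_e1 = run_ucb1(costs, iterations=5000, exploration=1.0)
counts_e5 = run_ucb1(costs, iterations=5000, exploration=5.0)
print(counts_e1)
print(counts_e5)
```

With $E = 1$, the cheapest arm should receive the large majority of the iterations. With $E = 5$, the bonus dominates the estimates for much longer and the counts become noticeably more even, illustrating how strongly UCB1's behavior depends on the choice of $E_k(d)$.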

Asymptotic Optimality
Asymptotic Optimality of UCB1
◮ explores forever
◮ greedy in the limit
⇒ asymptotically optimal

However:
◮ there is no theoretical justification to use UCB1 for SSPs/MDPs (the MAB proof requires stationary rewards)
◮ the development of tree policies is an active research topic

Symmetric Search Tree up to depth 4
[Figure: full tree up to depth 4]

Asymmetric Search Tree of UCB1
[Figure: asymmetric search tree built by UCB1 (equal number of search nodes)]

G8.4 Summary

