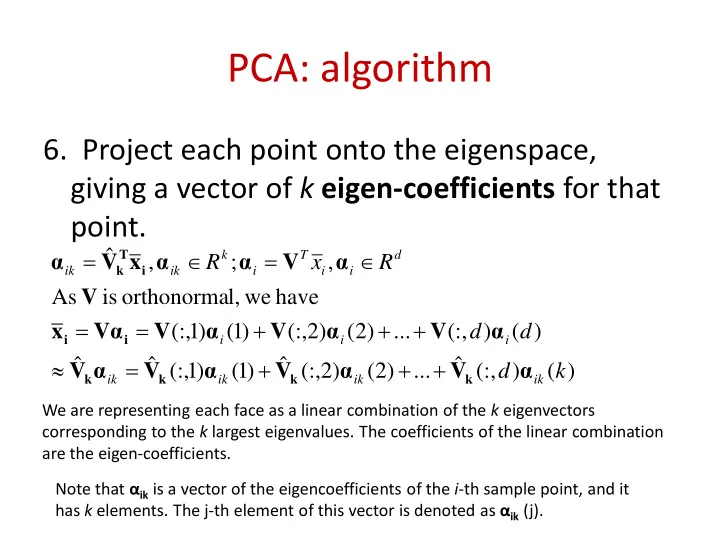

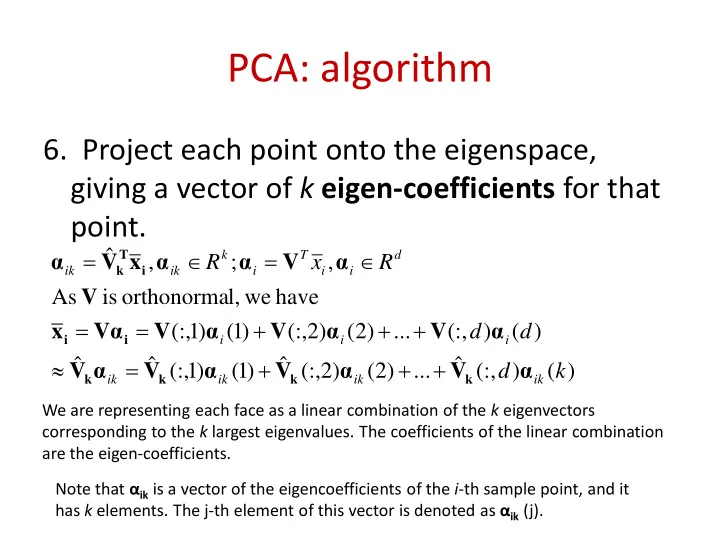

PCA: Algorithm

1. Compute the mean of the given points: $\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i$, where $x_i \in \mathbb{R}^d$ and $\bar{x} \in \mathbb{R}^d$.
2. Deduct the mean from each point: $\tilde{x}_i = x_i - \bar{x}$.
3. Compute the covariance matrix of these mean-deducted points:

   $$C = \frac{1}{N-1}\sum_{i=1}^{N}\tilde{x}_i\tilde{x}_i^T = \frac{1}{N-1}\sum_{i=1}^{N}(x_i - \bar{x})(x_i - \bar{x})^T, \quad C \in \mathbb{R}^{d\times d}$$

   Note: $C$ is a symmetric, positive-semidefinite matrix.

PCA: Algorithm (continued)

4. Find the eigenvectors of $C$: $CV = V\Lambda$, with $V \in \mathbb{R}^{d\times d}$ and $\Lambda \in \mathbb{R}^{d\times d}$, where $V$ is the matrix of eigenvectors (each column is an eigenvector) and $\Lambda$ is the diagonal matrix of eigenvalues.

   Note: because $C$ is a covariance matrix and hence symmetric, its eigenvectors can be chosen so that $V$ is orthonormal (i.e. $V^TV = VV^T = I$).

   Note: $\Lambda$ contains non-negative values (the eigenvalues) on its diagonal.
5. Extract the $k$ eigenvectors corresponding to the $k$ largest eigenvalues. This is called the extracted eigenspace: $\hat{V}_k = V(:, 1\!:\!k)$.

   There is an implicit assumption here that the first $k$ indices indeed correspond to the $k$ largest eigenvalues. If that is not true, you would need to pick the appropriate indices.

PCA: Algorithm (continued)

6. Project each mean-deducted point onto the eigenspace, giving a vector of $k$ eigen-coefficients for that point:

   $$\alpha_i = V^T\tilde{x}_i \in \mathbb{R}^d; \qquad \alpha_{ik} = \hat{V}_k^T\tilde{x}_i \in \mathbb{R}^k$$

   As $V$ is orthonormal, we have

   $$\tilde{x}_i = V\alpha_i = V(:,1)\,\alpha_i(1) + V(:,2)\,\alpha_i(2) + \dots + V(:,d)\,\alpha_i(d)$$

   $$\tilde{x}_i \approx \hat{V}_k\alpha_{ik} = \hat{V}_k(:,1)\,\alpha_{ik}(1) + \hat{V}_k(:,2)\,\alpha_{ik}(2) + \dots + \hat{V}_k(:,k)\,\alpha_{ik}(k)$$

   We are representing each point (each face, in the eigen-faces application) as a linear combination of the $k$ eigenvectors corresponding to the $k$ largest eigenvalues. The coefficients of this linear combination are the eigen-coefficients. Note that $\alpha_{ik}$ is the vector of eigen-coefficients of the $i$-th sample point; it has $k$ elements, and its $j$-th element is denoted $\alpha_{ik}(j)$.
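The six steps above can be sketched in NumPy as follows. This is a minimal illustration, not the course's reference code: the function name `pca` is hypothetical, and the points are stored one per row (shape N x d) rather than as column vectors, so the eigen-coefficients come out as rows of `alphas`. `np.linalg.eigh` is used because $C$ is symmetric; it returns eigenvalues in ascending order, so step 5 explicitly reorders them rather than relying on the implicit-ordering assumption.

```python
import numpy as np


def pca(points: np.ndarray, k: int):
    """PCA following steps 1-6; `points` has shape (N, d), one sample x_i per row."""
    n = points.shape[0]
    # Step 1: mean of the given points.
    mean = points.mean(axis=0)
    # Step 2: deduct the mean from each point.
    centered = points - mean
    # Step 3: covariance matrix C (d x d), normalized by N - 1.
    cov = centered.T @ centered / (n - 1)
    # Step 4: eigen-decomposition C V = V Lambda (eigh: symmetric input, ascending eigenvalues).
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Step 5: reorder so the largest eigenvalues come first, then keep k columns.
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    v_k = eigvecs[:, :k]
    # Step 6: eigen-coefficients alpha_ik = V_k^T (x_i - mean), one row per point.
    alphas = centered @ v_k
    return mean, v_k, eigvals, alphas


# Usage on synthetic data (variance concentrated in the first few coordinates).
rng = np.random.default_rng(0)
data = rng.normal(size=(50, 5)) @ np.diag([5.0, 3.0, 1.0, 0.5, 0.1])
mean, v_k, eigvals, alphas = pca(data, k=2)
print(v_k.shape, alphas.shape)  # (5, 2) (50, 2)
```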

PCA and Face Recognition: Eigen-faces

- Consider a database of cropped, frontal face images (which we will assume are aligned and taken under the same illumination). These are the gallery images.
- We reshape each such image (a 2D array of size H x W after cropping) to form a column vector of d = HW elements. Each image is then a vector $x_i$, as per the notation of the PCA algorithm above. We then carry out the six steps of that algorithm.
- The eigenvectors that we get in this case are called eigenfaces.
- Each eigenvector has d elements. If you reshape these eigenvectors to form images of size H x W, those images look like (filtered!) faces.
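The reshaping described above (image to vector, and eigenvector back to image) can be illustrated with a small sketch. The "gallery" here is random synthetic data standing in for real aligned face crops, and the sizes `N`, `H`, `W` are arbitrary assumptions; images are stored one per row rather than as columns. The direct d x d covariance is affordable only because d = 768 is tiny here.

```python
import numpy as np

# Synthetic stand-in for a gallery: N grayscale images of size H x W
# (random values; a real gallery would be loaded from image files).
rng = np.random.default_rng(1)
N, H, W = 20, 32, 24
gallery = rng.random((N, H, W))

# Reshape each H x W image into a vector of d = H*W elements (one row per image).
X_rows = gallery.reshape(N, H * W)
mean_face = X_rows.mean(axis=0)
centered = X_rows - mean_face

# Eigenvectors of the d x d covariance matrix, sorted by decreasing eigenvalue.
cov = centered.T @ centered / (N - 1)
eigvals, eigvecs = np.linalg.eigh(cov)
eigvecs = eigvecs[:, np.argsort(eigvals)[::-1]]

# Each eigenvector has d elements; reshape the top 5 back to H x W to view them as eigenfaces.
eigenfaces = eigvecs[:, :5].T.reshape(5, H, W)
print(eigenfaces.shape)  # (5, 32, 24)
```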

Example 1: A face database (source: http://people.ece.cornell.edu/land/courses/ece4760/FinalProjects/s2011/bjh78_caj65/bjh78_caj65/)

Top 25 eigen-faces for this database! (source: http://people.ece.cornell.edu/land/courses/ece4760/FinalProjects/s2011/bjh78_caj65/bjh78_caj65/)

One word of caution: Eigen-faces

- The algorithm described earlier is computationally infeasible for eigen-faces: it requires storing a d x d covariance matrix (where d, the number of image pixels, could be more than 10,000), and computing the eigenvectors of such a matrix is an $O(d^3)$ operation!
- We will study a modification to this algorithm that brings down the computational cost drastically.
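To make the storage claim concrete, here is a back-of-the-envelope calculation. It assumes 8-byte double-precision entries (single precision would halve the figure) and treats $d^3$ as an order-of-magnitude operation count, ignoring constant factors; the helper name `dense_matrix_bytes` is hypothetical.

```python
def dense_matrix_bytes(rows: int, cols: int, bytes_per_entry: int = 8) -> int:
    """Memory needed for a dense rows x cols matrix (8 bytes per float64 entry by default)."""
    return rows * cols * bytes_per_entry


d = 10_000  # number of pixels, e.g. a 100 x 100 image
print(f"{dense_matrix_bytes(d, d) / 1e6:.1f} MB")  # 800.0 MB for the d x d covariance matrix
print(f"{d ** 3:.1e}")  # 1.0e+12, the order of the O(d^3) eigen-decomposition cost
```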

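Eigen-faces: reducing computational complexity

- In a face database, the number of images N is typically much smaller than the number of pixels d (N << d). In such a case, the rank of C is at most N-1, so C has at most N-1 non-zero eigenvalues.
- We can write C in the following way:

  $$C = \frac{1}{N-1}\sum_{i=1}^{N}\tilde{x}_i\tilde{x}_i^T \propto XX^T, \quad \text{where } X = [\tilde{x}_1 | \tilde{x}_2 | \dots | \tilde{x}_N] \in \mathbb{R}^{d\times N}$$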
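The rank bound can be checked numerically. This sketch uses synthetic Gaussian data with far fewer points than dimensions (N = 10, d = 200 are arbitrary choices) and relies on `np.linalg.matrix_rank`, which counts singular values above a floating-point tolerance.

```python
import numpy as np

rng = np.random.default_rng(2)
N, d = 10, 200  # far fewer points than dimensions (synthetic data)
points = rng.normal(size=(N, d))

# X = [x_1 - mean | ... | x_N - mean]: a d x N matrix of mean-deducted columns.
X = (points - points.mean(axis=0)).T
C = X @ X.T / (N - 1)  # the d x d covariance matrix, proportional to X X^T

rank = np.linalg.matrix_rank(C)
print(f"rank(C) = {rank}, N - 1 = {N - 1}")
```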

Back to Eigen-faces: reducing computational complexity

- Consider the matrix $X^TX$ (size N x N) instead of $XX^T$ (size d x d). Its eigenvectors satisfy:

  $$X^TXw = \lambda w,\; w \in \mathbb{R}^N \;\Rightarrow\; XX^T(Xw) = \lambda(Xw) \quad [\text{pre-multiplying by } X]$$

  So $Xw$ is an eigenvector of $C \propto XX^T$! (For $\lambda \neq 0$, $Xw$ is non-zero, since $X^TXw = \lambda w \neq 0$.)
- Computing the eigenvectors of C in this way costs only $O(N^3)$ for the eigenvectors of $X^TX$, plus $O(dN)$ per vector $w$ for computing $Xw$, i.e. $O(dN^2)$ for all N of them: a total of $O(N^3 + dN^2)$, which is much less than $O(d^3)$ when N << d.
- Note that C has at most min(N-1, d) eigenvectors corresponding to non-zero eigenvalues (why?).
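The pre-multiplication argument can be verified numerically on synthetic data (N = 8, d = 500 are arbitrary). The check also confirms that $\|Xw\|^2 = w^TX^TXw = \lambda$ for a unit-norm $w$, which is why the vectors $Xw$ need re-normalizing afterwards.

```python
import numpy as np

rng = np.random.default_rng(3)
N, d = 8, 500
points = rng.normal(size=(N, d))
X = (points - points.mean(axis=0)).T  # d x N, mean-deducted columns

# Small N x N eigenproblem: X^T X w = lambda w (eigh returns unit-norm w, ascending lambda).
lams, W = np.linalg.eigh(X.T @ X)
lam, w = lams[-1], W[:, -1]  # largest eigenvalue and its eigenvector

# Pre-multiplying by X: (X X^T)(X w) = lambda (X w), so X w is an eigenvector of X X^T.
v = X @ w
print(np.allclose((X @ X.T) @ v, lam * v))  # True

# C = X X^T / (N - 1) has the same eigenvector, with eigenvalue lambda / (N - 1).
C = X @ X.T / (N - 1)
```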

Eigenfaces: Algorithm (N << d case)

1. Compute the mean of the given points: $\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i$, where $x_i \in \mathbb{R}^d$ and $\bar{x} \in \mathbb{R}^d$.
2. Deduct the mean from each point: $\tilde{x}_i = x_i - \bar{x}$.
3. Compute the following matrix: $L = X^TX \in \mathbb{R}^{N\times N}$, where $X = [\tilde{x}_1 | \tilde{x}_2 | \dots | \tilde{x}_N] \in \mathbb{R}^{d\times N}$.

   Note: $L$ is a symmetric, positive-semidefinite matrix.

Eigen-faces: Algorithm (N << d case, continued)

4. Find the eigenvectors of $L$: $LW = W\Gamma$, where $W$ holds the eigenvectors (one per column), $\Gamma$ is the diagonal matrix of eigenvalues, and $W^TW = I$.
5. Obtain the eigenvectors of $C$ from those of $L$: $V = XW$, with $X \in \mathbb{R}^{d\times N}$, $W \in \mathbb{R}^{N\times N}$, $V \in \mathbb{R}^{d\times N}$.
6. Unit-normalize the columns of $V$.
7. $C$ will have at most N eigenvectors corresponding to non-zero eigenvalues\*. Out of these, pick the top k (k < N) corresponding to the largest eigenvalues.

\* Actually this number is at most N-1, due to the mean subtraction; otherwise it would be at most N.
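A minimal NumPy sketch of the N << d algorithm, assuming one flattened image per row and synthetic random "faces" in place of a real database; the function name `eigenfaces_small_n` is hypothetical. One design choice differs in order from the slide: the top-k selection (step 7) is applied before normalization, so that columns of $V$ belonging to zero eigenvalues (which are zero vectors) are never divided by their zero norm.

```python
import numpy as np


def eigenfaces_small_n(points: np.ndarray, k: int):
    """Top-k eigenvectors of the covariance matrix via L = X^T X (N x N).

    points: (N, d) array with one flattened image per row (N << d assumed).
    Returns the mean, V (d x k, unit-norm columns) and the k eigenvalues of C.
    """
    n = points.shape[0]
    # Step 7 up front: at most N - 1 eigenvalues are non-zero, so require k <= N - 1.
    if not 0 < k <= n - 1:
        raise ValueError("k must satisfy 0 < k <= N - 1")
    # Steps 1-2: mean, then mean-deducted points as the columns of X (d x N).
    mean = points.mean(axis=0)
    X = (points - mean).T
    # Step 3: L = X^T X is only N x N.
    L = X.T @ X
    # Step 4: L W = W Gamma; eigh returns ascending eigenvalues, so sort descending.
    gammas, W = np.linalg.eigh(L)
    order = np.argsort(gammas)[::-1][:k]
    gammas, W = gammas[order], W[:, order]
    # Step 5: eigenvectors of C from those of L.
    V = X @ W
    # Step 6: unit-normalize; ||X w||^2 = w^T L w = gamma, so each norm is sqrt(gamma).
    V /= np.linalg.norm(V, axis=0)
    # C = X X^T / (N - 1) shares these eigenvectors, with eigenvalues gamma / (N - 1).
    return mean, V, gammas / (n - 1)


rng = np.random.default_rng(4)
faces = rng.random((12, 1000))  # synthetic stand-in: 12 flattened "images" of 1000 pixels
mean, V, eigvals = eigenfaces_small_n(faces, k=5)
print(V.shape)  # (1000, 5)
```

On this small example the full 1000 x 1000 covariance is still cheap enough to compute directly, so the result can be cross-checked against `np.cov`; for real images that direct check is exactly what the method avoids.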

Top 25 eigenfaces from the previous database.

Reconstruction of a face image using the top 1, 8, 16, 24, …, 104 eigenfaces (i.e. k increased in steps of 8 up to 104):

$$x_i = \bar{x} + V\alpha_i \approx \bar{x} + \sum_{l=1}^{k}\hat{V}_k(:,l)\,\alpha_{ik}(l)$$
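The reconstruction formula can be sketched as below, on synthetic data rather than face images (N = 30 points in d = 40 dimensions, an arbitrary choice). As k grows, the reconstruction error of a sample point cannot increase, and with all N-1 eigenvectors of non-zero eigenvalue it drops to (numerically) zero, since every mean-deducted sample lies in their span.

```python
import numpy as np

rng = np.random.default_rng(5)
N, d = 30, 40
# Synthetic data whose variance is concentrated in a few directions.
points = rng.normal(size=(N, d)) @ np.diag(np.linspace(5.0, 0.1, d))

mean = points.mean(axis=0)
centered = points - mean
eigvals, V = np.linalg.eigh(centered.T @ centered / (N - 1))
V = V[:, np.argsort(eigvals)[::-1]]  # columns sorted by decreasing eigenvalue

x = points[0]
errors = []
for k in range(1, N):  # at most N - 1 non-zero eigenvalues
    V_k = V[:, :k]
    alpha = V_k.T @ (x - mean)  # eigen-coefficients alpha_ik
    x_hat = mean + V_k @ alpha  # mean + sum_l V_k(:, l) alpha_ik(l)
    errors.append(np.linalg.norm(x - x_hat))
print([round(float(e), 3) for e in errors[::8]])  # error for k = 1, 9, 17, 25
```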

Example 2: The Yale Face database

What if both N and d are large?

- This can happen, for example, if you wanted to build an eigenspace for face images of all people in Mumbai.
- Divide people into coherent groups based on some visual attributes (e.g. gender, age group) and build a separate eigenspace for each group.
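The grouping idea can be sketched as one eigenspace per group label. Everything here is an illustrative assumption: the helper name `build_group_eigenspaces` is hypothetical, the labels "A"/"B" stand in for attributes such as age group, and the data are random. For groups with N_g << d one would compute each eigenspace with the $X^TX$ route instead of the direct covariance used here.

```python
import numpy as np


def build_group_eigenspaces(points, groups, k):
    """Hypothetical helper: one (mean, top-k eigenvectors) pair per group label.

    points: (N, d) array, one sample per row; groups: length-N array of labels.
    """
    spaces = {}
    for g in np.unique(groups):
        members = points[groups == g]
        mean = members.mean(axis=0)
        centered = members - mean
        cov = centered.T @ centered / (len(members) - 1)
        eigvals, eigvecs = np.linalg.eigh(cov)
        order = np.argsort(eigvals)[::-1]  # largest eigenvalues first
        spaces[str(g)] = (mean, eigvecs[:, order[:k]])
    return spaces


rng = np.random.default_rng(6)
points = rng.normal(size=(40, 6))
groups = np.array(["A"] * 20 + ["B"] * 20)  # hypothetical group labels
spaces = build_group_eigenspaces(points, groups, k=2)
mean_a, basis_a = spaces["A"]
print(sorted(spaces), basis_a.shape)  # ['A', 'B'] (6, 2)
```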

PCA: A closer look

- PCA has many applications apart from face/object recognition: in image processing/computer vision, statistics, econometrics, finance, agriculture, and you name it!
- Why PCA? What's special about PCA? See the next slides!

PCA: what does it do?

- It finds k mutually perpendicular directions (all passing through the mean vector) such that the original data are approximated as accurately as possible when projected onto these k directions.
- We will soon see why these k directions are eigenvectors of the covariance matrix of the data!

PCA

Look at this scatter plot of points in 2D. The points are highly spread out along the direction of the light blue line.

PCA

This is how the data would look if they were rotated so that the major axis of the ellipse (the light blue line) coincides with the Y axis. As the spread of the X coordinates is now relatively insignificant (observe the axes!), we can approximate the rotated data points by their projections onto the Y axis (i.e. by their Y coordinates alone!). This was not possible prior to rotation!
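The rotation described above can be reproduced on synthetic 2D data (the spreads 3.0 and 0.3 and the direction (1, 1) are arbitrary choices standing in for the plotted data). The eigenvectors of the covariance matrix give the new axes; one column's sign is flipped if needed so that the change of axes is a proper rotation rather than a reflection.

```python
import numpy as np

rng = np.random.default_rng(7)
# Synthetic 2D points: large spread along (1, 1)/sqrt(2), small spread along (1, -1)/sqrt(2).
t = rng.normal(scale=3.0, size=200)
s = rng.normal(scale=0.3, size=200)
points = np.column_stack([t + s, t - s]) / np.sqrt(2)

centered = points - points.mean(axis=0)
eigvals, V = np.linalg.eigh(np.cov(centered, rowvar=False))
# eigh returns ascending eigenvalues: column 0 is the minor axis, column 1 the major axis.
if np.linalg.det(V) < 0:
    V[:, 0] *= -1  # flip one axis so that V is a proper rotation (det = +1)

# Rotated coordinates: X along the minor axis, Y along the major axis.
rotated = centered @ V
print(rotated.var(axis=0))  # X variance is much smaller than Y variance

# Keep only the Y coordinates and map back: approximation by projection onto the major axis.
approx = np.outer(rotated[:, 1], V[:, 1])
print(np.mean(np.sum((centered - approx) ** 2, axis=1)))  # mean squared approximation error
```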

PCA

- Aim of PCA: find the line passing through the sample mean (i.e. $\bar{x}$), with direction $e$, such that the projections of the mean-deducted points $x_i - \bar{x}$ onto $e$ approximate them as accurately as possible.
- The projection of $x_i - \bar{x}$ onto $e$ is $a_i e$, $a_i \in \mathbb{R}$, where $a_i = e^T(x_i - \bar{x})$.
- Error of approximation $= \|a_i e - (x_i - \bar{x})\|^2$.
- Note: here $e$ is a unit vector.
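For a single point, the projection coefficient and approximation error defined above can be computed as in this sketch (the helper name `projection_error` is hypothetical). The example uses $x_i - \bar{x} = (3, 4)$ and $e = (1, 0)$, so $a_i = 3$ and the residual is $(0, -4)$, giving an error of 16.

```python
import numpy as np


def projection_error(x_i, mean, e):
    """Return (a_i, squared error) for projecting x_i - mean onto the direction e."""
    e = e / np.linalg.norm(e)  # the derivation assumes e is a unit vector
    diff = x_i - mean
    a_i = e @ diff  # a_i = e^T (x_i - mean)
    err = np.sum((a_i * e - diff) ** 2)  # ||a_i e - (x_i - mean)||^2
    return a_i, err


a_i, err = projection_error(np.array([3.0, 4.0]), np.zeros(2), np.array([1.0, 0.0]))
print(a_i, err)  # 3.0 16.0
```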

PCA

Summing up over all points, we get the sum total error of approximation:

$$
\begin{aligned}
J(e) &= \sum_{i=1}^{N}\|a_i e - (x_i - \bar{x})\|^2\\
&= \sum_{i=1}^{N} a_i^2\|e\|^2 + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2 - 2\sum_{i=1}^{N} a_i\, e^T(x_i - \bar{x})\\
&= \sum_{i=1}^{N} a_i^2 + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2 - 2\sum_{i=1}^{N} a_i\, e^T(x_i - \bar{x}) \quad (\|e\| = 1)\\
&= \sum_{i=1}^{N} a_i^2 + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2 - 2\sum_{i=1}^{N} a_i^2 \quad (a_i = e^T(x_i - \bar{x}))\\
&= -\sum_{i=1}^{N} a_i^2 + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2
\end{aligned}
$$
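The algebra above can be sanity-checked numerically: for a random unit vector $e$ and synthetic points, the directly summed error matches $-\sum_i a_i^2 + \sum_i \|x_i - \bar{x}\|^2$. The data and the random direction are arbitrary assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(8)
points = rng.normal(size=(25, 4))
centered = points - points.mean(axis=0)
e = rng.normal(size=4)
e /= np.linalg.norm(e)  # unit vector, as the derivation requires

a = centered @ e  # a_i = e^T (x_i - mean) for every i
J_direct = np.sum((np.outer(a, e) - centered) ** 2)  # sum_i ||a_i e - (x_i - mean)||^2
J_identity = -np.sum(a ** 2) + np.sum(centered ** 2)  # -sum a_i^2 + sum ||x_i - mean||^2
print(np.isclose(J_direct, J_identity))  # True
```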

PCA

$$
\begin{aligned}
J(e) &= -\sum_{i=1}^{N} a_i^2 + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2\\
&= -\sum_{i=1}^{N}\big(e^T(x_i - \bar{x})\big)^2 + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2\\
&= -\sum_{i=1}^{N} e^T(x_i - \bar{x})(x_i - \bar{x})^T e + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2\\
&= -e^T S e + \sum_{i=1}^{N}\|x_i - \bar{x}\|^2, \quad \text{where } S = (N-1)C
\end{aligned}
$$

The term $\sum_{i=1}^{N}\big(e^T(x_i - \bar{x})\big)^2$ is proportional to the variance of the data points when projected onto the direction $e$.
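Since the second term does not depend on $e$, minimizing $J(e)$ over unit vectors amounts to maximizing $e^TSe$, and a standard linear-algebra fact (the Rayleigh quotient is maximized by the leading eigenvector) says this happens at the eigenvector of $S$, equivalently of $C$, with the largest eigenvalue. The sketch below checks this numerically on synthetic data by comparing against 1000 random directions; it is an illustration, not a proof.

```python
import numpy as np

rng = np.random.default_rng(9)
points = rng.normal(size=(100, 3)) @ np.diag([4.0, 2.0, 0.5])
centered = points - points.mean(axis=0)
S = centered.T @ centered  # S = (N - 1) C
total = np.sum(centered ** 2)  # sum_i ||x_i - mean||^2


def J(e):
    """Sum total error of approximation, J(e) = -e^T S e + sum_i ||x_i - mean||^2."""
    e = e / np.linalg.norm(e)
    return -e @ S @ e + total


eigvals, V = np.linalg.eigh(S)
e_best = V[:, -1]  # eigenvector of the largest eigenvalue of S (and of C)

# No random unit direction gives a smaller error than the leading eigenvector.
trials = rng.normal(size=(1000, 3))
print(all(J(e_best) <= J(t) + 1e-9 for t in trials))  # True
```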
