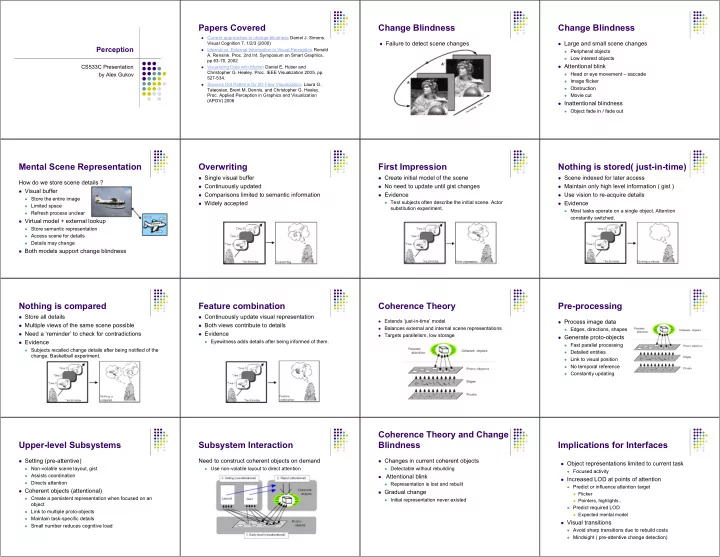

Perception. CS533C Presentation by Alex Gukov

Papers Covered
- Current approaches to change blindness. Daniel J. Simons. Visual Cognition 7, 1/2/3 (2000)
- Internal vs. External Information in Visual Perception. Ronald A. Rensink. Proc. 2nd Int. Symposium on Smart Graphics, pp. 63-70, 2002
- Visualizing Data with Motion. Daniel E. Huber and Christopher G. Healey. Proc. IEEE Visualization 2005, pp. 527-534
- Stevens Dot Patterns for 2D Flow Visualization. Laura G. Tateosian, Brent M. Dennis, and Christopher G. Healey. Proc. Applied Perception in Graphics and Visualization (APGV) 2006

Change Blindness
- Failure to detect scene changes
- Large and small scene changes
- Peripheral objects
- Low interest objects

Change Blindness
- Attentional blink
- Head or eye movement – saccade
- Image flicker
- Obstruction
- Movie cut
- Inattentional blindness
- Object fade in / fade out

Mental Scene Representation
- How do we store scene details?
- Visual buffer
  - Store the entire image
  - Limited space
  - Refresh process unclear

Overwriting
- Single visual buffer
- Continuously updated
- Comparisons limited to semantic information
- Widely accepted

First Impression
- Create initial model of the scene
- No need to update until gist changes
- Evidence
  - Test subjects often describe the initial scene. Actor substitution experiment.

Nothing is stored (just-in-time)
- Scene indexed for later access
- Maintain only high level information (gist)
- Use vision to re-acquire details
- Evidence
  - Most tasks operate on a single object. Attention constantly switched.
Virtual model + external lookup
- Store semantic representation
- Access scene for details
- Details may change
- Both models support change blindness

Nothing is compared
- Store all details
- Multiple views of the same scene possible
- Need a ‘reminder’ to check for contradictions
- Evidence
  - Subjects recalled change details after being notified of the change. Basketball experiment.

Feature combination
- Continuously update visual representation
- Both views contribute to details
- Evidence
  - Eyewitness adds details after being informed of them.

Coherence Theory
- Extends ‘just-in-time’ model
- Balances external and internal scene representations
- Targets parallelism, low storage

Pre-processing
- Process image data
  - Edges, directions, shapes
- Generate proto-objects
  - Fast parallel processing
  - Detailed entities
  - Link to visual position
  - No temporal reference
  - Constantly updating

Upper-level Subsystems
- Setting (pre-attentive)
  - Non-volatile scene layout, gist
  - Assists coordination
  - Directs attention
- Coherent objects (attentional)
  - Create a persistent representation when focused on an object
  - Link to multiple proto-objects
  - Maintain task-specific details
  - Small number reduces cognitive load

Subsystem Interaction
- Need to construct coherent objects on demand
- Use non-volatile layout to direct attention

Coherence Theory and Change Blindness
- Changes in current coherent objects
  - Detectable without rebuilding
- Attentional blink
  - Representation is lost and rebuilt
- Gradual change
  - Initial representation never existed

Implications for Interfaces
- Object representations limited to current task
  - Focused activity
  - Increased LOD at points of attention
- Predict or influence attention target
  - Flicker
  - Pointers, highlights..
- Predict required LOD
  - Expected mental model
- Visual transitions
  - Avoid sharp transitions due to rebuild costs
  - Mindsight (pre-attentive change detection)

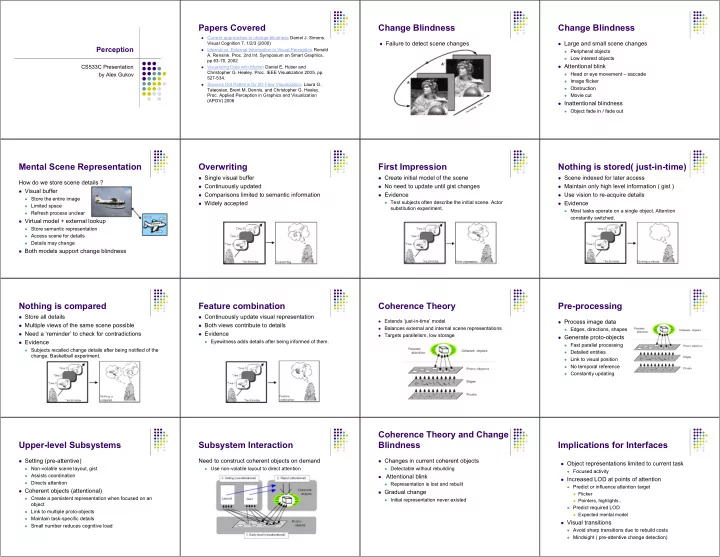

Critique
- Extremely important phenomenon
  - Will help understand fundamental perception mechanisms
- Theories lack convincing evidence
  - Experiments do not address a specific goal
  - Experiment results can be interpreted in favour of a specific theory (Basketball case)

Visualizing Data with Motion
- Multidimensional data sets more common
- Common visualization cues
  - Color
  - Texture
  - Position
  - Shape
- Cues available from motion
  - Flicker
  - Direction
  - Speed

Previous Work
- Detection
  - 2-5% frequency difference from background
  - 1°/s speed difference from the background
  - 20° direction difference from the background
  - Peripheral objects need greater separation
- Grouping
  - Oscillation pattern – must be in phase
- Notification
  - Motion encoding superior to color, shape change

Flicker Experiment
- Test detection against background flicker
- Coherency
  - In phase / out of phase with the background
- Cycle difference
- Cycle length

Flicker Experiment - Results
- Coherency
  - Out of phase trials: detection error ~50%
  - Exception for short cycles (120 ms)
  - Appeared in phase
- Cycle difference, cycle length (coherent trials)
  - High detection results for all values

Direction Experiment
- Test detection against background motion
- Absolute direction
- Direction difference

Direction Experiment - Results
- Absolute direction
  - Does not affect detection
- Direction difference
  - 15° minimum for low error rate and detection time
  - Further difference has little effect

Speed Experiment
- Test detection against background motion
- Absolute speed
- Speed difference

Speed Experiment - Results
- Absolute speed
  - Does not affect detection
- Speed difference
  - 0.42°/s minimum for low error rate and detection time
  - Further difference has little effect

Applications
- Can be used to visualize flow fields
- Original data
  - 2D slices of 3D particle positions over time (x,y,t)
- Animate keyframes

Critique
- Study
  - Grid density may affect results
  - Multiple target directions
- Technique
  - Temporal change increases cognitive load
  - Color may be hard to track over time
  - Difficult to focus on details

Stevens Model for 2D Flow Visualization

Idea
- Initial Setup
  - Start with a regular dot pattern
  - Apply global transformation
  - Superimpose two patterns
- Glass
  - Resulting pattern identifies the global transform
- Stevens
  - Individual dot pairs create perception of local direction
  - Multiple transforms can be detected

Stevens Model
- Predict perceived direction for a neighbourhood of dots
- Enumerate line segments in a small neighbourhood
- Calculate segment directions
- Penalize long segments
- Select the most common direction
- Repeat for all neighbourhoods

Stevens Model
- Segment weight
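
The Stevens Model steps above (enumerate segments in a neighbourhood, calculate their directions, penalize long segments, select the most common direction) can be sketched roughly as follows. This is an illustration, not the model from the paper: the function names, the 10° orientation bins, and the Gaussian length penalty with `sigma = radius / 2` are all assumptions made for the example. The slides only say that long segments are penalized.

```python
import math
from collections import Counter


def glass_pattern(n, spacing, dx, dy):
    """Regular n x n dot grid superimposed with a translated copy of itself."""
    base = [(i * spacing, j * spacing) for i in range(n) for j in range(n)]
    moved = [(x + dx, y + dy) for x, y in base]
    return base + moved


def perceived_direction(dots, center, radius, bin_deg=10.0):
    """Most heavily voted segment orientation near `center`, in degrees [0, 180).

    Returns the centre of the winning orientation bin, or None if the
    neighbourhood contains fewer than two distinct dots.
    """
    # Keep only dots inside the circular neighbourhood.
    near = [p for p in dots if math.dist(p, center) <= radius]
    sigma = radius / 2.0  # assumed scale of the long-segment penalty
    n_bins = int(round(180.0 / bin_deg))
    votes = Counter()
    for i in range(len(near)):
        for j in range(i + 1, len(near)):
            dx = near[j][0] - near[i][0]
            dy = near[j][1] - near[i][1]
            length = math.hypot(dx, dy)
            if length == 0.0:
                continue
            # Segments are undirected, so fold the angle into [0, 180).
            angle = math.degrees(math.atan2(dy, dx)) % 180.0
            # Assumed weighting: long segments contribute very little.
            weight = math.exp(-(length / sigma) ** 2)
            votes[int(angle // bin_deg) % n_bins] += weight
    if not votes:
        return None
    best_bin = max(votes, key=votes.get)
    return (best_bin + 0.5) * bin_deg


# Usage: a grid shifted diagonally by (2, 2) and superimposed on itself.
dots = glass_pattern(10, 10.0, 2.0, 2.0)
print(perceived_direction(dots, (51.0, 51.0), 12.0))
```

In this usage the short dot pairs created by the shift dominate the vote, so the estimate follows the direction of the global transform, which is the effect the Idea slide describes.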

Stevens Model
- Ideal neighbourhood – empirical results
  - 6-7 dots per neighbourhood
  - Density 0.0085 dots / pixel
  - Neighbourhood radius 16.19 pixels
- Implications for visualization algorithm
  - Multiple zoom levels required

2D Flow Visualization
- Stevens model estimates perceived direction
- How can we use it to visualize flow fields?
- Construct dot neighbourhoods such that the desired direction matches what is perceived
  - Desired direction: keyframe data
  - Perceived direction: Stevens model

Algorithm
- Data
  - 2D slices of 3D particle positions over a period of time
- Algorithm
  - Start with a regular grid
  - Calculate direction error around a single point
  - Move one of the neighbourhood points to decrease error
  - Repeat for all neighbourhoods

Results

Critique
- Model
  - Shouldn’t we penalize segments which are too short?
- Algorithm
  - Encodes time dimension without involving cognitive processing
  - Unexplained data clustering as a visual artifact
  - More severe if starting with a random field
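
The adjustment loop from the Algorithm slide (calculate the direction error around a point, move one neighbourhood dot to decrease it) might look something like the sketch below for a single neighbourhood. Several parts are assumptions made for the example rather than details from the slides: the greedy random-nudge strategy, the `tries` and `step` parameters, and the use of a length-weighted mean orientation as a smooth stand-in for the "most common direction" vote.

```python
import math
import random


def mean_orientation(dots, center, radius):
    """Length-weighted mean orientation (degrees in [0, 180)) near `center`."""
    near = [p for p in dots if math.dist(p, center) <= radius]
    sigma = radius / 2.0  # assumed scale of the long-segment penalty
    sx = sy = 0.0
    for i in range(len(near)):
        for j in range(i + 1, len(near)):
            dx = near[j][0] - near[i][0]
            dy = near[j][1] - near[i][1]
            length = math.hypot(dx, dy)
            if length == 0.0:
                continue
            w = math.exp(-(length / sigma) ** 2)
            # Doubling the angle makes opposite directions average together.
            theta2 = 2.0 * math.atan2(dy, dx)
            sx += w * math.cos(theta2)
            sy += w * math.sin(theta2)
    return (math.degrees(math.atan2(sy, sx)) / 2.0) % 180.0


def direction_error(perceived_deg, desired_deg):
    """Smallest angle between two undirected orientations, in [0, 90]."""
    d = abs(perceived_deg - desired_deg) % 180.0
    return min(d, 180.0 - d)


def adjust_neighbourhood(dots, center, radius, desired_deg, rng,
                         tries=200, step=1.0):
    """Nudge dots near `center` toward `desired_deg`; edits `dots` in place.

    Returns (error_before, error_after). A nudge is kept only if it lowers
    the error, so error_after never exceeds error_before.
    """
    before = direction_error(mean_orientation(dots, center, radius), desired_deg)
    best = before
    for _ in range(tries):
        inside = [k for k, p in enumerate(dots) if math.dist(p, center) <= radius]
        if not inside:
            break
        k = rng.choice(inside)
        old = dots[k]
        dots[k] = (old[0] + rng.uniform(-step, step),
                   old[1] + rng.uniform(-step, step))
        err = direction_error(mean_orientation(dots, center, radius), desired_deg)
        if err < best:
            best = err
        else:
            dots[k] = old  # revert a nudge that did not help
    return before, best


# Usage: start from a regular grid and aim for a 45 degree local direction.
grid = [(i * 10.0, j * 10.0) for i in range(6) for j in range(6)]
before, after = adjust_neighbourhood(grid, (25.0, 25.0), 12.0, 45.0,
                                     random.Random(0))
print(before, after)
```

Repeating this over overlapping neighbourhoods would need some care, because a nudge that helps one neighbourhood can hurt its neighbours. The sketch leaves that out.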

