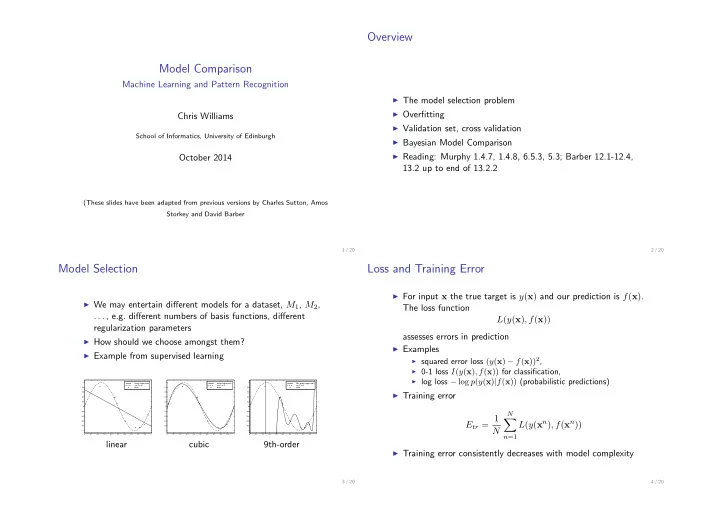

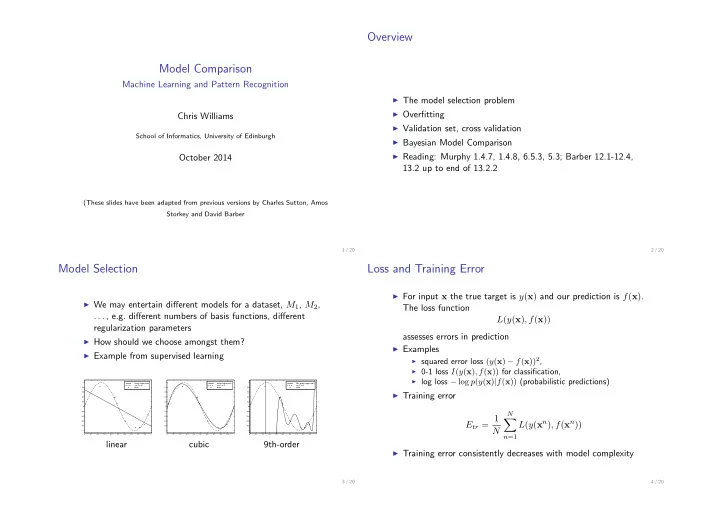

Overview Model Comparison Machine Learning and Pattern Recognition ◮ The model selection problem ◮ Overfitting Chris Williams ◮ Validation set, cross validation School of Informatics, University of Edinburgh ◮ Bayesian Model Comparison ◮ Reading: Murphy 1.4.7, 1.4.8, 6.5.3, 5.3; Barber 12.1-12.4, October 2014 13.2 up to end of 13.2.2 (These slides have been adapted from previous versions by Charles Sutton, Amos Storkey and David Barber 1 / 20 2 / 20 Model Selection Loss and Training Error ◮ For input x the true target is y ( x ) and our prediction is f ( x ) . ◮ We may entertain different models for a dataset, M 1 , M 2 , The loss function . . . , e.g. different numbers of basis functions, different L ( y ( x ) , f ( x )) regularization parameters assesses errors in prediction ◮ How should we choose amongst them? ◮ Examples ◮ Example from supervised learning ◮ squared error loss ( y ( x ) − f ( x )) 2 , ◮ 0-1 loss I ( y ( x ) , f ( x )) for classification, ◮ log loss − log p ( y ( x ) | f ( x )) (probabilistic predictions) 1 1 1 linear regression cubic regression 9th−order regression sin(2 π x) sin(2 π x) sin(2 π x) 0.8 data 0.8 data 0.8 data ◮ Training error 0.6 0.6 0.6 0.4 0.4 0.4 0.2 0.2 0.2 0 0 0 −0.2 −0.2 −0.2 N E tr = 1 −0.4 −0.4 −0.4 � L ( y ( x n ) , f ( x n )) −0.6 −0.6 −0.6 −0.8 −0.8 −0.8 N −1 −1 −1 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 n =1 linear cubic 9th-order ◮ Training error consistently decreases with model complexity 3 / 20 4 / 20

Overfitting Validation Set ◮ Partition the available data into two: a training set (for fitting the model), and a validation set (aka hold-out set) for ◮ Generalization (or test) error assessing performance � ◮ Estimate the generalization error with E gen = L ( y ( x ) , f ( x )) p ( x , y ) d x dy V E val = 1 ◮ Overfitting (Mitchell 1997, p. 67) � L ( y ( x v ) , f ( x v )) V A hypothesis f is said to overfit the data if there exists some v =1 alternative hypothesis f ′ such that f has a smaller training where we sum over cases in the validation set error than f ′ , but f ′ has a smaller generalization error than f . ◮ Unbiased estimator of the generalization error ◮ Suggested split: 70% training, 30% validation 5 / 20 6 / 20 Cross Validation Cross Validation: Example ◮ Split the data into K pieces (folds) ln lambda −20.135 ln lambda −8.571 ◮ Train on K − 1 , test on the remaining fold 20 20 15 ◮ Cycle through, using each fold for testing once 15 10 10 ◮ Uses all data for testing, cf. the hold-out method 5 5 0 0 −5 −5 −10 −10 −15 0 5 10 15 20 0 5 10 15 20 Figure credit: Murphy Fig 7.7 ◮ Degree 14 polynomial with N = 21 datapoints ◮ Regularization term λ w T w ◮ How to choose λ ? Figure credit: Murphy Fig 1.21(b) 7 / 20 8 / 20

Bayesian Model Comparison mean squared error 25 0.9 ◮ Have a set of different possible models train mse negative log marg. likelihood CV estimate of MSE test mse 0.8 20 0.7 M i ≡ p ( D| θ, M i ) and p ( θ | M i ) 15 0.6 for i = 1 , . . . , K 0.5 10 0.4 ◮ Each model is set of distributions that have associated 0.3 5 parameters. Usually some models are more complex (have 0.2 more parameters) than others 0 0.1 −25 −20 −15 −10 −5 0 5 −20 −15 −10 −5 0 5 log lambda log lambda ◮ Bayesian way: Have a prior p ( M i ) over the set of models M i , then compute posterior p ( M i |D ) using Bayes’ rule Figure credit: Murphy Fig 7.7 ◮ Left-hand end of x -axis ≡ low regularization p ( M i ) p ( D| M i ) p ( M i |D ) = ◮ Notice that training error increases monotonically with λ � K j =1 p ( M j ) p ( D| M j ) ◮ Miminum of test error is for an intermediate value of λ ◮ ◮ Both cross validation and a Bayesian procudure (coming � p ( D| M ) = p ( D| θ, M ) p ( θ | M ) dθ soon) choose regularized models This is called the marginal likelihood or the evidence . 9 / 20 10 / 20 Comparing models Computing the Marginal Likelihood Bayes factor = P ( D| M 1 ) P ( D| M 2 ) ◮ Exact for conjugate exponential models, e.g. beta-binomial, Dirichlet-multinomial, Gaussian-Gaussian (for fixed variances) ◮ E.g. for Dirichlet-multinomial P ( M 2 |D ) = P ( M 1 ) P ( M 1 |D ) P ( M 2 ) .P ( D| M 1 ) r P ( D| M 2 ) Γ( α ) Γ( α i + N i ) � p ( D| M ) = Posterior ratio = Prior ratio × Bayes factor Γ( α + N ) Γ( α i ) i =1 ◮ Also exact for (generalized) linear regression (for fixed prior Strength of evidence from Bayes factor (Kass, 1995; after Jeffreys, 1961) and noise variances) ◮ Otherwise various approximations (analytic and Monte Carlo) 1 to 3 Not worth more than a bare mention 3 to 20 Positive are possible 20 to 150 Strong > 150 Very strong 11 / 20 12 / 20

BIC approximation ◮ Why Bayesian model selection? Why not compute best fit parameters and compare? ◮ More parameters=better fit to data. ML: bigger is better. ◮ But might be overfitting: only these parameters work. Many θ ) − dof (ˆ θ ) BIC = log p ( D| ˆ log N others don’t. 2 ◮ Bayesian information criterion (Schwarz, 1978) ◮ ˆ θ is MLE ◮ dof (ˆ θ ) is the degrees of freedom in the model ( ∼ number of parameters in the model) ◮ BIC penalizes ML score by a penalty term ◮ BIC is quite a crude approximation to the marginal likelihood ◮ Prefer models that are unlikely to ‘accidentally’ explain the data. 13 / 20 14 / 20 Binomial Example Binomial Example Example You are an auditor of a firm. You receive details about the Example sales that a particular salesman is making. He attempts to make 4 sales a day to independent companies. You receive a list Data: 1 2 2 4 1 4 3 2 4 1 3 3 2 4 3 3 2 3 3 of the number of sales by this agent made on a number of days. ◮ M = 1 - From P 1 ( x | p ) a binomial distribution Binomial( 4 ). Explain why you would expect the total number of sales to be binomially distributed. Prior on p is uniform. If the agent was making the sales numbers up as part of a ◮ M = 2 - From P 2 ( x ) a uniform distribution Uniform(0,. . . ,4). fraud, you might expect the agent (as he is a bit dim) to choose ◮ Discuss what you would do? the number of sales at random from a uniform distribution. ◮ P ( M = 1) = 0 . 8 . You are aware of the fraud possibility, and you understand there is something like a 1 / 5 chance this salesman is involved. Given daily sales counts of 1 2 2 4 1 4 3 2 4 1 3 3 2 4 3 3 2 3 3, do you think the salesman is lying? 15 / 20 16 / 20

Binomial Example Linear Regression Example d=1, logev=−18.593, EB d=3, logev=−21.718, EB 70 300 250 60 Example 200 50 Data: 1 2 2 4 1 4 3 2 4 1 3 3 2 4 3 3 2 3 3 150 40 100 30 50 ◮ M = 1 - From P 1 ( x | p ) a binomial distribution Binomial( 4 ). 20 0 Prior on p is uniform. 10 −50 0 −100 ◮ M = 2 - From P 2 ( x ) a uniform distribution Uniform(0,. . . ,4). −10 −150 ◮ P ( M = 1) = 0 . 8 . −200 −20 −2 0 2 4 6 8 10 12 −2 0 2 4 6 8 10 12 N=5, method=EB d=2, logev=−20.218, EB 80 1 � P ( D|M = 1) = dp P 1 ( D| p ) P ( p ) , P ( D|M = 2) = P 2 ( D ) 60 0.8 40 P(M|D) 0.6 20 P ( D|M ) P ( M ) P ( M|D ) = 0 0.4 P ( D|M = 1) P ( M = 1) + P ( D|M = 2) P ( M = 2) −20 ◮ Left as an exercise! (see tutorial) 0.2 −40 −60 0 1 2 3 M −80 −2 0 2 4 6 8 10 12 17 / 20 18 / 20 Summary d=3, logev=−107.410, EB d=1, logev=−106.110, EB 70 100 60 80 50 60 40 30 40 20 20 10 0 ◮ Training and test error, overfitting 0 ◮ Validation set, cross validation −10 −20 −2 0 2 4 6 8 10 12 −2 0 2 4 6 8 10 12 N=30, method=EB d=2, logev=−103.025, EB 80 ◮ Bayesian Model Comparison 1 70 0.8 60 50 P(M|D) 0.6 40 0.4 30 20 0.2 10 0 0 1 2 3 −10 M −2 0 2 4 6 8 10 12 19 / 20 20 / 20

Recommend

More recommend