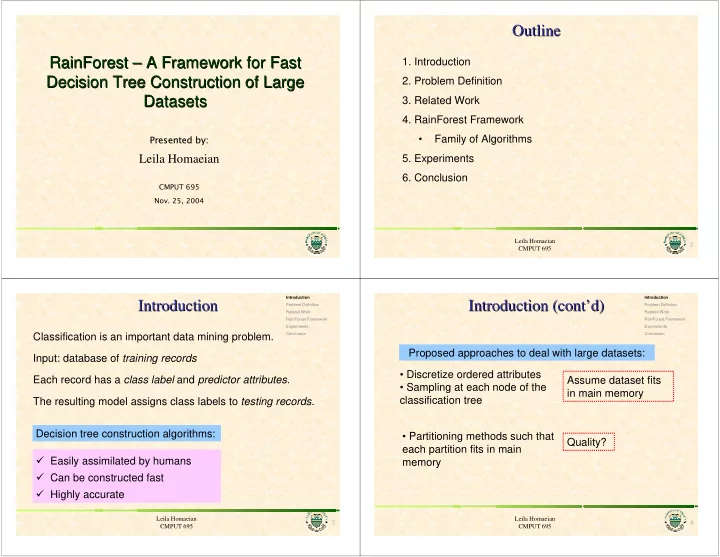

RainForest - A Framework for Fast Decision Tree Construction of Large Datasets
Presented by: Leila Homaeian
CMPUT 695, Nov. 25, 2004

Outline
1. Introduction
2. Problem Definition
3. Related Work
4. RainForest Framework
   • Family of Algorithms
5. Experiments
6. Conclusion

Introduction
Classification is an important data mining problem.
• Input: a database of training records.
• Each record has a class label and predictor attributes.
• The resulting model assigns class labels to testing records.
Decision tree construction algorithms:
• Easily assimilated by humans
• Can be constructed fast
• Highly accurate
Assumption: the dataset fits in main memory.

Introduction (cont’d)
Proposed approaches to deal with large datasets:
• Discretize ordered attributes
• Sampling at each node of the classification tree
• Partitioning methods such that each partition fits in main memory
Quality of the resulting tree?

Introduction (cont’d)
• The RainForest framework scales up existing decision tree construction algorithms.
• Its data access algorithms scale with the size of the database, adapt to the available main memory, and are not restricted to a specific classification algorithm.
• Applying RainForest to an existing algorithm results in a scalable version of that algorithm without modifying its result.

Problem Definition
[Figure: decision tree. Outlook? sunny -> Humidity? (high -> N, normal -> P); overcast -> P; rain -> Windy? (true -> N, false -> P)]
For each internal node n:
• Splitting attribute
• Set of predicates
• Combined information of splitting attribute and splitting predicates: the splitting criteria of n, denoted crit(n).

Problem Definition (cont’d)
• Family of a node: F(n), the training records that reach n.
• How to control the size of the classification tree? Bottom-up or top-down; an orthogonal issue.

Related Work
The literature survey shows that almost all previous approaches do not scale to large datasets.
Sprint [SAM96] works for large databases:
• Builds classification trees with binary splits
• Uses the gini index to decide on the splitting criteria
• Uses Minimum Description Length pruning (no test sample is needed)

[SAM96] J. Shafer, R. Agrawal, and M. Mehta. SPRINT: A scalable parallel classifier for data mining. In Proc. of VLDB, 1996.
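The gini index that Sprint uses to compare candidate binary splits can be sketched as follows. This is a minimal illustration, not Sprint's implementation: the function names `gini` and `gini_split` are assumptions made here, and the formulas are the standard definitions, gini(S) = 1 - Σ p_j² over the class frequencies p_j, and the size-weighted average of the two sides for a binary split.

```python
from collections import Counter


def gini(labels):
    """Gini index of a collection of class labels: 1 - sum_j p_j^2."""
    total = len(labels)
    if total == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / total) ** 2 for c in counts.values())


def gini_split(left, right):
    """Size-weighted gini index of a binary split into `left` and `right`.

    Assumes at least one of the two sides is non-empty.
    """
    n = len(left) + len(right)
    return (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
```

A lower `gini_split` value indicates a purer split, so a split that separates the classes perfectly scores 0.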

Related Work (cont’d)
• To decide on the splitting attribute at a tree node n, Sprint needs to access F(n) for each ordered attribute in sorted order.
• It creates an attribute list for each attribute.

| rid | Humidity | Class | rid | Temperature | Class |
|-----|----------|-------|-----|-------------|-------|
| 1 | High | N | 1 | Hot | N |
| 2 | High | N | 2 | Hot | N |
| 3 | High | P | 3 | Hot | P |
| 4 | High | P | 13 | Hot | P |
| 8 | High | N | 4 | Mild | P |
| 12 | High | P | 8 | Mild | N |
| 14 | High | N | 10 | Mild | P |
| 5 | Normal | P | 11 | Mild | P |
| 6 | Normal | N | 12 | Mild | P |
| 7 | Normal | P | 14 | Mild | N |
| 9 | Normal | P | 5 | Cool | P |
| 10 | Normal | P | 6 | Cool | N |
| 11 | Normal | P | 7 | Cool | P |
| 13 | Normal | P | 9 | Cool | P |

A costly hash join is needed to distribute the family of a node among its children.

RainForest Framework
Most decision tree algorithms (C4.5, CART, CHAID, FACT, ID3, SLIQ, Sprint, and Quest)
• consider every attribute individually
• need the distribution of class labels for each distinct value of an attribute to decide on the splitting criteria.

RainForest Framework (cont’d)
RainForest refines this generic schema.
[Figure: decision tree on Outlook, Humidity, and Windy]
• The AVC-set (Attribute-Value, Classlabel) of a predictor attribute a at a node n is the projection of F(n) onto a and the class label, whereby counts of individual class labels are aggregated.
• The AVC-group of a node n is the set of all AVC-sets at n.
• The size of the AVC-set of an attribute a at node n depends on the number of distinct values of a and the number of class labels in F(n).

AVC-set of attribute Outlook

| Outlook | P | N |
|---------|---|---|
| Sunny | 2 | 3 |
| Overcast | 4 | 0 |
| Rainy | 3 | 2 |

AVC-set of attribute Humidity

| Humidity | P | N |
|----------|---|---|
| High | 0 | 3 |
| Normal | 2 | 0 |
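The AVC-set definition can be made concrete with a short sketch that aggregates class counts per attribute value in a single pass over a family. The records are taken from the Humidity and Temperature attribute lists shown for Sprint; the Outlook value of each rid is not given in the slides, so Outlook is left out. The names `FAMILY`, `avc_set`, and `avc_group` are assumptions made for this illustration.

```python
from collections import defaultdict

# (rid, Humidity, Temperature, Class), copied from the two attribute lists.
ROWS = [
    (1, "High", "Hot", "N"), (2, "High", "Hot", "N"),
    (3, "High", "Hot", "P"), (4, "High", "Mild", "P"),
    (5, "Normal", "Cool", "P"), (6, "Normal", "Cool", "N"),
    (7, "Normal", "Cool", "P"), (8, "High", "Mild", "N"),
    (9, "Normal", "Cool", "P"), (10, "Normal", "Mild", "P"),
    (11, "Normal", "Mild", "P"), (12, "High", "Mild", "P"),
    (13, "Normal", "Hot", "P"), (14, "High", "Mild", "N"),
]
FAMILY = [
    {"rid": rid, "Humidity": h, "Temperature": t, "Class": c}
    for rid, h, t, c in ROWS
]


def avc_set(family, attribute):
    """Project the family onto (attribute, Class) and count class labels."""
    counts = defaultdict(lambda: defaultdict(int))
    for record in family:
        counts[record[attribute]][record["Class"]] += 1
    return {value: dict(by_class) for value, by_class in counts.items()}


def avc_group(family, attributes):
    """The AVC-group of a node: one AVC-set per predictor attribute."""
    return {a: avc_set(family, a) for a in attributes}
```

Called on all 14 records, `avc_set(FAMILY, "Humidity")` gives the root-level counts (High: 3 P and 4 N; Normal: 6 P and 1 N). The Humidity table above covers only 5 records, which matches the Sunny row of the Outlook table, so it presumably describes the sunny child node rather than the root.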

RainForest Framework (cont’d)
Depending on the amount of main memory available, three cases may arise:
1. The AVC-group of the root node fits in main memory.
2. Each individual AVC-set of the root node fits in main memory, but the AVC-group of the root does not.
3. None of the individual AVC-sets of the root node fits in main memory.
The proposed algorithms RF-Write, RF-Read, and RF-Hybrid deal with case 1, and RF-Vertical deals with case 2.

RainForest Framework (cont’d)
In the RainForest family of algorithms, the following steps are carried out for each node n:
1. AVC-group construction
2. Choose splitting attribute and predicate
3. Partition F(n) across the children nodes

RainForest Framework
• RF-Write
• RF-Read
• RF-Hybrid
• RF-Vertical
• AVC-Group Size Estimation

RF-Write
• First, the database is scanned to build the AVC-group of the root node r.
• Then the AVC-group is passed to the CL (the classification algorithm being scaled by RainForest) to compute crit(r).
• The children are allocated, and another scan is made over the database to partition it across the children of r.
• RF-Write is applied to each partition recursively.
At each level of the tree, the families belonging to that level are read twice and written once.
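The RF-Write steps can be sketched as an in-memory simulation. This is only an illustration under stated assumptions: Python lists stand in for the database and for the partitions that RF-Write would write to disk, and `gini_cl` is a hypothetical stand-in for the CL that picks a multiway split by weighted gini index (Sprint itself uses binary splits). All function names are invented for this sketch.

```python
from collections import Counter, defaultdict


def build_avc_group(family, attributes):
    """Scan 1: one pass over F(n), counting class labels per attribute value."""
    group = {a: defaultdict(lambda: defaultdict(int)) for a in attributes}
    for record in family:
        for a in attributes:
            group[a][record[a]][record["Class"]] += 1
    return group


def gini_cl(avc_group):
    """Stand-in CL: return the attribute with the lowest weighted gini,
    or None if no attribute has at least two distinct values."""
    best_attr, best_score = None, None
    for attr, avc_set in avc_group.items():
        if len(avc_set) < 2:
            continue  # a single value cannot split the family
        total = sum(sum(c.values()) for c in avc_set.values())
        score = 0.0
        for class_counts in avc_set.values():
            size = sum(class_counts.values())
            impurity = 1.0 - sum((c / size) ** 2 for c in class_counts.values())
            score += size / total * impurity
        if best_score is None or score < best_score:
            best_attr, best_score = attr, score
    return best_attr


def rf_write(family, attributes, cl=gini_cl):
    """Build a tree: AVC-group scan, CL decision, partitioning scan, recursion."""
    if not family:
        return {"leaf": None}
    majority = Counter(r["Class"] for r in family).most_common(1)[0][0]
    if len({r["Class"] for r in family}) == 1 or not attributes:
        return {"leaf": majority}
    group = build_avc_group(family, attributes)   # first read of F(n)
    split_attr = cl(group)                        # CL computes crit(n)
    if split_attr is None:
        return {"leaf": majority}
    partitions = defaultdict(list)                # second read, one write
    for record in family:
        partitions[record[split_attr]].append(record)
    remaining = [a for a in attributes if a != split_attr]
    return {
        "split": split_attr,
        "children": {v: rf_write(p, remaining, cl) for v, p in partitions.items()},
    }
```

Each call reads its family once to build the AVC-group and once more to partition it, which mirrors the "read twice and written once" cost per tree level stated above.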
