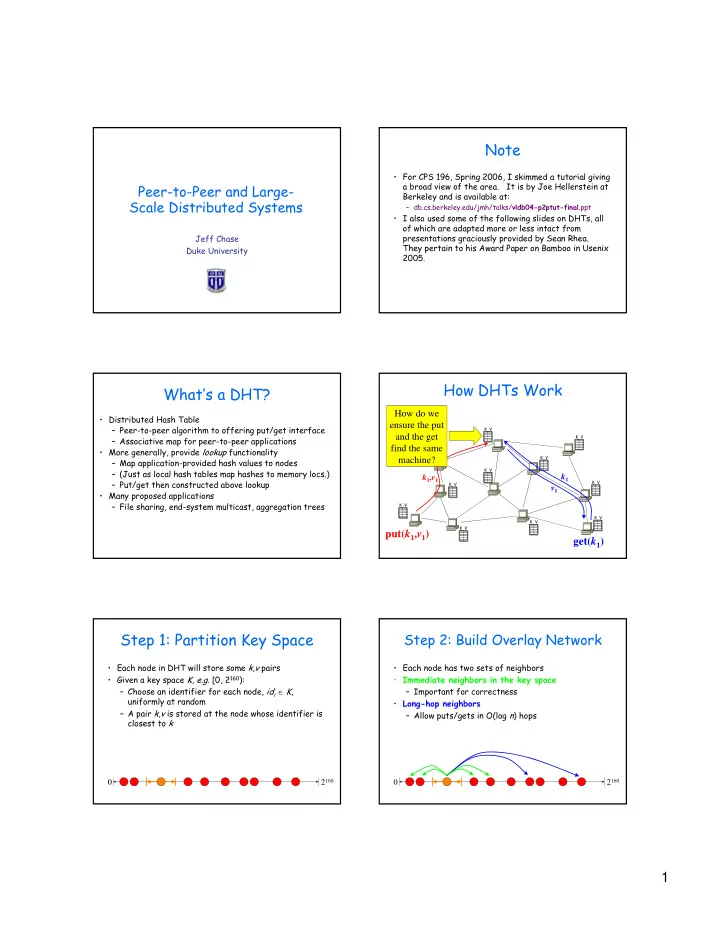

Peer-to-Peer and Large-Scale Distributed Systems
Jeff Chase
Duke University

Note
• For CPS 196, Spring 2006, I skimmed a tutorial giving a broad view of the area. It is by Joe Hellerstein at Berkeley and is available at:
  – db.cs.berkeley.edu/jmh/talks/vldb04-p2ptut-final.ppt
• I also used some of the following slides on DHTs, all of which are adapted more or less intact from presentations graciously provided by Sean Rhea. They pertain to his Award Paper on Bamboo in Usenix 2005.

How DHTs Work
How do we ensure the put and the get find the same machine?
[Figure: a set of nodes, each holding key/value (K V) pairs. put(k1, v1) stores the pair k1, v1 on one node; get(k1) must reach that same node to return v1.]

What’s a DHT?
• Distributed Hash Table
  – Peer-to-peer algorithm offering a put/get interface
  – Associative map for peer-to-peer applications
• More generally, provide lookup functionality
  – Map application-provided hash values to nodes
  – (Just as local hash tables map hashes to memory locations.)
  – Put/get then constructed above lookup
• Many proposed applications
  – File sharing, end-system multicast, aggregation trees

Step 1: Partition Key Space
• Each node in DHT will store some k, v pairs
• Given a key space K, e.g. [0, 2^160):
  – Choose an identifier for each node, id_i ∈ K, uniformly at random
  – A pair k, v is stored at the node whose identifier is closest to k
[Figure: node identifiers placed on the key space from 0 to 2^160.]

Step 2: Build Overlay Network
• Each node has two sets of neighbors
• Immediate neighbors in the key space
  – Important for correctness
• Long-hop neighbors
  – Allow puts/gets in O(log n) hops
[Figure: immediate and long-hop neighbor links drawn over the key space from 0 to 2^160.]
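The partitioning step above can be sketched in a few lines of Python. This is a minimal single-process illustration, not the algorithm of any particular DHT: the names `ToyDHT`, `key_id`, `ring_distance`, and `responsible_node` are hypothetical, SHA-1 is assumed only because it yields 160-bit values, and "closest" is assumed to wrap around the ends of the key space, which the slides do not state.

```python
import hashlib
import random

KEY_BITS = 160
KEY_SPACE = 1 << KEY_BITS  # identifiers live in [0, 2^160)


def key_id(key: str) -> int:
    """Hash an application key into the key space (SHA-1 yields 160 bits)."""
    return int.from_bytes(hashlib.sha1(key.encode("utf-8")).digest(), "big")


def ring_distance(a: int, b: int) -> int:
    """Distance between two identifiers, assuming the key space wraps around."""
    d = abs(a - b)
    return min(d, KEY_SPACE - d)


def responsible_node(node_ids, k: int) -> int:
    """Return the node identifier closest to k."""
    return min(node_ids, key=lambda n: ring_distance(n, k))


class ToyDHT:
    """All 'nodes' live in one process; each node is just a dict of pairs."""

    def __init__(self, num_nodes: int, seed: int = 0):
        rng = random.Random(seed)
        # Node identifiers are chosen uniformly at random from the key space.
        self.stores = {rng.randrange(KEY_SPACE): {} for _ in range(num_nodes)}

    def put(self, key: str, value) -> None:
        node = responsible_node(self.stores, key_id(key))
        self.stores[node][key] = value

    def get(self, key: str):
        node = responsible_node(self.stores, key_id(key))
        return self.stores[node].get(key)
```

Because `put` and `get` apply the same hash and the same closest-node rule, they land on the same node as long as the set of nodes does not change in between. Handling that change is the churn problem discussed later in the slides.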

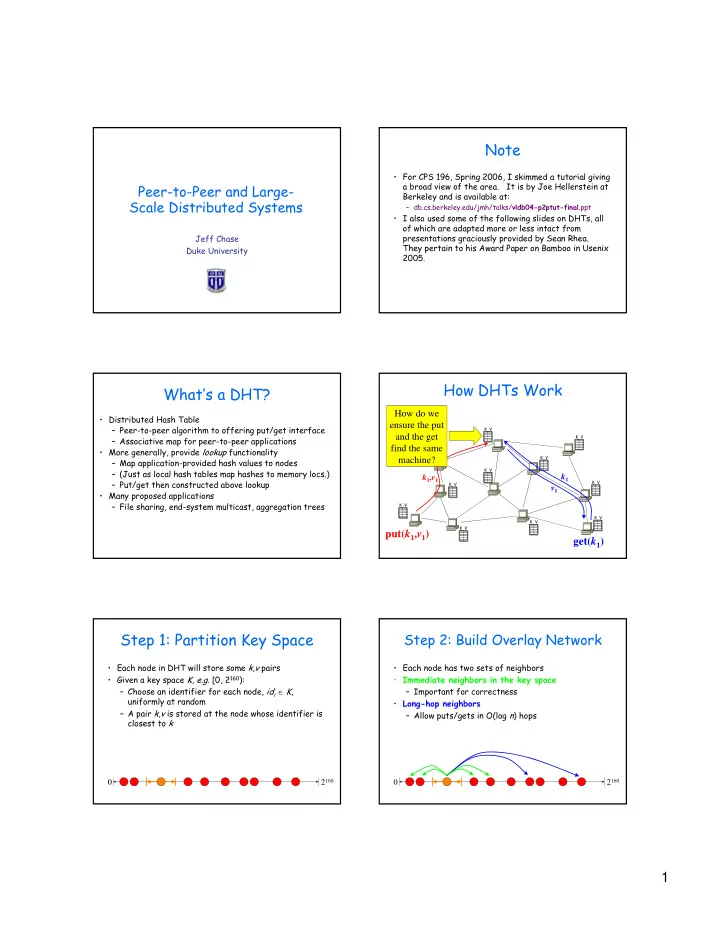

How Does Lookup Work?
• Assign IDs to nodes
  – Map hash values to node with closest ID
• Leaf set is successors and predecessors
  – All that’s needed for correctness
• Routing table matches successively longer prefixes
  – Allows efficient lookups
[Figure: a lookup travels from the source through nodes whose IDs begin 111…, 0…, 110…, and 10… toward the lookup ID; the response returns to the source.]

Step 3: Route Puts/Gets Thru Overlay
• Route greedily, always making progress
[Figure: get(k) routed hop by hop toward k on the key space from 0 to 2^160.]

How Bad is Churn in Real Systems?
[Figure: timeline of a node arriving and departing repeatedly. Session time is one arrive-to-depart interval; lifetime spans all of the node's sessions.]
An hour is an incredibly short MTTF!

| Authors | Systems Observed | Session Time |
|---------|-------------------|--------------------|
| SGG02 | Gnutella, Napster | 50% < 60 minutes |
| CLL02 | Gnutella, Napster | 31% < 10 minutes |
| SW02 | FastTrack | 50% < 1 minute |
| BSV03 | Overnet | 50% < 60 minutes |
| GDS03 | Kazaa | 50% < 2.4 minutes |

Note on CPS 196, Spring 2006
• We did not cover any of the following material on managing DHTs under churn.

Routing Around Failures
• Under churn, neighbors may have failed
• To detect failures, acknowledge each hop
• If we don’t receive an ACK, resend through different neighbor
[Figure: a message routed toward k on the key space from 0 to 2^160. Each hop is ACKed; when an ACK times out, the sender resends through a different neighbor.]
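Greedy routing over an overlay can be sketched as follows. This is an illustration under stated assumptions, not the routing of Bamboo or Pastry: for brevity the long-hop neighbors are Chord-style "fingers" (the first node at or after id + 2^i) rather than the prefix-matching routing table the slides describe, the key space is assumed to wrap around, and `build_overlay` and `route` are hypothetical names.

```python
import bisect

KEY_BITS = 160
KEY_SPACE = 1 << KEY_BITS


def ring_distance(a: int, b: int) -> int:
    """Distance between identifiers, assuming the key space wraps around."""
    d = abs(a - b)
    return min(d, KEY_SPACE - d)


def build_overlay(node_ids):
    """Give every node its immediate neighbors plus long-hop neighbors."""
    ring = sorted(node_ids)
    overlay = {}
    for i, node in enumerate(ring):
        # Immediate predecessor and successor: needed for correctness.
        neighbors = {ring[i - 1], ring[(i + 1) % len(ring)]}
        # Long-hop neighbors (assumed Chord-style): needed only for speed.
        for bit in range(KEY_BITS):
            target = (node + (1 << bit)) % KEY_SPACE
            j = bisect.bisect_left(ring, target) % len(ring)
            neighbors.add(ring[j])
        neighbors.discard(node)
        overlay[node] = neighbors
    return overlay


def route(overlay, source: int, k: int):
    """Forward to the neighbor closest to k until no neighbor is closer."""
    path = [source]
    current = source
    while True:
        best = min(overlay[current],
                   key=lambda n: ring_distance(n, k),
                   default=current)
        if ring_distance(best, k) >= ring_distance(current, k):
            return path  # current is the closest node this walk can reach
        current = best
        path.append(current)
```

Every hop strictly reduces the distance to k, so the walk terminates. The immediate neighbors alone guarantee it ends at the node closest to k (barring exact ties); the long-hop neighbors only shorten the path.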

Computing Good Timeouts
• Must compute timeouts carefully
  – If too long, increase put/get latency
  – If too short, get message explosion
• Chord errs on the side of caution
  – Very stable, but gives long lookup latencies
[Figure: timeouts firing on hops of a lookup for k on the key space from 0 to 2^160.]

Calculating Good Timeouts
• Use TCP-style timers
  – Keep past history of latencies
  – Use this to compute timeouts for new requests
• Works fine for recursive lookups
  – Only talk to neighbors, so history small, current
• In iterative lookups, source directs entire lookup
  – Must potentially have good timeout for any node
[Figure: recursive lookup versus iterative lookup.]

Recovering From Failures
• Can’t route around failures forever
  – Will eventually run out of neighbors
• Must also find new nodes as they join
  – Especially important if they’re our immediate predecessors or successors
[Figure: a new node joins on the key space from 0 to 2^160, splitting a neighbor's old responsibility; the new node takes over part of that range as its new responsibility.]

Recovering From Failures
• Obvious algorithm: reactive recovery
  – When a node stops sending acknowledgements, notify other neighbors of potential replacements
  – Similar techniques for arrival of new nodes
[Figure: nodes A, B, C, and D on the key space from 0 to 2^160.]
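A TCP-style timer of the kind mentioned above can be sketched as below. The smoothing constants and the "mean plus four deviations" formula follow the standard TCP retransmission-timer computation; the class name `TimeoutEstimator`, the 1-second initial timeout, and the omission of TCP's minimum timeout and clock-granularity terms are assumptions made for this illustration.

```python
class TimeoutEstimator:
    """Keeps a smoothed latency history for one neighbor."""

    ALPHA = 1 / 8  # weight of a new sample in the smoothed latency
    BETA = 1 / 4   # weight of a new sample in the smoothed deviation

    def __init__(self, initial_timeout: float = 1.0):
        self.srtt = None      # smoothed round-trip time, in seconds
        self.rttvar = None    # smoothed deviation of the round-trip time
        self.initial_timeout = initial_timeout  # assumed default

    def observe(self, rtt: float) -> None:
        """Fold one measured request-to-ACK latency into the history."""
        if self.srtt is None:
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:
            # Update the deviation first, using the old smoothed latency.
            self.rttvar = ((1 - self.BETA) * self.rttvar
                           + self.BETA * abs(self.srtt - rtt))
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt

    def timeout(self) -> float:
        """Timeout to use for the next request to this neighbor."""
        if self.srtt is None:
            return self.initial_timeout
        return self.srtt + 4 * self.rttvar
```

In a recursive lookup a node would keep one such estimator per neighbor, so the history stays small and fresh. In an iterative lookup the source may contact any node, so it often has no history for the node it is about to contact and must fall back on a default.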

The Problem with Reactive Recovery
• What if B is alive, but network is congested?
  – C still perceives a failure due to dropped ACKs
  – C starts recovery, further congesting network
  – More ACKs likely to be dropped
  – Creates a positive feedback cycle
• This was the problem with Pastry
  – Combined with poor congestion control, causes network to partition under heavy churn
[Figure: nodes A, B, C, and D on the key space from 0 to 2^160. C sends the recovery messages "B failed, use D" and "B failed, use A" to its other neighbors.]

Periodic Recovery
• Every period, each node sends its neighbor list to each of its neighbors
  – Breaks feedback loop
  – Converges in logarithmic number of periods
[Figure: nodes A, B, C, and D on the key space from 0 to 2^160. A node announces "my neighbors are A, B, D, and E" to each of its neighbors.]
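One period of this exchange can be sketched as below. This is a toy, synchronous model and not Bamboo's protocol: the names `trim` and `run_period` are hypothetical, every node is assumed to stay alive, each node is assumed to keep only the `half` nearest successors and `half` nearest predecessors, and removing dead neighbors (which would need the timeouts discussed earlier) is left out. The sketch shows the mechanism only; it does not demonstrate the logarithmic convergence bound.

```python
def trim(node, candidates, half, space):
    """Keep the `half` nearest successors and `half` nearest predecessors."""
    others = set(candidates) - {node}
    successors = sorted(others, key=lambda n: (n - node) % space)[:half]
    predecessors = sorted(others, key=lambda n: (node - n) % space)[:half]
    return set(successors) | set(predecessors)


def run_period(neighbors, half, space):
    """Each node sends its neighbor list to each of its neighbors, once.

    `neighbors` maps a node ID to the set of node IDs it currently knows.
    Messages carry the lists as they stood at the start of the period.
    """
    inbox = {node: set() for node in neighbors}
    for sender, known in neighbors.items():
        for receiver in known:
            # The receiver learns the sender's list and the sender itself.
            inbox[receiver] |= known | {sender}
    return {node: trim(node, known | inbox[node], half, space)
            for node, known in neighbors.items()}
```

The traffic each period is fixed by the size of the neighbor lists and does not grow when ACKs are dropped, which is why this approach avoids the positive feedback cycle of reactive recovery. As a small example, if eight nodes each start out knowing only their successor and `half` is 2, two calls to `run_period` give every node its two nearest successors and two nearest predecessors.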
