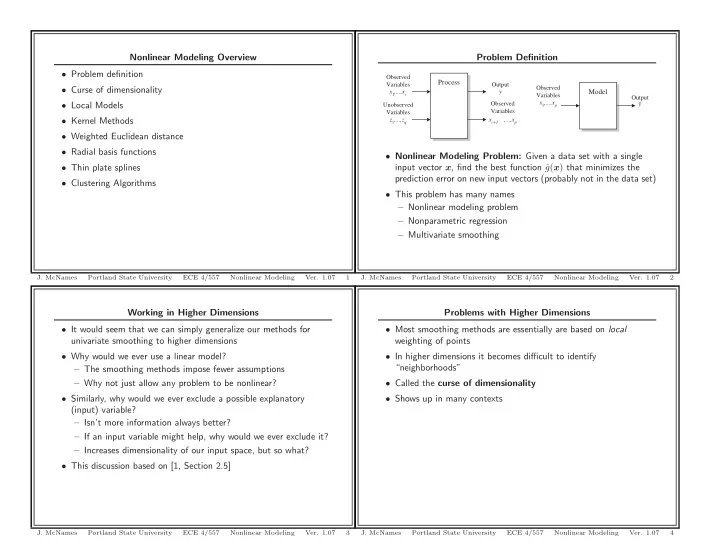

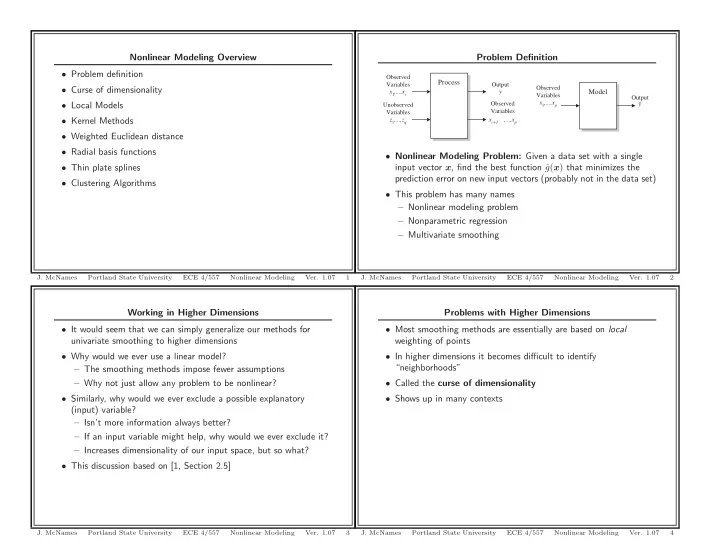

Nonlinear Modeling Overview Problem Definition • Problem definition Observed Process Variables Output • Curse of dimensionality Observed Model x 1 ,...,x c y Variables Output x 1 ,...,x p • Local Models Observed Unobserved y Variables Variables • Kernel Methods z 1 ,...,z q x c+1 ,...,x p • Weighted Euclidean distance • Radial basis functions • Nonlinear Modeling Problem: Given a data set with a single • Thin plate splines input vector x , find the best function ˆ g ( x ) that minimizes the prediction error on new input vectors (probably not in the data set) • Clustering Algorithms • This problem has many names – Nonlinear modeling problem – Nonparametric regression – Multivariate smoothing J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 1 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 2 Working in Higher Dimensions Problems with Higher Dimensions • It would seem that we can simply generalize our methods for • Most smoothing methods are essentially are based on local univariate smoothing to higher dimensions weighting of points • Why would we ever use a linear model? • In higher dimensions it becomes difficult to identify “neighborhoods” – The smoothing methods impose fewer assumptions – Why not just allow any problem to be nonlinear? • Called the curse of dimensionality • Similarly, why would we ever exclude a possible explanatory • Shows up in many contexts (input) variable? – Isn’t more information always better? – If an input variable might help, why would we ever exclude it? – Increases dimensionality of our input space, but so what? • This discussion based on [1, Section 2.5] J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 3 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 4

What is “Local”? Extrapolation versus Interpolation in High Dimensions • Suppose we have p inputs uniformly distributed in a unit • Suppose our inputs are uniformly distributed within a unit hypercube hyper-sphere centered at the origin • Let us use a smaller hypercube only a fraction k/n of the points • The median distance from the origin to the nearest point is given to build our local model by 1 − 0 . 5 1 /n � 1 /p • An unusual neighborhood, but suitable for the point � d ( p, n ) = • What is the edge length of our hypercube neighborhood? • d (5000 , 10) ≈ 0 . 52 , which is more than half way to the boundary – The volume of our neighborhood is r � k/n = e p where e is the edge length • Thus, most of the data points are closer to the boundary than any – Thus e p ( r ) = ( r ) 1 /p other data point – If we wish to use a neighborhood that captures 1% of the • This means we are always trying to estimate near the edges volume/points, and p = 10 , then e 10 (0 . 01) = 0 . 63 ! • In higher dimensions, we are effectively attempting to extrapolate – Similarly e 10 (0 . 10) = 0 . 80 rather than interpolate or smooth between the data points – The entire range of each input is only 1.0 • How can such neighborhoods be considered “local”? J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 5 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 6 Euclidean Distance in High Dimensions Sampling Density in High Dimensions • If the inputs are drawn from an i.i.d. distribution, the Euclidean • Suppose we want a uniform sampling density in one dimension distance can be viewed as a scaled estimate of the average consisting of n = 100 points along a grid distance along a coordinate, δ 2 j = ( x j − x i,j ) 2 • In two dimensions we would need n = 100 2 points to have the same sampling density (spacing between neighboring points along p δ 2 = 1 i = 1 ( x j − x i,j ) 2 ≈ E[( x j − x i,j ) 2 ] ˆ � pd 2 the axes) p • In p dimensions we would need n = 100 p points! j =1 • If p = 10 we would need n = 100 10 , which is impractical σ 2 δ 2 = var[ δ 2 ] = p − 1 σ 2 δ 2 � µ δ 2 µ ˆ ˆ δ 2 • Thus, in high dimensions all data sets sparsely sample the input σ 2 d 2 = var[ pδ 2 ] = pσ 2 µ d 2 = pµ δ 2 space δ 2 • Thus the coefficient of variation is γ � σ d 2 1 σ δ 2 µ d 2 = √ p µ δ 2 • All neighbors become more equidistant as p increases! J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 7 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 8

Coping with The Curse Imposing Structure • If the complexity of the problem grows with dimensionality (e.g., • Another possible mechanism to cope with the curse is to use PCA g ( x ) = e −|| x || 2 , a simple bump in p dimensions), we must have a or similar techniques dense sample ( n ∝ n p 1 ) to estimate g ( x ) with the same accuracy – Appropriate when the inputs are correlated over all values of p – In other words, when the inputs fall close to a lower dimensional hyperplane in the p dimensional space • In practice we don’t have this luxury • May also be appropriate to consider weighted distances • This also suggests that adding inputs (increasing p ) makes matters much worse, a paradoxical result – Idea is that a few inputs may dominate the distance measure • The way out of the curse is to impose structure on g ( x ) • There are many other ideas for imposing structure – For example a linear model may be reasonable • Is the key difference between different nonlinear modeling strategies y = w T x + ε – Now we only need n ≈ 10 p , dramatically fewer points to build our model – This model doesn’t suffer from the curse J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 9 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 10 Nonlinear Modeling The RampHill Data Set • In low dimensional spaces local models continue to work well • Like the Motorcycle data set for the univariate smoothing problem, I have a favorite data set for the nonlinear modeling problem – Also applies in high-dimensional spaces that can be reduced to lower dimensions • The RampHill data set [2, p. 150] • The following slides focus on “local” models as applied to a two • It is a synthetic data set dimensional problem • It has a number of nice properties for testing nonlinear models – Two flat regions in which the process is constant – A local bump (function of two input variables) – A global ramp that is locally linear – Two sharp edges (most models are smoother than this) • It only has two inputs so that we can plot the output (surface) • The inputs were drawn from a uniform distribution • No noise J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 11 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 12

Example 1: Ramp Hill Example 1: Ramp Hill • Number of points per (training/estimation) data set: 250 1.5 • Number of evaluation points (test/evaluation) data set: 2500 1 • Noise power: 0.07 • Signal ( g ( x ) ) power: 0.70 0.5 • Signal-to-noise ratio (SNR): 10.01 x 2 • Number of data sets: 250 0 −0.5 −1 −1.5 −1.5 −1 −0.5 0 0.5 1 1.5 x 1 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 13 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 14 Example 1: Ramp Hill Surface Plot Example 1: MATLAB Code function [] = ShowRampHill () 1 1 % ============================================================================== % Author -Specified Parameters % ============================================================================== 0.5 0.5 nPointsBuild = 250; nDataSetsBuild = 250; g ( x 1 , x 2 ) nPointsSide = 50; g ( x 1 , x 2 ) 0 noisePower = 0.07; 0 % ============================================================================== −0.5 % Preprocessing −0.5 % ============================================================================== x1Side = linspace (-1.5 ,1.5 , nPointsSide ); −1 x2Side = linspace (-1.5 ,1.5 , nPointsSide ); 1 −1 1 −1 [x1Block , x2Block] = meshgrid(x1Side ,x2Side ); 0 0 0 1.5 1 −1 1 −1 x1Test = reshape(x1Block , nPointsSide ^2 ,1); 0.5 0 −0.5 x 2 −1 −1.5 x 1 x2Test = reshape(x2Block , nPointsSide ^2 ,1); x 1 x 2 xTest = [x1Test x2Test ]; functionName = mfilename; fileIdentifier = fopen ([ functionName ’.tex ’],’w’); % ============================================================================== % Create the Data Sets % ============================================================================== DataSetsBuild = repmat(struct(’x’ ,[],’y’ ,[]), nDataSetsBuild ,1); J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 15 J. McNames Portland State University ECE 4/557 Nonlinear Modeling Ver. 1.07 16

Recommend

More recommend