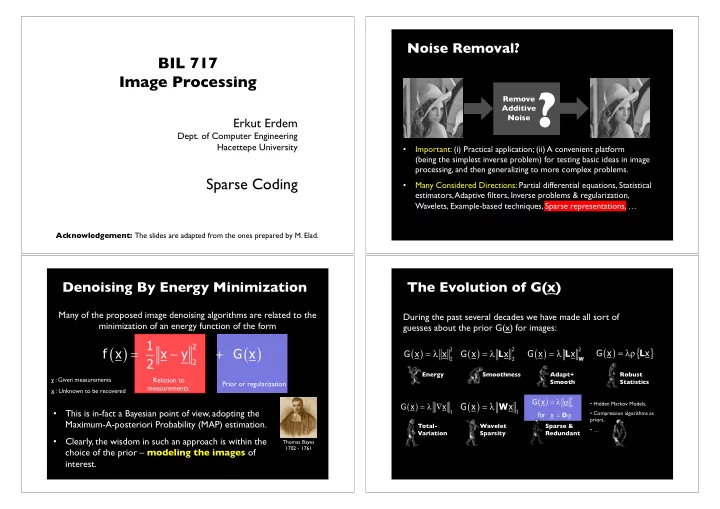

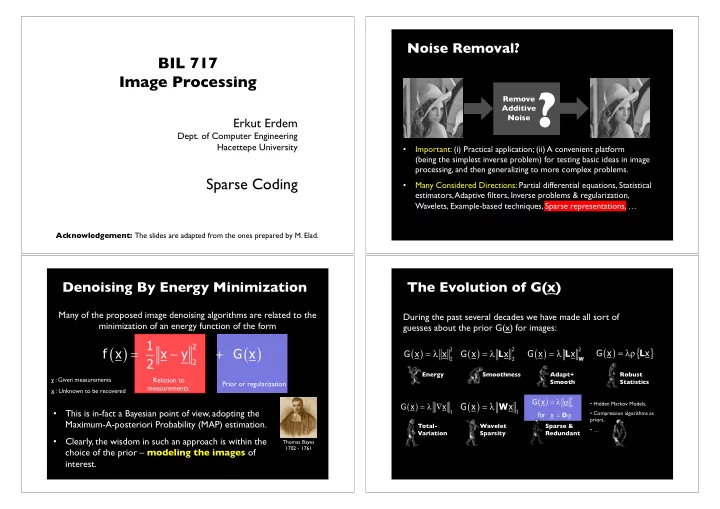

! Noise Removal? " BIL 717 ! Image Processing ! Noise " ? " " Remove Additive Erkut Erdem ! Dept. of Computer Engineering ! Hacettepe University ! • Important: (i) Practical application; (ii) A convenient platform (being the simplest inverse problem) for testing basic ideas in image " processing, and then generalizing to more complex problems. " " Sparse Coding • Many Considered Directions: Partial differential equations, Statistical estimators, Adaptive filters, Inverse problems & regularization, Wavelets, Example-based techniques, Sparse representations, … " Acknowledgement: The slides are adapted from the ones prepared by M. Elad. " Denoising By Energy Minimization " The Evolution of G(x) " Many of the proposed image denoising algorithms are related to the During the past several decades we have made all sort of minimization of an energy function of the form " guesses about the prior G(x) for images: " 1 ( ) 2 ( ) ( ) { } f x = x − y + G x ( ) ( ) ( ) 2 2 2 = λρ L G x = λ x G x = λ L x G x = λ L x G x x 2 2 2 W 2 Energy " Smoothness " Adapt+ Robust y : Given measurements " Relation to Smooth " Statistics " Prior or regularization " measurements " x : Unknown to be recovered " ( ) 0 G x = λ α ( ) ( ) G x = λ W x • Hidden Markov Models, " G x = λ ∇ x 0 This is in-fact a Bayesian point of view, adopting the • 1 1 • Compression algorithms as for x = D α priors, " Maximum-A-posteriori Probability (MAP) estimation. " Total- Wavelet Sparse & • … " Variation " Sparsity " Redundant " • Clearly, the wisdom in such an approach is within the Thomas Bayes 1702 - 1761 " choice of the prior – modeling the images of interest. "

Sparseland Signals are Special " Sparse Modeling of Signals " Every column in ! • Interesting Model: " D (dictionary) is ! M M Simple: Every generated signal is a prototype signal • M K built as a linear combination of (atom). " few atoms from our dictionary D " The vector α is • = N • Rich: A general model: the generated randomly N α obtained signals are a union of with few (say L) x many low-dimensional Gaussians. " non-zeros at Multiply A sparse & random locations by D A fixed Dictionary " • Familiar: We have been using D random and with random this model in other context for a vector " x = D values. " α α while now (wavelet, JPEG, …). " • We shall refer to this model as Sparseland ! 5 Sparse & Redundant Rep. Modeling? " Sparse & Redundant Rep. Modeling? " ( ) 2 f x = x p α 1 α 2 Our signal ! x = D α where α is sparse 1 model is thus: " p α p p α p → 0 p 1 p < 1 k ∑ p p α = α j p j 1 = x -1 +1 Our signal ! x = D α where α is sparse model is thus: "

Sparse & Redundant Rep. Modeling? " Back to Our MAP Energy Function " ( ) 2 p f x = x α • L 0 norm effectively 1 α 1 2 0 2 α ˆ = arg min D x α − y s . t . α ≤ L As p ! 0 we get 1 counts the number of 0 2 2 α non-zeros in α . " a count ! p α p p α ˆ x = D α of the non-zeros ˆ p → 0 p 1 • The vector α is the in the vector " p < 1 representation ( sparse / redundant ) 0 D α -y = - α of the desired 0 signal x. " x -1 +1 • The core idea: while few (L out of K) atoms can be merged Our signal ! 0 x x = = D D α α where where α α is sparse ≤ L to form the true signal, the noise cannot be fitted well. Thus, we model is thus: " 0 obtain an effective projection of the noise onto a very low-dimensional space, thus getting denoising effect. " To Summarize So Far … " Wait! There are Some Issues " • Numerical Problems: How should we solve or approximate the Image denoising (and solution of the problem " Use a model for many other problems signals/images based " 2 in image processing) 0 2 0 What can on sparse and 2 min D α − y s . t . α ≤ L min α s . t . D α − y ≤ ε " " " " " or " 0 0 requires a model for 2 2 we do? " redundant α α " the desired image " representations " 2 0 min λ α + D α − y or ? " 0 α 2 " There are some issues: " Great! • Theoretical Problems: Is there a unique sparse representation? If we 1. Theoretical " No? " are to approximate the solution somehow, how close will we get? " 2. How to approximate? " " 3. What about D ? " Practical Problems: What dictionary D should we use, such that all • this leads to effective denoising? Will all this work in applications? " "

Matrix “Spark” " Lets Start with the Noiseless Problem " = Suppose we build a signal by * " the relation " Definition: Given a matrix D , σ =Spark{ D } is the smallest " " D α = x number of columns that are linearly dependent. " Donoho & E. (‘02) " We aim to find the signal’s representation: " Known " 0 1 0 0 0 1 ⎡ ⎤ Example: " α = ArgMin α s.t. x = D α ˆ Rank = 4 " ⎢ ⎥ 0 0 1 0 0 1 α Spark = 3 " ⎢ ⎥ α = α ˆ Why should we necessarily get ? " ⎢ 0 0 1 0 0 ⎥ Uniqueness " " ⎢ ⎥ 0 0 0 0 0 1 0 α < α ˆ ⎣ ⎦ * " In tensor decomposition, It might happen that eventually . " 0 0 Kruskal defined something similar already in 1989. " Uniqueness Rule " Our Goal " 2 0 2 Suppose this problem has been solved somehow " This is a combinatorial min α s . t . D α − y ≤ ε problem, proven to be 0 2 0 α α = ArgMin α s.t. x = D α ˆ NP-Hard! " 0 α Here is a recipe for solving this problem: " Uniqueness " If we found a representation that satisfy " Solve the LS problem " Gather all the σ 2 ( ) " min D α − y s . t . sup p α = S " α < Set L=1 " supports {S i } i " LS error � � 2 ? " ˆ i 2 α 2 0 " of cardinality L " Donoho & E. (‘02) " Yes " for each support " No " Then necessarily it is unique (the sparsest). " There are ( K ) L " such supports " Set L=L+1 " This result implies that if generates signals M using “sparse enough” α , the solution of the Assume: K=1000, L=10 (known!), 1 nano-sec per each LS " Done " above will find it exactly. " We shall need ~8e+6 years to solve this problem !!!!! "

Lets Approximate " Relaxation – The Basis Pursuit (BP) " 2 0 Instead of solving " Solve Instead " 2 min α s . t . D α − y ≤ ε 0 0 2 Min α s . t . D α − y ≤ ε Min α s . t . D α − y ≤ ε α 1 2 0 2 α α • This is known as the Basis-Pursuit (BP) [Chen, Donoho & Saunders (’95)] . " • The newly defined problem is convex (quad. programming). " • Very efficient solvers can be deployed: " Greedy methods " Relaxation methods " " Interior point methods [Chen, Donoho, & Saunders (‘95)] [Kim, Koh, Lustig, Boyd, & D. Gorinevsky (`07)]. " Build the solution one Smooth the L 0 and use " Sequential shrinkage for union of ortho-bases [Bruce et.al. (‘98)] . " non-zero element at a continuous optimization " Iterative shrinkage [Figuerido & Nowak (‘03)] [Daubechies, Defrise, & De-Mole (‘04)] time " techniques " [E. (‘05)] [E., Matalon, & Zibulevsky (‘06)] [Beck & Teboulle (`09)] … " Go Greedy: Matching Pursuit (MP) " Pursuit Algorithms " ≅ 2 The MP is one of the greedy • 0 2 min α s . t . D α − y ≤ ε algorithms that finds one atom at a 0 2 α time [Mallat & Zhang (’93)] . " • Step 1: find the one atom that best There are various algorithms designed for approximating the solution matches the signal. " of this problem: " • Next steps: given the previously found atoms, • Greedy Algorithms: Matching Pursuit, Orthogonal Matching Pursuit (OMP), find the next one to best fit the residual. " Least-Squares-OMP , Weak Matching Pursuit, Block Matching Pursuit [1993- today]. " • The algorithm stops when the error is below the destination D α − y 2 • Relaxation Algorithms: Basis Pursuit (a.k.a. LASSO), Dnatzig Selector & threshold. " numerical ways to handle them [1995-today]. " • The Orthogonal MP (OMP) is an improved version that re-evaluates the • Hybrid Algorithms: StOMP , CoSaMP , Subspace Pursuit, Iterative Hard- coefficients by Least-Squares after each round. " Thresholding [2007-today]. " • … " 21 "

Recommend

More recommend