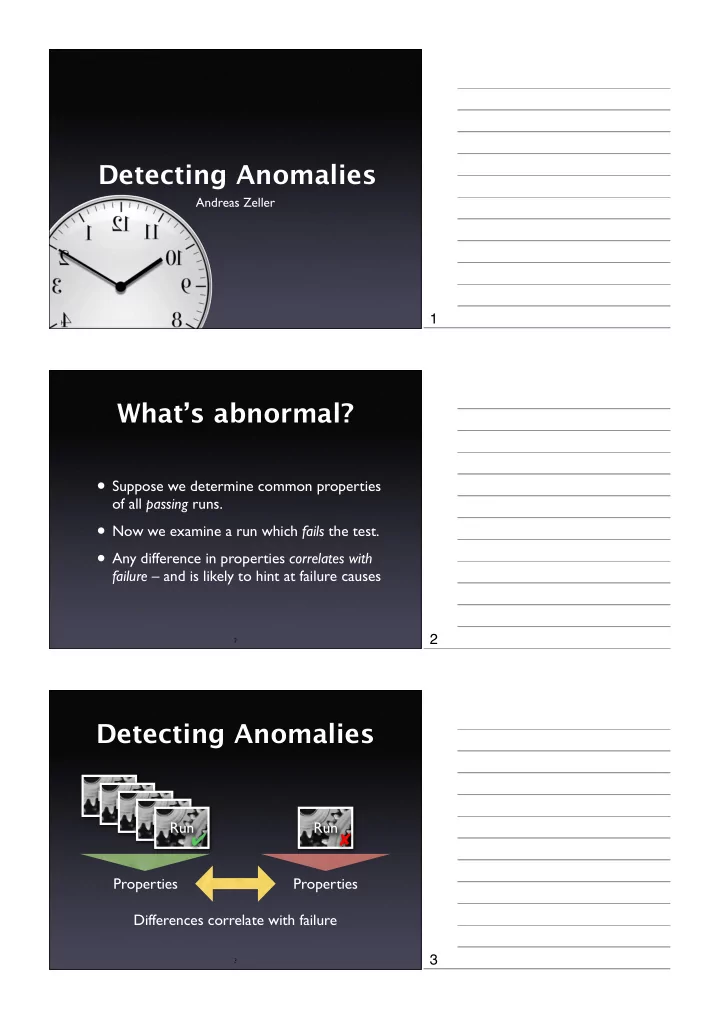

Detecting Anomalies
Andreas Zeller

What’s abnormal?
• Suppose we determine common properties of all passing runs.
• Now we examine a run which fails the test.
• Any difference in properties correlates with failure, and is likely to hint at failure causes.

Detecting Anomalies
[Figure: the properties of the passing runs (✔) are compared with the properties of the failing run (✘). Differences correlate with failure.]

Properties
Data properties that hold in all runs:
• “At f(), x is odd”
• “0 ≤ x ≤ 10 during the run”
Code properties that hold in all runs:
• “f() is always executed”
• “After open(), we eventually have close()”

Techniques
• Dynamic Invariants
• Value Ranges
• Sampled Values
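As a minimal sketch of the comparison described above, assume each run is reduced to the values that x took at f(). The class name `PropertyDiff`, the run representation, and the sample data are assumptions made for illustration; only the two data properties come from the slides.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.IntPredicate;

final class PropertyDiff {
    // Candidate data properties over x (taken from the slide above).
    static Map<String, IntPredicate> candidates() {
        Map<String, IntPredicate> m = new LinkedHashMap<>();
        m.put("x is odd", x -> x % 2 != 0);
        m.put("0 <= x <= 10", x -> 0 <= x && x <= 10);
        return m;
    }

    // A property holds in a run if it holds for every observed value.
    static boolean holds(IntPredicate p, int[] run) {
        for (int x : run) {
            if (!p.test(x)) return false;
        }
        return true;
    }

    // Properties common to all passing runs that the failing run violates.
    static List<String> anomalies(List<int[]> passing, int[] failing) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, IntPredicate> e : candidates().entrySet()) {
            boolean common = true;
            for (int[] run : passing) {
                common &= holds(e.getValue(), run);
            }
            if (common && !holds(e.getValue(), failing)) {
                result.add(e.getKey());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Invented sample data: two passing runs and one failing run.
        List<int[]> passing = List.of(new int[] {1, 3, 5}, new int[] {7, 9});
        int[] failing = {3, 2};
        System.out.println(anomalies(passing, failing)); // prints [x is odd]
    }
}
```

The reported property is only a correlation with the failure; whether x = 2 actually causes it still has to be checked.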

Dynamic Invariants
[Figure: the invariant of the passing runs (✔), “At f(), x is odd”, is compared with the property of the failing run (✘), “At f(), x = 2”.]

Daikon
• Determines invariants from program runs
• Written by Michael Ernst et al. (1998–)
• C++, Java, Lisp, and other languages
• Analyzed up to 13,000 lines of code

Daikon

    public int ex1511(int[] b, int n)
    {
        int s = 0;
        int i = 0;
        while (i != n) {
            s = s + b[i];
            i = i + 1;
        }
        return s;
    }

Precondition:
• n == size(b[])
• b != null
• n <= 13
• n >= 7
Postcondition:
• b[] = orig(b[])
• return == sum(b)
• Run with 100 randomly generated arrays of length 7–13

Daikon
[Figure: Daikon gets a trace from the passing runs (✔), filters invariants, and reports results; here the postconditions b[] = orig(b[]) and return == sum(b).]

Getting the Trace
• Records all variable values at all function entries and exits
• Uses VALGRIND to create the trace

Filtering Invariants
• Daikon has a library of invariant patterns over variables and constants
• Only matching patterns are preserved
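To make the trace step concrete, here is a hand-instrumented version of ex1511 that records variable values at entry and exit. This is only a sketch: Daikon instruments programs automatically, and the names `TraceSketch`, `TracePoint`, and the program-point labels are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class TraceSketch {
    // One trace record: a program point plus the values observed there.
    static final class TracePoint {
        final String point;
        final Map<String, Integer> values;

        TracePoint(String point, Map<String, Integer> values) {
            this.point = point;
            this.values = values;
        }
    }

    static final List<TracePoint> trace = new ArrayList<>();

    // Derived value sum(b[]), computed independently of the loop in ex1511.
    static int sum(int[] b) {
        int total = 0;
        for (int v : b) total += v;
        return total;
    }

    // ex1511 from the slides, with recording added at entry and exit.
    static int ex1511(int[] b, int n) {
        Map<String, Integer> in = new LinkedHashMap<>();
        in.put("n", n);
        in.put("size(b[])", b.length);
        trace.add(new TracePoint("ex1511:ENTER", in));

        int s = 0;
        int i = 0;
        while (i != n) {
            s = s + b[i];
            i = i + 1;
        }

        Map<String, Integer> out = new LinkedHashMap<>();
        out.put("orig(n)", n); // n is never assigned in the body
        out.put("n", n);
        out.put("s", s);
        out.put("sum(b[])", sum(b));
        out.put("return", s);
        trace.add(new TracePoint("ex1511:EXIT", out));
        return s;
    }

    public static void main(String[] args) {
        ex1511(new int[] {1, 2, 3}, 3);
        for (TracePoint p : trace) {
            System.out.println(p.point + " " + p.values);
        }
    }
}
```

Each record is the raw material for the filtering step: a pattern survives only if it holds for every record at its program point.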

Method Specifications
Using primitive data:
• x = 6
• x ∈ {2, 5, –30}
• x < y
• y = 5x + 10
• z = 4x + 12y + 3
• z = fn(x, y)
Using composite data:
• x ∈ A
• A subseq B
• sorted(A)
Checked at method entry + exit.

Object Invariants
• string.content[string.length] = ‘\0’
• node.left.value ≤ node.right.value
• this.next.last = this
Checked at entry + exit of public methods.

Matching Invariants
Pattern: A == B
Variables: s, size(b[]), sum(b[]), n, orig(n), return, …

    public int ex1511(int[] b, int n)
    {
        int s = 0;
        int i = 0;
        while (i != n) {
            s = s + b[i];
            i = i + 1;
        }
        return s;
    }

Matching Invariants
[Figure, shown for runs 1, 2, and 3: a matrix that pairs the variables s, n, size(b[]), sum(b[]), orig(n), and ret under the pattern A == B. Pairs whose values differ in a run are marked ✘ and dropped as candidates.]

Matching Invariants
After all runs, the matrix leaves these equalities for ex1511:
• s == sum(b[])
• s == ret
• n == size(b[])
• ret == sum(b[])

Enhancing Relevance
• Handle polymorphic variables: treat “object x” like “int x” if possible
• Check for derived values: have “size(…)” as extra value to compare against
• Eliminate redundant invariants: like x > 0 => x >= 0
• Set statistical threshold for relevance: to eliminate random occurrences
• Verify correctness with static analysis: to make sure invariants always hold
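The elimination of A == B candidates can be sketched as follows. This is an illustration, not Daikon's implementation: the class `EqualityMatcher` and the sample arrays are invented, orig(n) is left out to keep the candidate set small, and n is assumed to equal the array length, as in the test setup on the slides.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class EqualityMatcher {
    // Values visible at the exit of ex1511 for one run (assumes n == b.length).
    static Map<String, Integer> observe(int[] b) {
        int n = b.length;
        int s = 0;
        for (int i = 0; i != n; i++) {
            s = s + b[i];
        }
        Map<String, Integer> v = new LinkedHashMap<>();
        v.put("s", s);
        v.put("n", n);
        v.put("size(b[])", b.length);
        v.put("sum(b[])", Arrays.stream(b).sum());
        v.put("ret", s);
        return v;
    }

    // Start with every pair as a candidate; drop a pair once a run refutes it.
    static List<String> match(List<int[]> runs) {
        List<String> names = new ArrayList<>(observe(new int[0]).keySet());
        List<String[]> candidates = new ArrayList<>();
        for (int i = 0; i < names.size(); i++) {
            for (int j = i + 1; j < names.size(); j++) {
                candidates.add(new String[] {names.get(i), names.get(j)});
            }
        }
        for (int[] b : runs) {
            Map<String, Integer> v = observe(b);
            candidates.removeIf(p -> !v.get(p[0]).equals(v.get(p[1])));
        }
        List<String> result = new ArrayList<>();
        for (String[] p : candidates) {
            result.add(p[0] + " == " + p[1]);
        }
        return result;
    }

    public static void main(String[] args) {
        // In the first run all five values happen to be 4, so nothing is
        // eliminated; the second run removes the coincidental equalities.
        List<int[]> runs = List.of(new int[] {0, 0, 0, 4}, new int[] {1, 2, 3});
        System.out.println(match(runs));
        // prints [s == sum(b[]), s == ret, n == size(b[]), sum(b[]) == ret]
    }
}
```

The first sample run shows why a single run is not enough: coincidental equalities such as s == n survive until some other run contradicts them.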

Daikon Discussed
• As long as some property can be observed, it can be added as a pattern
• Pattern vocabulary determines the invariants that can be found (“sum()”, etc.)
• Checking all patterns (and combinations!) is expensive
• Trivial invariants must be eliminated

Techniques
• Dynamic Invariants
• Value Ranges
• Sampled Values

Dynamic Invariants
[Figure: the invariant of the passing runs (✔), “At f(), x is odd”, is compared with the property of the failing run (✘), “At f(), x = 2”. Can we check this on the fly?]

Diduce
• Determines invariants and violations
• Written by Sudheendra Hangal and Monica Lam (2001)
• Java bytecode
• Analyzed > 30,000 lines of code

Diduce
[Figure: the passing runs (✔) build the invariant in training mode; the property of the failing run (✘) is compared against it in checking mode.]

Training Mode
• Start with empty set of invariants
• Adjust invariants according to values found during run

Invariants in Diduce
For each variable, Diduce has a pair (V, M)
• V = initial value of variable
• M = range of values: i-th bit of M is cleared if value change in i-th bit was observed
• With each assignment of a new value W, M is updated to M := M ∧ ¬(W ⊗ V), where ⊗ is bitwise exclusive or
• Differences are stored in same format

Training Example
In the Code column, i = 10 is decimal 10; i, V, and M are binary values.

| Code | i | Values: V | Values: M | Differences: V | Differences: M | Invariant |
|---|---|---|---|---|---|---|
| i = 10 | 1010 | 1010 | 1111 | – | – | i = 10 |
| i += 1 | 1011 | 1010 | 1110 | 1 | 1111 | 10 ≤ i ≤ 11 ∧ \|i′ – i\| = 1 |
| i += 1 | 1100 | 1010 | 1000 | 1 | 1111 | 8 ≤ i ≤ 15 ∧ \|i′ – i\| = 1 |
| i += 1 | 1101 | 1010 | 1000 | 1 | 1111 | 8 ≤ i ≤ 15 ∧ \|i′ – i\| = 1 |
| i += 2 | 1111 | 1010 | 1000 | 1 | 1101 | 8 ≤ i ≤ 15 ∧ \|i′ – i\| ≤ 2 |

During checking, clearing an M-bit is an anomaly.

Diduce vs. Daikon
• Less space and time requirements
• Invariants are computed on the fly
• Smaller set of invariants
• Less precise invariants
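The update rule M := M ∧ ¬(W ⊗ V) and the checking rule can be tried out with a few lines of Java. This is a sketch under assumptions, not Diduce's code: the class `DiduceSketch` and its method names are hypothetical, it tracks only the value pair (V, M) and not the differences, and it uses 32-bit ints where the slide shows four bits.

```java
final class DiduceSketch {
    final int v;   // V: first value observed for the variable
    int m = ~0;    // M: bit i stays set until bit i has been seen to change

    DiduceSketch(int first) {
        v = first;
    }

    // Training mode: M := M AND NOT (W XOR V).
    void train(int w) {
        m &= ~(w ^ v);
    }

    // Checking mode: W is an anomaly if accepting it would clear a bit of M.
    boolean isAnomaly(int w) {
        return ((w ^ v) & m) != 0;
    }

    // Lowest four bits as a binary string, to compare with the table above.
    static String low4(int x) {
        return String.format("%4s", Integer.toBinaryString(x & 0xF)).replace(' ', '0');
    }

    public static void main(String[] args) {
        DiduceSketch i = new DiduceSketch(10);  // i = 10, binary 1010
        for (int w : new int[] {11, 12, 13, 15}) {
            i.train(w);
            System.out.println(low4(w) + " -> M = " + low4(i.m));
        }
        // prints M = 1110, 1000, 1000, 1000, as in the Values column
        System.out.println(i.isAnomaly(14)); // false: 14 lies within 8..15
        System.out.println(i.isAnomaly(16)); // true: a bit above the range changes
    }
}
```

Because M only records which bits have ever changed, the learned range 8 ≤ i ≤ 15 is coarser than the values actually seen (10 to 15), which matches the "less precise invariants" trade-off.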

Techniques
• Dynamic Invariants
• Value Ranges
• Sampled Values

Detecting Anomalies
[Figure: the properties of the passing runs (✔) are compared with the properties of the failing run (✘). Differences correlate with failure. How do we collect data in the field?]

Liblit’s Sampling
• We want properties of runs in the field
• Collecting all this data is too expensive
• Would a sample suffice?
• Sampling experiment by Liblit et al. (2003)

Return Values
• Hypothesis: function return values correlate with failure or success
• Classified into positive / zero / negative

CCRYPT fails
• CCRYPT is an interactive encryption tool
• When CCRYPT asks user for information before overwriting a file, and user responds with EOF, CCRYPT crashes
• 3,000 random runs
• Of 1,170 predicates, only file_exists() > 0 and xreadline() == 0 correlate with failure

Liblit’s Sampling
• Can we apply this technique to remote runs, too?
• 1 out of 1000 return values was sampled
• Performance loss <4%
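A sampled return-value monitor along these lines might look as follows. It is a simplified sketch: the class `ReturnValueSampler` is hypothetical, and it draws an independent random number per observation, which is an assumption and not necessarily how the original experiment decided when to sample.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;

final class ReturnValueSampler {
    private final Random random;
    private final int rate;  // sample 1 out of `rate` return values
    final Map<String, Integer> counts = new HashMap<>();

    ReturnValueSampler(int rate, long seed) {
        this.rate = rate;
        this.random = new Random(seed);
    }

    // Classify a return value as positive, zero, or negative.
    static String classify(String function, int value) {
        String sign = value > 0 ? "> 0" : value < 0 ? "< 0" : "== 0";
        return function + "() " + sign;
    }

    // Wrap a call site: occasionally count the predicate, always pass the value on.
    int observe(String function, int value) {
        if (random.nextInt(rate) == 0) {
            counts.merge(classify(function, value), 1, Integer::sum);
        }
        return value;
    }

    public static void main(String[] args) {
        ReturnValueSampler sampler = new ReturnValueSampler(1000, 42L);
        for (int k = 0; k < 100_000; k++) {
            sampler.observe("xreadline", 0);  // invented call data
        }
        System.out.println(sampler.counts);   // roughly 100 samples expected
    }
}
```

With a rate of 1000 most calls pay only for one random draw, which is the intuition behind the low overhead reported above; the counts from many runs are then correlated with failure.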

Failure Correlation
[Plot: number of "good" features left (y-axis, 0 to 140) against number of successful trials used (x-axis, 0 to 3000). After 3,000 runs, only five predicates are left that correlate with failure.]

Web Services
• Sampling is first choice for web services
• Have 1 out of 100 users run an instrumented version of the web service
• Correlate instrumentation data with failure
• After sufficient number of runs, we can automatically identify the anomaly

Techniques
• Dynamic Invariants
• Value Ranges
• Sampled Values
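One simple way to obtain a shrinking curve like the one in the plot is to start from the predicates observed in failing runs and discard every predicate that is also observed in a successful run. The sketch below assumes this elimination strategy; the class `PredicateElimination` is hypothetical, and strlen() > 0 is an invented predicate added for illustration.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

final class PredicateElimination {
    // Keep only predicates seen in failing runs and never in a successful run.
    static Set<String> survivors(Set<String> seenInFailures,
                                 List<Set<String>> successfulRuns) {
        Set<String> left = new TreeSet<>(seenInFailures);
        for (Set<String> run : successfulRuns) {
            left.removeAll(run);
        }
        return left;
    }

    public static void main(String[] args) {
        Set<String> failures = Set.of(
            "file_exists() > 0", "xreadline() == 0", "strlen() > 0");
        // Invented data: one successful run that also observed strlen() > 0.
        List<Set<String>> successes = List.of(Set.of("strlen() > 0"));
        System.out.println(survivors(failures, successes));
        // prints [file_exists() > 0, xreadline() == 0]
    }
}
```

Each additional successful run can only remove candidates, so the number of remaining predicates never increases as more trials are used.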

Anomalies and Causes
• An anomaly is not a cause, but a correlation
• Although correlation ≠ causation, anomalies can be excellent hints
• Future belongs to those who exploit
  • Correlations in multiple runs
  • Causation in experiments

Concepts
• Comparing data abstractions shows anomalies correlated with failure
• Variety of abstractions and implementations
• Anomalies can be excellent hints
• Future: Integration of anomalies + causes

This work is licensed under the Creative Commons Attribution License. To view a copy of this license, visit http://creativecommons.org/licenses/by/1.0 or send a letter to Creative Commons, 559 Abbott Way, Stanford, California 94305, USA.
